Ian Jackson: Why we've voted No to CfD for Derril Water solar farm

- Green electricity from your mainstream supplier is a lie

- Ripple

- Contracts for Difference

- Ripple and CfD

- Voting No

The Amazon Kids parental controls are extremely insufficient, and I strongly advise against getting any of the Amazon Kids series.

The initial premise (and some older reviews) looks okay: you can set some time limits, and you can disable anything that requires buying.

With the hardware you get one year of the Amazon Kids+ subscription, which includes a lot of interesting content such as books and audio, but also some apps. This seemed attractive: some learning apps, some decent games.

Sometimes there seems to be a special "Amazon Kids+ edition", supposedly one that has advertisements reduced/removed and no purchasing.

However, there are so many things just wrong in Amazon Kids:

Closing arguments in the trial between various people and Craig Wright over whether he's Satoshi Nakamoto are wrapping up today, amongst a bewildering array of presented evidence. But one utterly astonishing aspect of this lawsuit is that expert witnesses for both sides agreed that much of the digital evidence provided by Craig Wright was unreliable in one way or another, generally including indications that it wasn't produced at the point in time it claimed to be. And it's fascinating reading through the subtle (and, in some cases, not so subtle) ways that that's revealed.

After making my Elastic Neck Top I knew I wanted to make another one less constrained by the amount of available fabric.

I had a big cut of white cotton voile, I bought some more swimsuit elastic, and I also had a spool of n° 100 sewing cotton, but then I postponed the project for a while, as I was working on other things.

Then FOSDEM 2024 arrived, I was going to remote it, and I was working on my Augusta Stays, but I knew that in the middle of FOSDEM I risked getting to the stage where I needed to leave the computer to try the stays on: not something really compatible with the frenetic pace of a FOSDEM weekend, even one spent at home.

I needed a backup project, and this was perfect: I already had everything I needed, the pattern and instructions were already on my site (so I didn't need to take pictures while working), and it was mostly a lot of straight seams, perfect while watching conference videos.

So, on the Friday before FOSDEM I cut all of the pieces, then spent three quarters of FOSDEM on the stays, and when I reached the point where I needed to stop for a fit test I started on the top.

Like the first one, everything was sewn by hand, and one week after I had started everything was assembled, except for the casings for the elastic at the neck and cuffs, which required about 10 km of sewing, and even if it was just a running stitch it made me want to reconsider my lifestyle choices a few times: there was really no reason for me not to do just those seams by machine in a few minutes.

Instead I kept sewing by hand whenever I had time for it, and on the next weekend it was ready. We had a rare day of sun during the weekend, so I wore my thermal underwear, some other layer, a scarf around my neck, and went outside with my SO to have a batch of pictures taken (those in the jeans posts, and others for a post I haven't written yet. Have I mentioned I have a backlog?).

And then the top went into the wardrobe, and it will come out again when the weather is a bit warmer. Or maybe it will be used under the Augusta Stays, since I don't have a 1700 chemise yet, but that requires actually finishing them.

The pattern for this project was already online, of course, but I've added a picture of the casing to the relevant section, and everything is as usual #FreeSoftWear.

This covers basically all my known omissions from last update except spellchecking of the Description field.

The X- style prefixes for field names are now understood and handled. This means the language server now considers XC-Package-Type the same as Package-Type.

More diagnostics:

- Fields without values now trigger an error marker

- Duplicated fields now trigger an error marker

- Fields used in the wrong paragraph now trigger an error marker

- Typos in field names or values now trigger a warning marker. For field names, X- style prefixes are stripped before typo detection is done.

- The value of the Section field is now validated against a dataset of known sections and triggers a warning marker if not known.
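To illustrate the prefix handling and typo detection described above, here is a minimal sketch. It is not debputy's actual implementation: the `KNOWN_FIELDS` list, the function names, the regular expression and the similarity cutoff are all assumptions made for the example, and the typo matching simply uses `difflib` from the Python standard library.

```python
import difflib
import re
from typing import Optional

# Hypothetical subset of known field names; a real list would be much longer.
KNOWN_FIELDS = [
    "Package", "Architecture", "Package-Type",
    "Section", "Multi-Arch", "Description",
]

# Matches "X-", "XC-", "XB-", "XS-" and combinations such as "XBS-".
_X_PREFIX = re.compile(r"^X[BCS]*-", re.IGNORECASE)


def normalize_field_name(name: str) -> str:
    """Strip an X- style prefix so XC-Package-Type becomes Package-Type."""
    return _X_PREFIX.sub("", name, count=1)


def suggest_field(name: str) -> Optional[str]:
    """Return a likely intended field name, or None if there is nothing to suggest.

    None is returned both when the (normalized) name is already known and
    when no known field is similar enough to count as a typo.
    """
    normalized = normalize_field_name(name).lower()
    by_lower = {field.lower(): field for field in KNOWN_FIELDS}
    if normalized in by_lower:
        return None
    # The 0.8 cutoff is an arbitrary choice for this sketch.
    matches = difflib.get_close_matches(normalized, list(by_lower), n=1, cutoff=0.8)
    return by_lower[matches[0]] if matches else None
```

With this sketch, `suggest_field("XC-Package-Type")` returns `None` because the stripped name is known, while a misspelling such as `XC-Pakcage-Type` yields `Package-Type` as the suggestion.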

The "on-save trim end of line whitespace" now works. I had a logic bug in the server side code that made it submit "no change" edits to the editor.
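The kind of fix involved can be sketched as follows: only emit an edit for a line that really ends in whitespace, so that the editor never receives a "no change" edit. This is an illustration under my own assumptions, not the code in debputy; `TrimEdit` and `trailing_whitespace_edits` are hypothetical names, and a real server would turn each entry into an LSP text edit.

```python
from typing import List, NamedTuple


class TrimEdit(NamedTuple):
    line: int       # zero-based line number
    start_col: int  # first column of the trailing whitespace
    end_col: int    # column just past the last whitespace character


def trailing_whitespace_edits(lines: List[str]) -> List[TrimEdit]:
    """Return one deletion range per line that ends in spaces or tabs."""
    edits = []
    for line_no, line in enumerate(lines):
        content = line.rstrip("\r\n")     # ignore the line terminator itself
        stripped = content.rstrip(" \t")
        if len(stripped) != len(content):  # skip lines that need no change
            edits.append(TrimEdit(line_no, len(stripped), len(content)))
    return edits
```

Lines that are already clean produce no entry at all, which is the property the bug fix is about.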

The language server now provides "hover" documentation for field names. There is a small screenshot of this below. Sadly, emacs does not support markdown or, if it does, it does not announce the support for markdown. For now, all the documentation is always in markdown format and the language server will tag it as either markdown or plaintext depending on the announced support.
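The tagging logic can be sketched roughly like this. It works on the plain JSON form of the client capabilities, where the LSP specification lets a client list its supported hover formats under `textDocument.hover.contentFormat`. The function names are hypothetical and this is not how debputy or pygls structure the code; it only shows the decision.

```python
from typing import Any, Dict


def hover_markup_kind(client_capabilities: Dict[str, Any]) -> str:
    """Pick "markdown" only if the client announced support for it."""
    text_document = client_capabilities.get("textDocument") or {}
    hover = text_document.get("hover") or {}
    formats = hover.get("contentFormat") or []
    return "markdown" if "markdown" in formats else "plaintext"


def make_hover(doc_markdown: str, client_capabilities: Dict[str, Any]) -> Dict[str, Any]:
    """Build a hover response; the text itself is always written in markdown."""
    return {
        "contents": {
            "kind": hover_markup_kind(client_capabilities),
            "value": doc_markdown,
        }
    }
```

A client that announces nothing therefore gets the markdown source tagged as plaintext, which matches the behaviour described for emacs.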

The language server now provides quick fixes for some of the more trivial problems such as deprecated fields or typos of fields and values.

Added more known fields including the XS-Autobuild field for non-free packages along with a link to the relevant devref section in its hover doc.

Despite its very limited feature set, I feel editing debian/control in emacs is now a much more pleasant experience. Coming back to the features that Otto requested, the above covers a grand total of zero. Sorry, Otto. It is not you, it is me.

- Diagnostics or linting of basic issues.

- Completion suggestions for all known field names that I could think of and values for some fields.

- Folding ranges (untested). This feature enables the editor to "fold" multiple lines. It is often used with multi-line comments and that is the feature currently supported.

- On save, trim trailing whitespace at the end of lines (untested). Might not be registered correctly on the server end.

Notable omission at this time:

- An error marker for syntax errors.

- An error marker for missing a mandatory field like Package or Architecture. This also includes Standards-Version, which is admittedly mandatory by policy rather than tooling falling apart.

- An error marker for adding Multi-Arch: same to an Architecture: all package.

- Error marker for providing an unknown value to a field with a set of known values. As an example, writing foo in Multi-Arch would trigger this one.

- Warning marker for using deprecated fields such as DM-Upload-Allowed, or when setting a field to its default value for fields like Essential. The latter rule only applies to selected fields and notably Multi-Arch: no does not trigger a warning.

- Info level marker if a field like Priority duplicates the value of the Source paragraph.

- No errors are raised if a field does not have a value.

- No errors are raised if a field is duplicated inside a paragraph.

- No errors are raised if a field is used in the wrong paragraph.

- No spellchecking of the Description field.

- No understanding that Foo and X[CBS]-Foo are related. As an example, XC-Package-Type is completely ignored despite being the old name for Package-Type.

- Quick fixes to solve these problems... :)

Obviously, the setup should get easier over time. The first three bullet points should eventually get resolved by merges and uploads, meaning you end up with an apt install command instead of them. For the editor part, I would obviously love it if we can add snippets for editors to make them automatically pick up the language server when the relevant file is installed.

- Build and install the deb of the main branch of pygls from https://salsa.debian.org/debian/pygls The package is in NEW and hopefully this step will soon just be a regular apt install.

- Build and install the deb of the rts-locatable branch of my python-debian fork from https://salsa.debian.org/nthykier/python-debian There is a draft MR of it as well on the main repo.

- Build and install the deb of the lsp-support branch of debputy from https://salsa.debian.org/debian/debputy

- Configure your editor to run debputy lsp debian/control as the language server for debian/control. This depends on your editor. I figured out how to do it for emacs (see below). I also found a guide for neovim at https://neovim.io/doc/user/lsp. Note that debputy can be run from any directory here. The debian/control is a reference to the file format and not a concrete file in this case.

    (with-eval-after-load 'eglot
      (add-to-list 'eglot-server-programs
                   '(debian-control-mode . ("debputy" "lsp" "debian/control"))))

- [X] No errors are raised if a field does not have a value.

- [X] No errors are raised if a field is duplicated inside a paragraph.

- [X] No errors are raised if a field is used in the wrong paragraph.

- [ ] No spellchecking of the Description field.

- [X] No understanding that Foo and X[CBS]-Foo are related. As an example, XC-Package-Type is completely ignored despite being the old name for Package-Type.

- [X] Fixed the on-save trim end of line whitespace. Bug in the server end.

- [X] Hover text for field names

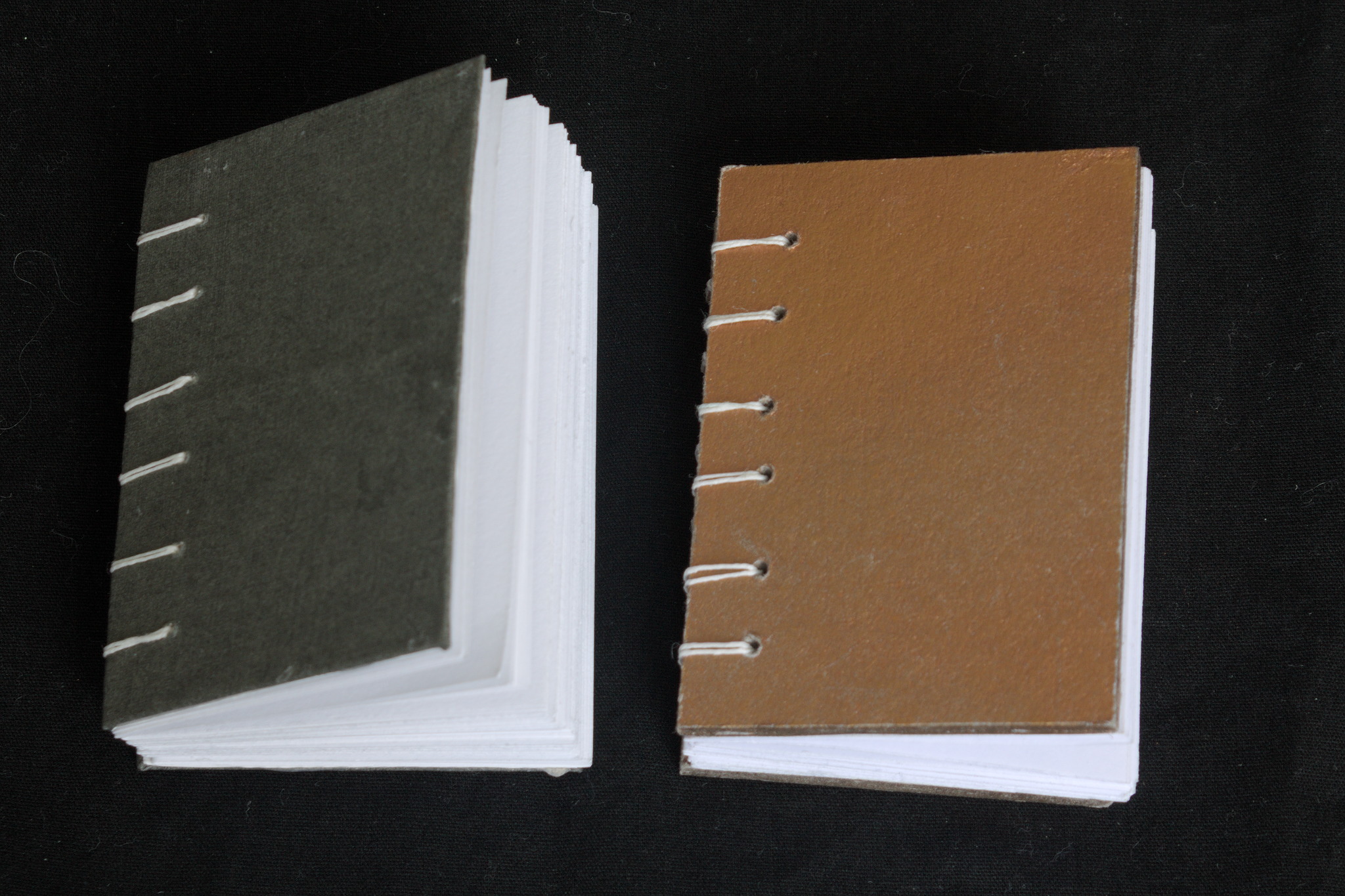

In 2022 I read a post on the fediverse by somebody who mentioned that they had bought on a whim a cute tiny book years ago, and that it had been a companion through hard times. Right now I can't find the post, but it was pretty aaaaawwww.

At the same time, I had discovered Coptic binding, and I wanted to do some exercise to let my hands learn it, but apparently there is a limit to the number of notebooks and sketchbooks a person needs (I'm not 100% sure I actually believe this, but I've heard it is a thing).

So I decided to start making minibooks with the intent to give them away: I settled (mostly) on the A8 size, and used a combination of found materials, leftovers from bigger projects and things I had in the Stash. As for paper, I've used a variety of the ones I have that are at the very least good enough for non-problematic fountain pen inks.

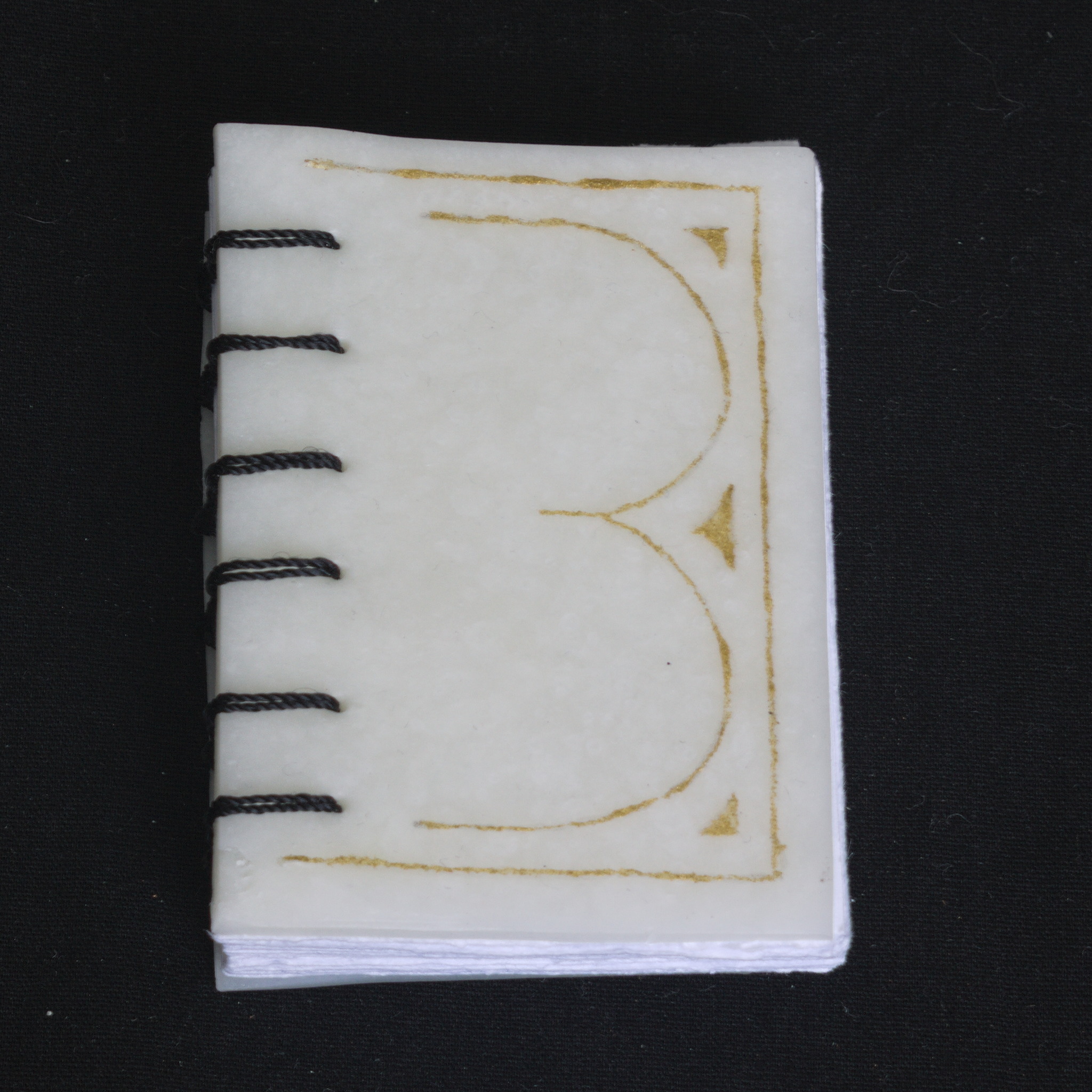

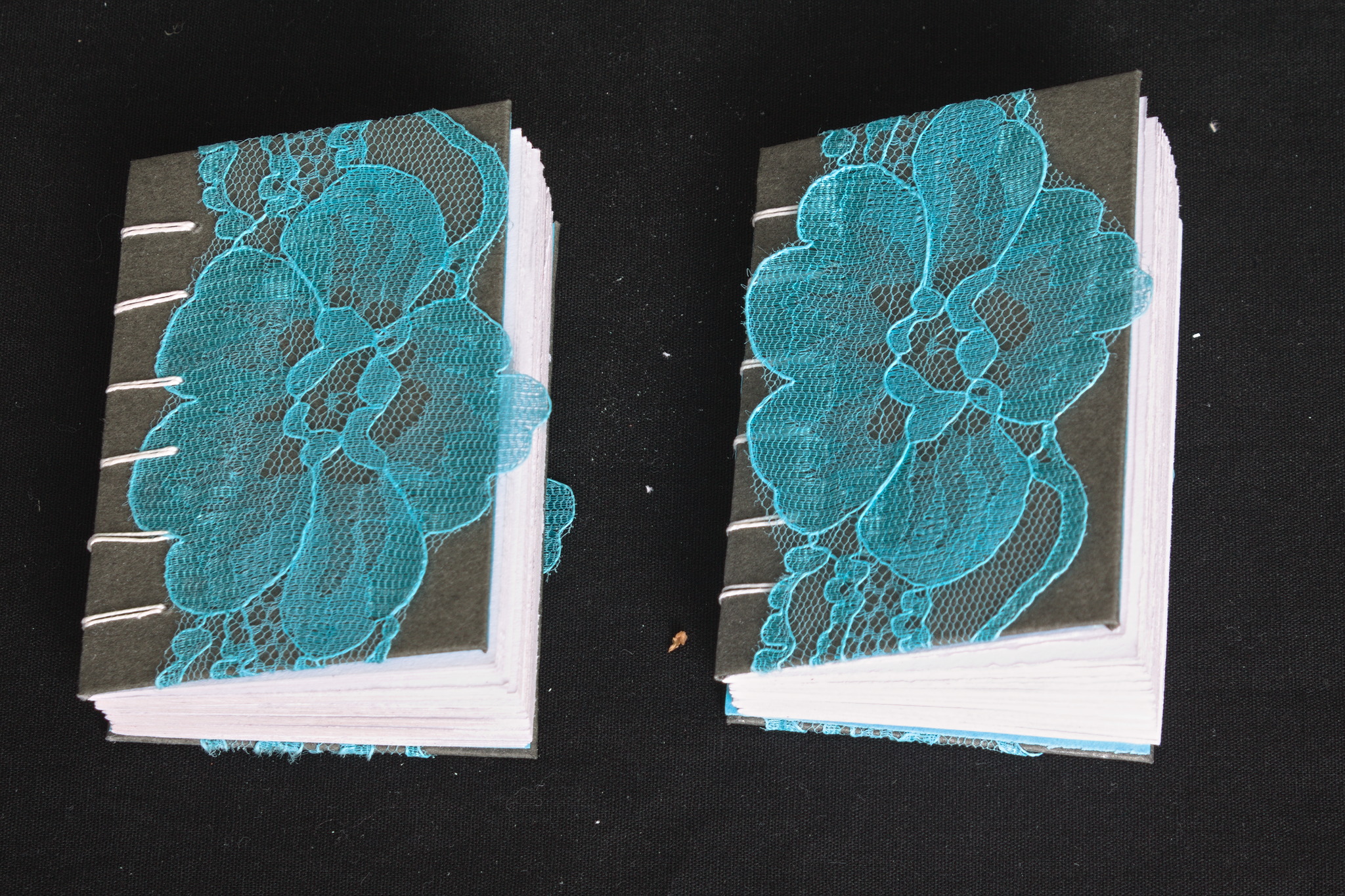

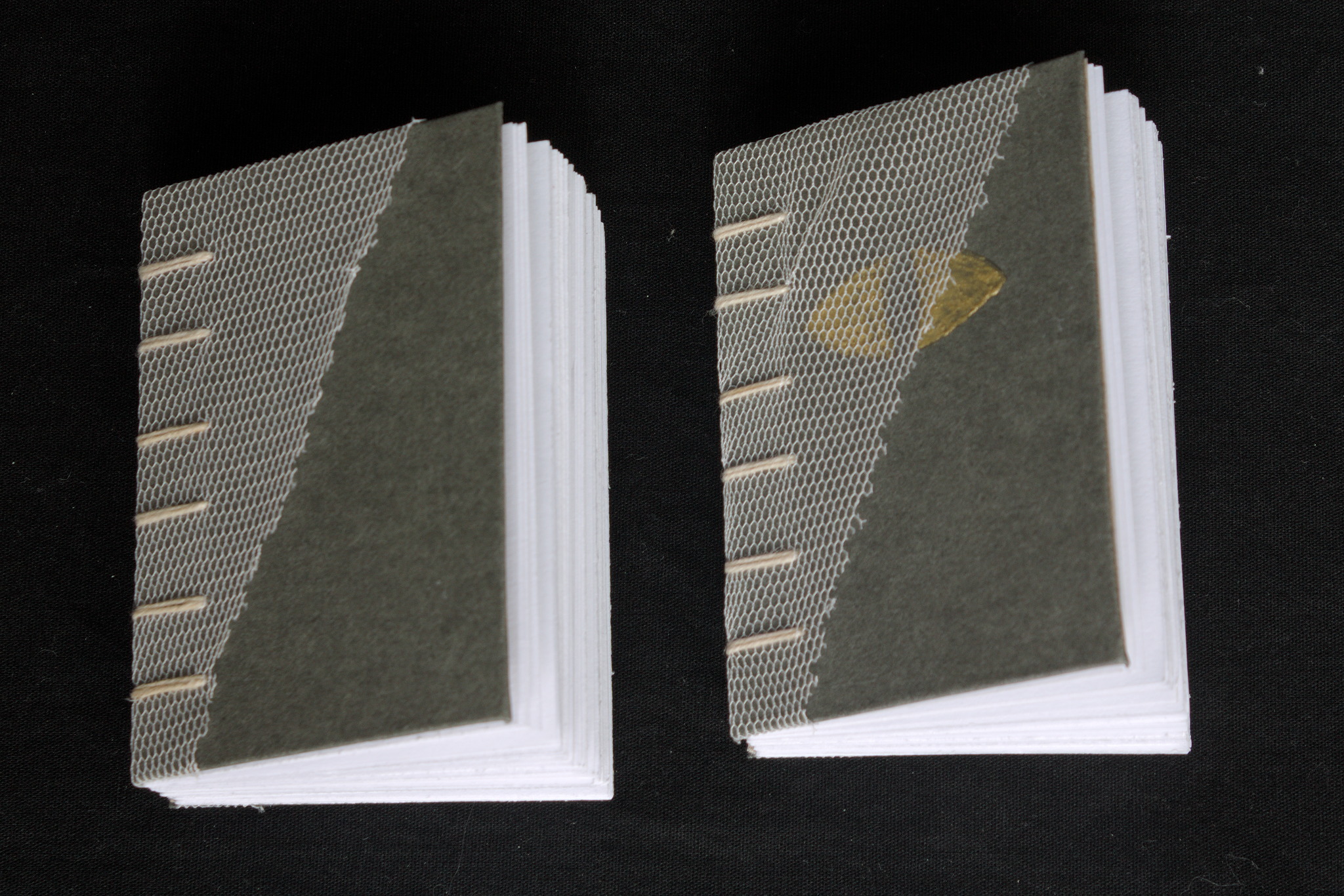

Thanks to the small size, and the way Coptic binding works, I've been able to play around with the covers, experimenting with different styles beyond the classic bookbinding cloth / paper covered cardboard, including adding lace, covering food box cardboard with gesso and decorating it with acrylic paints, embossing designs by gluing together two layers of cardboard, one of which has holes, making covers completely out of Cernit, etc. Some of these I will probably also use in future full-scale projects, but it's nice to find out what works and what doesn't on a small scale.

Now, after a year of sporadically making these, I have to say that the making went quite well: I enjoyed the making and the creativity in making different covers. The giving away was a bit more problematic, as I didn't really have a lot of chances to do so, so I believe I still have most of them. In 2024 I'll try to look for more opportunities (and if you live nearby and want one or a few, feel free to ask!)

| Series: | Magic of the Lost #2 |
| Publisher: | Orbit |
| Copyright: | March 2023 |
| ISBN: | 0-316-54283-0 |
| Format: | Kindle |
| Pages: | 527 |

This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts. In lieu of this, however, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) has spurred you into picking something up yourself.

Books

- Peter Watts: Blindsight (2006)
- Reyner Banham: Los Angeles: The Architecture of Four Ecologies (2006)
- Joanne McNeil: Lurking: How a Person Became a User (2020)
- J. L. Carr: A Month in the Country (1980)
- Hilary Mantel: A Memoir of My Former Self: A Life in Writing (2023)
- Adam Higginbotham: Midnight in Chernobyl (2019)
- Tony Judt: Postwar: A History of Europe Since 1945 (2005)
- Tony Judt: Reappraisals: Reflections on the Forgotten Twentieth Century (2008)
- Peter Apps: Show Me the Bodies: How We Let Grenfell Happen (2021)
- Joan Didion: Slouching Towards Bethlehem (1968)
- Erik Larson: The Devil in the White City (2003)

Films

Recent releases

| Series: | Janet Watson Chronicles #2 |
| Publisher: | Harper Voyager |
| Copyright: | July 2019 |
| ISBN: | 0-06-269938-5 |
| Format: | Kindle |
| Pages: | 325 |

By the influencers on the famous proprietary video platform.

When I'm crafting with no powertools I tend to watch videos, and this autumn I've seen a few in a row that were making red wool dresses, at least one or two medieval kirtles. I don't remember which channels they were, and I've decided not to go back and look for them, at least for a time.

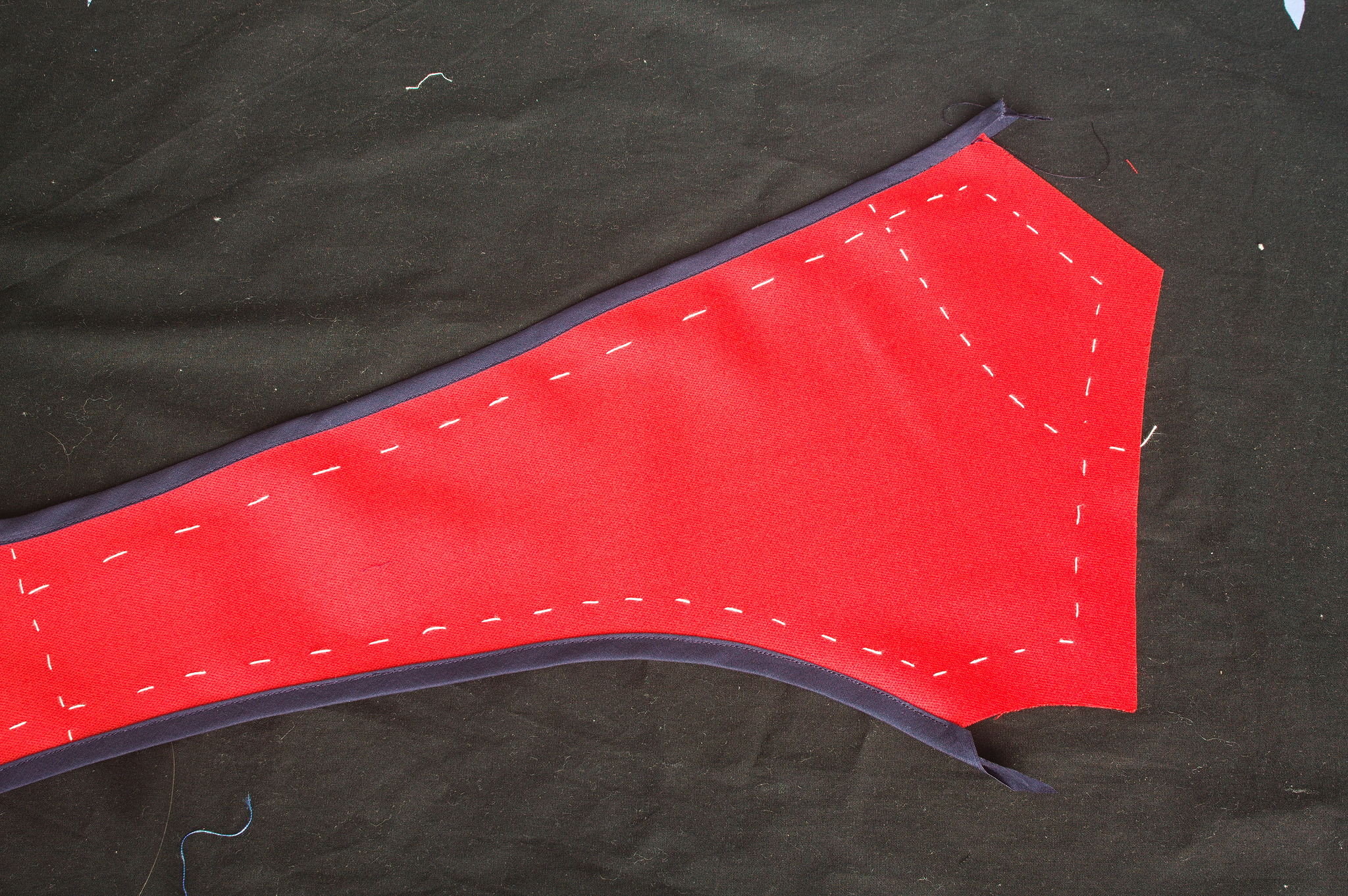

Anyway, my brain suddenly decided that I needed a red wool dress, fitted enough to give some bust support. I had already made a dress that satisfied the latter requirement and I still had more than half of the red wool faille I've used for the Garibaldi blouse (still not blogged, but I will get to it), and this time I wanted it to be ready for this winter.

While the pattern I was going to use is Victorian, it was designed for underwear, and this was designed to be outerwear, so from the very start I decided not to bother too much with any kind of historical details or techniques.

I knew that I didn't have enough fabric to add a flounce to the hem, as in the cotton dress, but then I remembered that some time ago I fell for a piece of fringed trim in black, white and red. I did a quick check that the red wasn't clashing (it wasn't) and I knew I had a plan for the hem decoration.

Then I spent a week finishing other projects, and the more I thought about this dress, the more I was tempted to have spiral lacing at the front rather than buttons, as a nod to the kirtle inspiration.

It may end up being a bit of a hassle, but if it is too much I can always add a hidden zipper on a side seam, and only have to undo a bit of the lacing around the neckhole to wear the dress.

Finally, I could start working on the dress: I cut all of the main pieces, and since the seam lines were quite curved I marked them with tailor's tacks, which I don't exactly enjoy doing or removing, but are the only method that was guaranteed to survive while manipulating this fabric (and not leave traces afterwards).

While cutting the front pieces I accidentally cut the high neck line instead of the one I had used on the cotton dress: I decided to go for it also on the back pieces and decide later whether I wanted to lower it.

Since this is a modern dress, with no historical accuracy at all, and I have access to a serger, I decided to use some dark blue cotton voile I've had in my stash for quite some time, cut into bias strips, to bind the raw edges before sewing. This works significantly better than bought bias tape, which is a bit too stiff for this.

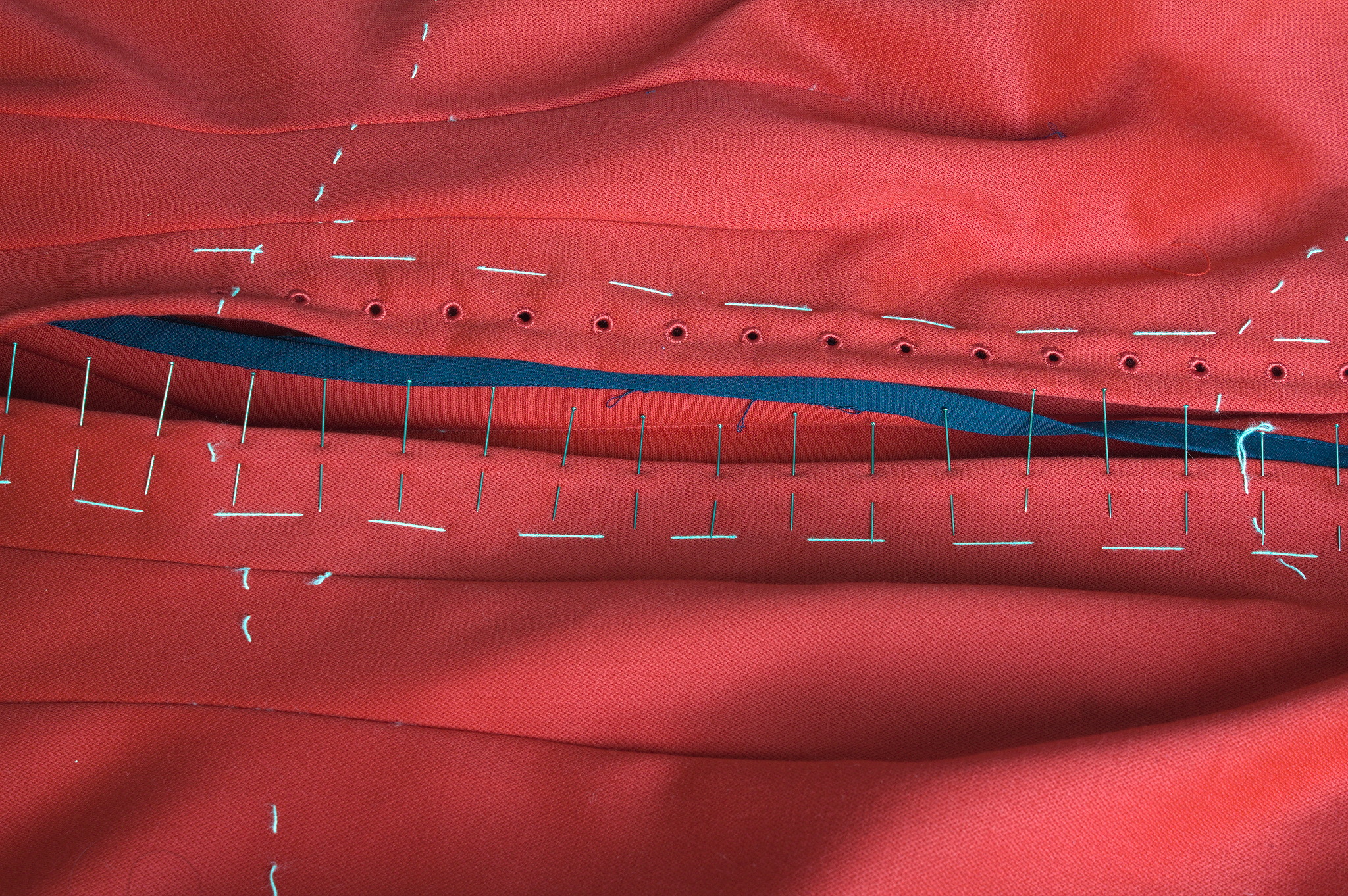

For the front opening, I've decided to reinforce the areas where the lacing holes will be with cotton: I've used some other navy blue cotton, also from the stash, and added two lines of cording to stiffen the front edge.

So I've cut the front in two pieces rather than on the fold, sewn the reinforcements to the sewing allowances in such a way that the corded edge was aligned with the center front and then sewn the bottom of the front seam from just before the end of the reinforcements to the hem.

The allowances are then folded back, and then they are kept in place by the worked lacing holes. The cotton was pinked, while for the wool I used the selvedge of the fabric and there was no need for any finishing.

Behind the opening I've added a modesty placket: I've cut a strip of red wool, a strip of cotton, folded the edge of the strip of cotton to the center, added cording to the long sides, pressed the allowances of the wool towards the wrong side, and then handstitched the cotton to the wool, wrong sides facing. This was finally handstitched to one side of the sewing allowance of the center front.

I've also decided to add real pockets, rather than just slits, and for some reason I decided to add them by hand after I had sewn the dress, so I've left openings in the side back seams, where the slits were in the cotton dress. I've also already worn the dress, but haven't added the pockets yet, as I'm still debating about their shape. This will be fixed in the near future.

Another thing that will have to be fixed is the trim situation: I like the fringe at the bottom, and I had enough to also make a belt, but this makes the top of the dress a bit empty. I can't use the same fringe tape, as it is too wide, but it would be nice to have something smaller that matches the patterned part. And I think I can make something suitable with tablet weaving, but I'm not sure which materials to use, so it will have to be on hold for a while, until I decide on the supplies and have the time for making it.

Another improvement I'd like to add is detached sleeves, both matching (I should still have just enough fabric) and contrasting, but first I want to learn more about real kirtle construction, and maybe start making sleeves that would be suitable also for a real kirtle.

Meanwhile, I've worn it on Christmas (over my 1700s menswear shirt with big sleeves) and may wear it again tomorrow (if I bother to dress up to spend New Year's Eve at home :D )

Read all parts of the series: Part 1 // Part 2 // Part 3 // Part 4

I've been wanting to write this post for over a year, but lacked energy and time. Before 2023 comes to an end, I want to close this series and share some more insights with you and hopefully provide you with a smile here and there.

For this round of interviews, four more kids around the ages of 8 to 13 were interviewed. 3 of them have a US background; these 3 interviews were done by a friend who recorded them for me, thank you!

As opposed to the previous interviews, these four kids have parents who have a more technical professional background. And this seems to make a difference: even though none of these kids actually knew much better how the internet really works than the other kids that I interviewed, specifically in terms of physical infrastructures, they were much more confident in using the internet, they were able to more correctly name things they see on the internet, and they had partly radical ideas about what they would like to learn or what they would want to change about the internet!

Looking at these results, I think it's safe to say that social reproduction is at work and that we need to improve education for kids who do not profit from this type of social and cultural wealth at home.

But let's dive into the details.

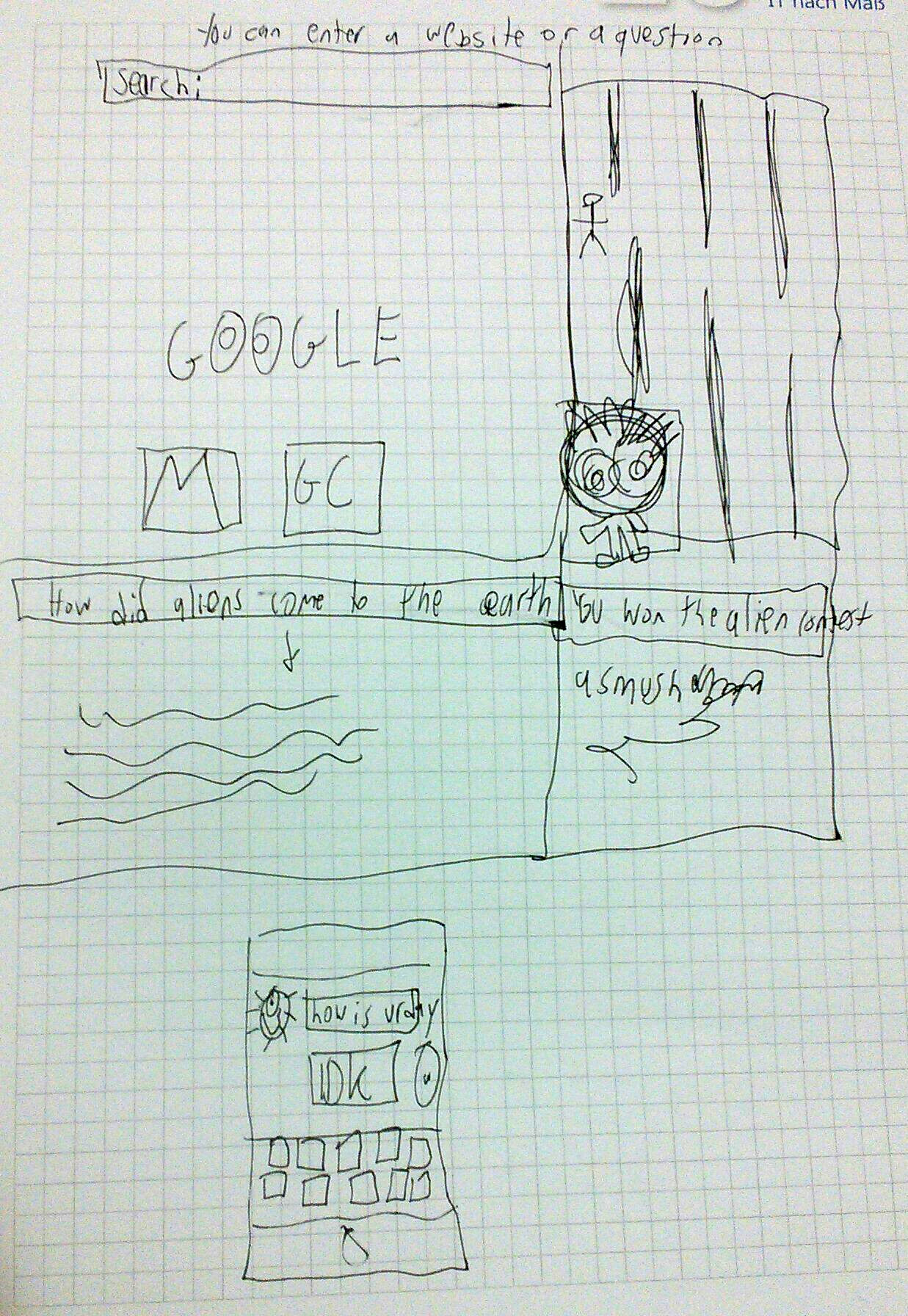

"Well, I mean they're aliens, so I don't know if I wanna tell them much."

(Parents laughing in the background.) Let's assume they're friendly aliens.

"Well, I would say you can look anything up and play different games. And there are alien games. But mostly the enemies are aliens which you might be a little offended by. And you can get work done, if you needed to spy on humans. There's cameras, you can film yourself, yeah. And you can text people and call people who are far away."

And what would be in a drawing that would explain the internet?

And here's what he explains about his drawing:

"First, I would draw what I see when you open a new tab, Google."

On the right side of the drawing we see something like Twitch.

"I don't wanna offend the aliens, but you can film yourself playing a game, so here is the alien and he's playing a game.

"And then you can ask questions like: 'How did aliens come to the Earth?' And the answer will be here (below). And there'll be different websites that you can click on.

"And you can also look up 'Who won the alien contest?' And that would be Usmushgagu, and that guy won the alien contest."

Do you think the information about alien intergalactic football is already on the internet?

"Yeah! That's how fast the internet is."

On the bottom of the drawing we see an iPhone and instant messaging software.

"There's also a device called an iPhone and with it you can text your friends. So here's the alien asking: 'How was ur day?' and the friend might answer 'IDK' [I don't know]."

Imagine that a wise and friendly dragon could teach you one thing about the internet that you've always wanted to know. What would you ask the dragon to teach you about?

"Is there a way you don't have to pay for any channels or subscriptions and you can get through any firewall?"

Imagine you could make the internet better for everyone. What would you do first?

"Well you wouldn't have to pay for it [paywalls]."

Can you describe what happens between your device and a website when you visit a website?

"Well, it takes 0.025 seconds. [...] It's connecting."

Wow, that's indeed fast! We were not able to obtain more details about what exactly that fast thing is that's happening.

"My dad knows everything."

The kid has a laptop and a mobile phone, both with parental controls; they don't think that the controlling is fair. This kid uses the internet mainly for listening to music and watching prank channels on YouTube, but also to work with Purple Mash (a teaching platform for the computing curriculum used at their school) and to find 3D printing models (which they ask their father to print with them, because they have not yet managed to use the printer by themselves). Interestingly, and very differently from the non-tech-parent kids, this kid insists on using Firefox and Signal. The latter is not only used by their dad to tell them to come downstairs for dinner, but also to call their grandmother. This kid also shops online, with the help of the father, who does the actual shopping for them using money that the kid earned by reading books.

If you would need to explain to an alien who has landed on Earth what the internet is, what would you tell them?

"The internet is something where you search, for example, you can look for music. You can also watch videos from around the world, and you can program stuff."

Like most of the kids interviewed, this kid uses the internet mostly for media consumption, but with the difference that they also engage with technology by way of programming using Purple Mash.

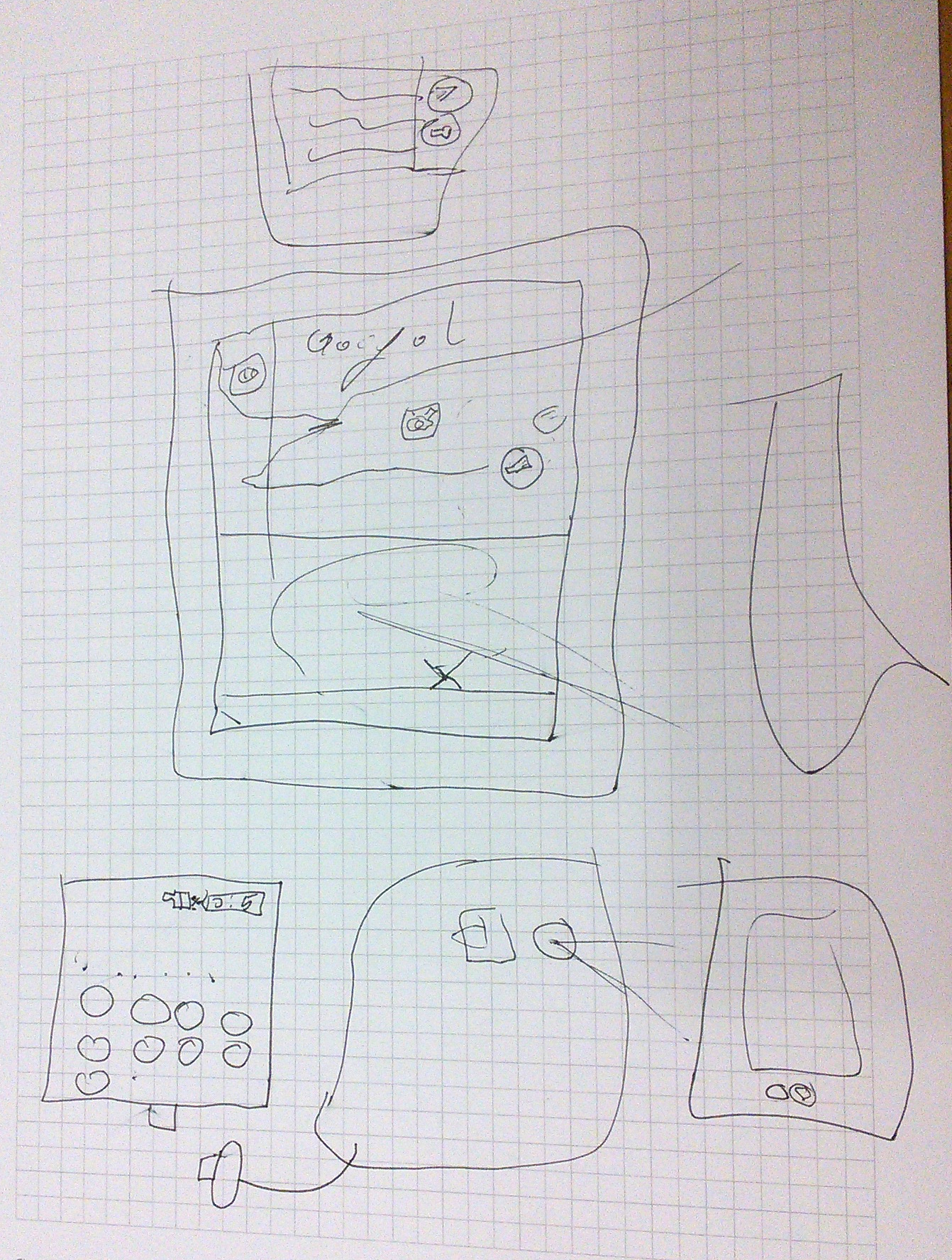

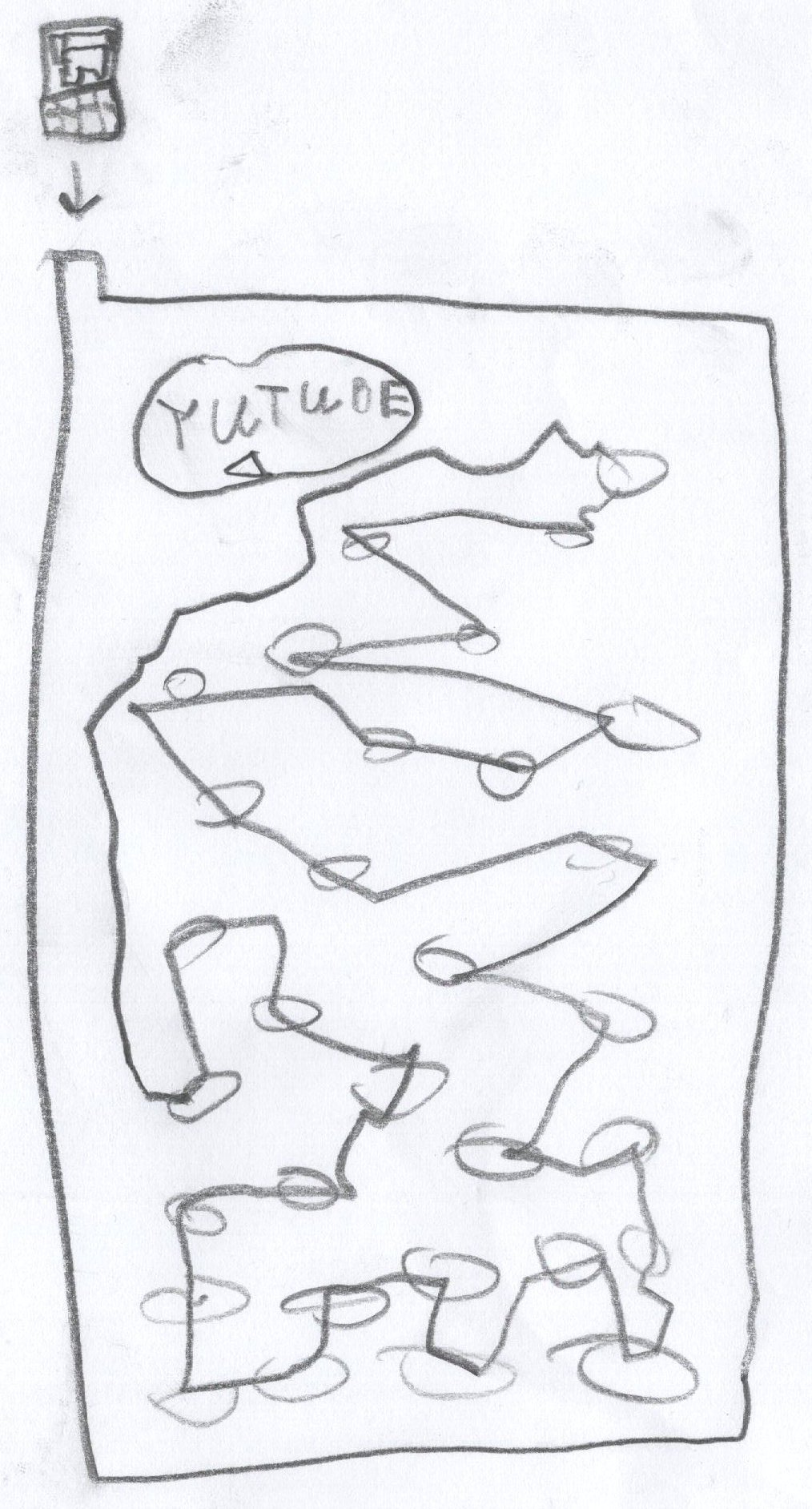

In their drawing we see a YouTube prank channel on a screen, an external trackpad on the right (likely it's not a touch screen), and headphones. Notice how there is no keyboard, or maybe it's folded away.

If you could ask a nice and friendly dragon anything you'd like to learn about the internet, what would it be?


"How do I shut down my dad's computer forever?"

And what is it that he would do to improve the internet for everyone? Contrary to the kid living in the US, they think that

"It takes too much time to load stuff!"

I wonder if this kid experiences the internet as being slow because they use the mobile network or because their connection somehow gets throttled as a way to control media consumption, or if the German internet infrastructure is just so much worse in certain regions.

If you could improve the internet for everyone, what would you do first?

"I'd make a new Firefox app that loads the internet much faster."

In her drawing, we see again Google - it's clearly everywhere - and also the interfaces for calling and texting someone.

To explain what the internet is, besides the fact that one can use it for calling and listening to music, she says:


"[The internet] is something that you can [use to] see someone who is far away, so that you don't need to take time to get to them."

Now, that's a great explanation, the internet providing the possibility for communication over a distance :)

If she could ask a friendly dragon something she always wanted to know, she'd ask how to make her phone come alive:

"that it can talk to you, that it can see you, that it can smile and has eyes. It's like a new family member, you can talk to it."

Sounds a bit like Siri, Alexa, or Furby, doesn't it?

If you could improve the internet for everyone, what would you do first? She'd have the phone be able to decide over her free time, her phone time. That would make the world better, not for the kids, but certainly for the parents.

"An invisible world. A virtual world. But there's also the darknet."

He told me he always watches that German show on public TV for kids that explains stuff: Checker Tobi. (In 2014, Checker Tobi actually produced an episode about the internet, which I'd criticize for having only male characters, except for one female character: a secretary, "Google", a nice and friendly woman guiding the way through the huge library that's the internet.) This kid was the only one interviewed who managed to actually explain something about the internet, or rather about the hypertextual structure of the web. When I asked him to draw the internet, he made a drawing of a pin board. He explained:

"Many items are attached to the pin board, and on the top left corner there's a computer, for example with YouTube, and one can navigate like that between all the items, and start again from the beginning when done."

When I asked if he knew what actually happens between the device and a website he visits, he put forth the hypothesis of the existence of some kind of


"Waves, internet waves - all this stuff somehow needs to be transmitted."

What he'd like to learn:

"How to get into the darknet? How do you become a Whitehat? I've heard these words on the internet, the internet makes me clever."

And what would he change on the internet if he could?

"I want that right wing extreme stuff is not accessible anymore, or at least, that it rains turds (Kackwürste) whenever people watch such stuff. Or that people are always told: 'This video is scum.'"

I suspect that his father has been talking with him about these things, and maybe these are also subjects he heard about when listening to punk music (he told me he does), or browsing YouTube.

| Publisher: | Dutton Books |
| Copyright: | February 2019 |
| Printing: | 2020 |
| ISBN: | 0-7352-3190-7 |
| Format: | Kindle |
| Pages: | 339 |

The Emacs part is superseded by a cleaner approach.

In the upcoming term I want to use KC Lu's web based stacker tool. The key point is that it takes (small) programs encoded as part of the URL. Yesterday I spent some time integrating it into my existing org-beamer workflow.

In my init.el I have

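;; "Execute" a stacker source block by turning its body into a LaTeX
;; \stackerlink call whose argument is the URL-encoded program.
(defun org-babel-execute:stacker (body params)
  (let* (;; Characters to percent-encode: space, newline, and the
         ;; characters that are reserved in URLs.
         (table '(?\s ?\n ?: ?/ ?? ?# ?\[ ?\] ?@ ?! ?$ ?& ?'
                  ?\( ?\) ?* ?+ ?, ?= ?%))
         (slug (org-link-encode body table))
         ;; Spaces come out as %20; write them as + instead.
         (simplified (replace-regexp-in-string "[%]20" "+" slug nil 'literal)))
    (format "\\stackerlink{%s}" simplified)))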
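To make the encoding step concrete, here is a minimal sketch of the same idea in Python, chosen only so that it can be run standalone outside Emacs; it is not part of the workflow described here. The function name `stacker_slug` and the exact character set are assumptions modelled on the table above: only the listed characters are percent-encoded, and `%20` is then rewritten as `+`.

```python
# Characters to escape, modelled on the table in the Emacs Lisp function
# (an assumption): space, newline, and characters reserved in URLs.
RESERVED = set(" \n:/?#[]@!$&'()*+,=%")


def stacker_slug(program: str) -> str:
    """Percent-encode `program` so it can be passed as a URL query value.

    Hypothetical helper for illustration; it mirrors the two steps of the
    elisp version: escape the listed characters, then turn %20 into +.
    """
    pieces = []
    for ch in program:
        if ch in RESERVED:
            # Encode each UTF-8 byte of the character as %XX (uppercase hex).
            pieces.extend(f"%{byte:02X}" for byte in ch.encode("utf-8"))
        else:
            pieces.append(ch)
    return "".join(pieces).replace("%20", "+")


if __name__ == "__main__":
    sample = "(deffun (f x)\n  (let ([y 2])\n    (+ x y)))\n(f 7)"
    print(stacker_slug(sample))
```

A literal `+` in the program becomes `%2B`, so it cannot be confused with the `+` that stands for a space.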

#+begin_src stacker :results value latex :exports both
(deffun (f x)
  (let ([y 2])
    (+ x y)))
(f 7)
#+end_src

#+RESULTS:
#+begin_export latex
\stackerlink{%28deffun+%28f+x%29%0A++%28let+%28%5By+2%5D%29%0A++++%28%2B+x+y%29%29%29%0A%28f+7%29}
#+end_export
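As a quick sanity check, the exported slug can be decoded back into the program text. The sketch below uses `urllib.parse.unquote_plus` from Python's standard library; it is only an illustration of the round trip, not something the org-beamer setup itself needs.

```python
from urllib.parse import unquote_plus

# Slug copied from the exported \stackerlink line.
slug = (
    "%28deffun+%28f+x%29%0A++%28let+%28%5By+2%5D%29"
    "%0A++++%28%2B+x+y%29%29%29%0A%28f+7%29"
)

# unquote_plus turns "+" back into spaces and %XX escapes back into characters.
decoded = unquote_plus(slug)
print(decoded)
```

The decoded text should match the body of the stacker source block, including its indentation.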

The \stackerlink macro is probably fancier than needed. One could just use \href from hyperref.sty, but I wanted to match the appearance of other links in my documents (buttons in the margins). This is based on a now lost answer from stackoverflow.com; I think it wasn't this one, but you get the main idea: use \hyper@normalise.

\makeatletter
% define \stacker@base appropriately
\DeclareRobustCommand*{\stackerlink}{\hyper@normalise\stackerlink@}
\def\stackerlink@#1{%
  \begin{tikzpicture}[overlay]%
    \coordinate (here) at (0,0);%
    % place the button at the left page edge, level with the current position
    \draw (current page.south west |- here)%
      node[xshift=2ex,yshift=3.5ex,fill=magenta,inner sep=1pt]%
      {\hyper@linkurl{\tiny\textcolor{white}{stacker}}{\stacker@base?program=#1}}; %
  \end{tikzpicture}}
\makeatother

| Series: | Sherlock Holmes #1 |
| Publisher: | AmazonClassics |
| Copyright: | 1887 |
| Printing: | February 2018 |
| ISBN: | 1-5039-5525-7 |
| Format: | Kindle |
| Pages: | 159 |

My surprise reached a climax, however, when I found incidentally that he was ignorant of the Copernican Theory and of the composition of the Solar System. That any civilized human being in this nineteenth century should not be aware that the earth travelled round the sun appeared to be to me such an extraordinary fact that I could hardly realize it.

"You appear to be astonished," he said, smiling at my expression of surprise. "Now that I do know it I shall do my best to forget it."

"To forget it!"

"You see," he explained, "I consider that a man's brain originally is like a little empty attic, and you have to stock it with such furniture as you chose. A fool takes in all the lumber of every sort that he comes across, so that the knowledge which might be useful to him gets crowded out, or at best is jumbled up with a lot of other things so that he has a difficulty in laying his hands upon it. Now the skilful workman is very careful indeed as to what he takes into his brain-attic. He will have nothing but the tools which may help him in doing his work, but of these he has a large assortment, and all in the most perfect order. It is a mistake to think that that little room has elastic walls and can distend to any extent. Depend upon it there comes a time when for every addition of knowledge you forget something that you knew before. It is of the highest importance, therefore, not to have useless facts elbowing out the useful ones."

This is directly contrary to my expectation that the best way to make leaps of deduction is to know something about a huge range of topics so that one can draw unexpected connections, particularly given the puzzle-box construction and odd details so beloved in classic mysteries. I'm now curious if Doyle stuck with this conception, and if there were any later mysteries that involved astronomy.

Speaking of classic mysteries, A Study in Scarlet isn't quite one, although one can see the shape of the genre to come. Doyle does not "play fair" by the rules that have not yet been invented. Holmes at most points knows considerably more than the reader, including bits of evidence that are not described until Holmes describes them and research that Holmes does off-camera and only reveals when he wants to be dramatic. This is not the sort of story where the reader is encouraged to try to figure out the mystery before the detective.

Rather, what Doyle seems to be aiming for, and what Watson attempts (unsuccessfully) as the reader surrogate, is slightly different: once Holmes makes one of his grand assertions, the reader is encouraged to guess what Holmes might have done to arrive at that conclusion. Doyle seems to want the reader to guess technique rather than outcome, while providing only vague clues in general descriptions of Holmes's behavior at a crime scene.

The structure of this story is quite odd. The first part is roughly what you would expect: first-person narration from Watson, supposedly taken from his journals but not at all in the style of a journal and explicitly written for an audience. Part one concludes with Holmes capturing and dramatically announcing the name of the killer, who the reader has never heard of before. Part two then opens with... a western?

In the central portion of the great North American Continent there lies an arid and repulsive desert, which for many a long year served as a barrier against the advance of civilization. From the Sierra Nevada to Nebraska, and from the Yellowstone River in the north to the Colorado upon the south, is a region of desolation and silence. Nor is Nature always in one mood throughout the grim district. It comprises snow-capped and lofty mountains, and dark and gloomy valleys. There are swift-flowing rivers which dash through jagged cañons; and there are enormous plains, which in winter are white with snow, and in summer are grey with the saline alkali dust. They all preserve, however, the common characteristics of barrenness, inhospitality, and misery.

First, I have issues with the geography. That region contains some of the most beautiful areas on earth, and while a lot of that region is arid, describing it primarily as a repulsive desert is a bit much. Doyle's boundaries and distances are also confusing: the Yellowstone is a northeast-flowing river with its source in Wyoming, so the area between it and the Colorado does not extend to the Sierra Nevadas (or even to Utah), and it's not entirely clear to me that he realizes Nevada exists. This is probably what it's like for people who live anywhere else in the world when US authors write about their country.

But second, there's no Holmes, no Watson, and not even the pretense of a transition from the detective novel that we were just reading. Doyle just launches into a random western with an omniscient narrator. It features a lean, grizzled man and an adorable child that he adopts and raises into a beautiful free spirit, who then falls in love with a wild gold-rush adventurer. This was written about 15 years before the first critically recognized western novel, so I can't blame Doyle for all the cliches here, but to a modern reader all of these characters are straight from central casting.

Well, except for the villains, who are the Mormons. By that, I don't mean that the villains are Mormon. I mean Brigham Young is the on-page villain, plotting against the hero to force his adopted daughter into a Mormon harem (to use the word that Doyle uses repeatedly) and ruling Salt Lake City with an iron hand, border guards with passwords (?!), and secret police. This part of the book was wild. I was laughing out loud at the sheer malevolent absurdity of the thirty-day countdown to marriage, which I doubt was the intended effect.

We do eventually learn that this is the backstory of the murder, but we don't return to Watson and Holmes for multiple chapters. Which leads me to the other thing that surprised me: Doyle lays out this backstory, but then never has his characters comment directly on the morality of it, only the spectacle. Holmes cares only for the intellectual challenge (and for who gets credit), and Doyle sets things up so that the reader need not concern themselves with aftermath, punishment, or anything of that sort. I probably shouldn't have been surprised (this does fit with the Holmes stereotype), but I'm used to modern fiction where there is usually at least some effort to pass judgment on the events of the story. Doyle draws very clear villains, but is utterly silent on whether the murder is justified.

Given its status in the history of literature, I'm not sorry to have read this book, but I didn't particularly enjoy it. It is very much of its time: everyone's moral character is linked directly to their physical appearance, and Doyle uses the occasional racial stereotype without a second thought. Prevailing writing styles have changed, so the prose feels long-winded and breathless. The rivalry between Holmes and the police detectives is tedious and annoying. I also find it hard to read novels from before the general absorption of techniques of emotional realism and interiority into all genres. The characters in A Study in Scarlet felt more like cartoon characters than fully-realized human beings.

I have no strong opinion about the objective merits of this book in the context of its time other than to note that the sudden inserted western felt very weird. My understanding is that this is not considered one of the better Holmes stories, and Holmes gets some deeper characterization later on. Maybe I'll try another of Doyle's works someday, but for now my curiosity has been sated.

Followed by The Sign of the Four.

Rating: 4 out of 10

This is a post I wrote in June 2022, but did not publish back then. After first publishing it in December 2023, a perfectionist insecure part of me unpublished it again. After receiving positive feedback, I slightly amended it and am republishing it now.

In this post, I talk about unpaid work in F/LOSS, taking hackathons as an example, and why, in my opinion, the expectation of volunteer work is hurting diversity.

Disclaimer: I don't have all the answers, only some ideas and questions.


"Indeed, while we have proven that there is a strong and significant correlation between income and participation in a free/libre software project, it is not possible for us to make a statement about the causality of this link."

In the French original text:

"En effet, si nous avons prouvé qu'il existe une corrélation forte et significative entre le salaire et la participation à un projet libre, il ne nous est pas possible de nous prononcer sur la causalité de ce lien."

Said differently, it is certain that there is a relationship between income and F/LOSS contribution, but it's unclear whether working on free/libre software ultimately helps in finding a well paid job, or if having a well paid job is the cause enabling work on free/libre software. I would like to dig into this question a bit further, mostly relying on my own observations, experiences, and discussions with F/LOSS contributors.

Maybe we need to imagine this cause-effect relationship over time: as a student, without children and with lots of free time, and hopefully some money from the state or the family, people can spend time on F/LOSS, collect experience, and earn recognition. Later they find a well-paid job and turn unpaid F/LOSS contributions into a hobby, cementing their status in the community, while at the same time generating a sense of well-being from working on the common good. This is quite a common scenario.

As the Flosspols study revealed, however, boys often get their own computer at the age of 14, while girls get one only at the age of 20. (These numbers might be slightly different now, and possibly many people don't own an actual laptop or desktop computer anymore; instead they own mobile devices, which do not exactly incite them to look behind the surface, take things apart, learn, and appropriate technology.) In any case, the above scenario does not allow for people who join F/LOSS later in life, e.g. when changing careers, to find their place.

I believe that F/LOSS projects cannot expect to have more women, people of color, people from working class backgrounds, and people from outside of Germany, France, USA, UK, Australia, and Canada on board as long as volunteer work is the status quo and waged labour an earned privilege.

Next.