Narabu is a new intraframe video codec. You may or may not want to read part 1 first.

The GPU, despite being vastly more flexible than it was fifteen years ago, is still a very different beast from your CPU, and not all problems map well to it performance-wise. Thus, before designing a codec, it's useful to know what our platform looks like.

A GPU has lots of special functionality for graphics (well, duh), but we'll be concentrating on the compute shader subset in this context, i.e., we won't be drawing any polygons. Roughly, a GPU (as I understand it!) is built up about as follows:

A GPU contains 1–20 cores; NVIDIA calls them SMs (streaming multiprocessors), Intel calls them subslices. (Trivia: A typical mid-range Intel GPU contains two cores, and thus is designated GT2.) One such core usually runs the same program, although on different data; there are exceptions, but typically, if your program can't fill an entire core with parallelism, you're wasting energy.

Each core, in addition to tons (thousands!) of registers, also has some shared memory (sometimes also called local memory, although that term is overloaded), typically 32–64 kB, which you can think of in two ways: either as a sort-of explicit L1 cache, or as a way to communicate internally on a core. Shared memory is a limited, precious resource in many algorithms.
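
To make the "precious" part concrete, here is a back-of-the-envelope calculation with numbers picked purely for illustration: a core with 64 kB of shared memory and a shader that requests 16 kB per workgroup. Each resident workgroup holds on to its shared memory for its whole lifetime, so shared memory alone caps how many workgroups the core can keep in flight at once:

$$
W_{\max} = \left\lfloor \frac{64\ \text{kB}}{16\ \text{kB}} \right\rfloor = 4
$$

Asking for 24 kB per workgroup instead would drop this to ⌊64/24⌋ = 2. In practice, other limits such as register usage may bite first.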

Each core/SM/subslice contains about 8 execution units (Intel calls them EUs, NVIDIA/AMD call them something else) and some memory access logic. These multiplex a bunch of threads (say, 32) and run in a round-robin-ish fashion. This means that a GPU can handle memory stalls much better than a typical CPU, since it has so many streams to pick from; even though each thread runs in-order, it can just kick off an operation and then go to the next thread while the previous one is working.

Each execution unit has a bunch of ALUs (typically 16) and executes code in a SIMD fashion. NVIDIA calls these ALUs "CUDA cores", AMD calls them "stream processors". Unlike on a CPU, this SIMD has full scatter/gather support (although sequential access, especially in certain patterns, is much more efficient than random access), lane enable/disable so it can work with conditional code, etc. The typically fastest operation is a 32-bit float muladd; usually that's single-cycle. GPUs love 32-bit FP code. (In fact, in some GPU languages, you won't even have 8-, 16-bit or 64-bit types. This is annoying, but not the end of the world.)

The vectorization is not exposed to the user in typical code (GLSL has some vector types, but they're usually just broken up into scalars, so that's a red herring), although in some programming languages you can get to swizzle the SIMD stuff internally to take advantage of that (there are also schemes for broadcasting bits by voting, etc.). However, it is crucially important to performance; if you have divergence within a warp, the GPU needs to execute both sides of the if. So less divergent code is good.

Such a SIMD group is called a warp by NVIDIA (I don't know if the others have names for it). NVIDIA has SIMD/warp width always 32; AMD used to be 64 but is now 16. Intel supports 4–32 (the compiler will autoselect based on a bunch of factors), although 16 is the most common.

The upshot of all of this is that you need massive amounts of parallelism to be able to get useful performance out of a GPU. A rule of thumb is that if you could have launched about a thousand threads for your problem on a CPU, it's a good fit for a GPU, although this is of course just a guideline.
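
As a rough illustration of why, plug in the example figures from above: two cores, about 8 EUs per core, say 32 threads per EU, and 16 SIMD lanes each. These are illustrative numbers, not the spec sheet of any particular GPU. Keeping every lane fed requires this many independent work items in flight:

$$
N = \underbrace{2}_{\text{cores}} \times \underbrace{8}_{\text{EUs per core}} \times \underbrace{32}_{\text{threads per EU}} \times \underbrace{16}_{\text{SIMD lanes}} = 8192
$$

A problem that only exposes a few dozen independent pieces of work leaves almost all of these lanes idle.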

There are a ton of APIs available to write compute shaders. There's CUDA (NVIDIA-only, but the dominant player), D3D compute (Windows-only, but multi-vendor), OpenCL (multi-vendor, but highly variable implementation quality), OpenGL compute shaders (all platforms except macOS, which has too old drivers), Metal (Apple-only) and probably some that I forgot. I've chosen to go for OpenGL compute shaders since I already use OpenGL shaders a lot, and this saves on interop issues. CUDA probably is more mature, but my laptop is Intel. :-) No matter which one you choose, the programming model looks very roughly like this pseudocode:

    for (size_t workgroup_idx = 0; workgroup_idx < NUM_WORKGROUPS; ++workgroup_idx) {  // in parallel over cores
        char shared_mem[REQUESTED_SHARED_MEM];  // private for each workgroup
        for (size_t local_idx = 0; local_idx < WORKGROUP_SIZE; ++local_idx) {  // in parallel on each core
            main(workgroup_idx, local_idx, shared_mem);
        }
    }

except in reality, the indices will be split into x/y/z for your convenience (you control all six dimensions, of course), and if you haven't asked for too much shared memory, the driver can silently make larger workgroups if it helps increase parallelism (this is totally transparent to you). main() doesn't return anything, but you can do reads and writes as you wish; GPUs have large amounts of memory these days, and staggering amounts of memory bandwidth.

Now for the bad part: Generally, you will have no debuggers, no way of logging and no real profilers (if you're lucky, you can get to know how long each compute shader invocation takes, but not what takes time within the shader itself). Especially the latter is maddening; the only real recourse you have is some timers, and then placing timer probes or trying to comment out sections of your code to see if something goes faster. If you don't get the answers you're looking for, forget printf; you need to set up a separate buffer, write some numbers into it and pull that buffer down to the CPU. Profilers are an essential part of optimization, and I had really hoped the world would be more mature here by now. Even CUDA doesn't give you all that much insight; sometimes I wonder if all of this is because GPU drivers and architectures are meant to be shrouded in mystery for competitiveness reasons, but I'm honestly not sure.

So that's it for a crash course in GPU architecture. Next time, we'll start looking at the Narabu codec itself.

I've finally landed a patch/feature for HLedger I've been working on-and-off (mostly off) since around March.

HLedger has a powerful CSV importer which you configure with a set of rules. Rules consist of conditional matchers (does field X in this CSV row match this regular expression?) and field assignments (set the resulting transaction's account to Y).

motivating problem 1

Here's an example of one of my rules for handling credit card repayments. This rule is applied when I import a CSV for my current account, which pays the credit card:


Back in June 2018,


So, this is basically a call for adoption for the Raspberry Debian images building service. I do intend to stick around and try to help. It's not only me (although I'm responsible for the build itself); we have a nice and healthy group of Debian people hanging out in the

I've been a fan of the products manufactured by

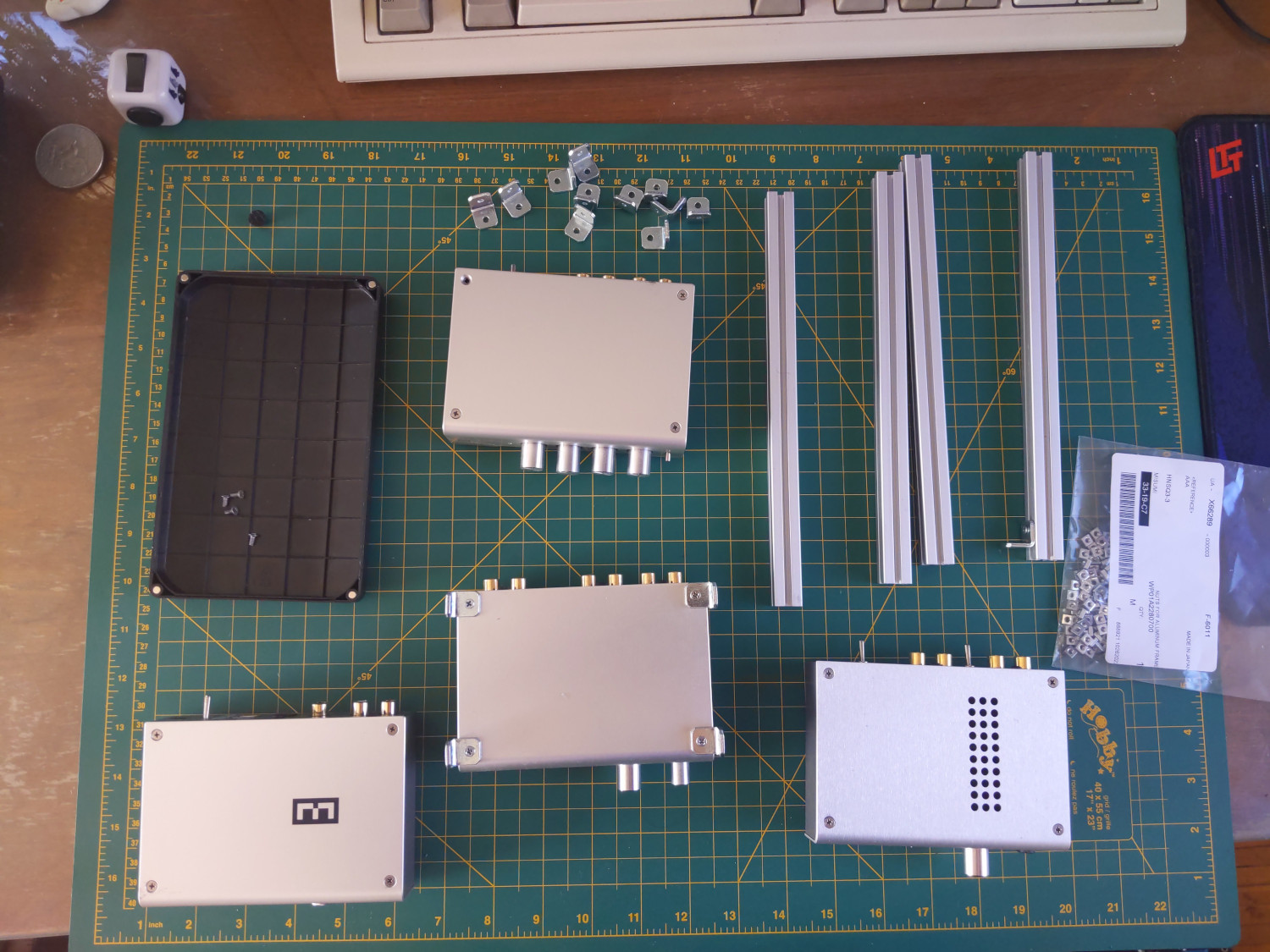


This was my first time working with aluminium frame extrusions and I had tons of fun! I specced the first version using

For the curious ones, the cabling is done this way:

More than a month has passed since the last update of TeX Live packages in Debian, so here is a new checkout!


All arch all packages have been updated to the tlnet state as of 2020-06-29, see the detailed update list below.

Enjoy.

New packages

My favorite this time is


Narabu is a new intraframe video codec. You probably want to read

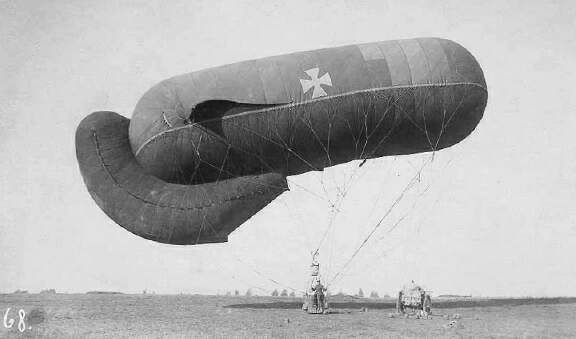

Over a hundred years ago, Russian leaders proposed a treaty
