In my recent

post about data archiving to removable media, I laid out the difference between backing up and archiving, and also said I d evaluate

git-annex and

dar. This post evaluates git-annex. The next will look at dar, and then I ll make a comparison post.

What is git-annex?

git-annex is a fantastic and versatile program that does well, it s one of those things that can do so much that it s a bit hard to describe. Its homepage says:

git-annex allows managing large files with git, without storing the file contents in git. It can sync, backup, and archive your data, offline and online. Checksums and encryption keep your data safe and secure. Bring the power and distributed nature of git to bear on your large files with git-annex.

I think the particularly interesting features of git-annex aren t actually included in that list. Among the features of git-annex that make it shine for this purpose, its

location tracking is key. git-annex can know exactly which device has which file at which version at all times. Combined with its

preferred content settings, this lets you very easily say things like:

- I want exactly 1 copy of every file to exist within the set #1 of backup drives. Here s a drive in that set; copy to it whatever needs to be copied to satisfy that requirement.

- Now I have another set of backup drives. Periodically I will swap sets offsite. Copy whatever is needed to this drive in the second set, making sure that there is 1 copy of every file within this set as well, regardless of what s in the first set.

- Here s a directory I want to use to track the status of everything else. I don t want any copies at all here.

git-annex can be set to allow a configurable amount of free space to remain on a device, and it will fill it up with whatever copies are necessary up until it hits that limit. Very convenient!

git-annex will store files in a folder structure that mirrors the origin folder structure, in plain files just as they were. This maximizes the ability for a future person to access the content, since it is all viewable without any special tool at all. Of course, for things like optical media, git-annex will essentially be creating what amounts to incrementals. To obtain a consistent copy of the original tree, you would still need to use git-annex to process (export) the archives.

git-annex challenges

In my

prior post, I related some challenges with git-annex. The biggest of them quite poor performance of the directory special remote when dealing with many files has been

resolved by Joey, git-annex s author! That dramatically improves the git-annex use scenario here! The fixing commit is in the source tree but not yet in a release.

git-annex no doubt may still have performance challenges with repositories in the 100,000+-range, but in that order of magnitude it now looks usable. I m not sure about 1,000,000-file repositories (I haven t tested); there is a

page about scalability.

A few other more minor challenges remain:

- git-annex doesn t really preserve POSIX attributes; for instance, permissions, symlink destinations, and timestamps are all not preserved. Of these, timestamps are the most important for my particular use case.

- If your data set to archive contains Git repositories itself, these will not be included.

I worked around the timestamp issue by using the mtree-netbsd package in Debian. mtree writes out a summary of files and metadata in a tree, and can restore them. To save:

mtree -c -R nlink,uid,gid,mode -p /PATH/TO/REPO -X <(echo './.git') > /tmp/spec

And, after restoration, the timestamps can be applied with:

mtree -t -U -e < /tmp/spec

Walkthrough: initial setup

To use git-annex in this way, we have to do some setup. My general approach is this:

- There is a source of data that lives outside git-annex. I'll call this $SOURCEDIR.

- I'm going to name the directories holding my data $REPONAME.

- There will be a "coordination" git-annex repo. It will hold metadata only, and no data. This will let us track where things live. I'll call it $METAREPO.

- There will be drives. For this example, I'll call their mountpoints $DRIVE01 and $DRIVE02. For easy demonstration purposes, I used a ZFS dataset with a refquota set (to observe the size handling), but I could have as easily used a LVM volume, btrfs dataset, loopback filesystem, or USB drive. For optical discs, this would be a staging area or a UDF filesystem.

Let's get started! I've set all these shell variables appropriately for this example, and REPONAME to "testdata". We'll begin by setting up the metadata-only tracking repo.

$ REPONAME=testdata

$ mkdir "$METAREPO"

$ cd "$METAREPO"

$ git init

$ git config annex.thin true

There is a sort of complicated topic of how git-annex stores files in a repo, which varies depending on whether the data for the file is present in a given repo, and whether the file is locked or unlocked. Basically, the options I use here cause git-annex to mostly use hard links instead of symlinks or pointer files, for maximum compatibility with non-POSIX filesystems such as NTFS and UDF, which might be used on these devices. thin is part of that.

Let's continue:

$ git annex init 'local hub'

init local hub ok

(recording state in git...)

$ git annex wanted . "include=* and exclude=$REPONAME/*"

wanted . ok

(recording state in git...)

In a bit, we are going to import the source data under the directory named $REPONAME (here, testdata). The wanted command says: in this repository (represented by the bare dot), the files we want are matched by the rule that says eveyrthing except what's under $REPONAME. In other words, we don't want to make an unnecessary copy here.

Because I expect to use an mtree file as documented above, and it is not under $REPONAME/, it will be included. Let's just add it and tweak some things.

$ touch mtree

$ git annex add mtree

add mtree

ok

(recording state in git...)

$ git annex sync

git-annex sync will change default behavior to operate on --content in a future version of git-annex. Recommend you explicitly use --no-content (or -g) to prepare for that change. (Or you can configure annex.synccontent)

commit

[main (root-commit) 6044742] git-annex in local hub

1 file changed, 1 insertion(+)

create mode 120000 mtree

ok

$ ls -l

total 9

lrwxrwxrwx 1 jgoerzen jgoerzen 178 Jun 15 22:31 mtree -> .git/annex/objects/pX/ZJ/...

OK! We've added a file, and it got transformed into a symlink. That's the thing I said we were going to avoid, so:

git annex adjust --unlock-present

adjust

Switched to branch 'adjusted/main(unlockpresent)'

ok

$ ls -l

total 1

-rw-r--r-- 2 jgoerzen jgoerzen 0 Jun 15 22:31 mtree

You'll notice it transformed into a hard link (nlinks=2) file. Great! Now let's import the source data. For that, we'll use the directory special remote.

$ git annex initremote source type=directory directory=$SOURCEDIR importtree=yes \

encryption=none

initremote source ok

(recording state in git...)

$ git annex enableremote source directory=$SOURCEDIR

enableremote source ok

(recording state in git...)

$ git config remote.source.annex-readonly true

$ git config annex.securehashesonly true

$ git config annex.genmetadata true

$ git config annex.diskreserve 100M

$ git config remote.source.annex-tracking-branch main:$REPONAME

OK, so here we created a new remote named "source". We enabled it, and set some configuration. Most notably, that last line causes files from "source" to be imported under $REPONAME/ as we wanted earlier. Now we're ready to scan the source.

$ git annex sync

At this point, you'll see git-annex computing a hash for every file in the source directory.

I can verify with du that my metadata-only repo only uses 14MB of disk space, while my source is around 4GB.

Now we can see what git-annex thinks about file locations:

$ git-annex whereis less

whereis mtree (1 copy)

8aed01c5-da30-46c0-8357-1e8a94f67ed6 -- local hub [here]

ok

whereis testdata/[redacted] (0 copies)

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

failed

... many more lines ...

So remember we said we wanted mtree, but nothing under testdata, under this repo? That's exactly what we got. git-annex knows that the files under testdata can be found under the "source" special remote, but aren't in any git-annex repo -- yet. Now we'll start adding them.

Walkthrough: removable drives

I've set up two 500MB filesystems to represent removable drives. We'll see how git-annex works with them.

$ cd $DRIVE01

$ df -h .

Filesystem Size Used Avail Use% Mounted on

acrypt/no-backup/annexdrive01 500M 1.0M 499M 1% /acrypt/no-backup/annexdrive01

$ git clone $METAREPO

Cloning into 'testdata'...

done.

$ cd $REPONAME

$ git config annex.thin true

$ git annex init "test drive #1"

$ git annex adjust --hide-missing --unlock

adjust

Switched to branch 'adjusted/main(hidemissing-unlocked)'

ok

$ git annex sync

OK, that's the initial setup. Now let's enable the source remote and configure it the same way we did before:

$ git annex enableremote source directory=$SOURCEDIR

enableremote source ok

(recording state in git...)

$ git config remote.source.annex-readonly true

$ git config remote.source.annex-tracking-branch main:$REPONAME

$ git config annex.securehashesonly true

$ git config annex.genmetadata true

$ git config annex.diskreserve 100M

Now, we'll add the drive to a group called "driveset01" and configure what we want on it:

$ git annex group . driveset01

$ git annex wanted . '(not copies=driveset01:1)'

What this does is say: first of all, this drive is in a group named driveset01. Then, this drive wants any files for which there isn't already at least one copy in driveset01.

Now let's load up some files!

$ git annex sync --content

As the messages fly by from here, you'll see it mentioning that it got mtree, and then various files from "source" -- until, that is, the filesystem had less than 100MB free, at which point it complained of no space for the rest. Exactly like we wanted!

Now, we need to teach $METAREPO about $DRIVE01.

$ cd $METAREPO

$ git remote add drive01 $DRIVE01/$REPONAME

$ git annex sync drive01

git-annex sync will change default behavior to operate on --content in a future version of git-annex. Recommend you explicitly use --no-content (or -g) to prepare for that change. (Or you can configure annex.synccontent)

commit

On branch adjusted/main(unlockpresent)

nothing to commit, working tree clean

ok

merge synced/main (Merging into main...)

Updating d1d9e53..817befc

Fast-forward

(Merging into adjusted branch...)

Updating 7ccc20b..861aa60

Fast-forward

ok

pull drive01

remote: Enumerating objects: 214, done.

remote: Counting objects: 100% (214/214), done.

remote: Compressing objects: 100% (95/95), done.

remote: Total 110 (delta 6), reused 0 (delta 0), pack-reused 0

Receiving objects: 100% (110/110), 13.01 KiB 1.44 MiB/s, done.

Resolving deltas: 100% (6/6), completed with 6 local objects.

From /acrypt/no-backup/annexdrive01/testdata

* [new branch] adjusted/main(hidemissing-unlocked) -> drive01/adjusted/main(hidemissing-unlocked)

* [new branch] adjusted/main(unlockpresent) -> drive01/adjusted/main(unlockpresent)

* [new branch] git-annex -> drive01/git-annex

* [new branch] main -> drive01/main

* [new branch] synced/main -> drive01/synced/main

ok

OK! This step is important, because drive01 and drive02 (which we'll set up shortly) won't necessarily be able to reach each other directly, due to not being plugged in simultaneously. Our $METAREPO, however, will know all about where every file is, so that the "wanted" settings can be correctly resolved. Let's see what things look like now:

$ git annex whereis less

whereis mtree (2 copies)

8aed01c5-da30-46c0-8357-1e8a94f67ed6 -- local hub [here]

b46fc85c-c68e-4093-a66e-19dc99a7d5e7 -- test drive #1 [drive01]

ok

whereis testdata/[redacted] (1 copy)

b46fc85c-c68e-4093-a66e-19dc99a7d5e7 -- test drive #1 [drive01]

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

ok

If I scroll down a bit, I'll see the files past the 400MB mark that didn't make it onto drive01. Let's add another example drive!

Walkthrough: Adding a second drive

The steps for $DRIVE02 are the same as we did before, just with drive02 instead of drive01, so I'll omit listing it all a second time. Now look at this excerpt from whereis:

whereis testdata/[redacted] (1 copy)

b46fc85c-c68e-4093-a66e-19dc99a7d5e7 -- test drive #1 [drive01]

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

ok

whereis testdata/[redacted] (1 copy)

c4540343-e3b5-4148-af46-3f612adda506 -- test drive #2 [drive02]

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

ok

Look at that! Some files on drive01, some on drive02, some neither place. Perfect!

Walkthrough: Updates

So I've made some changes in the source directory: moved a file, added another, and deleted one. All of these were copied to drive01 above. How do we handle this?

First, we update the metadata repo:

$ cd $METAREPO

$ git annex sync

$ git annex dropunused all

OK, this has scanned $SOURCEDIR and noted changes. Let's see what whereis says:

$ git annex whereis less

...

whereis testdata/cp (0 copies)

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

failed

whereis testdata/file01-unchanged (1 copy)

b46fc85c-c68e-4093-a66e-19dc99a7d5e7 -- test drive #1 [drive01]

The following untrusted locations may also have copies:

9e48387e-b096-400a-8555-a3caf5b70a64 -- [source]

ok

So this looks right. The file I added was a copy of /bin/cp. I moved another file to one named file01-unchanged. Notice that it realized this was a rename and that the data still exists on drive01.

Well, let's update drive01.

$ cd $DRIVE01/$REPONAME

$ git annex sync --content

Looking at the testdata/ directory now, I see that file01-unchanged has been renamed, the deleted file is gone, but cp isn't yet here -- probably due to space issues; as it's new, it's undefined whether it or some other file would fill up free space. Let's work along a few more commands.

$ git annex get --auto

$ git annex drop --auto

$ git annex dropunused all

And now, let's make sure metarepo is updated with its state.

$ cd $METAREPO

$ git annex sync

We could do the same for drive02. This is how we would proceed with every update.

Walkthrough: Restoration

Now, we have bare files at reasonable locations in drive01 and drive02. But, to generate a consistent restore, we need to be able to actually do an export. Otherwise, we may have files with old names, duplicate files, etc. Let's assume that we lost our source and metadata repos and have to restore from scratch. We'll make a new $RESTOREDIR. We'll begin with drive01 since we used it most recently.

$ mv $METAREPO $METAREPO.disabled

$ mv $SOURCEDIR $SOURCEDIR.disabled

$ git clone $DRIVE01/$REPONAME $RESTOREDIR

$ cd $RESTOREDIR

$ git config annex.thin true

$ git annex init "restore"

$ git annex adjust --hide-missing --unlock

Now, we need to connect the drive01 and pull the files from it.

$ git remote add drive01 $DRIVE01/$REPONAME

$ git annex sync --content

Now, repeat with drive02:

$ git remote add drive02 $DRIVE02/$REPONAME

$ git annex sync --content

Now we've got all our content back! Here's what whereis looks like:

whereis testdata/file01-unchanged (3 copies)

3d663d0f-1a69-4943-8eb1-f4fe22dc4349 -- restore [here]

9e48387e-b096-400a-8555-a3caf5b70a64 -- source

b46fc85c-c68e-4093-a66e-19dc99a7d5e7 -- test drive #1 [origin]

ok

...

I was a little surprised that drive01 didn't seem to know what was on drive02. Perhaps that could have been remedied by adding more remotes there? I'm not entirely sure; I'd thought would have been able to do that automatically.

Conclusions

I think I have demonstrated two things:

First, git-annex is indeed an extremely powerful tool. I have only scratched the surface here. The location tracking is a neat feature, and being able to just access the data as plain files if all else fails is nice for future users.

Secondly, it is also a complex tool and difficult to get right for this purpose (I think much easier for some other purposes). For someone that doesn't live and breathe git-annex, it can be hard to get right. In fact, I'm not entirely sure I got it right here. Why didn't drive02 know what files were on drive01 and vice-versa? I don't know, and that reflects some kind of misunderstanding on my part about how metadata is synced; perhaps more care needs to be taken in restore, or done in a different order, than I proposed. I initially tried to do a restore by using git annex export to a directory special remote with exporttree=yes, but I couldn't ever get it to actually do anything, and I don't know why.

These two cut against each other. On the one hand, the raw accessibility of the data to someone with no computer skills is unmatched. On the other hand, I'm not certain I have the skill to always prepare the discs properly, or to do a proper consistent restore.

Having setup recursive DNS it was time to actually sort out a backup internet connection. I live in a Virgin Media area, but I still haven t forgiven them for my terrible Virgin experiences when moving here. Plus it involves a bigger contractual commitment. There are no altnets locally (though I m watching youfibre who have already rolled out in a few Belfast exchanges), so I decided to go for a 5G modem. That gives some flexibility, and is a bit easier to get up and running.

I started by purchasing a ZTE MC7010. This had the advantage of being reasonably cheap off eBay, not having any wifi functionality I would just have to disable (it s going to plug it into the same router the FTTP connection terminates on), being outdoor mountable should I decide to go that way, and, finally, being powered via PoE.

For now this device sits on the window sill in my study, which is at the top of the house. I printed a table stand for it which mostly does the job (though not as well with a normal, rather than flat, network cable). The router lives downstairs, so I ve extended a dedicated VLAN through the study switch, down to the core switch and out to the router. The PoE study switch can only do GigE, not 2.5Gb/s, but at present that s far from the limiting factor on the speed of the connection.

The device is 3 branded, and, as it happens, I ve ended up with a 3 SIM in it. Up until recently my personal phone was with them, but they ve kicked me off Go Roam, so I ve moved. Going with 3 for the backup connection provides some slight extra measure of resiliency; we now have devices on all 4 major UK networks in the house. The SIM is a preloaded data only SIM good for a year; I don t expect to use all of the data allowance, but I didn t want to have to worry about unexpected excess charges.

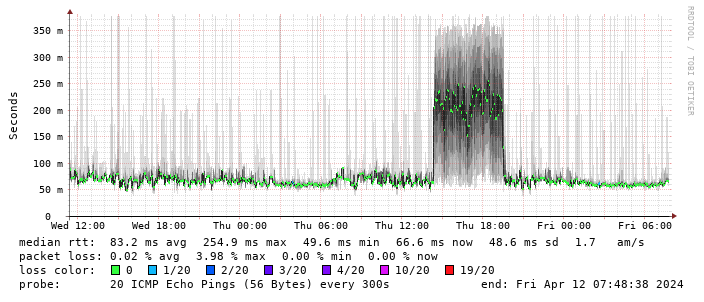

Performance turns out to be disappointing; I end up locking the device to 4G as the 5G signal is marginal - leaving it enabled results in constantly switching between 4G + 5G and a significant extra latency. The smokeping graph below shows a brief period where I removed the 4G lock and allowed 5G:

Having setup recursive DNS it was time to actually sort out a backup internet connection. I live in a Virgin Media area, but I still haven t forgiven them for my terrible Virgin experiences when moving here. Plus it involves a bigger contractual commitment. There are no altnets locally (though I m watching youfibre who have already rolled out in a few Belfast exchanges), so I decided to go for a 5G modem. That gives some flexibility, and is a bit easier to get up and running.

I started by purchasing a ZTE MC7010. This had the advantage of being reasonably cheap off eBay, not having any wifi functionality I would just have to disable (it s going to plug it into the same router the FTTP connection terminates on), being outdoor mountable should I decide to go that way, and, finally, being powered via PoE.

For now this device sits on the window sill in my study, which is at the top of the house. I printed a table stand for it which mostly does the job (though not as well with a normal, rather than flat, network cable). The router lives downstairs, so I ve extended a dedicated VLAN through the study switch, down to the core switch and out to the router. The PoE study switch can only do GigE, not 2.5Gb/s, but at present that s far from the limiting factor on the speed of the connection.

The device is 3 branded, and, as it happens, I ve ended up with a 3 SIM in it. Up until recently my personal phone was with them, but they ve kicked me off Go Roam, so I ve moved. Going with 3 for the backup connection provides some slight extra measure of resiliency; we now have devices on all 4 major UK networks in the house. The SIM is a preloaded data only SIM good for a year; I don t expect to use all of the data allowance, but I didn t want to have to worry about unexpected excess charges.

Performance turns out to be disappointing; I end up locking the device to 4G as the 5G signal is marginal - leaving it enabled results in constantly switching between 4G + 5G and a significant extra latency. The smokeping graph below shows a brief period where I removed the 4G lock and allowed 5G:

(There s a handy zte.js script to allow doing this from the device web interface.)

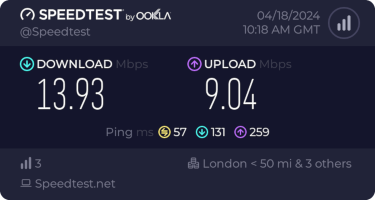

I get about 10Mb/s sustained downloads out of it. EE/Vodafone did not lead to significantly better results, so for now I m accepting it is what it is. I tried relocating the device to another part of the house (a little tricky while still providing switch-based PoE, but I have an injector), without much improvement. Equally pinning the 4G to certain bands provided a short term improvement (I got up to 40-50Mb/s sustained), but not reliably so.

(There s a handy zte.js script to allow doing this from the device web interface.)

I get about 10Mb/s sustained downloads out of it. EE/Vodafone did not lead to significantly better results, so for now I m accepting it is what it is. I tried relocating the device to another part of the house (a little tricky while still providing switch-based PoE, but I have an injector), without much improvement. Equally pinning the 4G to certain bands provided a short term improvement (I got up to 40-50Mb/s sustained), but not reliably so.

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

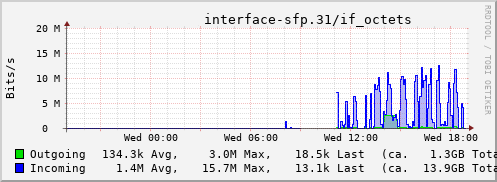

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

Years ago, at what I think I remember was DebConf 15, I hacked for a while

on debhelper to

Years ago, at what I think I remember was DebConf 15, I hacked for a while

on debhelper to

After making my

After making my

In late 2022 I prepared a batch of

In late 2022 I prepared a batch of  I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

A new maintenance release of our

A new maintenance release of our  CW for body size change mentions

I needed a corset, badly.

Years ago I had a chance to have my measurements taken by a former

professional corset maker and then a lesson in how to draft an underbust

corset, and that lead to me learning how nice wearing a well-fitted

corset feels.

Later I tried to extend that pattern up for a midbust corset, with

success.

And then my body changed suddenly, and I was no longer able to wear

either of those, and after a while I started missing them.

Since my body was still changing (if no longer drastically so), and I

didn t want to use expensive materials for something that had a risk of

not fitting after too little time, I decided to start by making myself a

summer lightweight corset in aida cloth and plastic boning (for which I

had already bought materials). It fitted, but not as well as the first

two ones, and I ve worn it quite a bit.

I still wanted back the feeling of wearing a comfy, heavy contraption of

coutil and steel, however.

After a lot of procrastination I redrafted a new pattern, scrapped

everything, tried again, had my measurements taken by a dressmaker

[#dressmaker], put them in the draft, cut a first mock-up in cheap

cotton, fixed the position of a seam, did a second mock-up in denim

[#jeans] from an old pair of jeans, and then cut into the cheap

herringbone coutil I was planning to use.

And that s when I went to see which one of the busks in my stash would

work, and realized that I had used a wrong vertical measurement and the

front of the corset was way too long for a midbust corset.

CW for body size change mentions

I needed a corset, badly.

Years ago I had a chance to have my measurements taken by a former

professional corset maker and then a lesson in how to draft an underbust

corset, and that lead to me learning how nice wearing a well-fitted

corset feels.

Later I tried to extend that pattern up for a midbust corset, with

success.

And then my body changed suddenly, and I was no longer able to wear

either of those, and after a while I started missing them.

Since my body was still changing (if no longer drastically so), and I

didn t want to use expensive materials for something that had a risk of

not fitting after too little time, I decided to start by making myself a

summer lightweight corset in aida cloth and plastic boning (for which I

had already bought materials). It fitted, but not as well as the first

two ones, and I ve worn it quite a bit.

I still wanted back the feeling of wearing a comfy, heavy contraption of

coutil and steel, however.

After a lot of procrastination I redrafted a new pattern, scrapped

everything, tried again, had my measurements taken by a dressmaker

[#dressmaker], put them in the draft, cut a first mock-up in cheap

cotton, fixed the position of a seam, did a second mock-up in denim

[#jeans] from an old pair of jeans, and then cut into the cheap

herringbone coutil I was planning to use.

And that s when I went to see which one of the busks in my stash would

work, and realized that I had used a wrong vertical measurement and the

front of the corset was way too long for a midbust corset.

Luckily I also had a few longer busks, I basted one to the denim mock up

and tried to wear it for a few hours, to see if it was too long to be

comfortable. It was just a bit, on the bottom, which could be easily

fixed with the Power Tools

Luckily I also had a few longer busks, I basted one to the denim mock up

and tried to wear it for a few hours, to see if it was too long to be

comfortable. It was just a bit, on the bottom, which could be easily

fixed with the Power Tools I could have been a bit more precise with the binding, as it doesn t

align precisely at the front edge, but then again, it s underwear,

nobody other than me and everybody who reads this post is going to see

it and I was in a hurry to see it finished. I will be more careful with

the next one.

I could have been a bit more precise with the binding, as it doesn t

align precisely at the front edge, but then again, it s underwear,

nobody other than me and everybody who reads this post is going to see

it and I was in a hurry to see it finished. I will be more careful with

the next one.

I also think that I haven t been careful enough when pressing the seams

and applying the tape, and I ve lost about a cm of width per part, so

I m using a lacing gap that is a bit wider than I planned for, but that

may change as the corset gets worn, and is still within tolerance.

Also, on the morning after I had finished the corset I woke up and

realized that I had forgotten to add garter tabs at the bottom edge.

I don t know whether I will ever use them, but I wanted the option, so

maybe I ll try to add them later on, especially if I can do it without

undoing the binding.

The next step would have been flossing, which I proceeded to postpone

until I ve worn the corset for a while: not because there is any reason

for it, but because I still don t know how I want to do it :)

What was left was finishing and uploading the

I also think that I haven t been careful enough when pressing the seams

and applying the tape, and I ve lost about a cm of width per part, so

I m using a lacing gap that is a bit wider than I planned for, but that

may change as the corset gets worn, and is still within tolerance.

Also, on the morning after I had finished the corset I woke up and

realized that I had forgotten to add garter tabs at the bottom edge.

I don t know whether I will ever use them, but I wanted the option, so

maybe I ll try to add them later on, especially if I can do it without

undoing the binding.

The next step would have been flossing, which I proceeded to postpone

until I ve worn the corset for a while: not because there is any reason

for it, but because I still don t know how I want to do it :)

What was left was finishing and uploading the

I use a LUKS-encrypted USB stick to store my GPG and SSH keys, which acts as a

backup and portable key setup when working on different laptops. One

inconvenience with LUKS-encrypted USB sticks is that you need to enter the

password every time you want to mount the device, either through a Window

Manager like KDE or using the cryptsetup luksOpen command. Fortunately, many

laptops nowadays come equipped with TPM2 modules, which can be utilized to

automatically decrypt the device and subsequently mount it. In this post, we'll

explore the usage of systemd-cryptenroll for this purpose, along with udev

rules and a set of scripts to automate the mounting of the encrypted USB.

First, ensure that your device has a TPM2 module. You can run the following

command to check:

I use a LUKS-encrypted USB stick to store my GPG and SSH keys, which acts as a

backup and portable key setup when working on different laptops. One

inconvenience with LUKS-encrypted USB sticks is that you need to enter the

password every time you want to mount the device, either through a Window

Manager like KDE or using the cryptsetup luksOpen command. Fortunately, many

laptops nowadays come equipped with TPM2 modules, which can be utilized to

automatically decrypt the device and subsequently mount it. In this post, we'll

explore the usage of systemd-cryptenroll for this purpose, along with udev

rules and a set of scripts to automate the mounting of the encrypted USB.

First, ensure that your device has a TPM2 module. You can run the following

command to check:

How-To use fwupd

As one cannot use

How-To use fwupd

As one cannot use  Update on 2023-03-23: thanks to Daniel Roschka for mentioning the

Update on 2023-03-23: thanks to Daniel Roschka for mentioning the  (no site yet)

(no site yet) in October 2021. they generally seem to keep up with

shipping. update (august 2022): they rolled out a second line of

laptops (12th gen), first batch shipped, second batch

in October 2021. they generally seem to keep up with

shipping. update (august 2022): they rolled out a second line of

laptops (12th gen), first batch shipped, second batch