Introduction

In 2020 I first set up a Matrix [1] server. Matrix is a full-featured instant messaging protocol which requires a less stringent definition of "instant"; messages being delayed for minutes aren't that uncommon in my experience. Matrix is a federated service where the servers all store copies of the room data, so when you connect your client to its home server it gets all the messages that were published while you were offline. It is widely regarded as being like IRC but without the need to be connected all the time. One of its noteworthy features is support for end-to-end encryption (so the server can't access cleartext messages from users) as a core feature.

Matrix was designed for bridging with other protocols, the most well known of which is IRC.

The most common Matrix server software is Synapse, which is written in Python and uses a PostgreSQL database as its backend [2]. My tests have shown that a lightly loaded Synapse server with fewer than a dozen users and only one or two active users will have noticeable performance problems if the PostgreSQL database is stored on SATA hard drives. This seems like the type of software that wouldn't have been developed before SSDs became commonly affordable.

The matrix-synapse package is in Debian/Unstable and the backports repositories for Bullseye and Buster. As Matrix is still being very actively developed you want to have a recent version of all related software, so Debian/Buster isn't a good platform for running it; Bullseye or Bookworm are the preferred platforms.

Configuring Synapse isn't really hard, but there are some potential problems. The first thing to do is to choose the DNS name; you can never change it without dropping the database (a fresh install of all software and no documented way of keeping user configuration), so you don't want to get it wrong. Generally you will want the Matrix addresses at the top level of the domain you choose. When setting up a Matrix server for my local LUG I chose the top level of their domain, luv.asn.au, as the DNS name for the server.

If you don't want to run a server then there are many open servers offering free accounts.

Server Configuration

Part of doing this configuration required creating the URL https://luv.asn.au/.well-known/matrix/client with the following contents so clients know where to connect. Note that you should not set up Jitsi sections without first discussing it with the people who run the Jitsi server in question.

{
  "m.homeserver": {
    "base_url": "https://luv.asn.au"
  },
  "jitsi": {
    "preferredDomain": "jitsi.perthchat.org"
  },
  "im.vector.riot.jitsi": {
    "preferredDomain": "jitsi.perthchat.org"
  }
}

Also the URL https://luv.asn.au/.well-known/matrix/server for other servers to know where to connect:

{
  "m.server": "luv.asn.au:8448"
}

If the base_url or the m.server points to a name that isn't configured then you need to add it to the web server configuration. See section 3.1 of the documentation about well known Matrix client fields [3].

The SE Linux specific part of the configuration is to run the following commands, as the Bookworm and Bullseye SE Linux policy has support for Synapse:
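As a rough illustration of the two well-known files described above, here is a minimal Python sketch that generates both bodies and reads the host and port back out of an m.server value. The function names are made up for this example, the optional Jitsi sections are left out, and the parser assumes a plain hostname (no IPv6 literal) and ignores SRV lookups, simply falling back to port 8448 when no port is given.

```python
import json


def well_known_client(base_url):
    """Body for /.well-known/matrix/client (homeserver entry only)."""
    return json.dumps({"m.homeserver": {"base_url": base_url}}, indent=2)


def well_known_server(host, port=8448):
    """Body for /.well-known/matrix/server."""
    return json.dumps({"m.server": f"{host}:{port}"}, indent=2)


def parse_m_server(body):
    """Return (host, port) from a /.well-known/matrix/server body.

    Assumption: the value is a hostname or IPv4 address, optionally
    followed by ":port". A missing port falls back to 8448.
    """
    value = json.loads(body)["m.server"]
    host, sep, port = value.partition(":")
    return host, int(port) if sep else 8448


if __name__ == "__main__":
    print(well_known_client("https://luv.asn.au"))
    print(well_known_server("luv.asn.au"))
```

Generating the files from one place makes it harder for the client and server entries to drift apart after a port or hostname change.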

setsebool -P httpd_setrlimit 1

setsebool -P httpd_can_network_relay 1

setsebool -P matrix_postgresql_connect 1

To configure Apache you have to enable proxy mode and SSL with the command "a2enmod proxy ssl proxy_http", add the line "Listen 8448" to /etc/apache2/ports.conf, and restart Apache.

The command "chmod 700 /etc/matrix-synapse" should probably be run to improve security; there's no reason for less restrictive permissions on that directory.

In the /etc/matrix-synapse/homeserver.yaml file the macaroon_secret_key is a random key for generating tokens.
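One way to produce such a key is Python's secrets module. This is only a sketch: the helper name new_secret is an assumption for this example, and any sufficiently long random string from a good source should do equally well.

```python
import secrets


def new_secret(nbytes=32):
    """Return a URL-safe random string built from nbytes of OS randomness."""
    return secrets.token_urlsafe(nbytes)


if __name__ == "__main__":
    # Quoted so the value is a plain YAML string whatever it starts with.
    print(f'macaroon_secret_key: "{new_secret()}"')
```

The same helper could be used for registration_shared_secret, as long as the two settings get different values.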

To use the matrix.org server as a trusted key server and not receive warnings put the following line in the config file:

suppress_key_server_warning: true

A line like the following is needed to configure the baseurl:

public_baseurl: https://luv.asn.au:8448/

To have Synapse directly accept port 8448 connections you have to change bind_addresses in the first section of listeners to the global listen IPv6 and IPv4 addresses.

The registration_shared_secret is a password for adding users. When you have set that you can write a shell script to add new users such as:

#!/bin/bash

# usage: matrix_new_user USER PASS

synapse_register_new_matrix_user -u "$1" -p "$2" -a -k THEPASSWORD
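For reference, the shared-secret registration described in the Synapse admin API documentation signs the request with an HMAC-SHA1 keyed by registration_shared_secret. The following is a sketch of that calculation under that assumption. The function name is hypothetical, and the nonce would come from the server (the documented endpoint is /_synapse/admin/v1/register), which is not contacted here. Check it against the documentation for your Synapse version before relying on it.

```python
import hashlib
import hmac


def registration_mac(shared_secret, nonce, user, password, admin=False):
    """HMAC-SHA1 over nonce, user, password and admin flag, NUL-separated."""
    mac = hmac.new(shared_secret.encode("utf8"), digestmod=hashlib.sha1)
    mac.update(nonce.encode("utf8"))
    mac.update(b"\x00")
    mac.update(user.encode("utf8"))
    mac.update(b"\x00")
    mac.update(password.encode("utf8"))
    mac.update(b"\x00")
    mac.update(b"admin" if admin else b"notadmin")
    return mac.hexdigest()


if __name__ == "__main__":
    # Placeholder values only; a real nonce is issued by the server.
    print(registration_mac("THEPASSWORD", "example-nonce", "alice", "secret"))
```

Because the admin flag is part of the signed data, a request for a normal user cannot be turned into a request for an admin user without knowing the shared secret.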

You need to set tls_certificate_path and tls_private_key_path to appropriate values, usually something like the following:

tls_certificate_path: "/etc/letsencrypt/live/www.luv.asn.au-0001/fullchain.pem"

tls_private_key_path: "/etc/letsencrypt/live/www.luv.asn.au-0001/privkey.pem"

For the database section you need something like the following which matches your PostgreSQL setup:

name: "psycopg2"
args:
  user: WWWWWW
  password: XXXXXXX
  database: YYYYYYY
  host: ZZZZZZ
  cp_min: 5
  cp_max: 10

You need to run psql commands like the following to set it up:

create role WWWWWW login password 'XXXXXXX';

create database YYYYYYY with owner WWWWWW ENCODING 'UTF8' LOCALE 'C' TEMPLATE 'template0';

For the Apache configuration you need something like the following for the port 8448 web server:

<VirtualHost *:8448>
  SSLEngine on
  ...
  ServerName luv.asn.au

  AllowEncodedSlashes NoDecode
  ProxyPass /_matrix http://127.0.0.1:8008/_matrix nocanon
  ProxyPassReverse /_matrix http://127.0.0.1:8008/_matrix
</VirtualHost>

Also you must add the ProxyPass section to the port 443 configuration (the server that is probably doing other more directly user visible things) for most (all?) end-user clients:

ProxyPass /_matrix http://127.0.0.1:8008/_matrix nocanon

This web page can be used to test listing rooms via federation without logging in [4]. If it gives the error "Can't find this server or its room list" then you must set allow_public_rooms_without_auth and allow_public_rooms_over_federation to true in /etc/matrix-synapse/homeserver.yaml.

The Matrix Federation Tester site [5] is good for testing new servers and for tests after network changes.

Clients

The Element (formerly known as Riot) client is the most common [6]. The following APT repository will allow you to install Element via apt install element-desktop on Debian/Buster.

deb https://packages.riot.im/debian/ default main

The Debian backports repository for Buster has the latest version of Quaternion; "apt install quaternion" should install that for you. Quaternion doesn't support end-to-end encryption (E2EE) and also doesn't seem to have good support for some other features like being invited to a room.

My current favourite client is Schildi Chat on Android [7], which has a notification message 24*7 to reduce the incidence of Android killing it. Eventually I want to go to PinePhone or Librem 5 for all my phone use, so I need to find a full-featured Linux client that works on a small screen.

Comparing to Jabber

I plan to keep using Jabber for alerts because it really does instant messaging; it can reliably get the message to me within a matter of seconds. Also there is a selection of command-line clients for Jabber to allow sending messages from servers.

When I first investigated Matrix there was no program suitable for sending messages from a script and the libraries for the protocol made it unreasonably difficult to write one. Now there is a Matrix client written in shell script [8] which might do that. But the delay in receiving messages is still a problem. Also the Matrix clients I've tried so far have UIs that are more suited to serious chat than to quickly reading a notification message.
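Sending a notification from a script can also be sketched with nothing but the Python standard library, assuming the standard client-server API endpoint for sending a room message and an access token obtained separately. The function names, the token and the room ID below are placeholders, and older servers may expose the path under r0 instead of v3.

```python
import json
import urllib.parse
import urllib.request
import uuid


def build_send_request(base_url, access_token, room_id, text, txn_id=None):
    """Build (but do not send) a PUT request that posts a text message."""
    txn_id = txn_id or uuid.uuid4().hex
    # Room IDs contain "!" and ":", so they must be percent-encoded.
    path = "/_matrix/client/v3/rooms/{}/send/m.room.message/{}".format(
        urllib.parse.quote(room_id, safe=""),
        urllib.parse.quote(txn_id, safe=""),
    )
    body = json.dumps({"msgtype": "m.text", "body": text}).encode("utf8")
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=body,
        method="PUT",
        headers={
            "Authorization": "Bearer " + access_token,
            "Content-Type": "application/json",
        },
    )


def send_message(base_url, access_token, room_id, text):
    """Send the message and return the event ID reported by the server."""
    request = build_send_request(base_url, access_token, room_id, text)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["event_id"]
```

A call such as send_message("https://luv.asn.au", "TOKEN", "!roomid:luv.asn.au", "disk full") would then be all an alerting script needs, although it does nothing about the delivery delay mentioned above.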

Bridges

Here is a list of bridges between Matrix and other protocols [9]. You can run bridges yourself for many different messaging protocols including Slack, Discord, and Messenger. There are also bridges run for public use for most IRC channels.

Here is a list of integrations with other services [10]. This is for interacting with things other than IM systems, such as RSS feeds and polls. It also has some frameworks for writing bots.

More Information

The Debian wiki page about Matrix is good [11].

The view.matrix.org site allows searching for public rooms [12].

I ended 2022 with a musical retrospective and very much enjoyed writing that blog post. As such, I have decided to do the same for 2023! From now on, this will probably be an annual thing :)

Albums

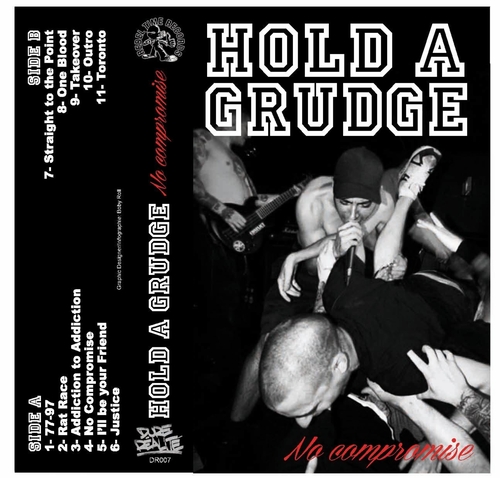

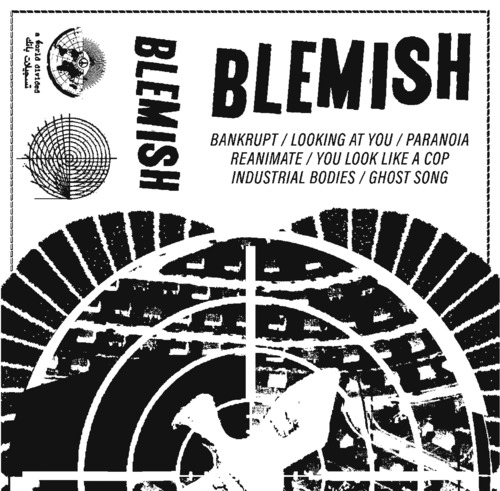

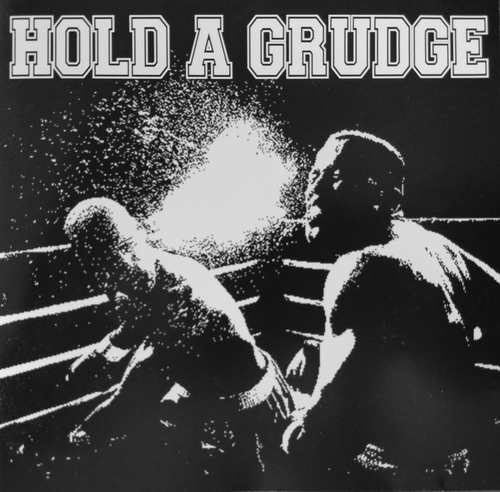

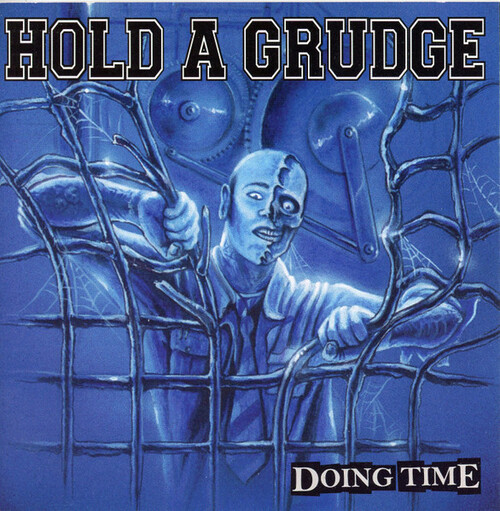

In 2023, I added 73 new albums to my collection, nearly 2 albums every three weeks! I listed them below in the order in which I acquired them.

I purchased most of these albums when I could and borrowed the rest at libraries. If you want to browse through, I added links to the album covers pointing either to websites where you can buy them or to Discogs when digital copies weren't available.

Once again this year, it seems that Punk (mostly Oi!) and Metal dominate my list, mostly fueled by Angry Metal Guy and the amazing Montréal Skinhead/Punk concert scene.

Concerts

A trend I started in 2022 was to go to as many concerts of artists I like as possible. I'm happy to report I went to around 80% more concerts in 2023 than in 2022! Looking back at my list, April was quite a busy month...

Here are the concerts I went to in 2023:

It was pointed out to me that I have not blogged about this, so better now than never:

Since 2021 I am together with four other hosts producing a regular podcast about Haskell, the

An exciting new release 0.4.21 of

The brief NEWS entry follows:

Check it out

On

Official logo of DebConf23

Suresh and me celebrating Onam in Kochi.

Four Points Hotel by Sheraton was the venue of DebConf23. Photo credits: Bilal

Photo of the pool. Photo credits: Andreas Tille.

View from the hotel window.

This place served as the lunch and dinner venue and later as a hacklab during DebConf. Photo credits: Bilal

Picture of the awesome swag bag given at DebConf23. Photo credits: Ravi Dwivedi

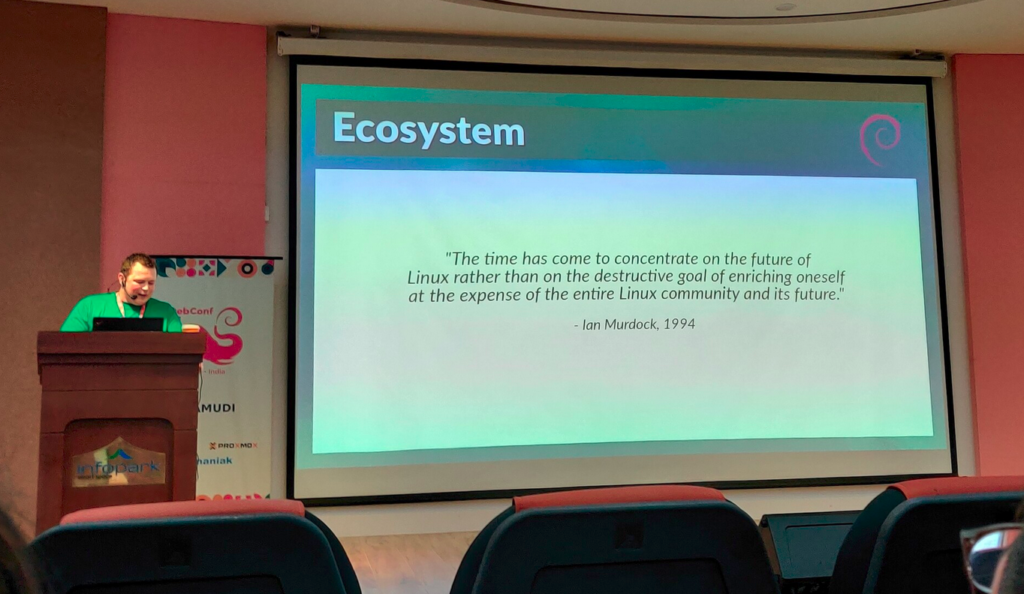

My presentation photo. Photo credits: Valessio

Selfie with Anisa and Kristi. Photo credits: Anisa.

Me helping with the Cheese and Wine Party.

This picture was taken when there were few people in my room for the party.

Sadhya Thali: A vegetarian meal served on banana leaf. Payasam and rasam were especially yummy! Photo credits: Ravi Dwivedi.

Sadhya thali being served at DebConf23. Photo credits: Bilal

Group photo of our daytrip. Photo credits: Radhika Jhalani

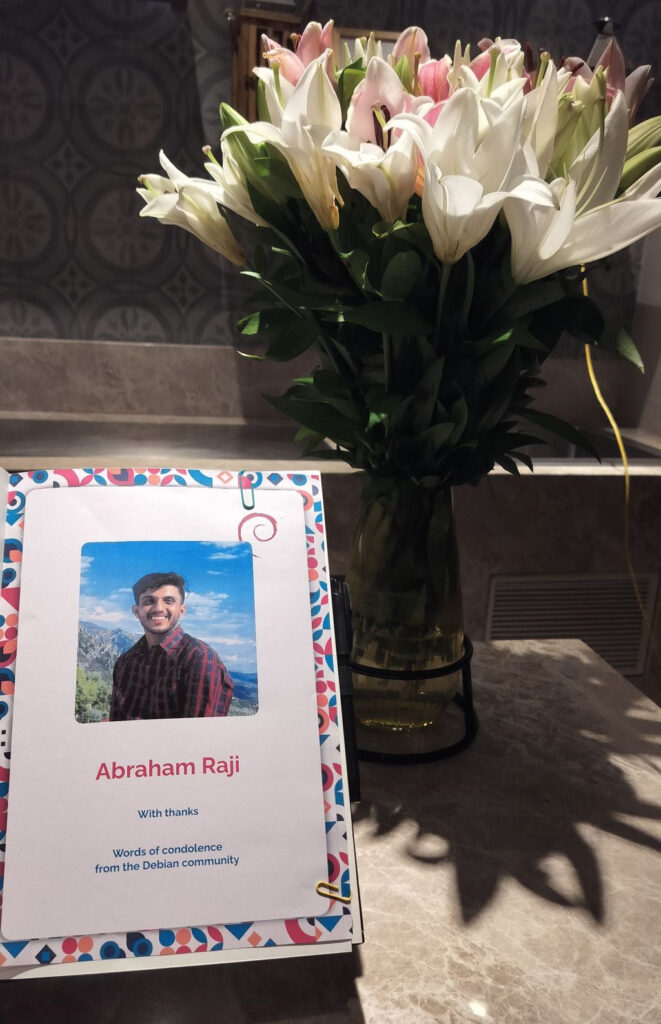

A selfie in memory of Abraham.

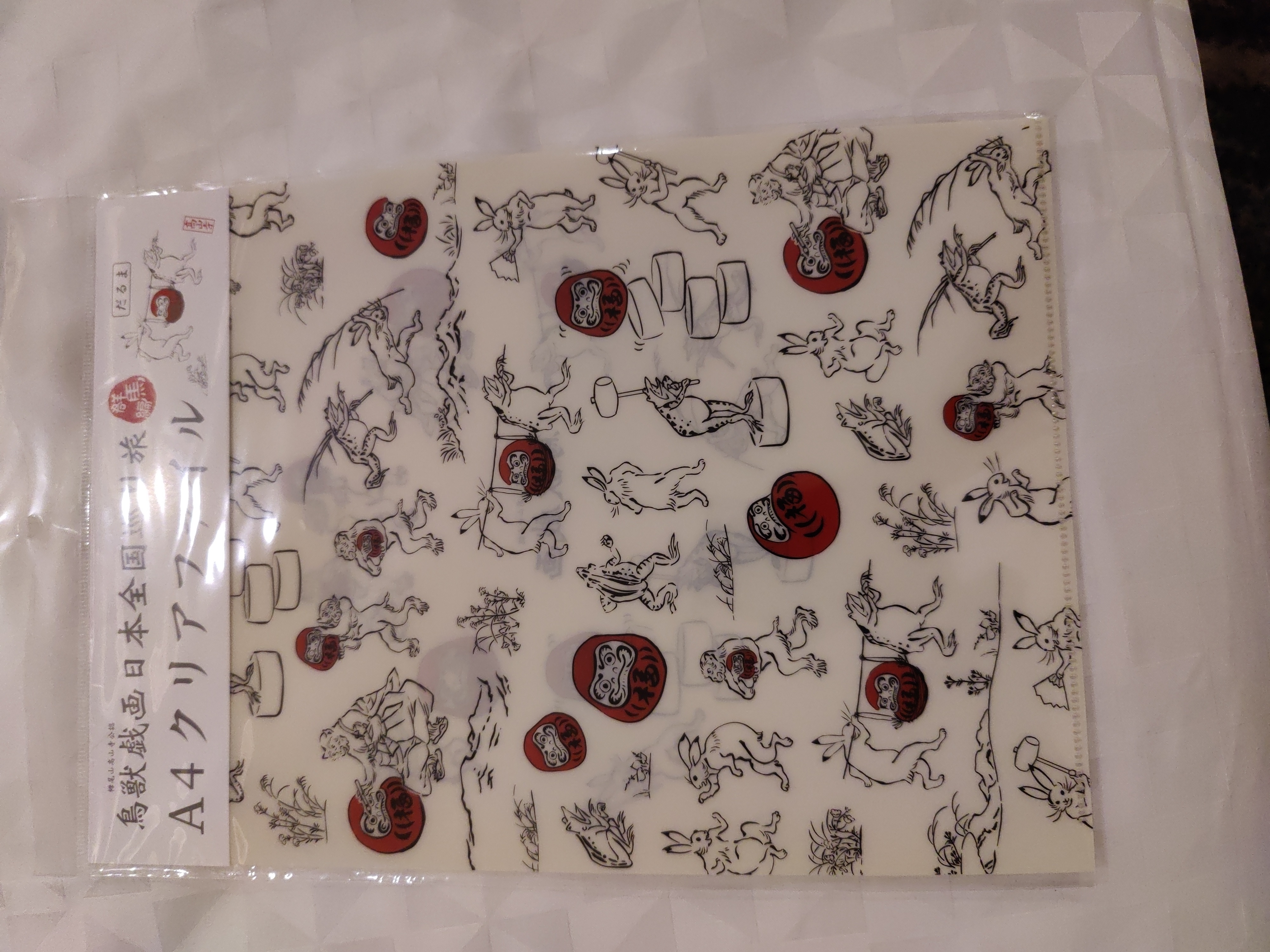

Thanks to Niibe Yutaka (the person towards your right hand) from Japan (FSIJ), who gave me a wonderful Japanese gift during DebConf23: a folder to keep pages, with ancient Japanese manga characters printed on it. I realized I immediately needed that :)

This is the Japanese gift I received.

Bits from the DPL. Photo credits: Bilal

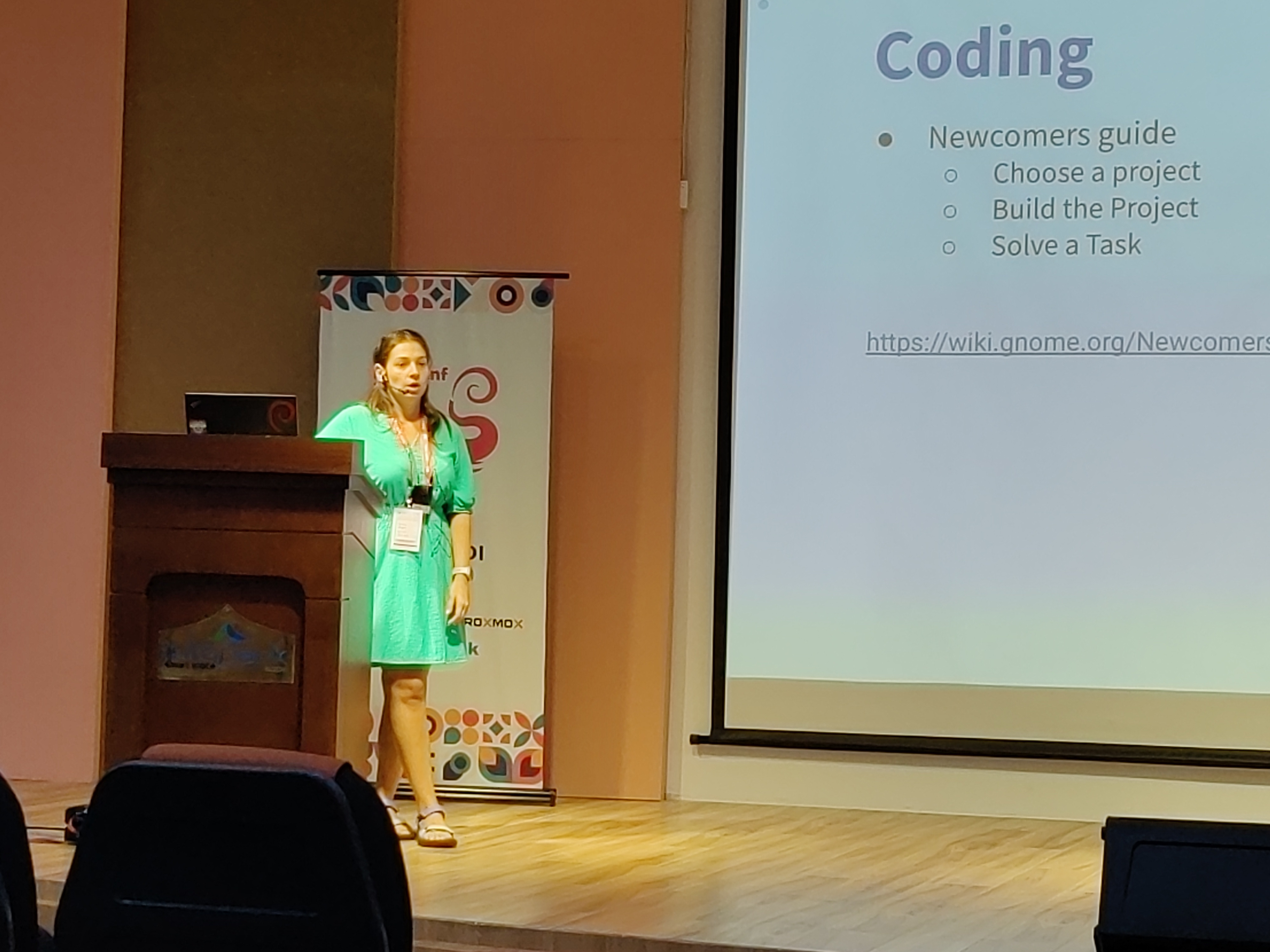

Kristi on GNOME community. Photo credits: Ravi Dwivedi.

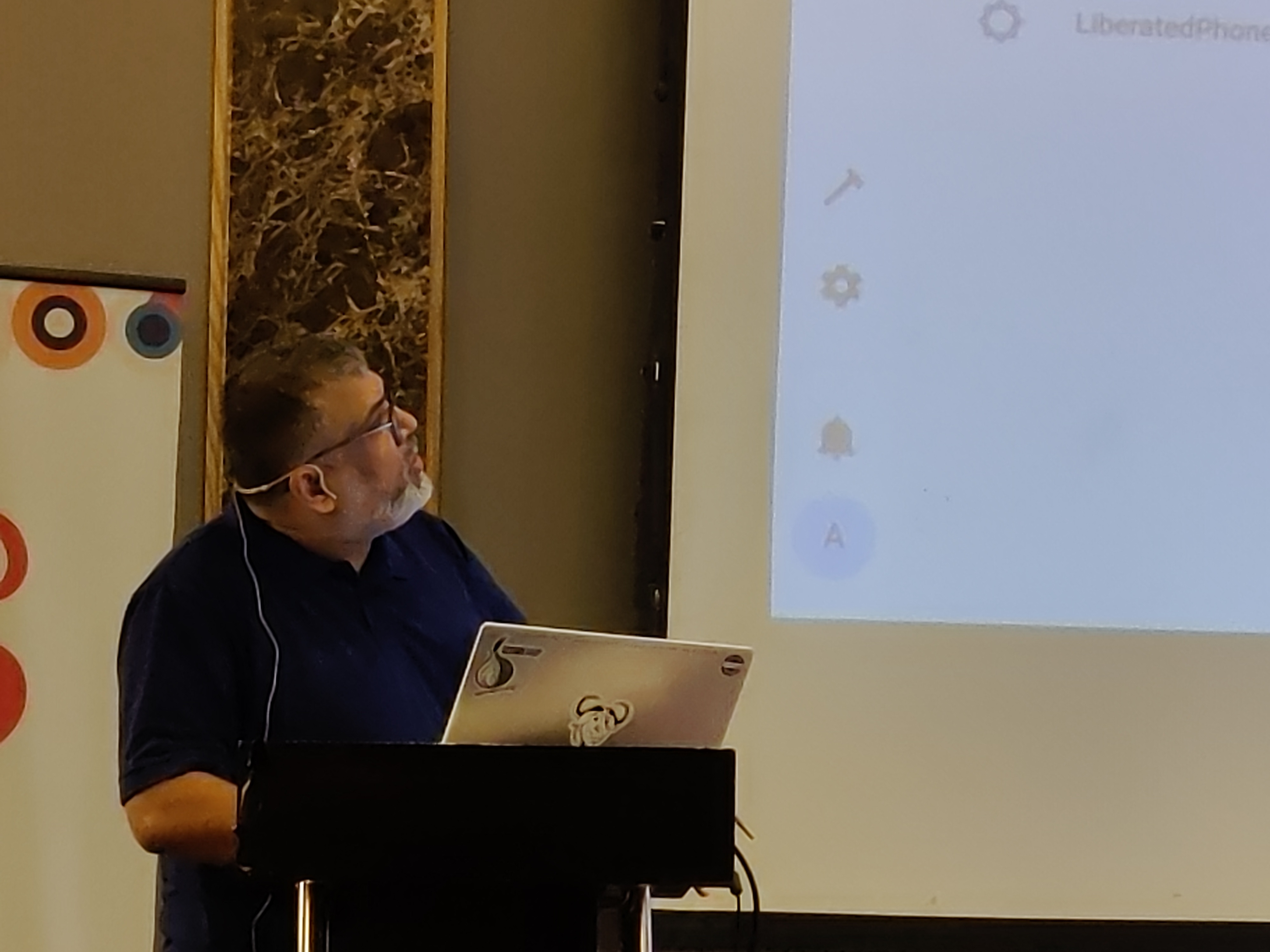

Abhas' talk on home automation. Photo credits: Ravi Dwivedi.

I was roaming around with a QR code on my T-shirt for downloading Prav.

Me in mundu. Picture credits: Abhijith PA

From left: Nilesh, Saswata, me, Sahil. Photo credits: Sahil.

Ruchika (taking the selfie) and from left to right: Yash,

Joost and me going to Delhi. Photo credits: Ravi.

I very, very nearly didn't make it to DebConf this year. I had a bad cold/flu for a few days before I left, and after a negative covid-19 test just minutes before my flight, I decided to take the plunge and travel.

This is just everything in chronological order, more or less; it's the only way I could write it.

If you got one of these Cheese & Wine bags from DebConf, that's from the South African local group!

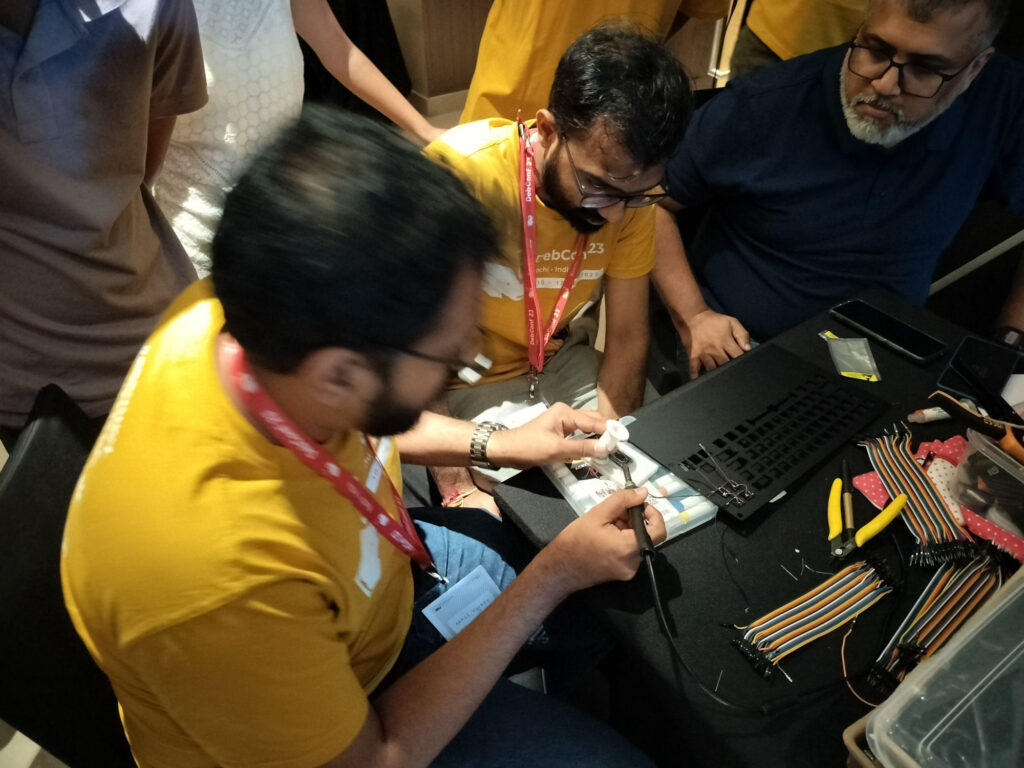

Some hopefully harmless soldering.

Over the last month I've performed some market research to better understand the potential for

I'm trying to replace my old OpenPGP key with a new one. The old key wasn't compromised or lost or anything bad. It is still valid, but I plan to get rid of it soon. It was created in 2013.

The new key id fingerprint is:

And before anybody comments, I know that Android is no longer interested in supporting FOSS (their loss, not ours), but that is entirely a blog post/article in itself, so let's leave that aside for now.
