Reproducible Builds: Reproducible Builds in February 2024

Welcome to the February 2024 report from the Reproducible Builds project! In our reports, we try to outline what we have been up to over the past month as well as mentioning some of the important things happening in software supply-chain security.

Reproducible Builds at FOSDEM 2024

Core Reproducible Builds developer Holger Levsen presented at the main track at FOSDEM on Saturday 3rd February this year in Brussels, Belgium. However, that wasn't the only talk related to Reproducible Builds.

Please see our comprehensive FOSDEM 2024 news post for the full details and links.

Maintainer Perspectives on Open Source Software Security

Bernhard M. Wiedemann spotted that a recent report entitled "Maintainer Perspectives on Open Source Software Security", written by Stephen Hendrick and Ashwin Ramaswami of the Linux Foundation, sports an infographic which mentions that "56% of [polled] projects support reproducible builds".

Three new reproducibility-related academic papers

A total of three separate scholarly papers related to Reproducible Builds have appeared this month:

Signing in Four Public Software Package Registries: Quantity, Quality, and Influencing Factors by Taylor R. Schorlemmer, Kelechi G. Kalu, Luke Chigges, Kyung Myung Ko, Eman Abdul-Muhd, Abu Ishgair, Saurabh Bagchi, Santiago Torres-Arias and James C. Davis (Purdue University, Indiana, USA) is concerned with the problem that:

Package maintainers can guarantee package authorship through software signing [but] it is unclear how common this practice is, and whether the resulting signatures are created properly. Prior work has provided raw data on signing practices, but measured single platforms, did not consider time, and did not provide insight on factors that may influence signing. We lack a comprehensive, multi-platform understanding of signing adoption and relevant factors. This study addresses this gap. (arXiv, full PDF)

Reproducibility of Build Environments through Space and Time by Julien Malka, Stefano Zacchiroli and Théo Zimmermann (Institut Polytechnique de Paris, France) addresses:

[The] principle of reusability […] makes it harder to reproduce projects' build environments, even though reproducibility of build environments is essential for collaboration, maintenance and component lifetime. In this work, we argue that functional package managers provide the tooling to make build environments reproducible in space and time, and we produce a preliminary evaluation to justify this claim.

The abstract continues with the claim that "Using historical data, we show that we are able to reproduce build environments of about 7 million Nix packages, and to rebuild 99.94% of the 14 thousand packages from a 6-year-old Nixpkgs revision." (arXiv, full PDF)

Options Matter: Documenting and Fixing Non-Reproducible Builds in Highly-Configurable Systems by Georges Aaron Randrianaina, Djamel Eddine Khelladi, Olivier Zendra and Mathieu Acher (Inria centre at Rennes University, France):

This paper thus proposes an approach to automatically identify configuration options causing non-reproducibility of builds. It begins by building a set of builds in order to detect non-reproducible ones through binary comparison. We then develop automated techniques that combine statistical learning with symbolic reasoning to analyze over 20,000 configuration options. Our methods are designed to both detect options causing non-reproducibility, and remedy non-reproducible configurations, two tasks that are challenging and costly to perform manually. (HAL Portal, full PDF)

Mailing list highlights

From our mailing list this month:

- User cen posted a query asking "How to verify a package by rebuilding it locally on Debian", which received a followup from Vagrant Cascadian.

- James Addison asked "Two questions about build-path reproducibility in Debian" regarding the differences in the testing performed by Debian's GitLab continuous integration (CI) pipeline and the Debian-specific testing performed by the Reproducible Builds project itself, and followed this with a separate but related question regarding misconfigured reprotest configurations.

Distribution work

In Debian this month, 5 reviews of Debian packages were added, 22 were updated and 8 were removed, adding to Debian's knowledge about identified issues. A number of issue types were updated as well. In addition, Roland Clobus posted his 23rd update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that "all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours)" and that there will likely be further work at a MiniDebCamp in Hamburg. Furthermore, Roland also responded in-depth to a query about a previous report.

Fedora developer Zbigniew Jędrzejewski-Szmek announced a work-in-progress script called fedora-repro-build that attempts to reproduce an existing package within a koji build environment. Although the project's README file lists a number of fields that "will always or almost always vary" and there is a non-zero list of other known issues, this is an excellent first step towards full Fedora reproducibility.

Jelle van der Waa introduced a new linter rule for Arch Linux packages in order to detect cache files left over by the Sphinx documentation generator, which are unreproducible by nature and should not be packaged. At the time of writing, 7 packages in the Arch repository are affected by this.
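To illustrate the kind of check such a linter rule might perform, here is a minimal Python sketch that walks an unpacked package tree and reports likely Sphinx cache leftovers. The file and directory names matched here (`.doctrees`, `*.doctree`, `environment.pickle`) are assumptions based on what Sphinx commonly writes into its build cache; the actual rule's matching logic is not described in this report, and `find_sphinx_cache` is a hypothetical helper name.

```python
import os

# Assumed names of Sphinx cache artefacts; not taken from the actual linter rule.
SPHINX_CACHE_DIRS = {".doctrees"}
SPHINX_CACHE_FILES = {"environment.pickle"}


def find_sphinx_cache(pkg_root):
    """Return sorted paths (relative to pkg_root) of likely Sphinx cache leftovers."""
    hits = []
    for dirpath, dirnames, filenames in os.walk(pkg_root):
        # Flag cache directories themselves.
        for name in dirnames:
            if name in SPHINX_CACHE_DIRS:
                hits.append(os.path.relpath(os.path.join(dirpath, name), pkg_root))
        # Flag pickled environment and per-document cache files.
        for name in filenames:
            if name in SPHINX_CACHE_FILES or name.endswith(".doctree"):
                hits.append(os.path.relpath(os.path.join(dirpath, name), pkg_root))
    return sorted(hits)
```

Calling `find_sphinx_cache("pkg/")` on an extracted package directory returns an empty list when nothing suspicious was shipped.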

Elsewhere, Bernhard M. Wiedemann posted another monthly update for his work in openSUSE.

diffoscope

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb uploaded versions 256, 257 and 258 to Debian and made the following additional changes:

- Use a deterministic name instead of trusting gpg's use-embedded-filenames. Many thanks to Daniel Kahn Gillmor (dkg@debian.org) for reporting this issue and providing feedback.

- Don't error-out with a traceback if we encounter struct.unpack-related errors when parsing Python .pyc files. (#1064973)

- Don't try and compare rdb_expected_diff on non-GNU systems as %p formatting can vary, especially with respect to macOS.

- Fix compatibility with pytest 8.0.

- Temporarily fix support for Python 3.11.8.

- Use the 7zip package (over p7zip-full) after a Debian package transition. (#1063559)

- Bump the minimum Black source code reformatter requirement to 24.1.1+.

- Expand an older changelog entry with a CVE reference.

- Make test_zip black clean.

In addition, James Addison contributed a patch to parse the headers from diff(1) correctly. Thanks! And lastly, Vagrant Cascadian pushed updates in GNU Guix for diffoscope to versions 255, 256 and 258, and updated trydiffoscope to 67.0.6.
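As a rough illustration of the struct.unpack-related change listed above, the following Python sketch shows one way to degrade gracefully when a .pyc file is truncated. This is not diffoscope's actual code: `parse_pyc_header` is a hypothetical helper, and it only reads the first two header fields that Python 3.7+ writes (a 4-byte magic number followed by a 32-bit little-endian flags field).

```python
import struct


def parse_pyc_header(data):
    """Return (magic, flags) from the start of a .pyc file, or None if truncated."""
    try:
        # "<4sI": 4 raw bytes of magic, then an unsigned 32-bit little-endian integer.
        magic, flags = struct.unpack_from("<4sI", data)
    except struct.error:
        # Too few bytes to hold the header: report "unparseable" instead of
        # letting the exception surface as a traceback.
        return None
    return magic, flags
```

A caller can then fall back to a plain binary comparison whenever the function returns None.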

reprotest

reprotest is our tool for building the same source code twice in different environments and then checking the binaries produced by each build for any differences. This month, Vagrant Cascadian made a number of changes, including:

- Create a (working) proof of concept for enabling a specific number of CPUs.

- Consistently use 398 days for time variation rather than choosing randomly and update README.rst to match.

- Support a new --vary=build_path.path option.

Website updates

A number of improvements were made to our website this month, including:

- Chris Lamb:

    - Improve the relative sizing of headers.
    - Re-order and punch up the introduction and documentation on the SOURCE_DATE_EPOCH page.
    - Update SOURCE_DATE_EPOCH documentation re. datetime.datetime.fromtimestamp. Thanks, James Addison.
    - Add a post about Reproducible Builds at FOSDEM 2024.

- Holger Levsen:

    - Update the GNU Guix page to include their reproducibility QA page.
    - Add Sune Vuorela and Jan-Benedict Glaw to our contributors list.

- Mattia Rizzolo:

    - Add Sovereign Tech Fund's logo to our sponsors.
    - Update our sponsors list.
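For readers unfamiliar with the SOURCE_DATE_EPOCH item above, the sketch below shows a common way of consuming the variable from Python with datetime.datetime.fromtimestamp. The report does not detail what the documentation change was, so treat this as an illustrative pattern rather than the page's exact wording; `build_date` is a hypothetical helper name. Passing an explicit UTC timezone matters because, without it, fromtimestamp returns local time and the result would depend on the build machine's timezone.

```python
import os
import time
from datetime import datetime, timezone


def build_date(environ=os.environ):
    """Return the build timestamp as an aware UTC datetime.

    Uses SOURCE_DATE_EPOCH when set, otherwise falls back to the current time.
    """
    epoch = int(environ.get("SOURCE_DATE_EPOCH", time.time()))
    return datetime.fromtimestamp(epoch, tz=timezone.utc)
```

With `SOURCE_DATE_EPOCH=0` in the environment, `build_date().isoformat()` yields `1970-01-01T00:00:00+00:00` on any machine.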

Reproducibility testing framework

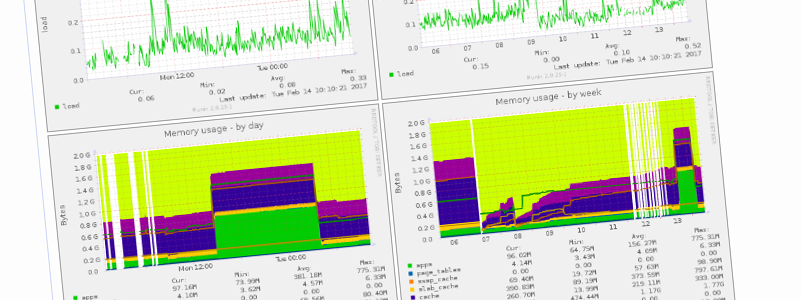

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In February, a number of changes were made by Holger Levsen:

- Debian-related changes:

- Other changes:

    - Grant Jan-Benedict Glaw shell access to the Jenkins node.
    - Enable debugging for NetBSD reproducibility testing.
    - Use /usr/bin/du --apparent-size in the Jenkins shell monitor.
    - Revert "reproducible nodes: mark osuosl2 as down".
    - Thanks again to Codethink, for they have doubled the RAM on our arm64 nodes.
    - Only set /proc/$pid/oom_score_adj to -1000 if it has not already been done.
    - Add the opemwrt-target-tegra and jtx task to the list of zombie jobs.

Vagrant Cascadian also made the following changes:

- Overhaul the handling of OpenSSH configuration files after updating from Debian bookworm.

- Add two new armhf architecture build nodes, virt32z and virt64z, and insert them into the Munin monitoring.

In addition, Alexander Couzens updated the OpenWrt configuration in order to replace the tegra target with mpc85xx, Jan-Benedict Glaw updated the NetBSD build script to use a separate $TMPDIR to mitigate out-of-space issues on a tmpfs-backed /tmp, and Zheng Junjie added a link to the GNU Guix tests.

Lastly, node maintenance was performed by Holger Levsen and Vagrant Cascadian.

Upstream patches

The Reproducible Builds project detects, dissects and attempts to fix as many currently-unreproducible packages as possible. We endeavour to send all of our patches upstream where appropriate. This month, we wrote a large number of such patches, including:

- Philip Rinn:

    - gimagereader (date)

- Bernhard M. Wiedemann:

    - grass (date-related issue)
    - grub2 (filesystem ordering issue)
    - latex2html (drop a non-deterministic log)
    - mhvtl (tar)
    - obs (build-tool issue)
    - ollama (GZip embedding the modification time)
    - presenterm (filesystem-ordering issue)
    - qt6-quick3d (parallelism)

- Chris Lamb:

- James Addison:

    - #1064519 filed against flask-limiter.
    - python-parsl-doc (disable dynamic argument evaluation by Sphinx autodoc extension)
    - python3-pytest-repeat (remove entry_points.txt creation that varied by shell)
    - python3-selinux (remove packaged direct_url.json file that embeds build path)
    - python3-sepolicy (remove packaged direct_url.json file that embeds build path)
    - #1064575 filed against pyswarms.
    - #1064638 filed against python-x2go.
    - snapd (fix timestamp header in packaged manual-page)
    - zzzeeksphinx (existing RB patch forwarded and merged (with modifications))

- Johannes Schauer Marin Rodrigues:
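Several of the patches above address timestamps embedded in build output, for example GZip embedding the modification time. The following Python sketch demonstrates that general class of fix; it is not the patch sent to any of the listed projects, and `gzip_deterministic` is a hypothetical helper name. The gzip header contains a 4-byte MTIME field, which Python's gzip.GzipFile fills with the current time unless an explicit mtime is given.

```python
import gzip
import io


def gzip_deterministic(payload: bytes) -> bytes:
    """Compress payload so that repeated runs yield byte-identical output."""
    buf = io.BytesIO()
    # mtime=0 pins the header timestamp instead of recording the current time.
    # No filename is passed, so no file name is stored in the header either.
    with gzip.GzipFile(fileobj=buf, mode="wb", mtime=0) as gz:
        gz.write(payload)
    return buf.getvalue()
```

Two calls with the same input, made at different times, then produce the same bytes, which is what a binary comparison of two builds needs.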

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. Alternatively, you can get in touch with us via:

- IRC: #reproducible-builds on irc.oftc.net.

- Twitter: @ReproBuilds

- Mastodon: @reproducible_builds@fosstodon.org

- Mailing list: rb-general@lists.reproducible-builds.org

Bernhard M. Wiedemann spotted that a recent report entitled Maintainer Perspectives on Open Source Software Security written by Stephen Hendrick and Ashwin Ramaswami of the Linux Foundation sports an infographic which mentions that 56% of [polled] projects support reproducible builds .

Bernhard M. Wiedemann spotted that a recent report entitled Maintainer Perspectives on Open Source Software Security written by Stephen Hendrick and Ashwin Ramaswami of the Linux Foundation sports an infographic which mentions that 56% of [polled] projects support reproducible builds .

Three new reproducibility-related academic papers

A total of three separate scholarly papers related to Reproducible Builds have appeared this month:

Signing in Four Public Software Package Registries: Quantity, Quality, and Influencing Factors by Taylor R. Schorlemmer, Kelechi G. Kalu, Luke Chigges, Kyung Myung Ko, Eman Abdul-Muhd, Abu Ishgair, Saurabh Bagchi, Santiago Torres-Arias and James C. Davis (Purdue University, Indiana, USA) is concerned with the problem that:

Signing in Four Public Software Package Registries: Quantity, Quality, and Influencing Factors by Taylor R. Schorlemmer, Kelechi G. Kalu, Luke Chigges, Kyung Myung Ko, Eman Abdul-Muhd, Abu Ishgair, Saurabh Bagchi, Santiago Torres-Arias and James C. Davis (Purdue University, Indiana, USA) is concerned with the problem that:

Package maintainers can guarantee package authorship through software signing [but] it is unclear how common this practice is, and whether the resulting signatures are created properly. Prior work has provided raw data on signing practices, but measured single platforms, did not consider time, and did not provide insight on factors that may influence signing. We lack a comprehensive, multi-platform understanding of signing adoption and relevant factors. This study addresses this gap. (arXiv, full PDF)

Reproducibility of Build Environments through Space and Time by Julien Malka, Stefano Zacchiroli and Th o Zimmermann (Institut Polytechnique de Paris, France) addresses:

Reproducibility of Build Environments through Space and Time by Julien Malka, Stefano Zacchiroli and Th o Zimmermann (Institut Polytechnique de Paris, France) addresses:

[The] principle of reusability [ ] makes it harder to reproduce projects build environments, even though reproducibility of build environments is essential for collaboration, maintenance and component lifetime. In this work, we argue that functional package managers provide the tooling to make build environments reproducible in space and time, and we produce a preliminary evaluation to justify this claim.

The abstract continues with the claim that Using historical data, we show that we are able to reproduce build environments of about 7 million Nix packages, and to rebuild 99.94% of the 14 thousand packages from a 6-year-old Nixpkgs revision. (arXiv, full PDF)

Options Matter: Documenting and Fixing Non-Reproducible Builds in Highly-Configurable Systems by Georges Aaron Randrianaina, Djamel Eddine Khelladi, Olivier Zendra and Mathieu Acher (Inria centre at Rennes University, France):

Options Matter: Documenting and Fixing Non-Reproducible Builds in Highly-Configurable Systems by Georges Aaron Randrianaina, Djamel Eddine Khelladi, Olivier Zendra and Mathieu Acher (Inria centre at Rennes University, France):

This paper thus proposes an approach to automatically identify configuration options causing non-reproducibility of builds. It begins by building a set of builds in order to detect non-reproducible ones through binary comparison. We then develop automated techniques that combine statistical learning with symbolic reasoning to analyze over 20,000 configuration options. Our methods are designed to both detect options causing non-reproducibility, and remedy non-reproducible configurations, two tasks that are challenging and costly to perform manually. (HAL Portal, full PDF)

Mailing list highlights

From our mailing list this month:

-

User cen posted a query asking How to verify a package by rebuilding it locally on Debian which received a followup from Vagrant Cascadian.

-

James Addison asked Two questions about build-path reproducibility in Debian regarding the differences in the testing performed by Debian s GitLab continuous integration (CI) pipeline and the Debian-specific testing performed by the Reproducible Builds project itself, and followed this with a separate but related question regarding misconfigured reprotest configurations.

Distribution work

In Debian this month, 5 reviews of Debian packages were added, 22 were updated and 8 were removed this month adding to Debian s knowledge about identified issues. A number of issue types were updated as well. [ ][ ][ ][ ] In addition, Roland Clobus posted his 23rd update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours) and that there will likely be further work at a MiniDebCamp in Hamburg. Furthermore, Roland also responded in-depth to a query about a previous report

In Debian this month, 5 reviews of Debian packages were added, 22 were updated and 8 were removed this month adding to Debian s knowledge about identified issues. A number of issue types were updated as well. [ ][ ][ ][ ] In addition, Roland Clobus posted his 23rd update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours) and that there will likely be further work at a MiniDebCamp in Hamburg. Furthermore, Roland also responded in-depth to a query about a previous report

Fedora developer Zbigniew J drzejewski-Szmek announced a work-in-progress script called

Fedora developer Zbigniew J drzejewski-Szmek announced a work-in-progress script called fedora-repro-build that attempts to reproduce an existing package within a koji build environment. Although the projects README file lists a number of fields will always or almost always vary and there is a non-zero list of other known issues, this is an excellent first step towards full Fedora reproducibility.

Jelle van der Waa introduced a new linter rule for Arch Linux packages in order to detect cache files leftover by the Sphinx documentation generator which are unreproducible by nature and should not be packaged. At the time of writing, 7 packages in the Arch repository are affected by this.

Jelle van der Waa introduced a new linter rule for Arch Linux packages in order to detect cache files leftover by the Sphinx documentation generator which are unreproducible by nature and should not be packaged. At the time of writing, 7 packages in the Arch repository are affected by this.

Elsewhere, Bernhard M. Wiedemann posted another monthly update for his work elsewhere in openSUSE.

Elsewhere, Bernhard M. Wiedemann posted another monthly update for his work elsewhere in openSUSE.

diffoscope

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb made a number of changes such as uploading versions

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb made a number of changes such as uploading versions 256, 257 and 258 to Debian and made the following additional changes:

- Use a deterministic name instead of trusting

gpg s use-embedded-filenames. Many thanks to Daniel Kahn Gillmor dkg@debian.org for reporting this issue and providing feedback. [ ][ ]

- Don t error-out with a traceback if we encounter

struct.unpack-related errors when parsing Python .pyc files. (#1064973). [ ]

- Don t try and compare

rdb_expected_diff on non-GNU systems as %p formatting can vary, especially with respect to MacOS. [ ]

- Fix compatibility with

pytest 8.0. [ ]

- Temporarily fix support for Python 3.11.8. [ ]

- Use the

7zip package (over p7zip-full) after a Debian package transition. (#1063559). [ ]

- Bump the minimum Black source code reformatter requirement to 24.1.1+. [ ]

- Expand an older changelog entry with a CVE reference. [ ]

- Make

test_zip black clean. [ ]

In addition, James Addison contributed a patch to parse the headers from the diff(1) correctly [ ][ ] thanks! And lastly, Vagrant Cascadian pushed updates in GNU Guix for diffoscope to version 255, 256, and 258, and updated trydiffoscope to 67.0.6.

reprotest

reprotest is our tool for building the same source code twice in different environments and then checking the binaries produced by each build for any differences. This month, Vagrant Cascadian made a number of changes, including:

- Create a (working) proof of concept for enabling a specific number of CPUs. [ ][ ]

- Consistently use 398 days for time variation rather than choosing randomly and update

README.rst to match. [ ][ ]

- Support a new

--vary=build_path.path option. [ ][ ][ ][ ]

Website updates

There were made a number of improvements to our website this month, including:

There were made a number of improvements to our website this month, including:

-

Chris Lamb:

- Improve the relative sizing of headers. [ ]

- Re-order and punch up the introduction and documentation on the

SOURCE_DATE_EPOCH page. [ ]

- Update

SOURCE_DATE_EPOCH documentation re. datetime.datetime.fromtimestamp. Thanks, James Addison. [ ]

- Add a post about Reproducible Builds at FOSDEM 2024. [ ]

-

Holger Levsen:

- Update the GNU Guix page to include their reproducibility QA page. [ ]

- Add Sune Vuorela and Jan-Benedict Glaw to our contributors list. [ ][ ]

-

Mattia Rizzolo:

- Add Sovereign Tech Fund s logo to our sponsors. [ ]

- Update our sponsors list. [ ]

Reproducibility testing framework

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In February, a number of changes were made by Holger Levsen:

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In February, a number of changes were made by Holger Levsen:

-

Debian-related changes:

-

Other changes:

- Grant Jan-Benedict Glaw shell access to the Jenkins node. [ ]

- Enable debugging for NetBSD reproducibility testing. [ ]

- Use

/usr/bin/du --apparent-size in the Jenkins shell monitor. [ ]

- Revert reproducible nodes: mark osuosl2 as down . [ ]

- Thanks again to Codethink, for they have doubled the RAM on our

arm64 nodes. [ ]

- Only set

/proc/$pid/oom_score_adj to -1000 if it has not already been done. [ ]

- Add the

opemwrt-target-tegra and jtx task to the list of zombie jobs. [ ][ ]

Vagrant Cascadian also made the following changes:

- Overhaul the handling of OpenSSH configuration files after updating from Debian bookworm. [ ][ ][ ]

- Add two new

armhf architecture build nodes, virt32z and virt64z, and insert them into the Munin monitoring. [ ][ ] [ ][ ]

In addition, Alexander Couzens updated the OpenWrt configuration in order to replace the tegra target with mpc85xx [ ], Jan-Benedict Glaw updated the NetBSD build script to use a separate $TMPDIR to mitigate out of space issues on a tmpfs-backed /tmp [ ] and Zheng Junjie added a link to the GNU Guix tests [ ].

Lastly, node maintenance was performed by Holger Levsen [ ][ ][ ][ ][ ][ ] and Vagrant Cascadian [ ][ ][ ][ ].

Upstream patches

The Reproducible Builds project detects, dissects and attempts to fix as many currently-unreproducible packages as possible. We endeavour to send all of our patches upstream where appropriate. This month, we wrote a large number of such patches, including:

-

Philip Rinn:

gimagereader (date)

-

Bernhard M. Wiedemann:

grass (date-related issue)grub2 (filesystem ordering issue)latex2html (drop a non-deterministic log)mhvtl (tar)obs (build-tool issue)ollama (GZip embedding the modification time)presenterm (filesystem-ordering issue)qt6-quick3d (parallelism)

-

Chris Lamb:

-

James Addison:

- #1064519 filed against

flask-limiter.

python-parsl-doc (disable dynamic argument evaluation by Sphinx autodoc extension)python3-pytest-repeat (remove entry_points.txt creation that varied by shell)python3-selinux (remove packaged direct_url.json file that embeds build path)python3-sepolicy (remove packaged direct_url.json file that embeds build path)- #1064575 filed against

pyswarms.

- #1064638 filed against

python-x2go.

snapd (fix timestamp header in packaged manual-page)zzzeeksphinx (existing RB patch forwarded and merged (with modifications))

-

Johannes Schauer Marin Rodrigues:

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. However, you can get in touch with us via:

-

IRC:

#reproducible-builds on irc.oftc.net.

-

Twitter: @ReproBuilds

-

Mastodon: @reproducible_builds@fosstodon.org

-

Mailing list:

rb-general@lists.reproducible-builds.org

- User cen posted a query asking How to verify a package by rebuilding it locally on Debian which received a followup from Vagrant Cascadian.

- James Addison asked Two questions about build-path reproducibility in Debian regarding the differences in the testing performed by Debian s GitLab continuous integration (CI) pipeline and the Debian-specific testing performed by the Reproducible Builds project itself, and followed this with a separate but related question regarding misconfigured reprotest configurations.

Distribution work

In Debian this month, 5 reviews of Debian packages were added, 22 were updated and 8 were removed this month adding to Debian s knowledge about identified issues. A number of issue types were updated as well. [ ][ ][ ][ ] In addition, Roland Clobus posted his 23rd update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours) and that there will likely be further work at a MiniDebCamp in Hamburg. Furthermore, Roland also responded in-depth to a query about a previous report

In Debian this month, 5 reviews of Debian packages were added, 22 were updated and 8 were removed this month adding to Debian s knowledge about identified issues. A number of issue types were updated as well. [ ][ ][ ][ ] In addition, Roland Clobus posted his 23rd update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours) and that there will likely be further work at a MiniDebCamp in Hamburg. Furthermore, Roland also responded in-depth to a query about a previous report

Fedora developer Zbigniew J drzejewski-Szmek announced a work-in-progress script called

Fedora developer Zbigniew J drzejewski-Szmek announced a work-in-progress script called fedora-repro-build that attempts to reproduce an existing package within a koji build environment. Although the projects README file lists a number of fields will always or almost always vary and there is a non-zero list of other known issues, this is an excellent first step towards full Fedora reproducibility.

Jelle van der Waa introduced a new linter rule for Arch Linux packages in order to detect cache files leftover by the Sphinx documentation generator which are unreproducible by nature and should not be packaged. At the time of writing, 7 packages in the Arch repository are affected by this.

Jelle van der Waa introduced a new linter rule for Arch Linux packages in order to detect cache files leftover by the Sphinx documentation generator which are unreproducible by nature and should not be packaged. At the time of writing, 7 packages in the Arch repository are affected by this.

Elsewhere, Bernhard M. Wiedemann posted another monthly update for his work elsewhere in openSUSE.

Elsewhere, Bernhard M. Wiedemann posted another monthly update for his work elsewhere in openSUSE.

diffoscope

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb made a number of changes such as uploading versions

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb made a number of changes such as uploading versions 256, 257 and 258 to Debian and made the following additional changes:

- Use a deterministic name instead of trusting gpg's use-embedded-filenames. Many thanks to Daniel Kahn Gillmor <dkg@debian.org> for reporting this issue and providing feedback.

- Don't error-out with a traceback if we encounter struct.unpack-related errors when parsing Python .pyc files. (#1064973)

- Don't try and compare rdb_expected_diff on non-GNU systems as %p formatting can vary, especially with respect to macOS.

- Fix compatibility with pytest 8.0.

- Temporarily fix support for Python 3.11.8.

- Use the 7zip package (over p7zip-full) after a Debian package transition. (#1063559)

- Bump the minimum Black source code reformatter requirement to 24.1.1+.

- Expand an older changelog entry with a CVE reference.

- Make test_zip black clean.

In addition, James Addison contributed a patch to parse the headers from diff(1) correctly. Thanks! And lastly, Vagrant Cascadian pushed updates in GNU Guix for diffoscope to versions 255, 256 and 258, and updated trydiffoscope to 67.0.6.
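The struct.unpack item above describes a general pattern: a truncated or malformed file should yield a graceful "could not parse" result rather than a traceback. The following is a minimal sketch of that idea and is not diffoscope's actual code; the function name parse_pyc_header is hypothetical, and the only assumption about the format is that a .pyc file begins with a 4-byte magic number followed by a 4-byte little-endian flags field (as in Python 3.7 and later).

```python
import struct


def parse_pyc_header(data: bytes):
    """Return (magic, flags) from the start of a .pyc file, or None.

    Hypothetical helper for illustration only: it reads the first
    8 bytes and reports failure instead of raising.
    """
    try:
        # "<4sI" = 4 raw bytes (magic) + unsigned 32-bit little-endian int.
        magic, flags = struct.unpack("<4sI", data[:8])
    except struct.error:
        # Raised when fewer than 8 bytes are available.
        return None
    return magic, flags
```

A caller can then fall back to a plain binary comparison whenever the helper returns None.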

reprotest

reprotest is our tool for building the same source code twice in different environments and then checking the binaries produced by each build for any differences. This month, Vagrant Cascadian made a number of changes, including:

- Create a (working) proof of concept for enabling a specific number of CPUs.

- Consistently use 398 days for time variation rather than choosing randomly and update README.rst to match.

- Support a new --vary=build_path.path option.

Website updates

A number of improvements were made to our website this month, including:

- Chris Lamb:

  - Improve the relative sizing of headers.

  - Re-order and punch up the introduction and documentation on the SOURCE_DATE_EPOCH page.

  - Update SOURCE_DATE_EPOCH documentation regarding datetime.datetime.fromtimestamp. Thanks, James Addison.

  - Add a post about Reproducible Builds at FOSDEM 2024.

- Holger Levsen:

  - Update the GNU Guix page to include their reproducibility QA page.

  - Add Sune Vuorela and Jan-Benedict Glaw to our contributors list.

- Mattia Rizzolo:

  - Add Sovereign Tech Fund's logo to our sponsors.

  - Update our sponsors list.
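For readers unfamiliar with the SOURCE_DATE_EPOCH documentation mentioned in the list above, here is a minimal sketch of how a Python build script might honour the variable using datetime.datetime.fromtimestamp. The exact wording of the documentation change is not reproduced here; the function name build_timestamp and the environ parameter are assumptions made for illustration. Passing an explicit UTC timezone avoids depending on the build machine's local timezone, and avoids datetime.utcfromtimestamp, which is deprecated as of Python 3.12.

```python
import os
import time
from datetime import datetime, timezone


def build_timestamp(environ=os.environ) -> datetime:
    """Return the timestamp a build should embed in its output.

    Uses SOURCE_DATE_EPOCH when set, otherwise falls back to the
    current time (which is, of course, not reproducible).
    """
    epoch = int(environ.get("SOURCE_DATE_EPOCH", time.time()))
    # An explicit tz makes the result independent of the local timezone.
    return datetime.fromtimestamp(epoch, tz=timezone.utc)
```

For example, calling build_timestamp({"SOURCE_DATE_EPOCH": "0"}) yields the Unix epoch in UTC regardless of where the build runs.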

Reproducibility testing framework

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In February, a number of changes were made by Holger Levsen:

- Debian-related changes:

- Other changes:

  - Grant Jan-Benedict Glaw shell access to the Jenkins node.

  - Enable debugging for NetBSD reproducibility testing.

  - Use /usr/bin/du --apparent-size in the Jenkins shell monitor.

  - Revert "reproducible nodes: mark osuosl2 as down".

  - Thanks again to Codethink, for they have doubled the RAM on our arm64 nodes.

  - Only set /proc/$pid/oom_score_adj to -1000 if it has not already been done.

  - Add the opemwrt-target-tegra and jtx task to the list of zombie jobs.
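The oom_score_adj item in the list above is an instance of a "check before write" pattern: only touch the kernel setting when it does not already hold the desired value. The sketch below illustrates the idea in Python and is not the framework's actual implementation (which is not shown in this report); the function name protect_from_oom is hypothetical, and the path is passed in explicitly so the logic can be exercised against an ordinary file.

```python
from pathlib import Path


def protect_from_oom(path: Path, value: str = "-1000") -> bool:
    """Write `value` to `path` unless it is already set.

    On Linux, `path` would be /proc/<pid>/oom_score_adj, and writing
    a lower value there typically requires elevated privileges.
    Returns True if a write was performed, False if it was skipped.
    """
    if path.read_text().strip() == value:
        return False  # Already done; avoid a redundant write.
    path.write_text(value + "\n")
    return True
```

Skipping the redundant write keeps repeated runs of a monitoring job quiet and idempotent.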

Vagrant Cascadian also made the following changes:

- Overhaul the handling of OpenSSH configuration files after updating from Debian bookworm.

- Add two new armhf architecture build nodes, virt32z and virt64z, and insert them into the Munin monitoring.

In addition, Alexander Couzens updated the OpenWrt configuration in order to replace the tegra target with mpc85xx, Jan-Benedict Glaw updated the NetBSD build script to use a separate $TMPDIR to mitigate out-of-space issues on a tmpfs-backed /tmp, and Zheng Junjie added a link to the GNU Guix tests.

Lastly, node maintenance was performed by Holger Levsen and Vagrant Cascadian.

Upstream patches

The Reproducible Builds project detects, dissects and attempts to fix as many currently-unreproducible packages as possible. We endeavour to send all of our patches upstream where appropriate. This month, we wrote a large number of such patches, including:

- Philip Rinn:

  - gimagereader (date)

- Bernhard M. Wiedemann:

  - grass (date-related issue)
  - grub2 (filesystem ordering issue)
  - latex2html (drop a non-deterministic log)
  - mhvtl (tar)
  - obs (build-tool issue)
  - ollama (GZip embedding the modification time)
  - presenterm (filesystem-ordering issue)
  - qt6-quick3d (parallelism)

- Chris Lamb:

- James Addison:

  - #1064519 filed against flask-limiter.
  - python-parsl-doc (disable dynamic argument evaluation by Sphinx autodoc extension)
  - python3-pytest-repeat (remove entry_points.txt creation that varied by shell)
  - python3-selinux (remove packaged direct_url.json file that embeds build path)
  - python3-sepolicy (remove packaged direct_url.json file that embeds build path)
  - #1064575 filed against pyswarms.
  - #1064638 filed against python-x2go.
  - snapd (fix timestamp header in packaged manual-page)
  - zzzeeksphinx (existing RB patch forwarded and merged (with modifications))

- Johannes Schauer Marin Rodrigues:
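Several of the fixes listed above fall into well-known categories. As one illustration, the "GZip embedding the modification time" class of problem arises because the gzip header contains a 4-byte timestamp field, so compressing identical data at different times yields different bytes. The following minimal sketch shows one common remedy in Python, pinning that field to zero. It is not the patch that was sent to ollama, and the function name deterministic_gzip is hypothetical.

```python
import gzip
import io


def deterministic_gzip(data: bytes) -> bytes:
    """Compress `data` so that the output depends only on the input."""
    buf = io.BytesIO()
    # mtime=0 pins the header timestamp; with an in-memory buffer
    # there is also no original filename to embed.
    with gzip.GzipFile(fileobj=buf, mode="wb", mtime=0) as fh:
        fh.write(data)
    return buf.getvalue()
```

A build that needs a meaningful timestamp could pass the value of SOURCE_DATE_EPOCH as mtime instead of zero.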

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. You can also get in touch with us via:

- IRC: #reproducible-builds on irc.oftc.net.

- Twitter: @ReproBuilds

- Mastodon: @reproducible_builds@fosstodon.org

- Mailing list: rb-general@lists.reproducible-builds.org

Dormitory room in Zostel Ernakulam, Kochi.

Beds in Zostel Ernakulam, Kochi.

Onam sadya menu from Brindhavan restaurant.

Sadya lined up for serving

Sadya thali served on banana leaf.

We were treated with such views during the Wayanad trip.

A road in Rippon.

Entry to Kanthanpara Falls.

Kanthanpara Falls.

A view of Zostel Wayanad.

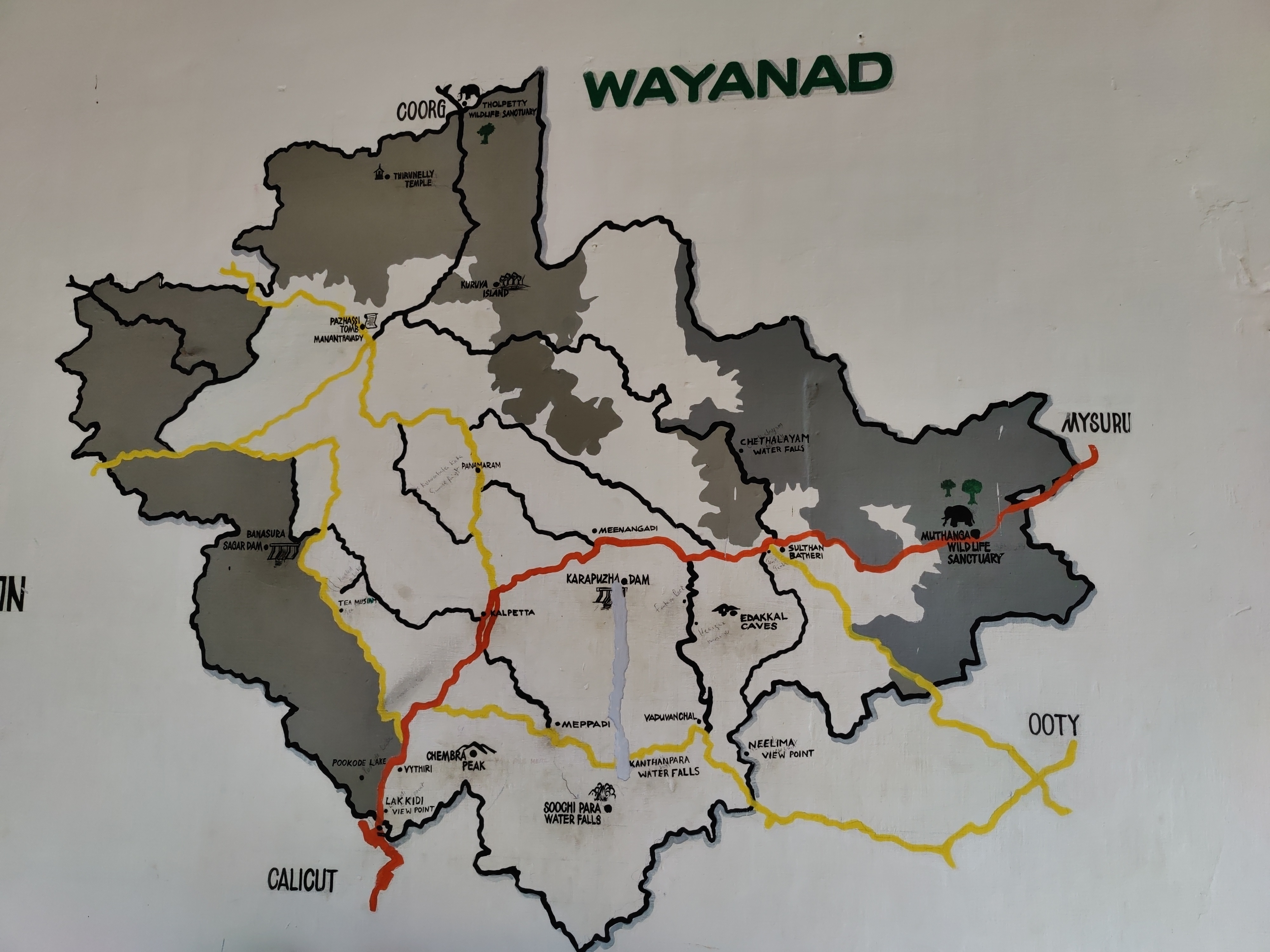

A map of Wayanad showing tourist places.

A view from inside the Zostel Wayanad property.

Terrain during trekking towards the Chembra peak.

Heart-shaped lake at the Chembra peak.

Me at the heart-shaped lake.

Views from the top of the Chembra peak.

View of another peak from the heart-shaped lake.

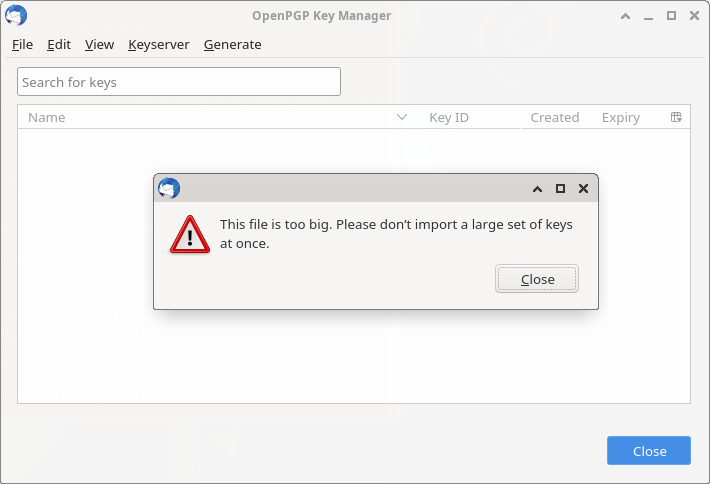

Thunderbird, srsly?

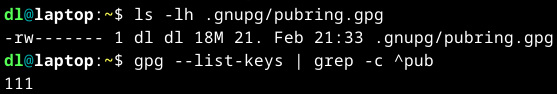

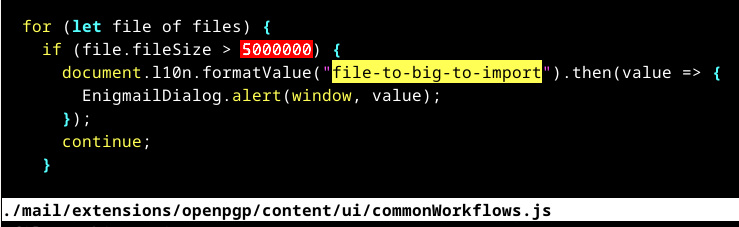

5MB (or 4.8MiB) import limit. Sure. My modest pubring (111 keys) is 18MB. The

Here is my monthly update covering what I have been doing in the free software world during January 2021 (

Last month, there has been

For more in-depth explanation on the different ways to encode a routing table
into a trie and a better understanding of radix trees, see
the

Getting meaningful results is challenging due to the size of the address
space. None of the scenarios have a fallback route and we only measure time for
successful hits

Only 50% of the time is spent in the actual route lookup. The remaining time is
spent evaluating the routing rules (about 30 ns). This ratio is dependent on the
number of routes we inserted (only 1000 in this example). It should be noted the

Here is an approximate breakdown on the time spent:

All kernels are compiled with

Despite its more complex insertion logic, the IPv4 subsystem is able to insert 2
million routes in less than 10 seconds.

The memory usage is therefore quite predictable and reasonable, as even a small
single-board computer can support several full views (20 MiB for each):

The other day I had to deal with an outage in one of our LDAP servers,
which is running the old Debian Wheezy (yeah, I know, we should update it).
We are running openldap, the slapd daemon. And after searching the log files,
the cause of the outage was obvious:

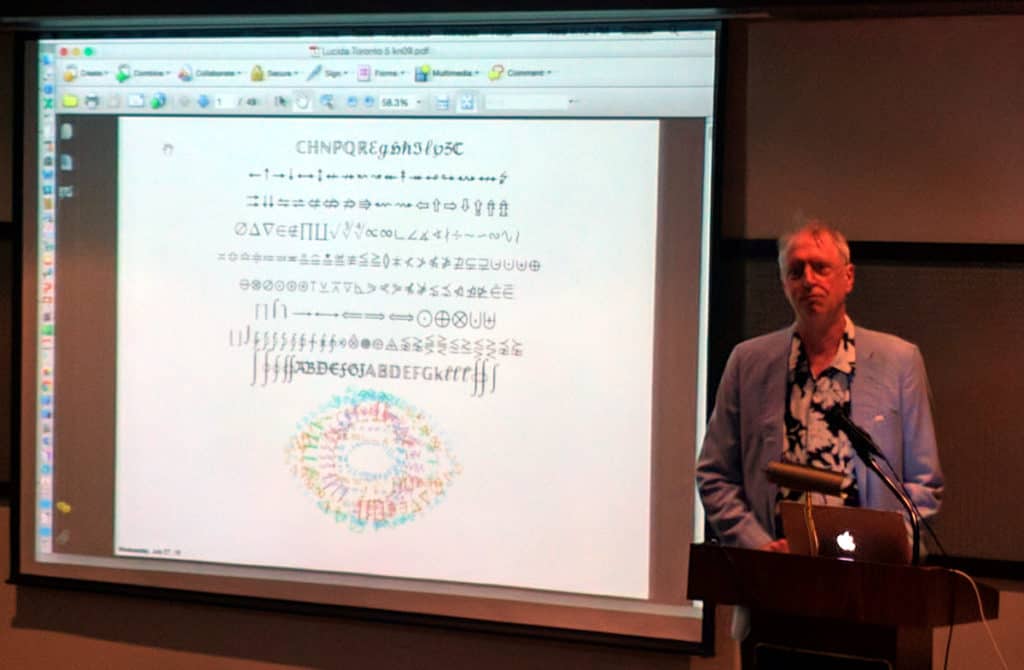

The last day of TUG 2016, or at least the last day of talks, brought four one-hour talks from special guests, and several others, where many talks told us personal stories and various histories.

Excellent live music in a somewhat chic, sophisticated atmosphere was a good finish for this excellent day. With Herb from MacTeX and his wife we killed two bottles of red wine, before slowly tingling back to the hotel.

A great finishing day of talks.