Reproducible Builds: Reproducible Builds in January 2022

Welcome to the January 2022 report from the Reproducible Builds project. In our reports, we try to outline the most important things that have been happening in the past month. As ever, if you are interested in contributing to the project, please visit our Contribute page on our website.

An interesting blog post was published by Paragon Initiative Enterprises about Gossamer, a proposal for securing the PHP software supply chain. Utilising code-signing and third-party attestations, Gossamer aims to mitigate the risks within the notorious PHP world by publishing attestations to a transparency log. Their post, titled "Solving Open Source Supply Chain Security for the PHP Ecosystem", goes into some detail regarding the design, scope and implementation of the system.

This month, the Linux Foundation announced SupplyChainSecurityCon, a conference focused on exploring the security threats affecting the software supply chain, sharing best practices and mitigation tactics. The conference is part of the Linux Foundation's Open Source Summit North America and will take place June 21st to 24th 2022, both virtually and in Austin, Texas.

Debian

Significant progress was made in the Debian Linux distribution this month, including:

- Roland Clobus continued work on reproducible live images in the past month, and some of his work was merged into live-build itself. The ReproducibleInstalls/LiveImages page on the Debian Wiki as well as the existing/custom Jenkins hooks were updated to match.

- Related to this, it is now possible to create bit-by-bit reproducible chroots using mmdebstrap when SOURCE_DATE_EPOCH is set. As of Debian 11 Bullseye, this works for all variants except the one that includes all so-called Priority: standard packages, where fontconfig caches, *.pyc files and man-db index.db files are unreproducible. (These issues have been addressed in Tails and live-build by removing the *.pyc files as well as the index.db files. Whilst index.db files can be regenerated, there is no straightforward method of re-creating *.pyc files, and the resulting installation will suffer from reduced performance of Python scripts. Ideally, no removal would be necessary as all files would be created reproducibly in the first place.)

- Further to this work, Roland wrote up a comprehensive status update about live-build ISO images to our mailing list as well.

- The PackageRebuilder instance running at beta.tests.reproducible-builds.org is now capable of building packages for Debian bookworm.

- A longstanding issue around fontconfig's cache files being unreproducible saw some progress this month. Debian bug #864082 (originally filed in June 2017) was NMU'd by josch, although this resulted in a small number of minor side-effects which have already been addressed with a follow-up patch.

- 120 reviews of Debian packages were added, 272 were updated and 31 were removed this month, all adding to our index of identified issues. A number of issue types were updated too.

- kpcyrd blogged this month about Debian binary NMUs and .buildinfo files in a post entitled "Reproducible Builds: Debian and the case of the missing version string".
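To illustrate the role SOURCE_DATE_EPOCH plays in the chroot work mentioned above, here is a minimal sketch of how a tool can honour the variable when writing an archive. It does not show mmdebstrap's own code; the function names `build_epoch` and `normalised_tar` are hypothetical and chosen only for this example. The idea is simply to fix every source of variation (member order, timestamps, ownership) so that two runs produce identical bytes.

```python
import io
import os
import tarfile
from typing import Mapping


def build_epoch(default: int = 0) -> int:
    """Return the timestamp from SOURCE_DATE_EPOCH, or a default if unset."""
    return int(os.environ.get("SOURCE_DATE_EPOCH", default))


def normalised_tar(files: Mapping[str, bytes], epoch: int) -> bytes:
    """Pack files into an uncompressed tar archive with deterministic metadata."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.GNU_FORMAT) as tar:
        for name in sorted(files):  # fixed member order, independent of input order
            data = files[name]
            info = tarfile.TarInfo(name)
            info.size = len(data)
            info.mtime = epoch  # timestamp taken from the environment, not the clock
            info.uid = info.gid = 0
            info.uname = info.gname = "root"
            info.mode = 0o644
            tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()
```

Calling `normalised_tar` twice with the same contents and the same epoch should give byte-identical output, regardless of when or in what order the files were supplied.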

Other distributions

kpcyrd reported on Twitter about the release of version 0.2.0 of pacman-bintrans, an experiment with binary transparency for the Arch Linux package manager, pacman. This new version is now able to query rebuilderd to check if a package was independently reproduced.

In the world of openSUSE, Bernhard M. Wiedemann posted his monthly reproducible builds status report.

diffoscope

diffoscope is our in-depth and content-aware diff utility. Not only can it locate and diagnose reproducibility issues, it can provide human-readable diffs from many kinds of binary formats. This month, Chris Lamb prepared and uploaded versions 199, 200, 201 and 202 to Debian unstable (which were later backported to Debian bullseye-backports by Mattia Rizzolo), and made the following changes to the code itself:

- New features:

  - First attempt at incremental output support with a timeout. Passing, for example, --timeout=60 now means that diffoscope will not recurse into any sub-archives after 60 seconds of total execution time have elapsed. Note that this is not a fixed/strict timeout due to implementation issues.

  - Support both variants of odt2txt, including the one provided by the unoconv package.

- Bug fixes:

  - Do not return with a UNIX exit code of 0 if we encounter a file whose human-readable metadata matches literal file contents.

  - Don't fail if comparing a nonexistent file with a .pyc file (and add test).

  - If the debian.deb822 module raises any exception on import, re-raise it as an ImportError. This should fix diffoscope on some Fedora systems.

  - Even if a Sphinx .inv inventory file is labelled "The remainder of this file is compressed using zlib", it might not actually be. In this case, don't traceback and simply return the original content.

- Documentation:

  - Improve documentation for the new --timeout option due to a few misconceptions.

  - Drop reference in the manual page claiming the ability to compare non-existent files on the command-line. (This has not been possible since version 32, which was released in September 2015.)

  - Update "X has been modified after NT_GNU_BUILD_ID has been applied" messages to, for example, not duplicate the full filename in the diffoscope output.

- Codebase improvements:
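The behaviour described for --timeout, where recursion into sub-archives stops once a time budget is spent but work already under way is not interrupted, can be sketched as follows. This is only an illustration under stated assumptions and not diffoscope's implementation: archives are modelled as nested dictionaries, and the `compare` function, its `deadline` parameter and the injectable `clock` are all hypothetical names.

```python
import time
from typing import Any, Callable, Dict, List


def compare(a: Dict[str, Any], b: Dict[str, Any], deadline: float,
            clock: Callable[[], float] = time.monotonic,
            path: str = "") -> List[str]:
    """List differences between two nested containers, honouring a soft deadline."""
    diffs: List[str] = []
    for name in sorted(set(a) | set(b)):
        here = f"{path}/{name}"
        left, right = a.get(name), b.get(name)
        if left == right:
            continue
        if isinstance(left, dict) and isinstance(right, dict):
            if clock() >= deadline:
                # Soft timeout: note that the container differs, but do not descend.
                diffs.append(f"{here}: differs (timeout reached, not recursing)")
            else:
                diffs.extend(compare(left, right, deadline, clock, here))
        else:
            diffs.append(f"{here}: differs")
    return diffs
```

Because the deadline is only checked before descending into a nested container, a comparison that is already running can overshoot the budget, which is one way to see why such a timeout is not strict.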

In addition, Alyssa Ross fixed the comparison of CBFS names that contain spaces, Sergei Trofimovich fixed whitespace for compatibility with version 21.12 of the Black source code reformatter and Zbigniew Jędrzejewski-Szmek fixed JSON detection with a new version of file.
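The Sphinx .inv fix listed among the bug fixes above follows a common defensive pattern: attempt the decompression that the header promises, and fall back to the raw bytes if it fails. A minimal sketch, assuming a four-line text header followed by the payload; the function name `read_inventory_body` is hypothetical and this is not diffoscope's own code:

```python
import zlib


def read_inventory_body(raw: bytes, header_lines: int = 4) -> bytes:
    """Return the payload after the header, decompressed when possible."""
    parts = raw.split(b"\n", header_lines)
    if len(parts) <= header_lines:
        # Fewer lines than a full header: nothing to decompress.
        return raw
    payload = parts[header_lines]
    try:
        return zlib.decompress(payload)
    except zlib.error:
        # The header claimed zlib, but the payload is not compressed:
        # return it unchanged instead of failing.
        return payload
```

Catching only `zlib.error` keeps unrelated failures visible while tolerating files whose label does not match their contents.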

Testing framework

The Reproducible Builds project runs a significant testing framework at tests.reproducible-builds.org, to check packages and other artifacts for reproducibility. This month, the following changes were made:

- Frédéric Pierret (fepitre):

- Holger Levsen:

  - Install the po4a package where appropriate, as it is needed for the Reproducible Builds website job. In addition, also run the i18n.sh and contributors.sh scripts.

  - Correct some grammar in Debian live image build output.

  - Shell monitor improvements:

  - Node health check improvements:

  - Use the devscripts package from bullseye-backports on Debian nodes.

  - Use the Munin monitoring package from bullseye-backports on Debian nodes too.

  - Update New Year handling, needed to be able to detect real and fake dates.

  - Improve the error message of the script that powercycles the arm64 architecture nodes hosted by Codethink.

- Mattia Rizzolo:

- Roland Clobus:

- Vagrant Cascadian:

Upstream patches

The Reproducible Builds project attempts to fix as many currently-unreproducible packages as possible. In January, we wrote a large number of such patches, including:

- Leonidas Spyropoulos:

  - freeplane (timestamps, file order)
  - opensearch (timestamps, file order)

- Bernhard M. Wiedemann:

  - cosign (date)
  - gnome-todo (discover fix for single-CPU build failure)
  - gstreamer-plugins-good (build fails without debuginfo)
  - jogl2 (parallelism-related issue)
  - libkolabxml (ASLR)
  - monero-gui (CPU)
  - python-pyudev (date)
  - rekor (date)
  - rivet (FTBFS-j1 has unreleased fix upstream)
  - skaffold (date)

- Chris Lamb:

  - #1003159 filed against dh-raku.
  - #1003203 filed against libgtkdatabox.
  - #1003385 filed against qcelemental.
  - #1003646 filed against node-ramda.
  - #1003929 filed against ncurses.
  - #1004391 filed against python-fluids (forwarded upstream).
  - #1004676 filed against node-istanbul.

- Johannes Schauer Marin Rodrigues:

- Roland Clobus:

  - #1003449 filed against texlive-base.

- Vagrant Cascadian:

  - #1003316 filed against python-cooler.
  - #1003319 filed against insighttoolkit5.
  - #1003368 filed against dino-im.
  - #1003370 filed against libxtrxll.
  - #1003371 filed against libavif.
  - #1003373 filed against go-for-it.
  - #1003375 filed against clfft.
  - #1003379 filed against last-align.
  - #1003430 filed against gkrellm-leds.
  - #1003488 and #1003489 filed against last-align.
  - #1003494 filed against binutils-xtensa-lx106.
  - #1003495 filed against gcc-xtensa-lx106.
  - #1003500 and #1003501 filed against gcc-sh-elf.
  - #1003787 filed against pesign.
  - #1003802 filed against upb.
  - #1003803 filed against paho.mqtt.c.
  - #1003804 filed against imath.
  - #1003808 filed against libvcflib.
  - #1003809 filed against pkg-js-tools.
  - #1003912 filed against vdeplug4.
  - #1003914 filed against kget.
  - #1003915 filed against mathgl.
  - #1003919 filed against apulse.
  - #1003920 filed against akonadi.
  - #1003922 filed against recastnavigation.
  - #1003923 filed against soundkonverter.
  - #1003924 filed against indi.
  - #1003978 filed against akonadi-mime.
  - #1003980 filed against akonadi-import-wizard.
  - #1003992 filed against akonadi-contacts.
  - #1003993 filed against cryptominisat.
  - #1003995 filed against hipercontracer.
  - #1003997 filed against libksba.
  - #1003999 filed against leatherman.
  - #1004002 filed against segyio.
  - #1004004 filed against darkradiant.
  - #1004005 filed against openmm.
  - #1004034 filed against bagel.
  - #1004053 filed against kallisto.
  - #1004057 filed against evolution-ews.

And finally

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. However, you can get in touch with us via:

- IRC: #reproducible-builds on irc.oftc.net.

- Twitter: @ReproBuilds

- Mailing list: rb-general@lists.reproducible-builds.org

Some of you might have noticed that I'm into keyboards since a few years ago, into mechanical keyboards to be precise.

Preface

It basically started with the

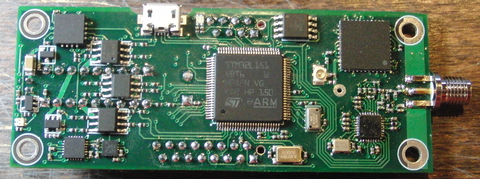

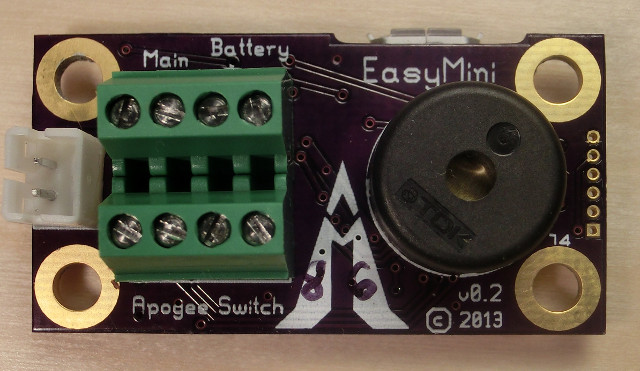

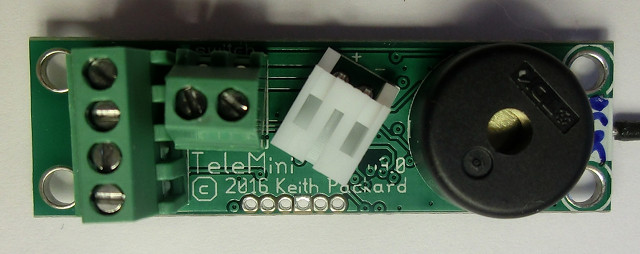

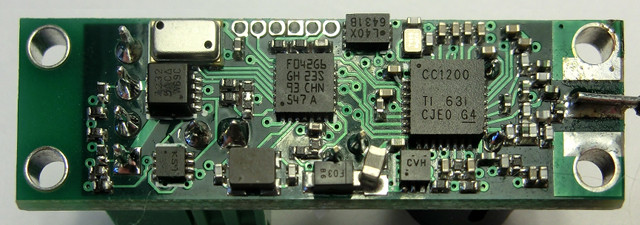

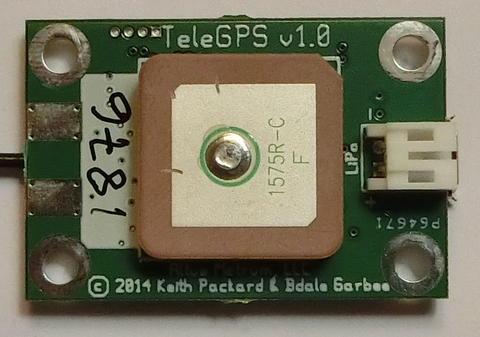

TeleMini V3.0 Dual-deploy altimeter with telemetry now available

TeleMini v3.0 is an update to our original TeleMini v1.0 flight

computer. It is a miniature (1/2 inch by 1.7 inch) dual-deploy flight

computer with data logging and radio telemetry. Small enough to fit

comfortably in an 18mm tube, this powerful package does everything you

need on a single board:

Here is my monthly update covering what I have been doing in the free software world ( Started in 2008,

Embedding Python into other applications to provide a scripting mechanism is a popular practice.
