My effort to improve transparency and confidence of public apt archives continues. I started to work on this in Apt Archive Transparency, in which I mention the debdistget project in passing. Debdistget is responsible for mirroring index files for some public apt archives. I've realized that having a publicly auditable and preserved mirror of the apt repositories is central to being able to do apt transparency work, so the debdistget project has become more central to my project than I thought. Currently I track Trisquel, PureOS, Gnuinos and their upstreams Ubuntu, Debian and Devuan.

Debdistget downloads Release/Packages/Sources files and stores them in a git repository published on GitLab. Due to size constraints, it uses two repositories: one for the Release/InRelease files (which are small) and one that also includes the Packages/Sources files (which are large). See for example the repository for Trisquel release files and the Trisquel package/sources files. Repositories for all distributions can be found in the debdistutils archives GitLab sub-group.

The reason for splitting into two repositories was that the git repository for the combined files became large, and that some of my use-cases only needed the release files. Currently the repositories with packages (which contain a couple of months' worth of data now) are 9GB for Ubuntu, 2.5GB for Trisquel/Debian/PureOS, 970MB for Devuan and 450MB for Gnuinos. The repository size is correlated to the size of the archive (for the initial import) plus the frequency and size of updates. Ubuntu's use of Apt Phased Updates (which triggers a higher churn of Packages file modifications) appears to be the primary reason for its larger size.

Working with large Git repositories is inefficient, and the GitLab CI/CD jobs generate quite some network traffic downloading the git repository over and over again. The heaviest user is the debdistdiff project, which downloads all distribution package repositories to do diff operations on the package lists between distributions. The daily job takes around 80 minutes to run, with the majority of the time spent on downloading the archives. Yes, I know I could look into runner-side caching, but I dislike complexity caused by caching.

Fortunately, not all use-cases require the package files. The debdistcanary project only needs the Release/InRelease files, in order to commit signatures to the Sigstore and Sigsum transparency logs. These jobs still run fairly quickly, but watching the repository size growth worries me. Currently these repositories are at Debian 440MB, PureOS 130MB, Ubuntu/Devuan 90MB, Trisquel 12MB, Gnuinos 2MB. Here I believe the main size correlation is update frequency, and Debian is large because I track the volatile unstable.

So I hit a scalability limit with my first approach. A couple of months ago I solved this by discarding and resetting these archival repositories. The GitLab CI/CD jobs were fast again and all was well. However, this meant discarding precious historic information. A couple of days ago I was reaching the limits of practicality again, and started to explore ways to fix this. I like having data stored in git (it allows easy integration with software integrity tools such as GnuPG and Sigstore, and the git log provides a kind of temporal ordering of data), so switching to a traditional database with an on-disk approach felt like giving up on nice properties. So I started to learn about Git-LFS, and learning that it can handle multi-GB worth of data looked promising.

Fairly quickly I scripted up a GitLab CI/CD job that incrementally updates the Release/Packages/Sources files in a git repository that uses Git-LFS to store all the files. The repository size is now at Ubuntu 650KB, Debian 300KB, Trisquel 50KB, Devuan 250KB, PureOS 172KB and Gnuinos 17KB. As can be expected, jobs are quick to clone the git archives: debdistdiff pipelines went from a run-time of 80 minutes down to 10 minutes, which correlates more reasonably with the archive size and CPU run-time.
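
The git repositories stay this small because Git-LFS commits only a short text pointer in place of each tracked file, while the file content lives in the separate LFS object store. As a rough illustration (not part of the debdistget scripts), the sketch below builds the pointer text that the Git-LFS specification describes for a given file. The function name `lfs_pointer` and the sample file are made up for this example, and it assumes `sha256sum` from GNU coreutils is available.

```shell
#!/bin/sh
# Print the Git-LFS pointer text for a file: a version line, the SHA-256
# object id and the size in bytes. This mimics what ends up in the git
# repository instead of the file content. Hypothetical helper.
lfs_pointer() {
    file=$1
    oid=$(sha256sum "$file" | cut -d' ' -f1)
    size=$(wc -c < "$file" | tr -d ' ')
    printf 'version https://git-lfs.github.com/spec/v1\n'
    printf 'oid sha256:%s\n' "$oid"
    printf 'size %s\n' "$size"
}

# Example: a small stand-in for a Packages file.
sample=$(mktemp)
printf 'Package: hello\n' > "$sample"
lfs_pointer "$sample"
rm -f "$sample"
```

A pointer is three short lines regardless of whether the real file is 90 bytes or 90MB, which is consistent with the KB-sized repositories above.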

The LFS storage size for those repositories is at Ubuntu 15GB, Debian 8GB, Trisquel 1.7GB, Devuan 1.1GB, PureOS/Gnuinos 420MB. This is for a couple of days' worth of data. It seems native Git is better at compressing/deduplicating data than Git-LFS is: the combined size for Ubuntu is already 15GB for a couple of days of data, compared to 8GB for a couple of months' worth of data with pure Git. This may be a sub-optimal implementation of Git-LFS in GitLab, but it does worry me that this new approach will be difficult to scale too. At some level the difference is understandable: Git-LFS probably stores two different Packages files of around 90MB each for Trisquel as two 90MB files, whereas native Git would store one compressed version of the 90MB file and one relatively small patch to turn the old file into the next file. So the Git-LFS approach, surprisingly, scales less well for overall storage size. Still, the git repository itself is much smaller, and you usually don't have to pull all LFS files anyway. So it is a net win.
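
To make the storage difference concrete, here is a back-of-the-envelope sketch of the two models described above: Git-LFS keeping every version as a full copy, versus native Git keeping one compressed base plus a small delta per later version. The function names are made up, and the compressed size (25MB) and delta size (2MB) in the example are purely hypothetical inputs chosen for illustration, not measurements from these repositories.

```shell
#!/bin/sh
# Estimated LFS storage in MB: every version is stored as a full copy.
# Arguments: number of versions, size of one file in MB.
lfs_total_mb() {
    versions=$1
    file_mb=$2
    echo $(( versions * file_mb ))
}

# Estimated native Git storage in MB: one compressed base version plus
# one delta for each later version.
# Arguments: number of versions, compressed base size in MB, delta size in MB.
git_total_mb() {
    versions=$1
    compressed_mb=$2
    delta_mb=$3
    echo $(( compressed_mb + (versions - 1) * delta_mb ))
}

# Ten versions of a 90MB Packages file, with assumed (hypothetical)
# 25MB compressed size and 2MB per delta.
lfs_total_mb 10 90
git_total_mb 10 25 2
```

Under these assumed numbers the full-copy model grows linearly with the file size, while the delta model grows with the size of the changes, which matches the direction of the difference observed above even if the real ratios differ.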

Throughout this work, I kept thinking about how my approach relates to Debian's snapshot service. Ultimately what I would want is a combination of these two services. To have a good foundation to do transparency work, I would want to have a collection of all Release/Packages/Sources files ever published, and ultimately also the source code and binaries. While it makes sense to start on the latest stable releases of distributions, this effort should scale backwards in time as well. For reproducing binaries from source code, I need to be able to securely find earlier versions of binary packages used for rebuilds. So I need to import all the Release/Packages/Sources files from snapshot into my repositories. Retrieving files from that server has high latency, and so far I haven't been able to find an efficient/parallelized way to download the files. If I'm able to finish this, I would have confidence that my new Git-LFS based approach to store these files will scale over many years to come. This remains to be seen. Perhaps the repository has to be split up per release or per architecture or similar.

Another factor is storage costs. While the git repository size for a Git-LFS based repository with files from several years may be possible to sustain, the Git-LFS storage size surely won't be. It seems GitLab charges the same for files in repositories and in Git-LFS, and it is around $500 per 100GB per year. It may be possible to set up a separate Git-LFS backend not hosted at GitLab to serve the LFS files. Does anyone know of a suitable server implementation for this? I had a quick look at the Git-LFS implementation list, and it seems the closest reasonable approach would be to set up the Gitea-clone Forgejo as a self-hosted server. Perhaps a cloud storage approach a la S3 is the way to go? The cost to host this on GitLab will be manageable for up to ~1TB ($5000/year), but scaling it to storing say 500TB of data would mean a yearly fee of $2.5M, which seems like poor value for the money.
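
The cost figures above follow directly from the quoted rate of around $500 per 100GB per year. A minimal sketch of that arithmetic, using a made-up function name and treating 1TB as 1000GB as the figures in the text do:

```shell
#!/bin/sh
# Yearly hosting cost in USD for a given number of GB, at the rate
# quoted in the text: around $500 per 100GB per year.
# Hypothetical helper; integer arithmetic only.
lfs_yearly_cost_usd() {
    gb=$1
    echo $(( gb * 500 / 100 ))
}

lfs_yearly_cost_usd 1000     # ~1TB
lfs_yearly_cost_usd 500000   # 500TB
```

The two calls reproduce the $5000/year and $2.5M/year figures mentioned above.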

I realized that ultimately I would want a git repository locally with the entire content of all apt archives, including their binary and source packages, ever published. The storage requirements for a service like snapshot (~300TB of data?) are today not prohibitively expensive: 20TB disks are $500 apiece, so a storage enclosure with 36 disks would be around $18,000 for 720TB, and using RAID1 means 360TB, which is a good start. While I have heard about ~TB-sized Git-LFS repositories, would Git-LFS scale to 1PB? Perhaps the size of a git repository with many millions of Git-LFS pointer files will become unmanageable? To get started on this approach, I decided to import a mirror of Debian's bookworm for amd64 into a Git-LFS repository. That is around 175GB, so reasonably cheap to host even on GitLab ($1000/year for 200GB). Having this repository publicly available will make it possible to write software that uses this approach (e.g., porting debdistreproduce), to find out if this is useful and if it could scale. Distributing the apt repository via Git-LFS would also enable other interesting ideas for protecting the data. Consider configuring apt to use a local file:// URL to this git repository, and verifying the git checkout using some method similar to Guix's approach to trusting git content or Sigstore's gitsign.
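
As a sketch of what the file:// idea could look like, the helper below prints a one-line apt source entry pointing at a local checkout. The checkout path `/srv/debian-mirror` and the function name are assumptions for illustration only. The entry is only useful once the LFS objects have actually been fetched into the checkout, and it says nothing about how the checkout itself is verified.

```shell
#!/bin/sh
# Print an apt sources.list entry for a local mirror checkout.
# Arguments: absolute path of the checkout, suite, component.
# Hypothetical helper; the keyring path is the one used with debmirror
# in this post.
apt_source_line() {
    mirror_dir=$1
    suite=$2
    component=$3
    keyring=/usr/share/keyrings/debian-archive-keyring.gpg
    printf 'deb [signed-by=%s] file://%s %s %s\n' \
        "$keyring" "$mirror_dir" "$suite" "$component"
}

# Example with an assumed checkout location.
apt_source_line /srv/debian-mirror bookworm main
```

The output could be redirected into a file under /etc/apt/sources.list.d/ to try this out on a test machine.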

A naive push of the 175GB archive in a single git commit ran into pack size limitations:

remote: fatal: pack exceeds maximum allowed size (4.88 GiB)

However, breaking up the commit into smaller commits for parts of the archive made it possible to push the entire archive. Here are the commands to create this repository:

git init

git lfs install

git lfs track 'dists/**' 'pool/**'

git add .gitattributes

git commit -m"Add Git-LFS track attributes." .gitattributes

time debmirror --method=rsync --host ftp.se.debian.org --root :debian --arch=amd64 --source --dist=bookworm,bookworm-updates --section=main --verbose --diff=none --keyring /usr/share/keyrings/debian-archive-keyring.gpg --ignore .git .

git add dists project

git commit -m"Add." -a

git remote add origin git@gitlab.com:debdistutils/archives/debian/mirror.git

git push --set-upstream origin --all

for d in pool/*/*; do

echo "$d"

time git add "$d"

git commit -m"Add $d." -a

git push

done

The resulting repository size is around 27MB, with Git LFS object storage around 174GB. I think this approach would scale to handle all architectures for one release, but working with a single git repository for all releases for all architectures may lead to a git repository that is too large (>1GB). So maybe one repository per release? These repositories could also be split up on a subset of pool/ files, or there could be one repository per release per architecture or sources.

Finally, I have concerns about using SHA1 for identifying objects. It seems both Git and Debian's snapshot service are currently using SHA1. For Git there is the SHA-256 transition, and it seems GitLab is working on support for SHA256-based repositories. For serious long-term deployment of these concepts, it would be nice to go for SHA256 identifiers directly. Git-LFS already uses SHA256, but Git internally uses SHA1, as does the Debian snapshot service.
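
For local experiments, Git itself can already create a repository that uses SHA-256 object names via the `--object-format` option of `git init` (available in Git 2.29 and later, to my knowledge). The sketch below is only a local test in a temporary directory, with a made-up function name. It does not assume that any hosting service accepts pushes of such a repository.

```shell
#!/bin/sh
# Create an empty git repository that uses SHA-256 object names.
# Hypothetical helper; requires a Git version with --object-format support.
init_sha256_repo() {
    git init --quiet --object-format=sha256 "$1"
}

# Example: create one in a temporary directory and show its object format.
repo=$(mktemp -d)/sha256-repo
init_sha256_repo "$repo"
git -C "$repo" rev-parse --show-object-format
```

Note that the object format is fixed when the repository is created, so this only helps for repositories that start out with SHA-256.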

What do you think? Happy Hacking!

I work from home these days, and my nearest office is over 100 miles away, 3 hours door to door if I travel by train (and, to be honest, probably not a lot faster given rush hour traffic if I drive). So I'm reliant on a functional internet connection in order to be able to work. I'm lucky to have access to Openreach FTTP, provided by Aquiss, but I worry about what happens if there's a cable cut somewhere or some other long lasting problem. Worst case I could tether to my work phone, or try to find some local coworking space to use while things get sorted, but I felt like arranging a backup option was a wise move.

Step 1 turned out to be sorting out recursive DNS. It's been many moons since I had to deal with running DNS in a production setting, and I've mostly done my best to avoid doing it at home too. dnsmasq has done a decent job at providing for my needs over the years, covering DHCP, DNS (+ tftp for my test device network). However I just let it slave off my ISP's nameservers, which means if that link goes down it'll no longer be able to resolve anything outside the house.

One option would have been to either point to a different recursive DNS server (Cloudflare's 1.1.1.1 or Google's Public DNS being the common choices), but I've no desire to share my lookup information with them. As another approach I could have done some sort of failover of

Last week we held our promised miniDebConf in Santa Fe City, Santa Fe province,

Argentina just across the river from Paraná, where I have spent almost six

beautiful months I will never forget.

Closing arguments in the trial between various people and

The Rcpp Core Team is once again thrilled to announce a new release

1.0.12 of the

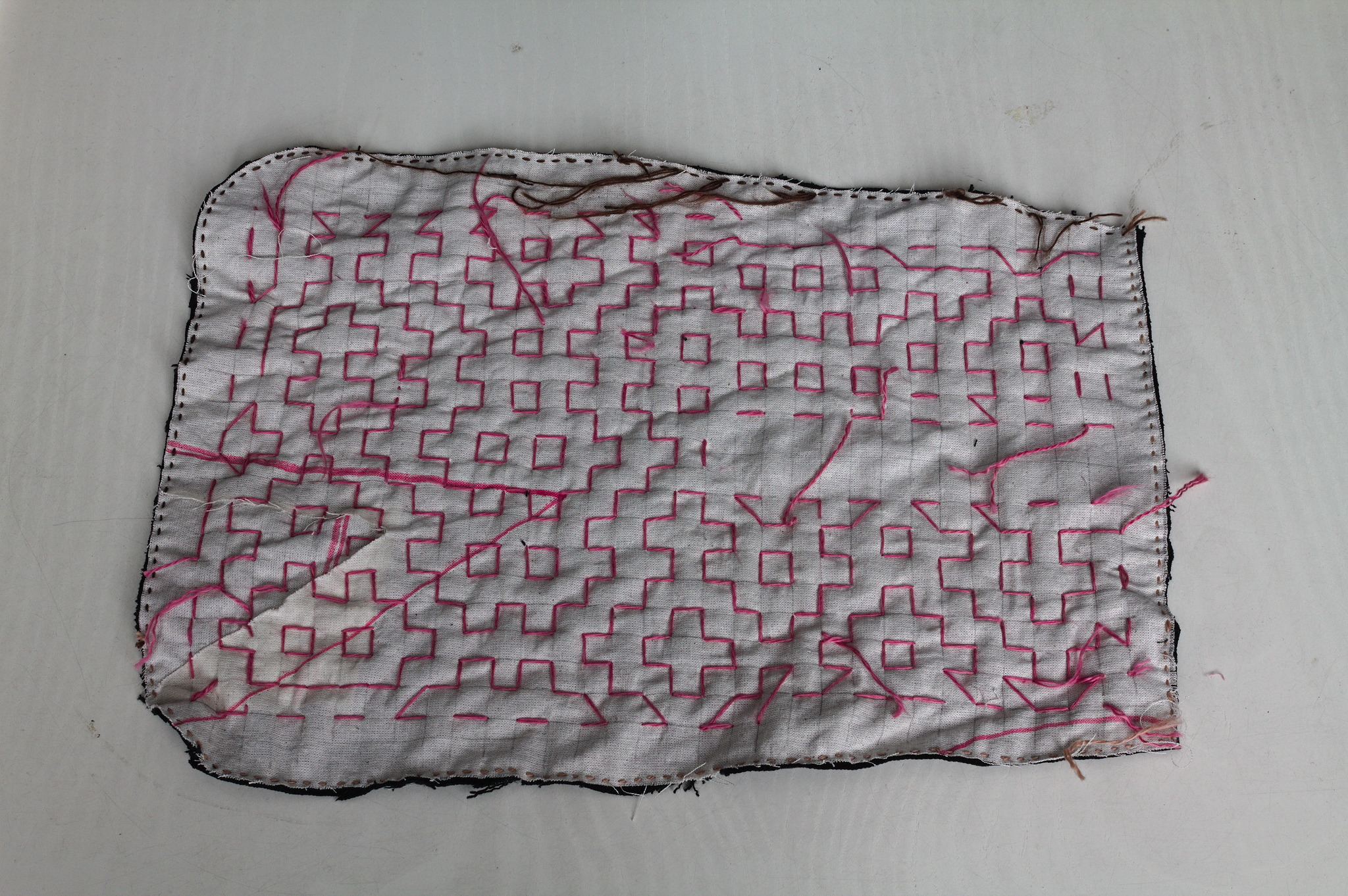

Lately I've seen people on the internet talking about victorian crazy

quilting. Years ago I had watched a

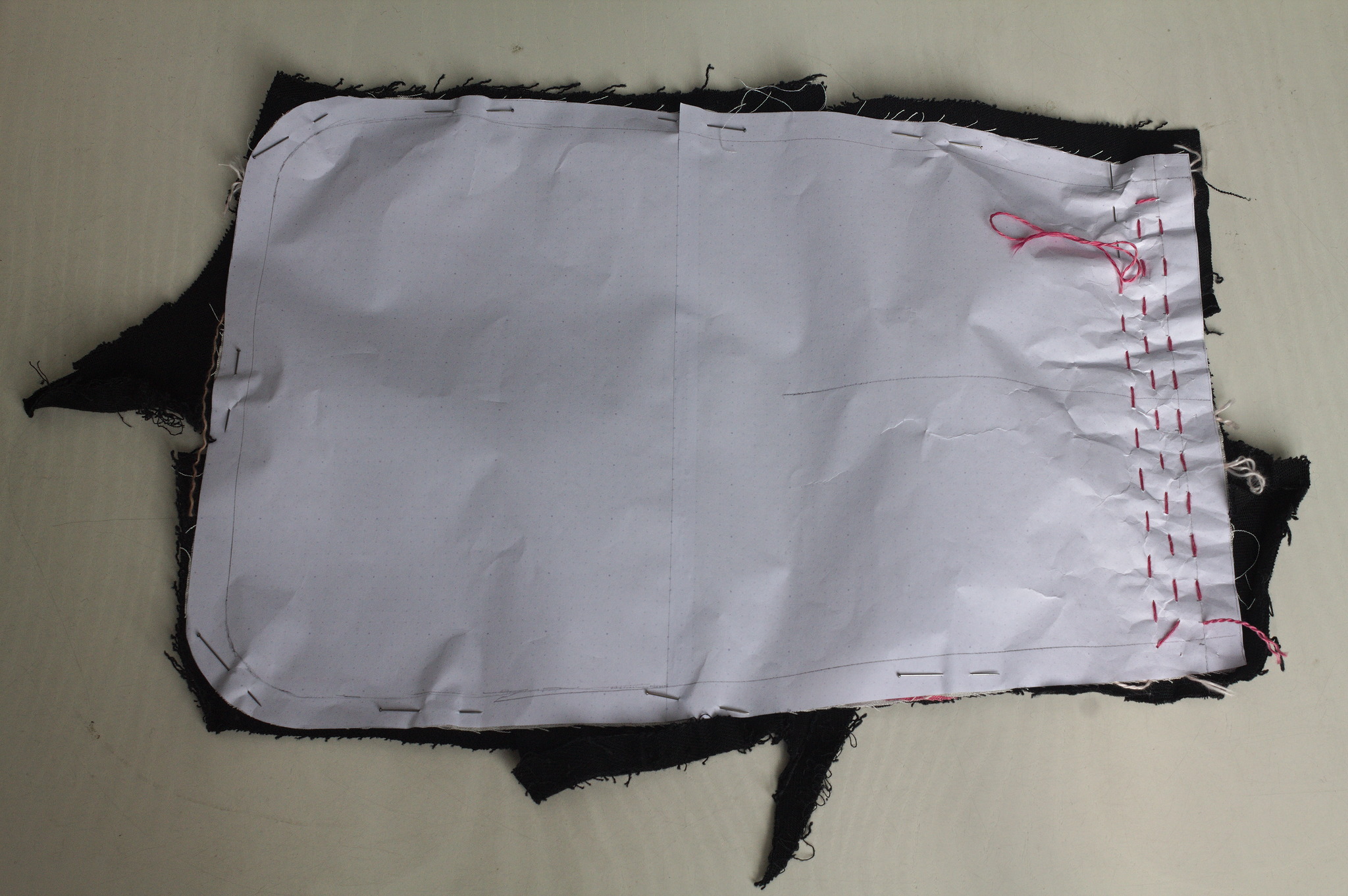

I cut a

For the second piece I tried to use a piece of paper with the square

grid instead of drawing it on the fabric: it worked, mostly, I would not

do it again as removing the paper was more of a hassle than drawing the

lines in the first place. I suspected it, but had to try it anyway.

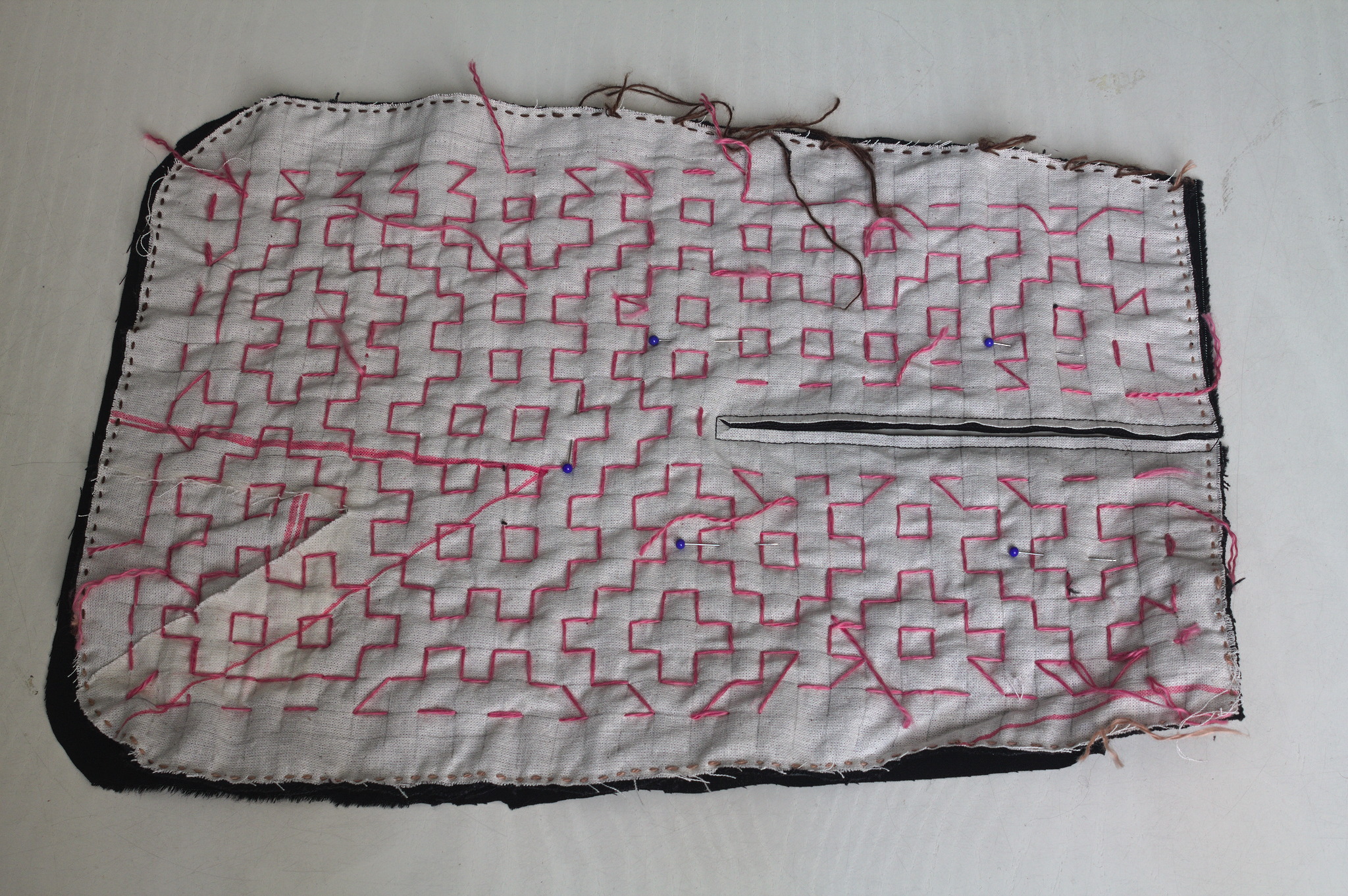

Then I added a lining from some plain black cotton from the stash; for

the slit I put the lining on the front right sides together, sewn

at 2 mm from the marked slit, cut it, turned the lining to the back

side, pressed and then topstitched as close as possible to the slit from

the front.

I bound everything with bias tape, adding herringbone tape loops at the

top to hang it from a belt (such as one made from the waistband of one

of the donor pair of jeans) and that was it.

I like the way the result feels; maybe it's a bit too stiff for a

pocket, but I can see it work very well for a bigger bag, and maybe even

a jacket or some other outer garment.
