Ian Jackson: Why we ve voted No to CfD for Derril Water solar farm

- Green electricity from your mainstream supplier is a lie

- Ripple

- Contracts for Difference

- Ripple and CfD

- Voting No

This article has been originally posted on November 4, 2023, and has been updated (at the bottom) since.

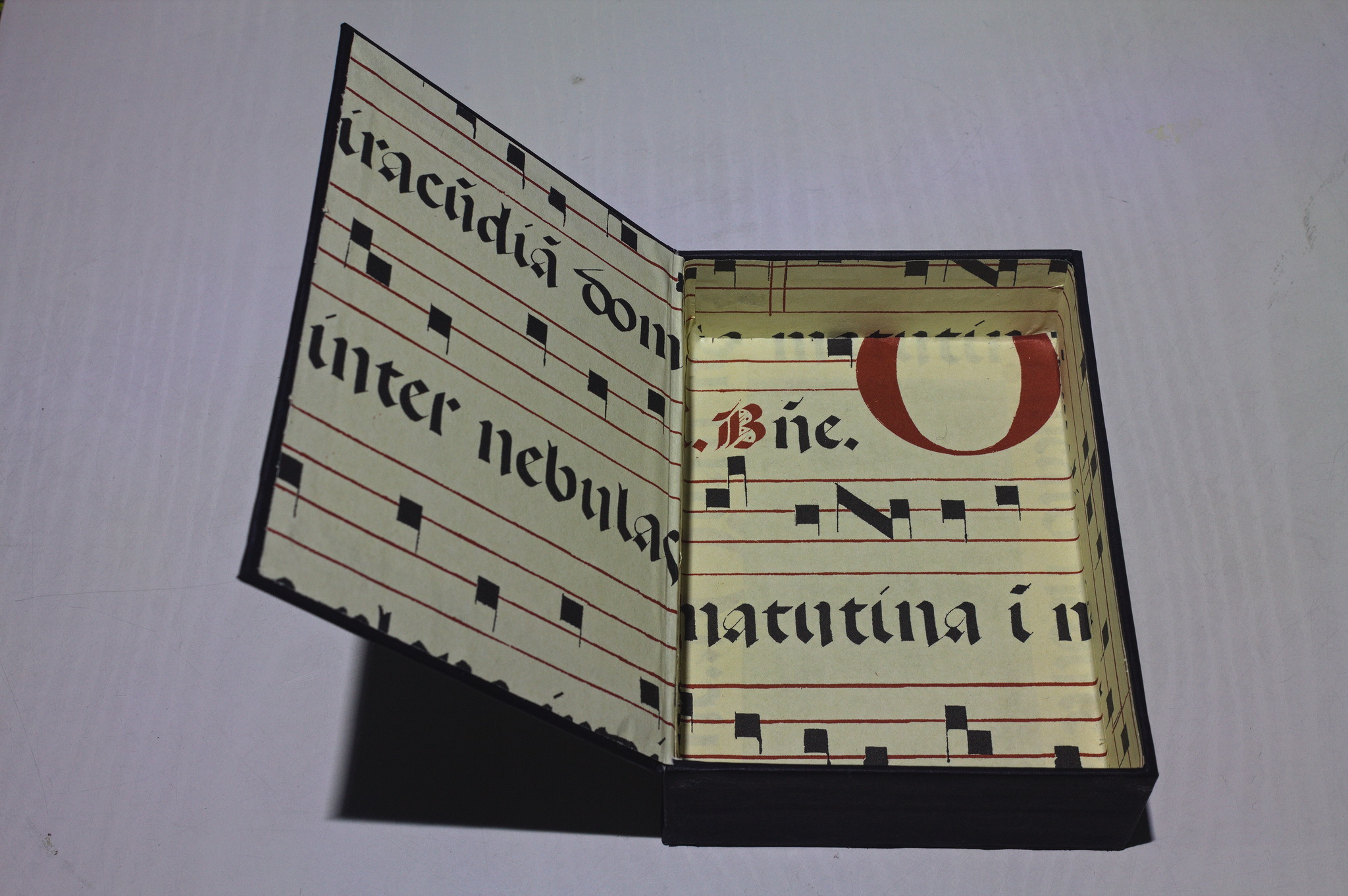

Thanks to All Saints Day, I ve just had a 5 days weekend. One of those

days I woke up and decided I absolutely needed a cartonnage box for the

cardboard and linocut piecepack I ve been working on for quite some

time.

I started drawing a plan with measures before breakfast, then decided to

change some important details, restarted from scratch, did a quick dig

through the bookbinding materials and settled on 2 mm cardboard for the

structure, black fabric-like paper for the outside and a scrap of paper

with a manuscript print for the inside.

Then we had the only day with no rain among the five, so some time was

spent doing things outside, but on the next day I quickly finished two

boxes, at two different heights.

The weather situation also meant that while I managed to take passable

pictures of the first stages of the box making in natural light, the

last few stages required some creative artificial lightning, even if it

wasn t that late in the evening. I need to build1 myself a

light box.

And then decided that since they are C6 sized, they also work well for

postcards or for other A6 pieces of paper, so I will probably need

to make another one when the piecepack set will be finally finished.

The original plan was to use a linocut of the piecepack suites as the

front cover; I don t currently have one ready, but will make it while

printing the rest of the piecepack set. One day :D

Thanks to All Saints Day, I ve just had a 5 days weekend. One of those

days I woke up and decided I absolutely needed a cartonnage box for the

cardboard and linocut piecepack I ve been working on for quite some

time.

I started drawing a plan with measures before breakfast, then decided to

change some important details, restarted from scratch, did a quick dig

through the bookbinding materials and settled on 2 mm cardboard for the

structure, black fabric-like paper for the outside and a scrap of paper

with a manuscript print for the inside.

Then we had the only day with no rain among the five, so some time was

spent doing things outside, but on the next day I quickly finished two

boxes, at two different heights.

The weather situation also meant that while I managed to take passable

pictures of the first stages of the box making in natural light, the

last few stages required some creative artificial lightning, even if it

wasn t that late in the evening. I need to build1 myself a

light box.

And then decided that since they are C6 sized, they also work well for

postcards or for other A6 pieces of paper, so I will probably need

to make another one when the piecepack set will be finally finished.

The original plan was to use a linocut of the piecepack suites as the

front cover; I don t currently have one ready, but will make it while

printing the rest of the piecepack set. One day :D

One of the boxes was temporarily used for the plastic piecepack I got

with the book, and that one works well, but since it s a set with

standard suites I think I will want to make another box, using some of

the paper with fleur-de-lis that I saw in the stash.

I ve also started to write detailed instructions: I will publish them as

soon as they are ready, and then either update this post, or they will

be mentioned in an additional post if I will have already made more

boxes in the meanwhile.

One of the boxes was temporarily used for the plastic piecepack I got

with the book, and that one works well, but since it s a set with

standard suites I think I will want to make another box, using some of

the paper with fleur-de-lis that I saw in the stash.

I ve also started to write detailed instructions: I will publish them as

soon as they are ready, and then either update this post, or they will

be mentioned in an additional post if I will have already made more

boxes in the meanwhile.

After making my Elastic Neck Top

I knew I wanted to make another one less constrained by the amount of

available fabric.

I had a big cut of white cotton voile, I bought some more swimsuit

elastic, and I also had a spool of n 100 sewing cotton, but then I

postponed the project for a while I was working on other things.

Then FOSDEM 2024 arrived, I was going to remote it, and I was working on

my Augusta Stays, but

I knew that in the middle of FOSDEM I risked getting to the stage where

I needed to leave the computer to try the stays on: not something really

compatible with the frenetic pace of a FOSDEM weekend, even one spent at

home.

I needed a backup project1, and this was perfect: I already

had everything I needed, the pattern and instructions were already on my

site (so I didn t need to take pictures while working), and it was

mostly a lot of straight seams, perfect while watching conference

videos.

So, on the Friday before FOSDEM I cut all of the pieces, then spent

three quarters of FOSDEM on the stays, and when I reached the point

where I needed to stop for a fit test I started on the top.

Like the first one, everything was sewn by hand, and one week after I

had started everything was assembled, except for the casings for the

elastic at the neck and cuffs, which required about 10 km of sewing, and

even if it was just a running stitch it made me want to reconsider my

lifestyle choices a few times: there was really no reason for me not

to do just those seams by machine in a few minutes.

Instead I kept sewing by hand whenever I had time for it, and on the

next weekend it was ready. We had a rare day of sun during the weekend,

so I wore my thermal underwear, some other layer, a scarf around my

neck, and went outside with my SO to have a batch of pictures taken

(those in the jeans posts, and others for a post I haven t written yet.

Have I mentioned I have a backlog?).

And then the top went into the wardrobe, and it will come out again when

the weather will be a bit warmer. Or maybe it will be used under the

Augusta Stays, since I don t have a 1700 chemise yet, but that requires

actually finishing them.

The pattern for this project was already online,

of course, but I ve added a picture of the casing to the relevant

section, and everything is as usual #FreeSoftWear.

After making my Elastic Neck Top

I knew I wanted to make another one less constrained by the amount of

available fabric.

I had a big cut of white cotton voile, I bought some more swimsuit

elastic, and I also had a spool of n 100 sewing cotton, but then I

postponed the project for a while I was working on other things.

Then FOSDEM 2024 arrived, I was going to remote it, and I was working on

my Augusta Stays, but

I knew that in the middle of FOSDEM I risked getting to the stage where

I needed to leave the computer to try the stays on: not something really

compatible with the frenetic pace of a FOSDEM weekend, even one spent at

home.

I needed a backup project1, and this was perfect: I already

had everything I needed, the pattern and instructions were already on my

site (so I didn t need to take pictures while working), and it was

mostly a lot of straight seams, perfect while watching conference

videos.

So, on the Friday before FOSDEM I cut all of the pieces, then spent

three quarters of FOSDEM on the stays, and when I reached the point

where I needed to stop for a fit test I started on the top.

Like the first one, everything was sewn by hand, and one week after I

had started everything was assembled, except for the casings for the

elastic at the neck and cuffs, which required about 10 km of sewing, and

even if it was just a running stitch it made me want to reconsider my

lifestyle choices a few times: there was really no reason for me not

to do just those seams by machine in a few minutes.

Instead I kept sewing by hand whenever I had time for it, and on the

next weekend it was ready. We had a rare day of sun during the weekend,

so I wore my thermal underwear, some other layer, a scarf around my

neck, and went outside with my SO to have a batch of pictures taken

(those in the jeans posts, and others for a post I haven t written yet.

Have I mentioned I have a backlog?).

And then the top went into the wardrobe, and it will come out again when

the weather will be a bit warmer. Or maybe it will be used under the

Augusta Stays, since I don t have a 1700 chemise yet, but that requires

actually finishing them.

The pattern for this project was already online,

of course, but I ve added a picture of the casing to the relevant

section, and everything is as usual #FreeSoftWear.

I had finished sewing my jeans, I had a scant 50 cm of elastic denim

left.

Unrelated to that, I had just finished drafting a vest with Valentina,

after the Cutters Practical Guide to the Cutting of Ladies Garments.

A new pattern requires a (wearable) mockup. 50 cm of leftover fabric

require a quick project. The decision didn t take a lot of time.

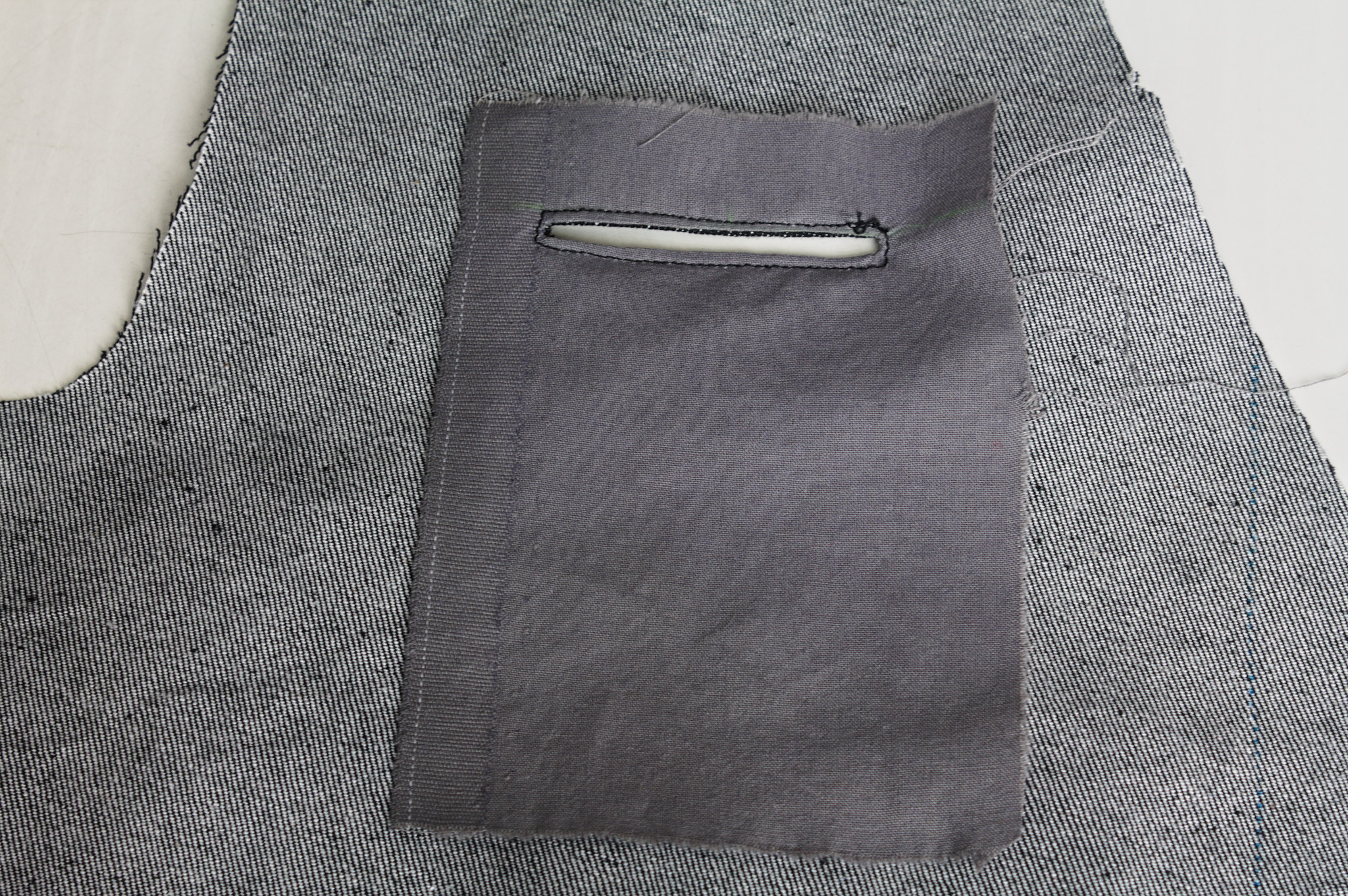

As a mockup, I kept things easy: single layer with no lining, some edges

finished with a topstitched hem and some with bias tape, and plain tape

on the fronts, to give more support to the buttons and buttonholes.

I did add pockets: not real welt ones (too much effort on denim), but

simple slits covered by flaps.

I had finished sewing my jeans, I had a scant 50 cm of elastic denim

left.

Unrelated to that, I had just finished drafting a vest with Valentina,

after the Cutters Practical Guide to the Cutting of Ladies Garments.

A new pattern requires a (wearable) mockup. 50 cm of leftover fabric

require a quick project. The decision didn t take a lot of time.

As a mockup, I kept things easy: single layer with no lining, some edges

finished with a topstitched hem and some with bias tape, and plain tape

on the fronts, to give more support to the buttons and buttonholes.

I did add pockets: not real welt ones (too much effort on denim), but

simple slits covered by flaps.

piece; there is a slit in the middle that has been finished with topstitching.To do them I marked the slits, then I cut two rectangles of pocketing fabric that should have been as wide as the slit + 1.5 cm (width of the pocket) + 3 cm (allowances) and twice the sum of as tall as I wanted the pocket to be plus 1 cm (space above the slit) + 1.5 cm (allowances). Then I put the rectangle on the right side of the denim, aligned so that the top edge was 2.5 cm above the slit, sewed 2 mm from the slit, cut, turned the pocketing to the wrong side, pressed and topstitched 2 mm from the fold to finish the slit.

other sides; it does not lay flat on the right side of the fabric because the finished slit (hidden in the picture) is pulling it.Then I turned the pocketing back to the right side, folded it in half, sewed the side and top seams with a small allowance, pressed and turned it again to the wrong side, where I sewed the seams again to make a french seam. And finally, a simple rectangular denim flap was topstitched to the front, covering the slits. I wasn t as precise as I should have been and the pockets aren t exactly the right size, but they will do to see if I got the positions right (I think that the breast one should be a cm or so lower, the waist ones are fine), and of course they are tiny, but that s to be expected from a waistcoat.

The other thing that wasn t exactly as expected is the back: the pattern

splits the bottom part of the back to give it sufficient spring over

the hips . The book is probably published in 1892, but I had already

found when drafting the foundation skirt that its idea of hips

includes a bit of structure. The enough steel to carry a book or a cup

of tea kind of structure. I should have expected a lot of spring, and

indeed that s what I got.

To fit the bottom part of the back on the limited amount of fabric I had

to piece it, and I suspect that the flat felled seam in the center is

helping it sticking out; I don t think it s exactly bad, but it is

a peculiar look.

Also, I had to cut the back on the fold, rather than having a seam in

the middle and the grain on a different angle.

Anyway, my next waistcoat project is going to have a linen-cotton lining

and silk fashion fabric, and I d say that the pattern is good enough

that I can do a few small fixes and cut it directly in the lining, using

it as a second mockup.

As for the wrinkles, there is quite a bit, but it looks something that

will be solved by a bit of lightweight boning in the side seams and in

the front; it will be seen in the second mockup and the finished

waistcoat.

As for this one, it s definitely going to get some wear as is, in casual

contexts. Except. Well, it s a denim waistcoat, right? With a very

different cut from the get a denim jacket and rip out the sleeves , but

still a denim waistcoat, right? The kind that you cover in patches,

right?

The other thing that wasn t exactly as expected is the back: the pattern

splits the bottom part of the back to give it sufficient spring over

the hips . The book is probably published in 1892, but I had already

found when drafting the foundation skirt that its idea of hips

includes a bit of structure. The enough steel to carry a book or a cup

of tea kind of structure. I should have expected a lot of spring, and

indeed that s what I got.

To fit the bottom part of the back on the limited amount of fabric I had

to piece it, and I suspect that the flat felled seam in the center is

helping it sticking out; I don t think it s exactly bad, but it is

a peculiar look.

Also, I had to cut the back on the fold, rather than having a seam in

the middle and the grain on a different angle.

Anyway, my next waistcoat project is going to have a linen-cotton lining

and silk fashion fabric, and I d say that the pattern is good enough

that I can do a few small fixes and cut it directly in the lining, using

it as a second mockup.

As for the wrinkles, there is quite a bit, but it looks something that

will be solved by a bit of lightweight boning in the side seams and in

the front; it will be seen in the second mockup and the finished

waistcoat.

As for this one, it s definitely going to get some wear as is, in casual

contexts. Except. Well, it s a denim waistcoat, right? With a very

different cut from the get a denim jacket and rip out the sleeves , but

still a denim waistcoat, right? The kind that you cover in patches,

right?

I was working on what looked like a good pattern for a pair of

jeans-shaped trousers, and I knew I wasn t happy with 200-ish g/m

cotton-linen for general use outside of deep summer, but I didn t have a

source for proper denim either (I had been low-key looking for it for a

long time).

Then one day I looked at an article I had saved about fabric shops that

sell technical fabric and while window-shopping on one I found that they

had a decent selection of denim in a decent weight.

I decided it was a sign, and decided to buy the two heaviest denim they

had: a 100% cotton, 355 g/m one

and a 97% cotton, 3% elastane at 385 g/m

1; the latter was a bit of compromise as I shouldn t really be

buying fabric adulterated with the Scourge of Humanity, but it was

heavier than the plain one, and I may be having a thing for tightly

fitting jeans, so this may be one of the very few woven fabric where I m

not morally opposed to its existence.

And, I d like to add, I resisted buying any of the very nice wools they

also seem to carry, other than just a couple of samples.

Since the shop only sold in 1 meter increments, and I needed about 1.5

meters for each pair of jeans, I decided to buy 3 meters per type, and

have enough to make a total of four pair of jeans. A bit more than I

strictly needed, maybe, but I was completely out of wearable day-to-day

trousers.

I was working on what looked like a good pattern for a pair of

jeans-shaped trousers, and I knew I wasn t happy with 200-ish g/m

cotton-linen for general use outside of deep summer, but I didn t have a

source for proper denim either (I had been low-key looking for it for a

long time).

Then one day I looked at an article I had saved about fabric shops that

sell technical fabric and while window-shopping on one I found that they

had a decent selection of denim in a decent weight.

I decided it was a sign, and decided to buy the two heaviest denim they

had: a 100% cotton, 355 g/m one

and a 97% cotton, 3% elastane at 385 g/m

1; the latter was a bit of compromise as I shouldn t really be

buying fabric adulterated with the Scourge of Humanity, but it was

heavier than the plain one, and I may be having a thing for tightly

fitting jeans, so this may be one of the very few woven fabric where I m

not morally opposed to its existence.

And, I d like to add, I resisted buying any of the very nice wools they

also seem to carry, other than just a couple of samples.

Since the shop only sold in 1 meter increments, and I needed about 1.5

meters for each pair of jeans, I decided to buy 3 meters per type, and

have enough to make a total of four pair of jeans. A bit more than I

strictly needed, maybe, but I was completely out of wearable day-to-day

trousers.

The shop sent everything very quickly, the courier took their time (oh,

well) but eventually delivered my fabric on a sunny enough day that I

could wash it and start as soon as possible on the first pair.

The pattern I did in linen was a bit too fitting, but I was afraid I had

widened it a bit too much, so I did the first pair in the 100% cotton

denim. Sewing them took me about a week of early mornings and late

afternoons, excluding the weekend, and my worries proved false: they

were mostly just fine.

The only bit that could have been a bit better is the waistband, which

is a tiny bit too wide on the back: it s designed to be so for comfort,

but the next time I should pull the elastic a bit more, so that it stays

closer to the body.

The shop sent everything very quickly, the courier took their time (oh,

well) but eventually delivered my fabric on a sunny enough day that I

could wash it and start as soon as possible on the first pair.

The pattern I did in linen was a bit too fitting, but I was afraid I had

widened it a bit too much, so I did the first pair in the 100% cotton

denim. Sewing them took me about a week of early mornings and late

afternoons, excluding the weekend, and my worries proved false: they

were mostly just fine.

The only bit that could have been a bit better is the waistband, which

is a tiny bit too wide on the back: it s designed to be so for comfort,

but the next time I should pull the elastic a bit more, so that it stays

closer to the body.

I wore those jeans daily for the rest of the week, and confirmed that

they were indeed comfortable and the pattern was ok, so on the next

Monday I started to cut the elastic denim.

I decided to cut and sew two pairs, assembly-line style, using the

shaped waistband for one of them and the straight one for the other one.

I started working on them on a Monday, and on that week I had a couple

of days when I just couldn t, plus I completely skipped sewing on the

weekend, but on Tuesday the next week one pair was ready and could be

worn, and the other one only needed small finishes.

I wore those jeans daily for the rest of the week, and confirmed that

they were indeed comfortable and the pattern was ok, so on the next

Monday I started to cut the elastic denim.

I decided to cut and sew two pairs, assembly-line style, using the

shaped waistband for one of them and the straight one for the other one.

I started working on them on a Monday, and on that week I had a couple

of days when I just couldn t, plus I completely skipped sewing on the

weekend, but on Tuesday the next week one pair was ready and could be

worn, and the other one only needed small finishes.

And I have to say, I m really, really happy with the ones with a shaped

waistband in elastic denim, as they fit even better than the ones with a

straight waistband gathered with elastic. Cutting it requires more

fabric, but I think it s definitely worth it.

But it will be a problem for a later time: right now three pairs of

jeans are a good number to keep in rotation, and I hope I won t have to

sew jeans for myself for quite some time.

And I have to say, I m really, really happy with the ones with a shaped

waistband in elastic denim, as they fit even better than the ones with a

straight waistband gathered with elastic. Cutting it requires more

fabric, but I think it s definitely worth it.

But it will be a problem for a later time: right now three pairs of

jeans are a good number to keep in rotation, and I hope I won t have to

sew jeans for myself for quite some time.

I think that the leftovers of plain denim will be used for a skirt or

something else, and as for the leftovers of elastic denim, well, there

aren t a lot left, but what else I did with them is the topic for

another post.

Thanks to the fact that they are all slightly different, I ve started to

keep track of the times when I wash each pair, and hopefully I will be

able to see whether the elastic denim is significantly less durable than

the regular, or the added weight compensates for it somewhat. I m not

sure I ll manage to remember about saving the data until they get worn,

but if I do it will be interesting to know.

Oh, and I say I ve finished working on jeans and everything, but I still

haven t sewn the belt loops to the third pair. And I m currently wearing

them. It s a sewist tradition, or something. :D

I think that the leftovers of plain denim will be used for a skirt or

something else, and as for the leftovers of elastic denim, well, there

aren t a lot left, but what else I did with them is the topic for

another post.

Thanks to the fact that they are all slightly different, I ve started to

keep track of the times when I wash each pair, and hopefully I will be

able to see whether the elastic denim is significantly less durable than

the regular, or the added weight compensates for it somewhat. I m not

sure I ll manage to remember about saving the data until they get worn,

but if I do it will be interesting to know.

Oh, and I say I ve finished working on jeans and everything, but I still

haven t sewn the belt loops to the third pair. And I m currently wearing

them. It s a sewist tradition, or something. :D

Update 28.02.2024 19:45 CET: There is now a blog entry at https://blog.opencollective.com/open-collective-official-statement-ocf-dissolution/ trying to discern the legal entities in the Open Collective ecosystem and recommending potential ways forward.

Update 28.02.2024 19:45 CET: There is now a blog entry at https://blog.opencollective.com/open-collective-official-statement-ocf-dissolution/ trying to discern the legal entities in the Open Collective ecosystem and recommending potential ways forward.

This covers basically all my known omissions from last update except spellchecking of the Description field.

The X- style prefixes for field names are now understood and handled. This means the language server now considers XC-Package-Type the same as Package-Type.

More diagnostics:

- Fields without values now trigger an error marker

- Duplicated fields now trigger an error marker

- Fields used in the wrong paragraph now trigger an error marker

- Typos in field names or values now trigger a warning marker. For field names, X- style prefixes are stripped before typo detection is done.

- The value of the Section field is now validated against a dataset of known sections and trigger a warning marker if not known.

The "on-save trim end of line whitespace" now works. I had a logic bug in the server side code that made it submit "no change" edits to the editor.

The language server now provides "hover" documentation for field names. There is a small screenshot of this below. Sadly, emacs does not support markdown or, if it does, it does not announce the support for markdown. For now, all the documentation is always in markdown format and the language server will tag it as either markdown or plaintext depending on the announced support.

The language server now provides quick fixes for some of the more trivial problems such as deprecated fields or typos of fields and values.

Added more known fields including the XS-Autobuild field for non-free packages along with a link to the relevant devref section in its hover doc.

Despite its very limited feature set, I feel editing debian/control in emacs is now a much more pleasant experience. Coming back to the features that Otto requested, the above covers a grand total of zero. Sorry, Otto. It is not you, it is me.

- Diagnostics or linting of basic issues.

- Completion suggestions for all known field names that I could think of and values for some fields.

- Folding ranges (untested). This feature enables the editor to "fold" multiple lines. It is often used with multi-line comments and that is the feature currently supported.

- On save, trim trailing whitespace at the end of lines (untested). Might not be registered correctly on the server end.

Notable omission at this time:

- An error marker for syntax errors.

- An error marker for missing a mandatory field like Package or Architecture. This also includes Standards-Version, which is admittedly mandatory by policy rather than tooling falling part.

- An error marker for adding Multi-Arch: same to an Architecture: all package.

- Error marker for providing an unknown value to a field with a set of known values. As an example, writing foo in Multi-Arch would trigger this one.

- Warning marker for using deprecated fields such as DM-Upload-Allowed, or when setting a field to its default value for fields like Essential. The latter rule only applies to selected fields and notably Multi-Arch: no does not trigger a warning.

- Info level marker if a field like Priority duplicates the value of the Source paragraph.

- No errors are raised if a field does not have a value.

- No errors are raised if a field is duplicated inside a paragraph.

- No errors are used if a field is used in the wrong paragraph.

- No spellchecking of the Description field.

- No understanding that Foo and X[CBS]-Foo are related. As an example, XC-Package-Type is completely ignored despite being the old name for Package-Type.

- Quick fixes to solve these problems... :)

Obviously, the setup should get easier over time. The first three bullet points should eventually get resolved by merges and upload meaning you end up with an apt install command instead of them. For the editor part, I would obviously love it if we can add snippets for editors to make the automatically pick up the language server when the relevant file is installed.

- Build and install the deb of the main branch of pygls from https://salsa.debian.org/debian/pygls The package is in NEW and hopefully this step will soon just be a regular apt install.

- Build and install the deb of the rts-locatable branch of my python-debian fork from https://salsa.debian.org/nthykier/python-debian There is a draft MR of it as well on the main repo.

- Build and install the deb of the lsp-support branch of debputy from https://salsa.debian.org/debian/debputy

- Configure your editor to run debputy lsp debian/control as the language server for debian/control. This is depends on your editor. I figured out how to do it for emacs (see below). I also found a guide for neovim at https://neovim.io/doc/user/lsp. Note that debputy can be run from any directory here. The debian/control is a reference to the file format and not a concrete file in this case.

(with-eval-after-load 'eglot

(add-to-list 'eglot-server-programs

'(debian-control-mode . ("debputy" "lsp" "debian/control"))))

We have a cabin out in the forest, and when I say "out in the forest" I mean "in a national forest subject to regulation by the US Forest Service" which means there's an extremely thick book describing the things we're allowed to do and (somewhat longer) not allowed to do. It's also down in the bottom of a valley surrounded by tall trees (the whole "forest" bit). There used to be AT&T copper but all that infrastructure burned down in a big fire back in 2021 and AT&T no longer supply new copper links, and Starlink isn't viable because of the whole "bottom of a valley surrounded by tall trees" thing along with regulations that prohibit us from putting up a big pole with a dish on top. Thankfully there's LTE towers nearby, so I'm simply using cellular data. Unfortunately my provider rate limits connections to video streaming services in order to push them down to roughly SD resolution. The easy workaround is just to VPN back to somewhere else, which in my case is just a Wireguard link back to San Francisco.

We have a cabin out in the forest, and when I say "out in the forest" I mean "in a national forest subject to regulation by the US Forest Service" which means there's an extremely thick book describing the things we're allowed to do and (somewhat longer) not allowed to do. It's also down in the bottom of a valley surrounded by tall trees (the whole "forest" bit). There used to be AT&T copper but all that infrastructure burned down in a big fire back in 2021 and AT&T no longer supply new copper links, and Starlink isn't viable because of the whole "bottom of a valley surrounded by tall trees" thing along with regulations that prohibit us from putting up a big pole with a dish on top. Thankfully there's LTE towers nearby, so I'm simply using cellular data. Unfortunately my provider rate limits connections to video streaming services in order to push them down to roughly SD resolution. The easy workaround is just to VPN back to somewhere else, which in my case is just a Wireguard link back to San Francisco. Just like the corset, I also needed a new pair of jeans.

Back when my body size changed drastically of course my jeans no longer

fit. While I was waiting for my size to stabilize I kept wearing them

with a somewhat tight belt, but it was ugly and somewhat uncomfortable.

When I had stopped changing a lot I tried to buy new ones in the same

model, and found out that I was too thin for the menswear jeans of that

shop. I could have gone back to wearing women s jeans, but I didn t want

to have to deal with the crappy fabric and short pockets, so I basically

spent a few years wearing mostly skirts, and oversized jeans when I

really needed trousers.

Meanwhile, I had drafted a jeans pattern for my SO, which we had

planned to make in technical fabric, but ended up being made in a

cotton-wool mystery mix for winter and in linen-cotton for summer, and

the technical fabric version was no longer needed (yay for natural

fibres!)

It was clear what the solution to my jeans problems would have been, I

just had to stop getting distracted by other projects and draft a new

pattern using a womanswear block instead of a menswear one.

Which, in January 2024 I finally did, and I believe it took a bit less

time than the previous one, even if it had all of the same fiddly

pieces.

I already had a cut of the same cotton-linen I had used for my SO,

except in black, and used it to make the pair this post is about.

The parametric pattern is of course online, as #FreeSoftWear, at the

usual place.

This time it was faster, since I didn t have to write step-by-step

instructions, as they are exactly the same as the other pattern.

Just like the corset, I also needed a new pair of jeans.

Back when my body size changed drastically of course my jeans no longer

fit. While I was waiting for my size to stabilize I kept wearing them

with a somewhat tight belt, but it was ugly and somewhat uncomfortable.

When I had stopped changing a lot I tried to buy new ones in the same

model, and found out that I was too thin for the menswear jeans of that

shop. I could have gone back to wearing women s jeans, but I didn t want

to have to deal with the crappy fabric and short pockets, so I basically

spent a few years wearing mostly skirts, and oversized jeans when I

really needed trousers.

Meanwhile, I had drafted a jeans pattern for my SO, which we had

planned to make in technical fabric, but ended up being made in a

cotton-wool mystery mix for winter and in linen-cotton for summer, and

the technical fabric version was no longer needed (yay for natural

fibres!)

It was clear what the solution to my jeans problems would have been, I

just had to stop getting distracted by other projects and draft a new

pattern using a womanswear block instead of a menswear one.

Which, in January 2024 I finally did, and I believe it took a bit less

time than the previous one, even if it had all of the same fiddly

pieces.

I already had a cut of the same cotton-linen I had used for my SO,

except in black, and used it to make the pair this post is about.

The parametric pattern is of course online, as #FreeSoftWear, at the

usual place.

This time it was faster, since I didn t have to write step-by-step

instructions, as they are exactly the same as the other pattern.

Making also went smoothly, and the result was fitting. Very fitting. A

big too fitting, and the standard bum adjustment of the back was just

enough for what apparently still qualifies as a big bum, so I adjusted

the pattern to be able to add a custom amount of ease in a few places.

But at least I had a pair of jeans-shaped trousers that fit!

Except, at 200 g/m I can t say that fabric is the proper weight for a

pair of trousers, and I may have looked around online1 for

some denim, and, well, it s 2024, so my no-fabric-buy 2023 has not been

broken, right?

Let us just say that there may be other jeans-related posts in the near

future.

Making also went smoothly, and the result was fitting. Very fitting. A

big too fitting, and the standard bum adjustment of the back was just

enough for what apparently still qualifies as a big bum, so I adjusted

the pattern to be able to add a custom amount of ease in a few places.

But at least I had a pair of jeans-shaped trousers that fit!

Except, at 200 g/m I can t say that fabric is the proper weight for a

pair of trousers, and I may have looked around online1 for

some denim, and, well, it s 2024, so my no-fabric-buy 2023 has not been

broken, right?

Let us just say that there may be other jeans-related posts in the near

future.

In late 2022 I prepared a batch of drawstring backpacks in cotton as

reusable wrappers for Christmas gifts; however I didn t know what cord

to use, didn t want to use paracord, and couldn t find anything that

looked right in the local shops.

With Christmas getting dangerously closer, I visited a craft materials

website for unrelated reasons, found out that they sold macrame

cords, and panic-bought a few types in the hope that at least one would

work for the backpacks.

I got lucky, and my first choice fitted just fine, and I was able to

finish the backpacks in time for the holidays.

And then I had a box full of macrame cords in various sizes and types

that weren t the best match for the drawstring in a backpack, and no

real use for them.

I don t think I had ever done macrame, but I have made friendship

bracelets in primary school, and a few Friendship Bracelets, But For

Real Men So We Call Them Survival Bracelets(TM) more recently, so I

didn t bother reading instructions or tutorials online, I just grabbed

the Ashley Book of Knots to refresh myself on the knots used, and

decided to make myself a small bag for an A6 book.

I choose one of the thin, ~3 mm cords, Tre Sfere Macram Barbante, of

which there was plenty, so that I could stumble around with no real plan.

In late 2022 I prepared a batch of drawstring backpacks in cotton as

reusable wrappers for Christmas gifts; however I didn t know what cord

to use, didn t want to use paracord, and couldn t find anything that

looked right in the local shops.

With Christmas getting dangerously closer, I visited a craft materials

website for unrelated reasons, found out that they sold macrame

cords, and panic-bought a few types in the hope that at least one would

work for the backpacks.

I got lucky, and my first choice fitted just fine, and I was able to

finish the backpacks in time for the holidays.

And then I had a box full of macrame cords in various sizes and types

that weren t the best match for the drawstring in a backpack, and no

real use for them.

I don t think I had ever done macrame, but I have made friendship

bracelets in primary school, and a few Friendship Bracelets, But For

Real Men So We Call Them Survival Bracelets(TM) more recently, so I

didn t bother reading instructions or tutorials online, I just grabbed

the Ashley Book of Knots to refresh myself on the knots used, and

decided to make myself a small bag for an A6 book.

I choose one of the thin, ~3 mm cords, Tre Sfere Macram Barbante, of

which there was plenty, so that I could stumble around with no real plan.

I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

I started by looping 5 m of cord, making iirc 2 rounds of a loop about

the right size to go around the book with a bit of ease, then used the

ends as filler cords for a handle, wrapped them around the loop and

worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I

had no idea how much I was going to need (spoiler: I successfully got it

wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and

then I started knotting without a plan or anything, alternating between

hitches and square knots, sometimes close together and sometimes leaving

some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and

then, until the bag was long enough for the book, then I closed the

bottom by taking one cord from the front and the corresponding on the

back, knotting them together (I don t remember how) and finally I made a

rigid triangle of tight square knots with all of the cords,

progressively leaving out a cord from each side, and cutting it in a

fringe.

I then measured the remaining cords, and saw that the shortest ones were

about a meter long, but the longest ones were up to 3 meters, I could

have cut them much shorter at the beginning (and maybe added a couple

more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few

months.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

Now the result is functional, but I have to admit it is somewhat ugly:

not as much for the lack of a pattern (that I think came out quite fine)

but because of how irregular the knots are; I m not confident that the

next time I will be happy with their regularity, either, but I hope I

will improve, and that s one important thing.

And the other important thing is: I enjoyed making this, even if I kept

interrupting the work, and I think that there may be some other macrame

in my future.

IEEE Software recently announced that a paper that I co-authored with Dr. Stefano Zacchiroli has recently been awarded their Best Paper award:

IEEE Software recently announced that a paper that I co-authored with Dr. Stefano Zacchiroli has recently been awarded their Best Paper award:

Although it is possible to increase confidence in Free and Open Source Software (FOSS) by reviewing its source code, trusting code is not the same as trusting its executable counterparts. These are typically built and distributed by third-party vendors with severe security consequences if their supply chains are compromised. In this paper, we present reproducible builds, an approach that can determine whether generated binaries correspond with their original source code. We first define the problem and then provide insight into the challenges of making real-world software build in a "reproducible" manner that is, when every build generates bit-for-bit identical results. Through the experience of the Reproducible Builds project making the Debian Linux distribution reproducible, we also describe the affinity between reproducibility and quality assurance (QA).According to Google Scholar, the paper has accumulated almost 40 citations since publication. The full text of the paper can be found in PDF format.

Now that I m freelancing, I need to

actually track my time, which is something I ve had the luxury of not having

to do before. That meant something of a rethink of the way I ve been

keeping track of my to-do list. Up to now that was a combination of things

like the bug lists for the projects I m working on at the moment, whatever

task tracking system Canonical was using at the moment (Jira when I left),

and a giant flat text file in which I recorded logbook-style notes of what

I d done each day plus a few extra notes at the bottom to remind myself of

particularly urgent tasks. I could have started manually adding times to

each logbook entry, but ugh, let s not.

In general, I had the following goals (which were a bit reminiscent of my

address book):

Now that I m freelancing, I need to

actually track my time, which is something I ve had the luxury of not having

to do before. That meant something of a rethink of the way I ve been

keeping track of my to-do list. Up to now that was a combination of things

like the bug lists for the projects I m working on at the moment, whatever

task tracking system Canonical was using at the moment (Jira when I left),

and a giant flat text file in which I recorded logbook-style notes of what

I d done each day plus a few extra notes at the bottom to remind myself of

particularly urgent tasks. I could have started manually adding times to

each logbook entry, but ugh, let s not.

In general, I had the following goals (which were a bit reminiscent of my

address book):

vim-based

versions of Org mode in the past I ve found they haven t really fitted my

brain very well.

Taskwarrior and Timewarrior

One of the other Freexian collaborators mentioned

Taskwarrior and

Timewarrior, so I had a look at those.

The basic idea of Taskwarrior is that you have a task command that tracks

each task as a blob of JSON and provides subcommands to let you add, modify,

and remove tasks with a minimum of friction. task add adds a task, and

you can add metadata like project:Personal (I always make sure every task

has a project, for ease of filtering). Just running task shows you a task

list sorted by Taskwarrior s idea of urgency, with an ID for each task, and

there are various other reports with different filtering and verbosity.

task <id> annotate lets you attach more information to a task. task <id>

done marks it as done. So far so good, so a redacted version of my to-do

list looks like this:

$ task ls

ID A Project Tags Description

17 Freexian Add Incus support to autopkgtest [2]

7 Columbiform Figure out Lloyds online banking [1]

2 Debian Fix troffcvt for groff 1.23.0 [1]

11 Personal Replace living room curtain rail

task all project:Personal and

it d show me both pending and completed tasks in that project, and that all

the data was stored in ~/.task - though I have to say that there are

enough reporting bells and whistles that I haven t needed to poke around

manually. In combination with the regular backups that I do anyway (you do

too, right?), this gave me enough confidence to abandon my previous

text-file logbook approach.

Next was time tracking. Timewarrior integrates with Taskwarrior, albeit in

an only semi-packaged way, and

it was easy enough to set that up. Now I can do:

$ task 25 start

Starting task 00a9516f 'Write blog post about task tracking'.

Started 1 task.

Note: '"Write blog post about task tracking"' is a new tag.

Tracking Columbiform "Write blog post about task tracking"

Started 2024-01-10T11:28:38

Current 38

Total 0:00:00

You have more urgent tasks.

Project 'Columbiform' is 25% complete (3 of 4 tasks remaining).

task active to find the ID, then task

<id> stop. Timewarrior does the tedious stopwatch business for me, and I

can manually enter times if I forget to start/stop a task. Then the really

useful bit: I can do something like timew summary :month <name-of-client>

and it tells me how much to bill that client for this month. Perfect.

I also started using VIT to simplify

the day-to-day flow a little, which means I m normally just using one or two

keystrokes rather than typing longer commands. That isn t really necessary

from my point of view, but it does save some time.

Android integration

I left Android integration for a bit later since it wasn t essential. When

I got round to it, I have to say that it felt a bit clumsy, but it did

eventually work.

The first step was to set up a

taskserver. Most

of the setup procedure was OK, but I wanted to use Let s Encrypt to minimize

the amount of messing around with CAs I had to do. Getting this to work

involved hitting things with sticks a bit, and there s still a local CA

involved for client certificates. What I ended up with was a certbot

setup with the webroot authenticator and a custom deploy hook as follows

(with cert_name replaced by a DNS name in my house domain):

#! /bin/sh

set -eu

cert_name=taskd.example.org

found=false

for domain in $RENEWED_DOMAINS; do

case "$domain" in

$cert_name)

found=:

;;

esac

done

$found exit 0

install -m 644 "/etc/letsencrypt/live/$cert_name/fullchain.pem" \

/var/lib/taskd/pki/fullchain.pem

install -m 640 -g Debian-taskd "/etc/letsencrypt/live/$cert_name/privkey.pem" \

/var/lib/taskd/pki/privkey.pem

systemctl restart taskd.service

/etc/taskd/config (server.crl.pem and

ca.cert.pem were generated using the documented taskserver setup procedure):

server.key=/var/lib/taskd/pki/privkey.pem

server.cert=/var/lib/taskd/pki/fullchain.pem

server.crl=/var/lib/taskd/pki/server.crl.pem

ca.cert=/var/lib/taskd/pki/ca.cert.pem

taskd.ca on my laptop to

/usr/share/ca-certificates/mozilla/ISRG_Root_X1.crt and otherwise follow

the client setup instructions, run task sync init to get things started,

and then task sync every so often to sync changes between my laptop and

the taskserver.

I used TaskWarrior

Mobile

as the client. I have to say I wouldn t want to use that client as my

primary task tracking interface: the setup procedure is clunky even beyond

the necessity of copying a client certificate around, it expects you to give

it a .taskrc rather than having a proper settings interface for that, and

it only seems to let you add a task if you specify a due date for it. It

also lacks Timewarrior integration, so I can only really use it when I don t

care about time tracking, e.g. personal tasks. But that s really all I

need, so it meets my minimum requirements.

Next?

Considering this is literally the first thing I tried, I have to say I m

pretty happy with it. There are a bunch of optional extras I haven t tried

yet, but in general it kind of has the vim nature for me: if I need

something it s very likely to exist or easy enough to build, but the

features I don t use don t get in my way.

I wouldn t recommend any of this to somebody who didn t already spend most

of their time in a terminal - but I do. I m glad people have gone to all

the effort to build this so I didn t have to.

This year was hard from a personal and work point of view, which impacted the amount of Free Software bits I ended up doing - even when I had the time I often wasn t in the right head space to make progress on things. However writing this annual recap up has been a useful exercise, as I achieved more than I realised. For previous years see 2019, 2020, 2021 + 2022.

This year was hard from a personal and work point of view, which impacted the amount of Free Software bits I ended up doing - even when I had the time I often wasn t in the right head space to make progress on things. However writing this annual recap up has been a useful exercise, as I achieved more than I realised. For previous years see 2019, 2020, 2021 + 2022.

CW for body size change mentions

I needed a corset, badly.

Years ago I had a chance to have my measurements taken by a former

professional corset maker and then a lesson in how to draft an underbust

corset, and that lead to me learning how nice wearing a well-fitted

corset feels.

Later I tried to extend that pattern up for a midbust corset, with

success.

And then my body changed suddenly, and I was no longer able to wear

either of those, and after a while I started missing them.

Since my body was still changing (if no longer drastically so), and I

didn t want to use expensive materials for something that had a risk of

not fitting after too little time, I decided to start by making myself a

summer lightweight corset in aida cloth and plastic boning (for which I

had already bought materials). It fitted, but not as well as the first

two ones, and I ve worn it quite a bit.

I still wanted back the feeling of wearing a comfy, heavy contraption of

coutil and steel, however.

After a lot of procrastination I redrafted a new pattern, scrapped

everything, tried again, had my measurements taken by a dressmaker

[#dressmaker], put them in the draft, cut a first mock-up in cheap

cotton, fixed the position of a seam, did a second mock-up in denim

[#jeans] from an old pair of jeans, and then cut into the cheap

herringbone coutil I was planning to use.

And that s when I went to see which one of the busks in my stash would

work, and realized that I had used a wrong vertical measurement and the

front of the corset was way too long for a midbust corset.

CW for body size change mentions

I needed a corset, badly.

Years ago I had a chance to have my measurements taken by a former

professional corset maker and then a lesson in how to draft an underbust

corset, and that lead to me learning how nice wearing a well-fitted

corset feels.

Later I tried to extend that pattern up for a midbust corset, with

success.

And then my body changed suddenly, and I was no longer able to wear

either of those, and after a while I started missing them.

Since my body was still changing (if no longer drastically so), and I

didn t want to use expensive materials for something that had a risk of

not fitting after too little time, I decided to start by making myself a

summer lightweight corset in aida cloth and plastic boning (for which I

had already bought materials). It fitted, but not as well as the first

two ones, and I ve worn it quite a bit.

I still wanted back the feeling of wearing a comfy, heavy contraption of

coutil and steel, however.

After a lot of procrastination I redrafted a new pattern, scrapped

everything, tried again, had my measurements taken by a dressmaker

[#dressmaker], put them in the draft, cut a first mock-up in cheap

cotton, fixed the position of a seam, did a second mock-up in denim

[#jeans] from an old pair of jeans, and then cut into the cheap

herringbone coutil I was planning to use.

And that s when I went to see which one of the busks in my stash would

work, and realized that I had used a wrong vertical measurement and the

front of the corset was way too long for a midbust corset.

Luckily I also had a few longer busks, I basted one to the denim mock up

and tried to wear it for a few hours, to see if it was too long to be

comfortable. It was just a bit, on the bottom, which could be easily

fixed with the Power Tools1.

Except, the more I looked at it the more doing this felt wrong: what I

needed most was a midbust corset, not an overbust one, which is what

this was starting to be.

I could have trimmed it down, but I knew that I also wanted this corset

to be a wearable mockup for the pattern, to refine it and have it

available for more corsets. And I still had more than half of the cheap

coutil I was using, so I decided to redo the pattern and cut new panels.

And this is where the or two comes in: I m not going to waste the

overbust panels: I had been wanting to learn some techniques to make

corsets with a fashion fabric layer, rather than just a single layer of

coutil, and this looks like an excellent opportunity for that, together

with a piece of purple silk that I know I have in the stash. This will

happen later, however, first I m giving priority to the underbust.

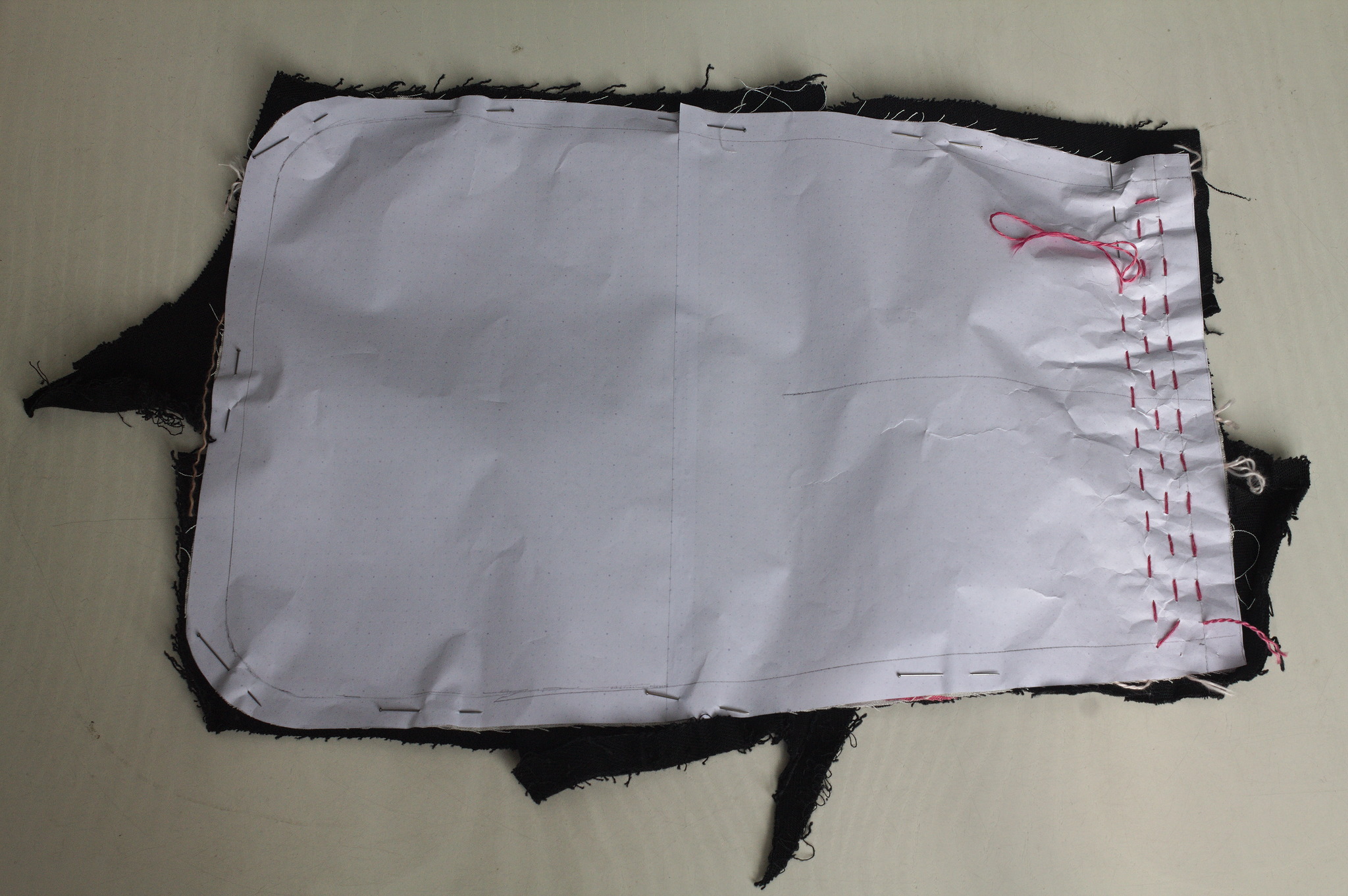

Anyway, a second set of panels was cut, all the seam lines marked with

tailor tacks, and I started sewing by inserting the busk.

And then realized that the pre-made boning channel tape I had was too

narrow for the 10 mm spiral steel I had plenty of. And that the 25 mm

twill tape was also too narrow for a double boning channel.

On the other hand, the 18 mm twill tape I had used for the waist tape

was good for a single channel, so I decided to put a single bone on each

seam, and then add another piece of boning in the middle of each panel.

Since I m making external channels, making them in self fabric would

have probably looked better, but I no longer had enough fabric, because

of the cutting mishap, and anyway this is going to be a strictly

underwear only corset, so it s not a big deal.

Once the boning channel situation was taken care of, everything else

proceeded quite smoothly and I was able to finish the corset during the

Christmas break, enlisting again my SO to take care of the flat steel

boning while I cut the spiral steels myself with wire cutters.

Luckily I also had a few longer busks, I basted one to the denim mock up

and tried to wear it for a few hours, to see if it was too long to be

comfortable. It was just a bit, on the bottom, which could be easily

fixed with the Power Tools1.

Except, the more I looked at it the more doing this felt wrong: what I

needed most was a midbust corset, not an overbust one, which is what

this was starting to be.

I could have trimmed it down, but I knew that I also wanted this corset

to be a wearable mockup for the pattern, to refine it and have it

available for more corsets. And I still had more than half of the cheap

coutil I was using, so I decided to redo the pattern and cut new panels.

And this is where the or two comes in: I m not going to waste the

overbust panels: I had been wanting to learn some techniques to make

corsets with a fashion fabric layer, rather than just a single layer of

coutil, and this looks like an excellent opportunity for that, together

with a piece of purple silk that I know I have in the stash. This will

happen later, however, first I m giving priority to the underbust.

Anyway, a second set of panels was cut, all the seam lines marked with

tailor tacks, and I started sewing by inserting the busk.

And then realized that the pre-made boning channel tape I had was too

narrow for the 10 mm spiral steel I had plenty of. And that the 25 mm

twill tape was also too narrow for a double boning channel.

On the other hand, the 18 mm twill tape I had used for the waist tape

was good for a single channel, so I decided to put a single bone on each

seam, and then add another piece of boning in the middle of each panel.

Since I m making external channels, making them in self fabric would

have probably looked better, but I no longer had enough fabric, because

of the cutting mishap, and anyway this is going to be a strictly

underwear only corset, so it s not a big deal.

Once the boning channel situation was taken care of, everything else

proceeded quite smoothly and I was able to finish the corset during the

Christmas break, enlisting again my SO to take care of the flat steel

boning while I cut the spiral steels myself with wire cutters.

I could have been a bit more precise with the binding, as it doesn t

align precisely at the front edge, but then again, it s underwear,

nobody other than me and everybody who reads this post is going to see

it and I was in a hurry to see it finished. I will be more careful with

the next one.

I could have been a bit more precise with the binding, as it doesn t

align precisely at the front edge, but then again, it s underwear,

nobody other than me and everybody who reads this post is going to see

it and I was in a hurry to see it finished. I will be more careful with

the next one.

I also think that I haven t been careful enough when pressing the seams

and applying the tape, and I ve lost about a cm of width per part, so

I m using a lacing gap that is a bit wider than I planned for, but that

may change as the corset gets worn, and is still within tolerance.

Also, on the morning after I had finished the corset I woke up and

realized that I had forgotten to add garter tabs at the bottom edge.

I don t know whether I will ever use them, but I wanted the option, so

maybe I ll try to add them later on, especially if I can do it without

undoing the binding.

The next step would have been flossing, which I proceeded to postpone

until I ve worn the corset for a while: not because there is any reason

for it, but because I still don t know how I want to do it :)

What was left was finishing and uploading the pattern and instructions,

that are now on my sewing pattern website

as #FreeSoftWear, and finally I could post this on the blog.

I also think that I haven t been careful enough when pressing the seams

and applying the tape, and I ve lost about a cm of width per part, so

I m using a lacing gap that is a bit wider than I planned for, but that

may change as the corset gets worn, and is still within tolerance.

Also, on the morning after I had finished the corset I woke up and

realized that I had forgotten to add garter tabs at the bottom edge.

I don t know whether I will ever use them, but I wanted the option, so

maybe I ll try to add them later on, especially if I can do it without

undoing the binding.

The next step would have been flossing, which I proceeded to postpone

until I ve worn the corset for a while: not because there is any reason

for it, but because I still don t know how I want to do it :)

What was left was finishing and uploading the pattern and instructions,

that are now on my sewing pattern website

as #FreeSoftWear, and finally I could post this on the blog.

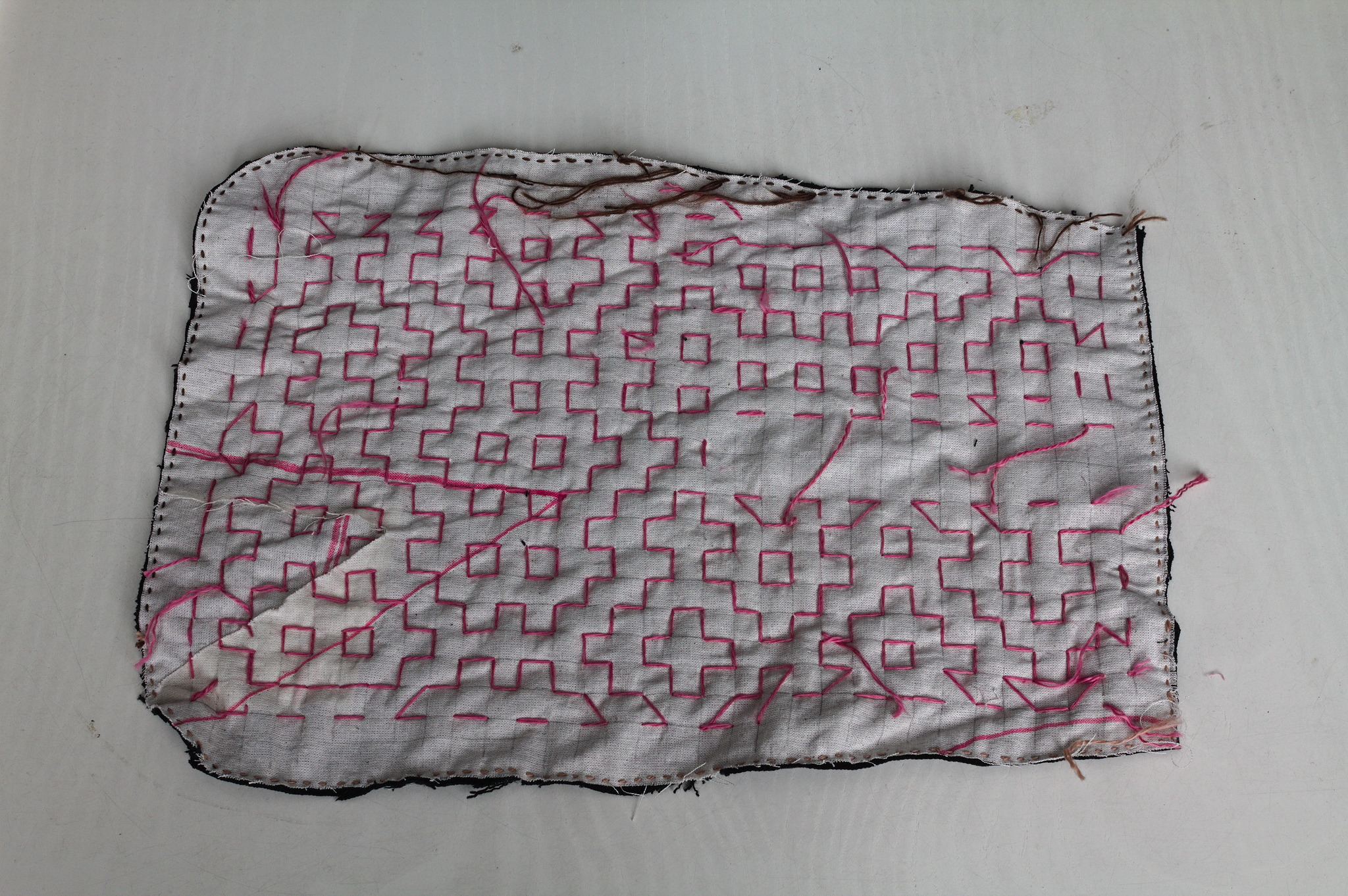

Lately I ve seen people on the internet talking about victorian crazy

quilting. Years ago I had watched a Numberphile video about Hitomezashi

Stitch Patterns based on numbers, words or randomness.

Few weeks ago I had cut some fabric piece out of an old pair of jeans

and I had a lot of scraps that were too small to do anything useful on

their own.

It easy to see where this can go, right?

Lately I ve seen people on the internet talking about victorian crazy

quilting. Years ago I had watched a Numberphile video about Hitomezashi

Stitch Patterns based on numbers, words or randomness.

Few weeks ago I had cut some fabric piece out of an old pair of jeans

and I had a lot of scraps that were too small to do anything useful on

their own.

It easy to see where this can go, right?

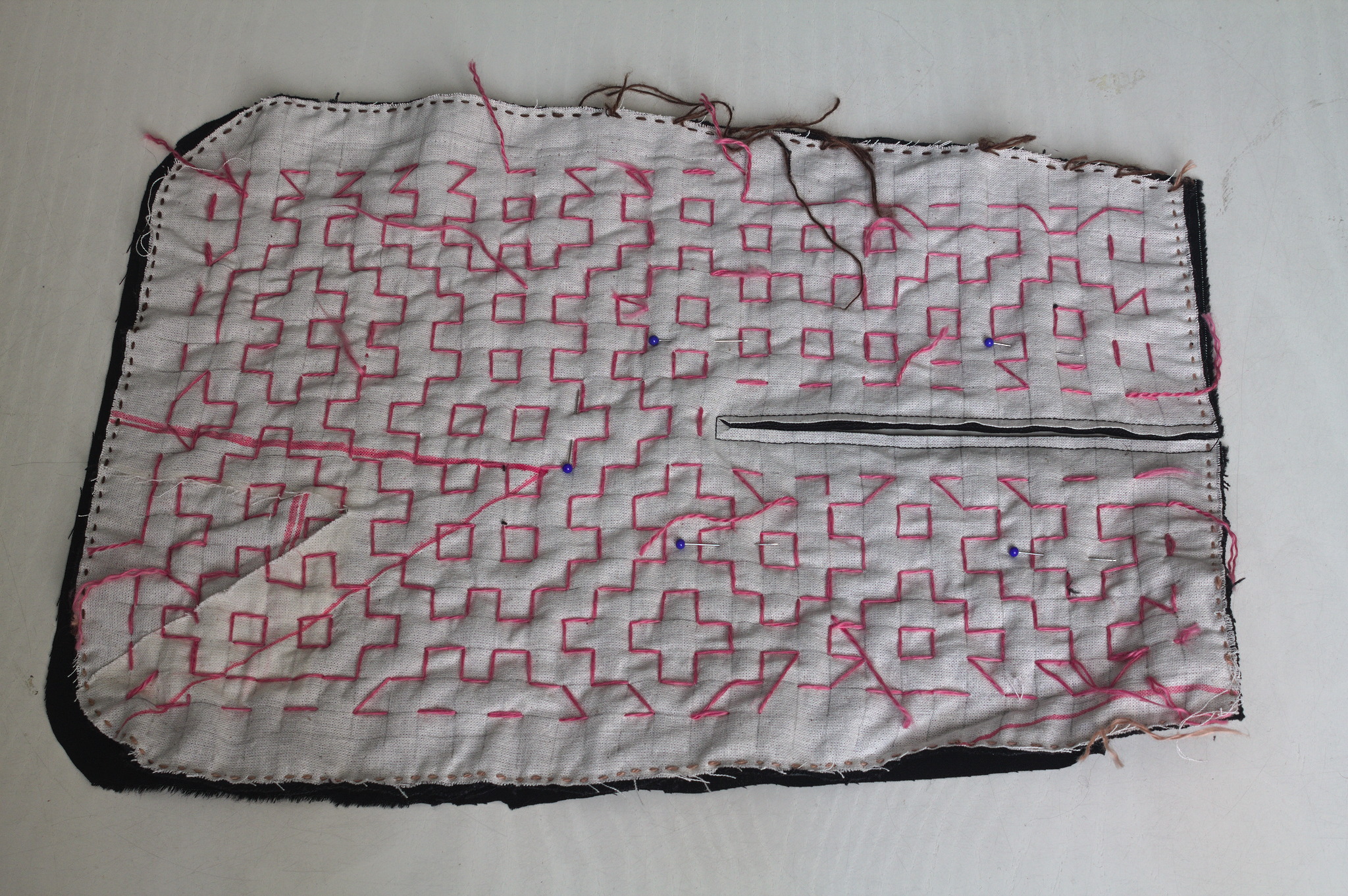

I cut a pocket shape out of old garment mockups (this required some

piecing), drew a square grid, arranged scraps of jeans to cover the

other side, kept everything together with a lot of pins, carefully

avoided basting anything, and started covering everything in sashiko /

hitomezashi stitches, starting each line with a stitch on the front or

the back of the work based on the result of:

I cut a pocket shape out of old garment mockups (this required some

piecing), drew a square grid, arranged scraps of jeans to cover the

other side, kept everything together with a lot of pins, carefully

avoided basting anything, and started covering everything in sashiko /

hitomezashi stitches, starting each line with a stitch on the front or

the back of the work based on the result of:

import random

random.choice(["front", "back"]) For the second piece I tried to use a piece of paper with the square

grid instead of drawing it on the fabric: it worked, mostly, I would not

do it again as removing the paper was more of a hassle than drawing the

lines in the first place. I suspected it, but had to try it anyway.

For the second piece I tried to use a piece of paper with the square

grid instead of drawing it on the fabric: it worked, mostly, I would not

do it again as removing the paper was more of a hassle than drawing the

lines in the first place. I suspected it, but had to try it anyway.

Then I added a lining from some plain black cotton from the stash; for

the slit I put the lining on the front right sides together, sewn

at 2 mm from the marked slit, cut it, turned the lining to the back

side, pressed and then topstitched as close as possible to the slit from

the front.

Then I added a lining from some plain black cotton from the stash; for

the slit I put the lining on the front right sides together, sewn

at 2 mm from the marked slit, cut it, turned the lining to the back

side, pressed and then topstitched as close as possible to the slit from

the front.

I bound everything with bias tape, adding herringbone tape loops at the

top to hang it from a belt (such as one made from the waistband of one

of the donor pair of jeans) and that was it.

I bound everything with bias tape, adding herringbone tape loops at the

top to hang it from a belt (such as one made from the waistband of one

of the donor pair of jeans) and that was it.

I like the way the result feels; maybe it s a bit too stiff for a

pocket, but I can see it work very well for a bigger bag, and maybe even

a jacket or some other outer garment.

I like the way the result feels; maybe it s a bit too stiff for a

pocket, but I can see it work very well for a bigger bag, and maybe even

a jacket or some other outer garment.

One of the knitting projects I m working on is a big bottom-up

triangular shawl in less-than-fingering weight yarn (NM 1/15): it feels

like a cloud should by all rights feel, and I have good expectations out

of it, but it s taking forever and a day.

And then one day last spring I started thinking in the general direction

of top-down shawls, and decided I couldn t wait until I had finished the

first one to see if I could design one.

For my first attempt I used an odd ball of 50% wool 50% plastic I had in

my stash and worked it on 12 mm tree trunks, and I quickly made

something between a scarf and a shawl that got some use during the

summer thunderstorms when temperatures got a bit lower, but not really

cold. I was happy with the shape, not with the exact position of the

increases, but I had ideas for improvements, so I just had to try

another time.

Digging through the stash I found four balls of Drops Alpaca in two

shades of grey: I had bought it with the intent to test its durability

in somewhat more demanding situations (such as gloves or even socks),

but then the LYS1 no longer carries it, so I might as well use it for

something a bit more one-off (and when I received the yarn it felt so

soft that doing something for the upper body looked like a better idea

anyway).

I decided to start working in garter stitch with the darker colour, then

some garter stitch in the lighter shade and to finish with yo / k2t

lace, to make the shawl sort of fade out.

The first half was worked relatively slowly through the summer, and then

when I reached the colour change I suddenly picked up working on it and

it was finished in a couple of weeks.

One of the knitting projects I m working on is a big bottom-up

triangular shawl in less-than-fingering weight yarn (NM 1/15): it feels

like a cloud should by all rights feel, and I have good expectations out

of it, but it s taking forever and a day.

And then one day last spring I started thinking in the general direction

of top-down shawls, and decided I couldn t wait until I had finished the

first one to see if I could design one.

For my first attempt I used an odd ball of 50% wool 50% plastic I had in

my stash and worked it on 12 mm tree trunks, and I quickly made

something between a scarf and a shawl that got some use during the

summer thunderstorms when temperatures got a bit lower, but not really

cold. I was happy with the shape, not with the exact position of the

increases, but I had ideas for improvements, so I just had to try

another time.

Digging through the stash I found four balls of Drops Alpaca in two

shades of grey: I had bought it with the intent to test its durability

in somewhat more demanding situations (such as gloves or even socks),

but then the LYS1 no longer carries it, so I might as well use it for

something a bit more one-off (and when I received the yarn it felt so

soft that doing something for the upper body looked like a better idea

anyway).

I decided to start working in garter stitch with the darker colour, then

some garter stitch in the lighter shade and to finish with yo / k2t

lace, to make the shawl sort of fade out.

The first half was worked relatively slowly through the summer, and then

when I reached the colour change I suddenly picked up working on it and

it was finished in a couple of weeks.

looks denser in a nice way, but the the lace border is scrunched up.Then I had doubts on whether I wanted to block it, since I liked the soft feel, but I decided to try it anyway: it didn t lose the feel, and the look is definitely better, even if it was my first attempt at blocking a shawl and I wasn t that good at it.

I m glad that I did it, however, as it s still soft and warm, but now

also looks nicer.

The pattern is of course online as #FreeSoftWear on my fiber craft

patterns website.

I m glad that I did it, however, as it s still soft and warm, but now

also looks nicer.

The pattern is of course online as #FreeSoftWear on my fiber craft

patterns website.

This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts In lieu of this, however, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) have spurred you into picking something up yourself

This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts In lieu of this, however, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) have spurred you into picking something up yourself

Books

Peter Watts: Blindsight (2006)

Peter Watts: Blindsight (2006) Reymer Banham: Los Angeles: The Architecture of Four Ecologies (2006)

Reymer Banham: Los Angeles: The Architecture of Four Ecologies (2006) Joanne McNeil: Lurking: How a Person Became a User (2020)

Joanne McNeil: Lurking: How a Person Became a User (2020) J. L. Carr: A Month in the Country (1980)

J. L. Carr: A Month in the Country (1980) Hilary Mantel: A Memoir of My Former Self: A Life in Writing (2023)

Hilary Mantel: A Memoir of My Former Self: A Life in Writing (2023) Adam Higginbotham: Midnight in Chernobyl (2019)

Adam Higginbotham: Midnight in Chernobyl (2019) Tony Judt: Postwar: A History of Europe Since 1945 (2005)

Tony Judt: Postwar: A History of Europe Since 1945 (2005) Tony Judt: Reappraisals: Reflections on the Forgotten Twentieth Century (2008)

Tony Judt: Reappraisals: Reflections on the Forgotten Twentieth Century (2008) Peter Apps: Show Me the Bodies: How We Let Grenfell Happen (2021)

Peter Apps: Show Me the Bodies: How We Let Grenfell Happen (2021) Joan Didion: Slouching Towards Bethlehem (1968)

Joan Didion: Slouching Towards Bethlehem (1968) Erik Larson: The Devil in the White City (2003)

Erik Larson: The Devil in the White City (2003)

Films Recent releases

By the influencers on the famous proprietary video platform1.

When I m crafting with no powertools I tend to watch videos, and this

autumn I ve seen a few in a row that were making red wool dresses, at

least one or two medieval kirtles. I don t remember which channels they

were, and I ve decided not to go back and look for them, at least for a

time.

By the influencers on the famous proprietary video platform1.

When I m crafting with no powertools I tend to watch videos, and this

autumn I ve seen a few in a row that were making red wool dresses, at

least one or two medieval kirtles. I don t remember which channels they

were, and I ve decided not to go back and look for them, at least for a

time.

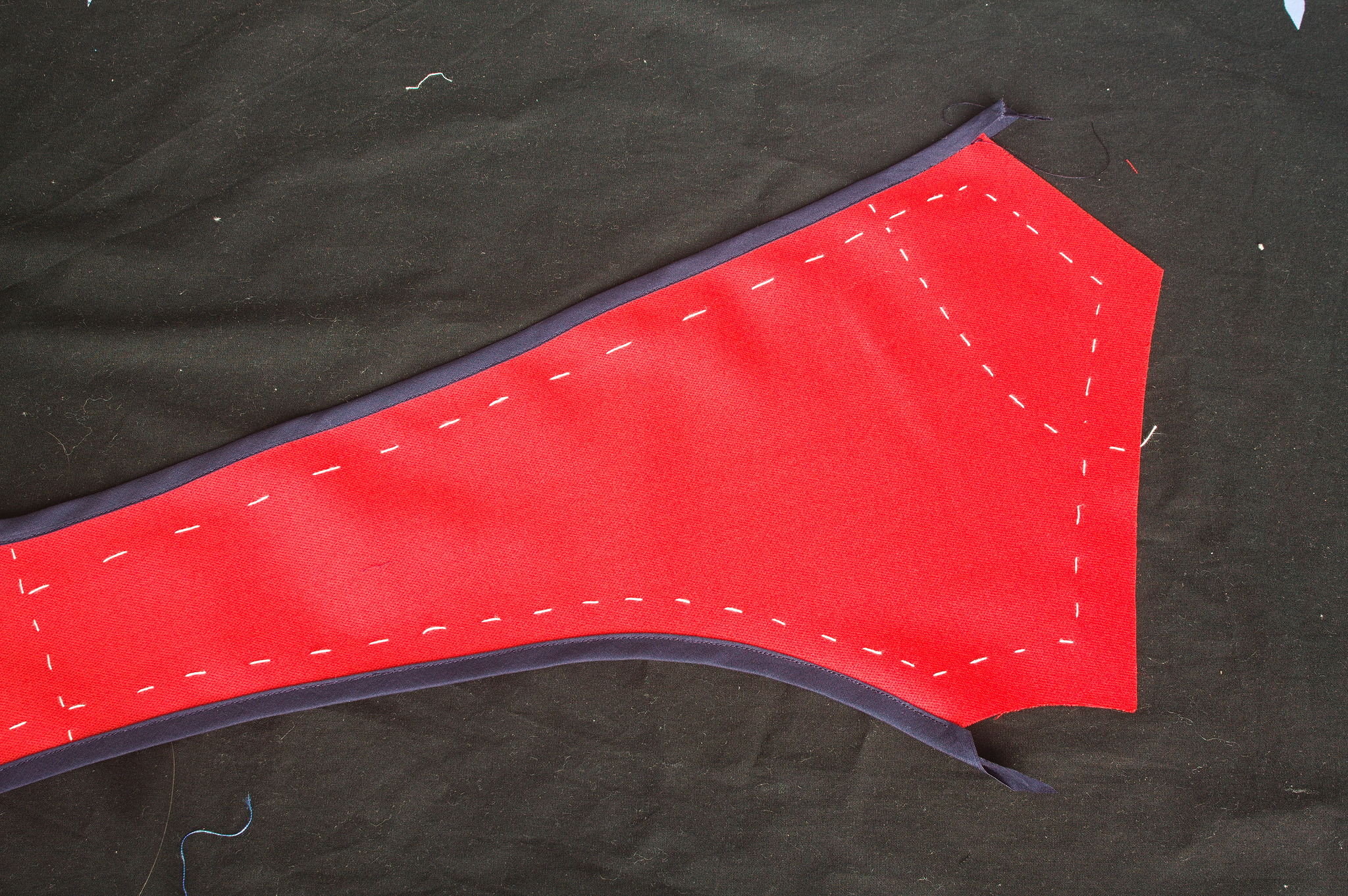

Anyway, my brain suddenly decided that I needed a red wool dress, fitted

enough to give some bust support. I had already made a dress that

satisfied the latter requirement

and I still had more than half of the red wool faille I ve used for the

Garibaldi blouse (still not blogged, but I will get to it), and this

time I wanted it to be ready for this winter.

While the pattern I was going to use is Victorian, it was designed for

underwear, and this was designed to be outerwear, so from the very start

I decided not to bother too much with any kind of historical details or

techniques.

Anyway, my brain suddenly decided that I needed a red wool dress, fitted

enough to give some bust support. I had already made a dress that

satisfied the latter requirement

and I still had more than half of the red wool faille I ve used for the

Garibaldi blouse (still not blogged, but I will get to it), and this

time I wanted it to be ready for this winter.

While the pattern I was going to use is Victorian, it was designed for

underwear, and this was designed to be outerwear, so from the very start

I decided not to bother too much with any kind of historical details or

techniques.

I knew that I didn t have enough fabric to add a flounce to the hem, as

in the cotton dress, but then I remembered that some time ago I fell for

a piece of fringed trim in black, white and red. I did a quick check

that the red wasn t clashing (it wasn t) and I knew I had a plan for the

hem decoration.

Then I spent a week finishing other projects, and the more I thought

about this dress, the more I was tempted to have spiral lacing at the

front rather than buttons, as a nod to the kirtle inspiration.

It may end up be a bit of a hassle, but if it is too much I can always

add a hidden zipper on a side seam, and only have to undo a bit of the

lacing around the neckhole to wear the dress.

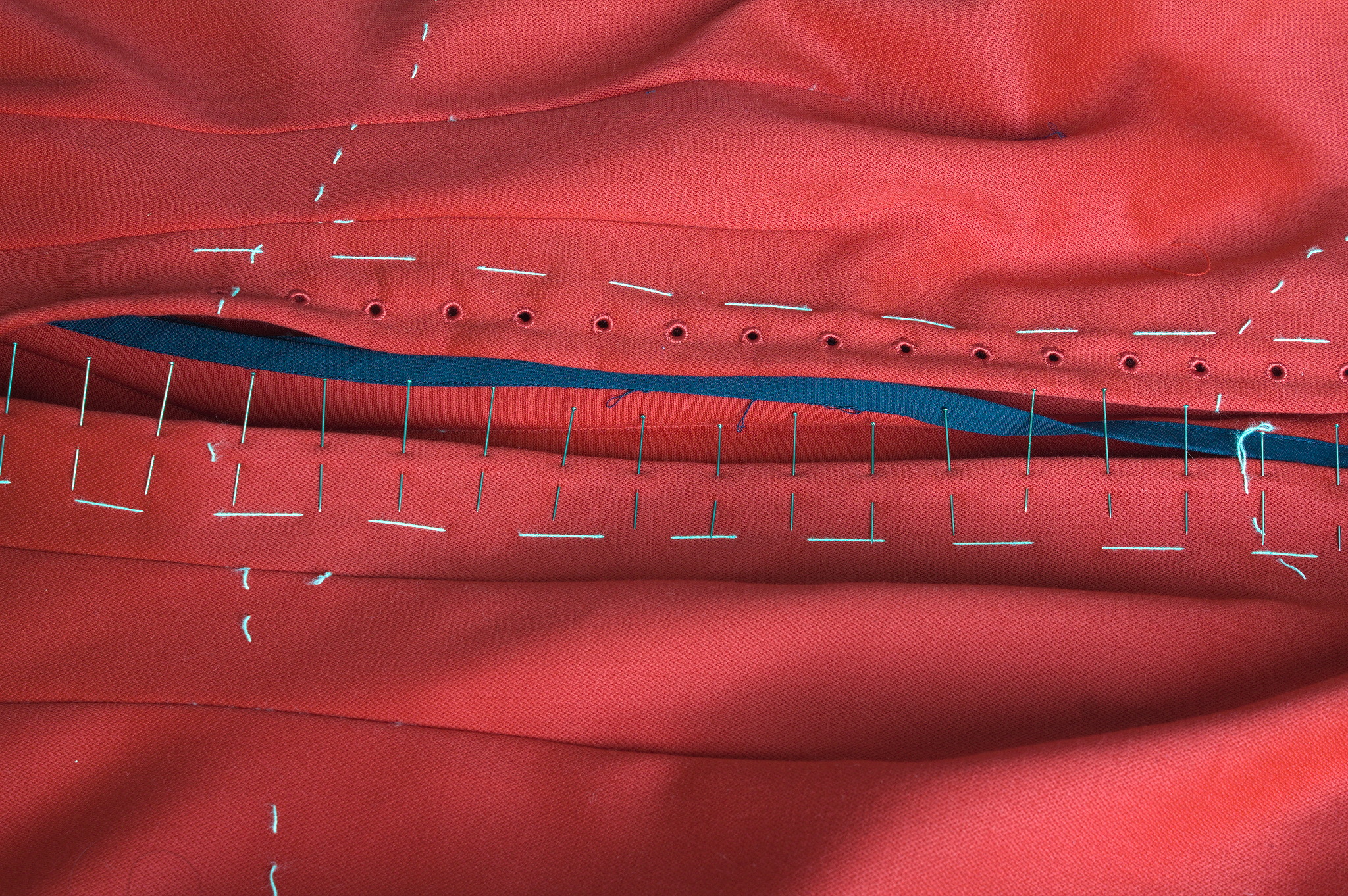

Finally, I could start working on the dress: I cut all of the main

pieces, and since the seam lines were quite curved I marked them with

tailor s tacks, which I don t exactly enjoy doing or removing, but are

the only method that was guaranteed to survive while manipulating this

fabric (and not leave traces afterwards).

I knew that I didn t have enough fabric to add a flounce to the hem, as

in the cotton dress, but then I remembered that some time ago I fell for

a piece of fringed trim in black, white and red. I did a quick check

that the red wasn t clashing (it wasn t) and I knew I had a plan for the

hem decoration.

Then I spent a week finishing other projects, and the more I thought

about this dress, the more I was tempted to have spiral lacing at the

front rather than buttons, as a nod to the kirtle inspiration.

It may end up be a bit of a hassle, but if it is too much I can always

add a hidden zipper on a side seam, and only have to undo a bit of the

lacing around the neckhole to wear the dress.

Finally, I could start working on the dress: I cut all of the main

pieces, and since the seam lines were quite curved I marked them with

tailor s tacks, which I don t exactly enjoy doing or removing, but are

the only method that was guaranteed to survive while manipulating this

fabric (and not leave traces afterwards).

While cutting the front pieces I accidentally cut the high neck line

instead of the one I had used on the cotton dress: I decided to go for

it also on the back pieces and decide later whether I wanted to lower

it.