As a reminder, Mastodon is a new decentralized social network, based on free software, which is rapidly gaining users (there are already more than 1.5 million accounts). Since creating my account in June, I quickly became an addict, and I've already created several tools for this network: Feed2toot, Remindr and Boost (mostly written in Python).

Now, with all this experience, I have to stress the importance of choosing the right Mastodon instance.

Some technical reminders on how Mastodon works

First, let's quickly clarify the decentralized part. In Mastodon, decentralization is achieved through a federation of dedicated servers, called instances, each with its own completely independent administration. Your user account is created on one specific instance. You have two choices:

- Create your own instance, which requires advanced technical knowledge.

- Create your user account on a public instance, which is the easiest and fastest way to start using Mastodon.

You can move your user account from one instance to another, but you have to follow a special procedure that can be quite long, depending on how comfortable you are with technical manipulations and on how many followers you'll have to warn about the change. You'll have to create another account on a new instance and import three lists: the accounts you follow, the accounts you have blocked, and the accounts you have muted.

This way of working raises several technical and human factors worth examining.

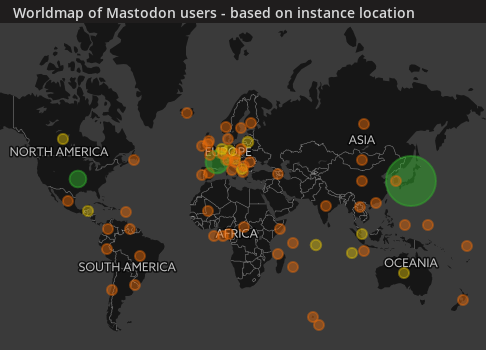

Good technical administration for your instance

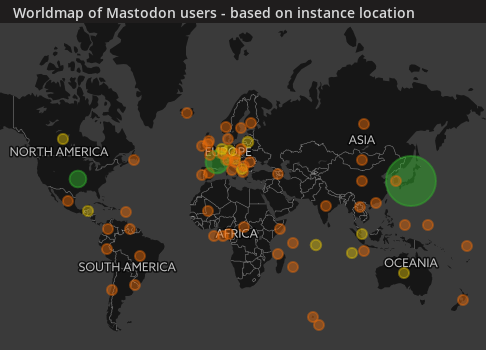

As a social network, Mastodon is truly decentralized, with more than 1.5 million users on more than 2350 existing instances. Since creating your own instance is far too difficult for the average user, the most common approach is to create an account on an open instance. Yet using an open instance creates a strong dependence on the technical administrator of the chosen instance.

The technical administrator has several obligations to ensure continuity of service, such as high-quality hardware and regular backups. All of these have a price, both in money and in time.

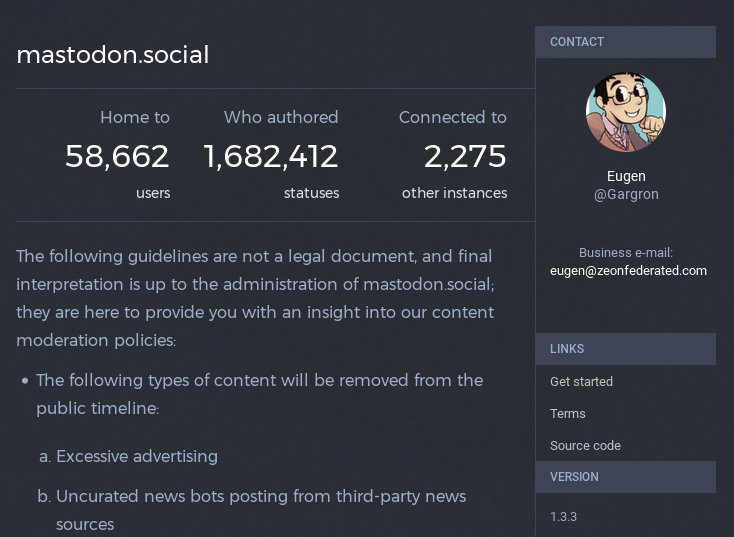

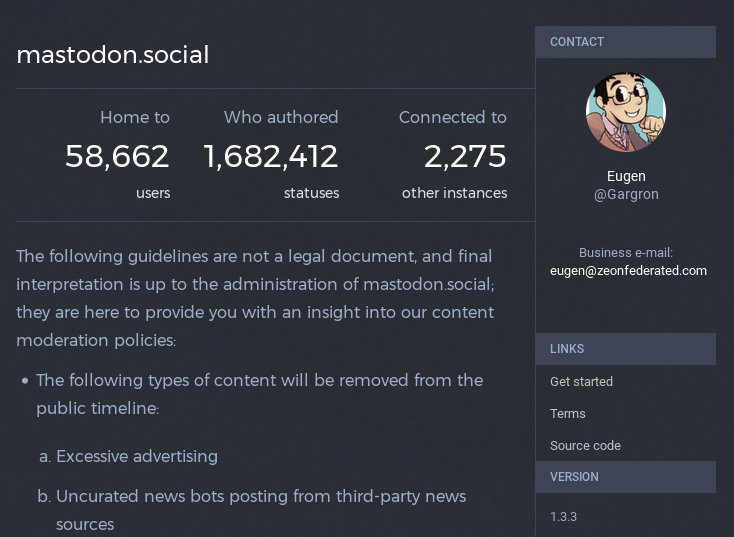

Regarding the time factor, it is better to choose an administration team over an individual, as life events can quickly change anyone's interests. For example, Framasoft, a French association dedicated to promoting the use of Free software, offers its own Mastodon instance named Framapiaf. The creator of the Mastodon project also offers a quite solid instance, Mastodon.social (see below).

Regarding the money factor, many administrators of instances with a large number of users are currently asking for donations via Patreon, as hosting or renting an instance server costs money.

Mastodon.social, the first instance of the Mastodon network

The Ideological Trend Of Your Instance

While anyone could have anticipated the technical points above, given the recent explosion of registrations on the Mastodon social network, the following point took almost everyone by surprise. Little by little, different instances have been displaying their culture, their activism, and their propaganda on this social network.

As the instance administrator has full power over the instance, he or she can block it from interacting with certain other instances, or prevent its users from interacting with users of other instances.

Since everyone had in mind the main advantages of a federation of instances, this partial independence of some instances from the federation came as a huge surprise. One of the most recent examples was when the Unixcorn.xyz instance administrator prevented its users from reading Aeris's account, which was hosted on Aeris's own instance. It was a cataclysm with several consequences, which I've named #AerisGate, as it revealed the different views on moderation and how moderation is received by various Mastodon users.
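To make the two levels of blocking concrete (a whole remote instance, or one specific remote account), here is a minimal Python sketch of how an administrator's choices decide what the instance's users can see. It is a toy model under stated assumptions, not Mastodon's actual implementation: the `Instance` class, its `can_see` method and any example domains are hypothetical, and remote accounts are assumed to be written as `user@domain`.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Toy model of one instance's moderation settings (hypothetical, not Mastodon's code)."""

    domain: str
    # Whole remote instances the administrator has cut off.
    blocked_domains: set[str] = field(default_factory=set)
    # Individual remote accounts the administrator has hidden from local users.
    blocked_accounts: set[str] = field(default_factory=set)

    def can_see(self, remote_account: str) -> bool:
        """Return True if local users may see `remote_account` ('user@domain')."""
        _, _, remote_domain = remote_account.rpartition("@")
        if remote_domain in self.blocked_domains:
            return False  # instance-level block: every account on that domain is hidden
        if remote_account in self.blocked_accounts:
            return False  # account-level block, like the one in the example above
        return True
```

For instance, `Instance("home.example", blocked_accounts={"someone@other.example"}).can_see("someone@other.example")` returns `False` while other accounts on `other.example` stay visible. The point of the sketch is that both checks run on the home instance, so the user never gets a say in them.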

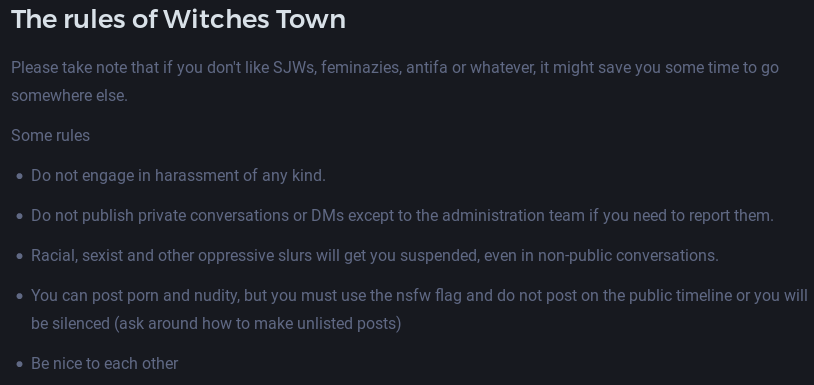

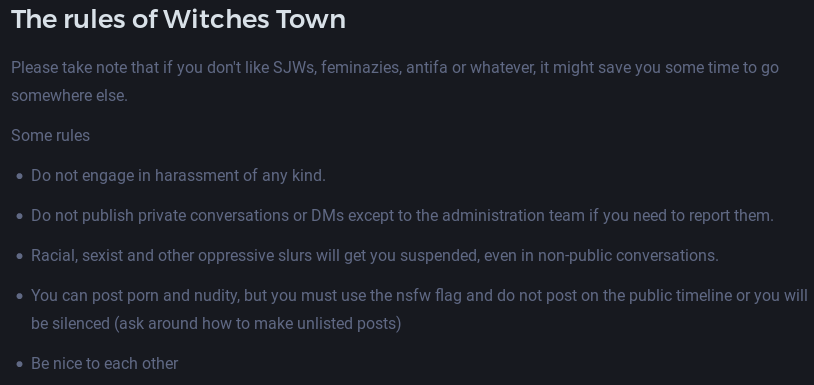

If you don't run your own instance, then when choosing the one on which to create your account, make sure that the content you plan to toot complies with the rules and is compatible with the ideology of that instance's administrator. Yes, I know it may seem surprising, but as stated above, by joining a public instance you become dependent on someone else's infrastructure, and that person may have an ideological view of how their Mastodon hosting service should be run. For example, if you're a Nazi, don't open your Mastodon account on a far-left LGBT instance. Your account wouldn't stay open for long.

The moderation rules are described on the instance's about/more page, and may contain ideological elements.

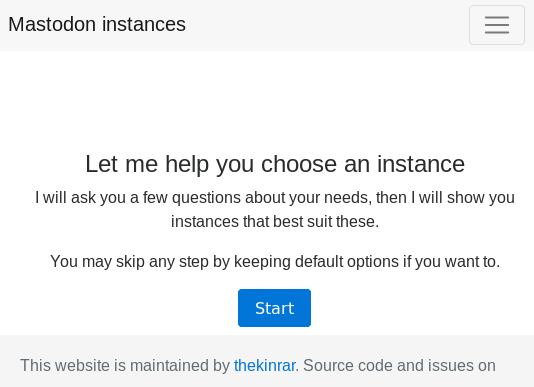

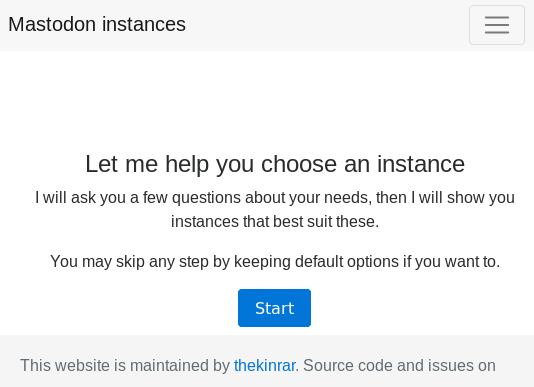

To ease the process for newcomers, there is now a great tool to help you select which instance is best suited to host your account.


Remember that, as stated above, Mastodon is decentralized, so there is no central authority you can turn to if you have a conflict with your instance administrator. Nobody can force that administrator to follow their own rules, or stop them from changing those rules on the fly.

Think Twice Before Creating Your Account

If you want to create an account on an instance you don't control, you need to check two things: the long-term availability of the instance's hosting service, which is often tied to the administrator or administration team of that instance, and the ideological orientation of the instance. With these two things checked, you'll be able to let your Mastodon account grow peacefully, without fearing an outage of your instance, or simply finding your account blocked one morning because it doesn't align with your instance's ideological line.

In Conclusion

To help me stay involved in free software and keep writing articles for this blog, please consider a donation through my Liberapay page, even if it's only a few cents per week. My Bitcoin and Monero addresses are also available on that page.

Follow me on Mastodon


Translated from French to English by Stéphanie Chaptal.


My hard-working KDE friend Mario asked me to help him get Debian people to help with his thesis. Here is what he writes:

Dear Free Software contributor*

I'm currently in the process of writing my diploma thesis. I've worked hard during the last few weeks and months on a


After one week of campaigning on -vote@, many subjects have already been mentioned. I'm trying here to list the concrete, actionable ideas I found interesting (which does not necessarily mean that I agree with all of them) and that may be worth further discussion at a less busy time. There's obviously some subjectivity in such a list, and I'm also slightly biased ;) . Feel free to point out missing ideas or references (when an idea appeared in several emails, I've generally tried to use the first reference).

On the campaign itself, and having general discussions inside Debian:

After this

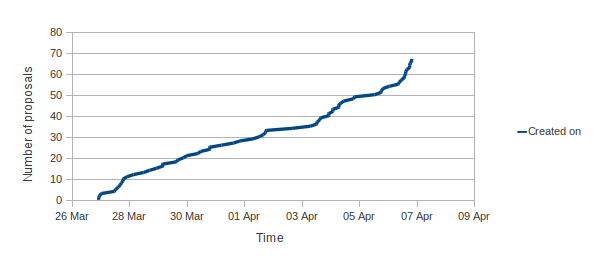


This year our efforts have paid off: despite there being more mentoring organizations than in 2011 (180 in 2012 versus 175 in 2011), Debian received 81 submissions this year versus 43 in 2011. As a result, this year we'll have 15 students in Debian versus 9 last year! Without further ado, here is the list of projects and students who will be working with us this summer:


My responses to the objections

Those are the two major objections that we'll have to respond to. Let's try to analyze them a bit more.

It's not testing vs. rolling

To the first objection, I would respond that we must not put testing and rolling/unstable in opposition. The fact that a contributor can't do his work as usual in unstable does not mean that he will instead choose to work on fixing RC bugs in testing. Some probably do, but in my experience we simply spend our time differently, either working more on non-Debian stuff or doing mostly hidden work that is then released in big batches at the start of the next cycle (which tends to create problems of its own).

I would also argue that by giving more exposure to rolling and encouraging developers to properly support their packages in rolling, the overall state of rolling should gradually become better than what we're currently used to with testing.

The objection that rolling would divert resources from getting testing into a releasable shape is difficult to prove or disprove. The best way to get some objective data would be to set up a questionnaire and ask all maintainers. Any volunteers for that?

Unstable as a test-bed for RC bugfixes?

It's true that unstable will quickly diverge from testing and that it will be more difficult to cherry-pick updates from unstable into testing. This cannot be refuted; it's a downside given the release team's current workflow.

But I wonder whether the importance of this workflow is overstated. The release team likes to cherry-pick from unstable because it gives them some confidence that the update has not caused other regressions and ensures that testing is improving.

But if they're considering cherry-picking an update, it's because the current package in testing is plagued by an RC bug. Supposing that the updated package has introduced a regression, is it really better to keep the current RC bug than to trade it for a new regression? It certainly depends on the precise bugs involved, and that's why they prefer to know about the regression up front instead of making a blind bet.

Given this, I think we should use testing-proposed-updates (tpu) as a test-bed for RC bug fixes. We should ask beta-testers to activate this repository and to file RC bugs for any regression. And instead of requiring a full review by a release manager for all uploads to testing-proposed-updates, uploads should be auto-accepted provided that they do not change the upstream version and that they do not add/remove binary packages. Other uploads would still need manual approval by the release managers.
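The proposed auto-acceptance rule is mechanical enough to sketch in a few lines of Python. This is only an illustration under stated assumptions, not existing dak or release-team tooling: `split_version` and `may_auto_accept` are hypothetical names, versions are assumed to follow Debian's `[epoch:]upstream_version[-debian_revision]` format, and the sketch conservatively treats an epoch change as a change of upstream version.

```python
from collections.abc import Iterable


def split_version(version: str) -> tuple[str, str, str]:
    """Split a Debian version 'epoch:upstream-revision' into (epoch, upstream, revision).

    A missing epoch defaults to '0'; a native package has an empty revision.
    """
    epoch, sep, rest = version.partition(":")  # the epoch ends at the first colon
    if not sep:
        epoch, rest = "0", version
    upstream, sep, revision = rest.rpartition("-")  # the revision starts after the last hyphen
    if not sep:
        upstream, revision = rest, ""
    return epoch, upstream, revision


def may_auto_accept(old_version: str, new_version: str,
                    old_binaries: Iterable[str], new_binaries: Iterable[str]) -> bool:
    """Hypothetical t-p-u policy: accept without manual review only if the
    (epoch, upstream version) pair is unchanged and no binary package is
    added or removed. Anything else would go to the release managers."""
    old_epoch, old_upstream, _ = split_version(old_version)
    new_epoch, new_upstream, _ = split_version(new_version)
    same_upstream = (old_epoch, old_upstream) == (new_epoch, new_upstream)
    same_binaries = set(old_binaries) == set(new_binaries)
    return same_upstream and same_binaries
```

A Debian-revision-only upload such as `1.2-3` to `1.2-4` that builds the same binaries passes, while a new upstream release or an extra binary package falls back to manual approval.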

On top of this, we could also add infrastructure to encourage peer reviews of t-p-u uploads, so that reviews become opportunistic instead of systematic. Positive reviews would help reduce the aging required in t-p-u before being accepted into testing.
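The peer-review idea amounts to a simple rule in which each positive review shortens the t-p-u aging period, down to some floor. The post gives no figures, so the base delay, the reduction per review, the minimum and the function name `required_aging_days` below are invented placeholders for illustration only.

```python
def required_aging_days(positive_reviews: int,
                        base_days: int = 10,
                        days_per_review: int = 2,
                        minimum_days: int = 2) -> int:
    """Hypothetical aging rule for a t-p-u upload.

    Every positive peer review removes `days_per_review` days from the
    `base_days` delay, but the delay never drops below `minimum_days`,
    so a safety window always remains for regression reports.
    """
    if positive_reviews < 0:
        raise ValueError("positive_reviews must be non-negative")
    return max(minimum_days, base_days - days_per_review * positive_reviews)
```

Keeping a floor reflects the balance described below: reviews speed things up, but beta-testers still get some time to file RC bugs against a regression.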

This changes the balance by giving a bit more freedom to maintainers but still keeps the safety net that release managers need to have. It should also reduce the overall amount of work that the release team has to do.

Comments welcome

Do you see other important objections besides the two that I mentioned?

Do you have other ideas to overcome those objections?

What do you think of my responses? Does your experience contradict or confirm my point of view?


a) Situation

I had to learn it the hard way: really cheap plane tickets don’t exist unless you buy them months in advance, and killing three hours at an airport can be done by writing a long post on how to kill three hours at an airport. But Finnair does have the funniest instruction videos I’ve ever seen. Going to Helsinki for Assembly 2008 was worth the money.

This was my first party outside Norway, and the one thing that really surprised me was that most of the gamers actually did kill all audio and lights when told to do so. Smash told me that some hardcore gamers just put a towel over their head and screen to continue playing, but I still think it deserves some credit.

Being at the party place was ok, even with the security check. I think the scene booth organized by Truck was great, even though I don’t have anything to compare it to. It was nice to see someone walk up to the booth and ask a lot of questions. I’m happy about missing out on Hesburger, and sad that it took me two days to find the Pizza Hut.

Boozembly was a lot more fun, even with the rain. I found out that Sauli can be a very nice guy, that you don’t need toothpaste when you have Minttu, that guys in kilts have nothing underneath and that kiitos means thanks. I sure hope information like this will be useful to someone someday.

It’s always nice to see old friends, like Spiikki, again and even nicer knowing that you can still pick up where you left off two years ago. And it’s fun meeting the skilled people you’ve admired for a long time. Actually telling them that got a bit easier when I managed to stop being so shy.

I always find it difficult to keep it short and still cover the most important things, so I just want to say the following: I think the 4k by Portal Process and TBC rocks, and the 64k by Fairlight did impress me. Thanks to Leia and Little Bitchard for letting me crash at their place. And thanks to Gargaj for being awesome.


Well, a brief post about what I've been up to over the past few days.

An alioth project was created for the maintenance of
