Libraries are collections of code that are intended to be usable by multiple consumers (if you're interested in the etymology, watch this video). In the old days we had what we now refer to as "static" libraries, collections of code that existed on disk but which would be copied into newly compiled binaries. We've moved beyond that, thankfully, and now make use of what we call "dynamic" or "shared" libraries - instead of the code being copied into the binary, a reference to the library function is incorporated, and at runtime the code is mapped from the on-disk copy of the shared object[1]. This allows libraries to be upgraded without needing to modify the binaries using them, and if multiple applications are using the same library at once it only requires that one copy of the code be kept in RAM.

But for this to work, two things are necessary: when we build a binary, there has to be a way to reference the relevant library functions in the binary; and when we run a binary, the library code needs to be mapped into the process.

(I'm going to somewhat simplify the explanations from here on - things like symbol versioning make this a bit more complicated but aren't strictly relevant to what I was working on here)

For the first of these, the goal is to replace a call to a function (eg, printf()) with a reference to the actual implementation. This is the job of the linker rather than the compiler (eg, if you use the -c argument to tell gcc to simply compile to an object rather than linking an executable, it's not going to care whether every function called in your code actually exists - that'll be figured out when you link all the objects together), and the linker needs to know which symbols (which aren't just functions - libraries can export variables or structures and so on) are available in which libraries. You give the linker a list of libraries, it extracts the symbols available, and replaces the references in your code with references to the library.

But how is that information extracted? Each ELF object has a fixed-size header that contains references to various things, including a reference to a list of "section headers". Each section has a name and a type, but the ones we're interested in are .dynstr and .dynsym. .dynstr contains a list of strings, representing the name of each exported symbol. .dynsym is where things get more interesting - it's a list of structs that contain information about each symbol. This includes a bunch of fairly complicated stuff that you need to care about if you're actually writing a linker, but the relevant entries for this discussion are an index into .dynstr (which means the .dynsym entry isn't sufficient to know the name of a symbol, you need to extract that from .dynstr), along with the location of that symbol within the library. The linker can parse this information and obtain a list of symbol names and addresses, and can now replace the call to printf() with a reference to libc instead.
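The relationship between the two sections can be sketched in a few lines of Python. This is only an illustration, not how any real linker is written: it assumes a little-endian 64-bit ELF object (so each .dynsym entry uses the 24-byte Elf64_Sym layout) and that the raw bytes of both sections have already been read out of the file. The helper names parse_dynsym and read_cstring are made up for this example.

```python
import struct

# Elf64_Sym: st_name (u32), st_info (u8), st_other (u8),
#            st_shndx (u16), st_value (u64), st_size (u64)
SYM_FMT = "<IBBHQQ"
SYM_SIZE = struct.calcsize(SYM_FMT)  # 24 bytes on ELF64


def read_cstring(strtab: bytes, offset: int) -> str:
    """Return the NUL-terminated string that starts at `offset` in a string table."""
    end = strtab.index(b"\0", offset)
    return strtab[offset:end].decode("ascii", errors="replace")


def parse_dynsym(dynsym: bytes, dynstr: bytes):
    """Pair every .dynsym entry with its name from .dynstr.

    Returns a list of (name, st_value, st_shndx) tuples. Hypothetical helper:
    both arguments are the raw section contents, already extracted.
    """
    symbols = []
    for off in range(0, len(dynsym) - SYM_SIZE + 1, SYM_SIZE):
        st_name, _info, _other, st_shndx, st_value, _size = struct.unpack_from(
            SYM_FMT, dynsym, off
        )
        # st_name is only an offset into .dynstr, never the name itself
        symbols.append((read_cstring(dynstr, st_name), st_value, st_shndx))
    return symbols
```

The first entry in a real .dynsym is always an all-zero placeholder, which is why the name at offset 0 of .dynstr is the empty string.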

(Note that it's not possible to simply encode this as "Call this address in this library" - if the library is rebuilt or is a different version, the function could move to a different location)

Experimentally, .dynstr and .dynsym appear to be sufficient for linking a dynamic library at build time - there are other sections related to dynamic linking, but you can link against a library that's missing them. Runtime is where things get more complicated.

When you run a binary that makes use of dynamic libraries, the code from those libraries needs to be mapped into the resulting process. This is the job of the runtime dynamic linker, or RTLD[2]. The RTLD needs to open every library the process requires, map the relevant code into the process's address space, and then rewrite the references in the binary into calls to the library code. This requires more information than is present in .dynstr and .dynsym - at the very least, it needs to know the list of required libraries.

There's a separate section called .dynamic that contains another list of structures, and it's the data here that's used for this purpose. For example, .dynamic contains a bunch of entries of type DT_NEEDED - this is the list of libraries that an executable requires. There's also a bunch of other stuff that's required to actually make all of this work, but the only thing I'm going to touch on is DT_HASH. Doing all this re-linking at runtime involves resolving the locations of a large number of symbols, and if the only way you can do that is by reading a list from .dynsym and then looking up every name in .dynstr that's going to take some time. The DT_HASH entry points to a hash table - the RTLD hashes the symbol name it's trying to resolve, looks it up in that hash table, and gets the symbol entry directly (it still needs to resolve that against .dynstr to make sure it hasn't hit a hash collision - if it has it needs to look up the next hash entry, but this is still generally faster than walking the entire .dynsym list to find the relevant symbol). There's also DT_GNU_HASH which fulfills the same purpose as DT_HASH but uses a more complicated algorithm that performs even better.
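For reference, the two hash functions themselves are tiny. The sketch below is a Python transcription of the classic System V ELF hash (used by DT_HASH tables) and the string hash used by DT_GNU_HASH tables; the function names sysv_hash and gnu_hash are mine. It only covers hashing a name, not the bucket, chain, or Bloom filter handling that makes the GNU variant more complicated.

```python
def sysv_hash(name: bytes) -> int:
    """Classic System V ELF hash, as used by DT_HASH tables."""
    h = 0
    for c in name:
        h = ((h << 4) + c) & 0xFFFFFFFF
        g = h & 0xF0000000
        if g:
            h ^= g >> 24
        h &= ~g & 0xFFFFFFFF  # clear the top four bits again
    return h


def gnu_hash(name: bytes) -> int:
    """String hash used by DT_GNU_HASH tables (h = h * 33 + c, starting at 5381)."""
    h = 5381
    for c in name:
        h = (h * 33 + c) & 0xFFFFFFFF
    return h


print(hex(sysv_hash(b"printf")))  # 0x77905a6
print(hex(gnu_hash(b"printf")))   # 0x156b2bb8
```

In both table formats the hash is reduced modulo the number of buckets to pick where the lookup starts, and the candidate symbol's name is then compared against .dynstr to rule out a collision.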

.dynamic also contains entries pointing at .dynstr and .dynsym, which seems redundant but will become relevant shortly.
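As a rough illustration of what lives in .dynamic, here is a sketch that decodes the entries and pulls out the pieces mentioned above. It assumes a little-endian 64-bit object (16-byte Elf64_Dyn entries) and that the raw bytes of .dynamic and of the dynamic string table are already in hand; parse_dynamic, needed_libraries and table_addresses are hypothetical helper names. The tag values are the standard ones from the ELF specification.

```python
import struct

# Standard dynamic tag values
DT_NULL, DT_NEEDED, DT_HASH, DT_STRTAB, DT_SYMTAB = 0, 1, 4, 5, 6
DT_GNU_HASH = 0x6FFFFEF5

DYN_FMT = "<qQ"  # Elf64_Dyn: d_tag (s64), d_val / d_ptr (u64)
DYN_SIZE = struct.calcsize(DYN_FMT)  # 16 bytes on ELF64


def parse_dynamic(dynamic: bytes):
    """Decode (tag, value) pairs, stopping at the DT_NULL terminator."""
    entries = []
    for off in range(0, len(dynamic) - DYN_SIZE + 1, DYN_SIZE):
        tag, val = struct.unpack_from(DYN_FMT, dynamic, off)
        if tag == DT_NULL:
            break
        entries.append((tag, val))
    return entries


def needed_libraries(entries, dynstr: bytes):
    """DT_NEEDED values are offsets into the dynamic string table."""
    names = []
    for tag, val in entries:
        if tag == DT_NEEDED:
            end = dynstr.index(b"\0", val)
            names.append(dynstr[val:end].decode("ascii", errors="replace"))
    return names


def table_addresses(entries):
    """Collect the addresses of the string, symbol and hash tables."""
    wanted = {DT_STRTAB: "strtab", DT_SYMTAB: "symtab",
              DT_HASH: "hash", DT_GNU_HASH: "gnu_hash"}
    return {wanted[tag]: val for tag, val in entries if tag in wanted}
```

Note that DT_STRTAB and DT_SYMTAB hold virtual addresses rather than file offsets, so a real tool has to translate them through the loadable segments before reading from the file.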

So, .dynsym and .dynstr are required at build time, and both are required along with .dynamic at runtime. This seems simple enough, but obviously there's a twist and I'm sorry it's taken so long to get to this point.

I bought a Synology NAS for home backup purposes (my previous solution was a single external USB drive plugged into a small server, which had uncomfortable single point of failure properties). Obviously I decided to poke around at it, and I found something odd - all the libraries Synology ships were entirely lacking any ELF section headers. This meant no .dynstr, .dynsym or .dynamic sections, so how was any of this working?

nm asserted that the libraries exported no symbols, and readelf agreed. If I wrote a small app that called a function in one of the libraries and built it, gcc complained that the function was undefined. But executables on the device were clearly resolving the symbols at runtime, and if I loaded them into ghidra the exported functions were visible. If I dlopen()ed them, dlsym() couldn't resolve the symbols - but if I hardcoded the offset into my code, I could call them directly.

Things finally made sense when I discovered that if I passed the --use-dynamic argument to readelf, I did get a list of exported symbols. It turns out that ELF is weirder than I realised. As well as the aforementioned section headers, ELF objects also include a set of program headers. One of the program header types is PT_DYNAMIC. This typically points to the same data that's present in the .dynamic section. Remember when I mentioned that .dynamic contained references to .dynsym and .dynstr? This means that simply pointing at .dynamic is sufficient, there's no need to have separate entries for them.
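Finding that entry needs nothing but the fixed-size ELF header and the program header table, which is why it still works when the section headers are gone. A minimal sketch, assuming a little-endian 64-bit file whose contents have been read into memory; find_pt_dynamic is a hypothetical name, and the struct layouts are the standard Elf64_Ehdr and Elf64_Phdr.

```python
import struct

EHDR_FMT = "<16sHHIQQQIHHHHHH"  # Elf64_Ehdr, 64 bytes
PHDR_FMT = "<IIQQQQQQ"          # Elf64_Phdr, 56 bytes
PT_DYNAMIC = 2


def find_pt_dynamic(data: bytes):
    """Return (file offset, virtual address, size) of the PT_DYNAMIC segment.

    Returns None if the object has no PT_DYNAMIC program header.
    """
    ehdr = struct.unpack_from(EHDR_FMT, data, 0)
    ident = ehdr[0]
    # ident[4] == 2 means ELFCLASS64, ident[5] == 1 means little-endian
    if ident[:4] != b"\x7fELF" or ident[4] != 2 or ident[5] != 1:
        raise ValueError("expected a little-endian ELF64 file")
    e_phoff, e_phentsize, e_phnum = ehdr[5], ehdr[9], ehdr[10]
    for i in range(e_phnum):
        fields = struct.unpack_from(PHDR_FMT, data, e_phoff + i * e_phentsize)
        p_type, _flags, p_offset, p_vaddr, _paddr, p_filesz, _memsz, _align = fields
        if p_type == PT_DYNAMIC:
            return p_offset, p_vaddr, p_filesz
    return None
```

The bytes at the returned file offset are an array of dynamic entries, the same data a .dynamic section header would normally describe.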

The same information can be reached from two different locations. The information in the section headers is used at build time, and the information in the program headers at run time[3]. I do not have an explanation for this. But if the information is present in two places, it should be possible to reconstruct the missing section headers in my weird libraries. So that's what this does. It extracts information from the DYNAMIC entry in the program headers and creates equivalent section headers.
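To give a feel for what "creating a section header" means, here is a sketch that packs a single Elf64_Shdr describing .dynsym. It is not the code from the tool above, just the standard 64-byte layout filled in under some assumptions: a little-endian 64-bit object, a section name string table that the caller has already built (name_off is an offset into it), and first_global=1, meaning only the initial null symbol is local. make_dynsym_shdr and its parameters are hypothetical.

```python
import struct

# Elf64_Shdr: sh_name, sh_type, sh_flags, sh_addr, sh_offset, sh_size,
#             sh_link, sh_info, sh_addralign, sh_entsize
SHDR_FMT = "<IIQQQQIIQQ"
SHT_DYNSYM = 11
SHF_ALLOC = 0x2
SYM_SIZE = 24  # sizeof(Elf64_Sym)


def make_dynsym_shdr(name_off, addr, offset, nsyms, dynstr_index, first_global=1):
    """Pack a section header for .dynsym.

    addr and offset are the table's virtual address and file offset;
    dynstr_index is the section index of .dynstr, stored in sh_link so
    tools know where to look up symbol names.
    """
    return struct.pack(
        SHDR_FMT,
        name_off,
        SHT_DYNSYM,
        SHF_ALLOC,
        addr,
        offset,
        nsyms * SYM_SIZE,  # sh_size: this is where the symbol count ends up
        dynstr_index,      # sh_link
        first_global,      # sh_info: index of the first non-local symbol (assumed)
        8,                 # sh_addralign
        SYM_SIZE,          # sh_entsize
    )
```

A reader of the file recovers the number of symbols as sh_size divided by sh_entsize, so the header cannot be written without knowing that count.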

There's one thing that makes this more difficult than it might seem. The section header for .dynsym has to contain the number of symbols present in the section. And that information doesn't directly exist in DYNAMIC - to figure out how many symbols exist, you're expected to walk the hash tables and keep track of the largest number you've seen. Since every symbol has to be referenced in the hash table, once you've hit every entry the largest number is the number of exported symbols. This seemed annoying to implement, so instead I cheated, added code to simply pass in the number of symbols on the command line, and then just parsed the output of readelf against the original binaries to extract that information and pass it to my tool.
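For the curious, the walk that was skipped here looks roughly like the sketch below. It is not part of the tool described in this post. It assumes a little-endian 64-bit object and takes the raw bytes of the hash table starting at the address given by DT_HASH or DT_GNU_HASH; both function names are invented. For the older DT_HASH format the count can be read straight from the header, since its chain array has one slot per symbol. For DT_GNU_HASH you find the highest symbol index any bucket starts at, then follow that chain until an entry has its low bit set, which marks the end of the chain.

```python
import struct


def count_from_sysv_hash(table: bytes) -> int:
    """DT_HASH header is (nbucket, nchain); nchain equals the symbol count."""
    _nbucket, nchain = struct.unpack_from("<II", table, 0)
    return nchain


def count_from_gnu_hash(table: bytes) -> int:
    """Walk a DT_GNU_HASH table (ELF64 layout) to find the symbol count."""
    nbuckets, symoffset, bloom_size, _bloom_shift = struct.unpack_from("<IIII", table, 0)
    buckets_off = 16 + bloom_size * 8          # Bloom filter words are 8 bytes on ELF64
    chains_off = buckets_off + nbuckets * 4
    buckets = struct.unpack_from(f"<{nbuckets}I", table, buckets_off)

    last = max(buckets, default=0)             # highest starting symbol index
    if last < symoffset:
        return symoffset                       # nothing is hashed at all

    # chain[i] describes symbol (symoffset + i); low bit set = end of chain
    while True:
        (entry,) = struct.unpack_from("<I", table, chains_off + (last - symoffset) * 4)
        if entry & 1:
            return last + 1
        last += 1
```

Symbols below symoffset in a GNU hash table are not hashed, which is why the walk starts from the bucket values rather than from zero.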

Somehow, this worked. I now have a bunch of library files that I can link into my own binaries to make it easier to figure out how various things on the Synology work. Now, could someone explain (a) why this information is present in two locations, and (b) why the build-time linker and run-time linker disagree on the canonical source of truth?

[1] "Shared object" is the source of the .so filename extension used in various Unix-style operating systems

[2] You'll note that "RTLD" is not an acronym for "runtime dynamic linker", because reasons

[3] For environments using the GNU RTLD, at least - I have no idea whether this is the case in all ELF environments


Those of you who haven't been in IT for far, far too long might not know that next month will be the 16th(!) anniversary of the disclosure of what was, at the time, a fairly earth-shattering revelation: that for about 18 months, the Debian OpenSSL package was generating entirely predictable private keys.

The recent xz-stential threat (thanks to @nixCraft for making me aware of that one) has got me thinking about my own serendipitous interaction with a major vulnerability.

Given that the statute of limitations has (probably) run out, I thought I'd share it as a tale of how "huh, that's weird" can be a powerful threat-hunting tool, but only if you've got the time to keep pulling at the thread.


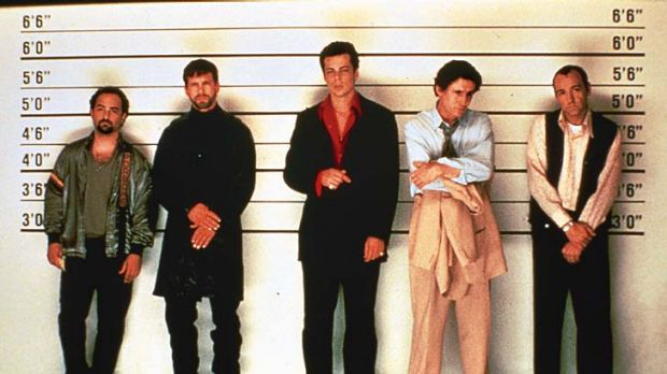

They're called The Usual Suspects for a reason, but sometimes, it really is Keyser S ze

They're called The Usual Suspects for a reason, but sometimes, it really is Keyser S ze

They're called The Usual Suspects for a reason, but sometimes, it really is Keyser S ze

They're called The Usual Suspects for a reason, but sometimes, it really is Keyser S ze

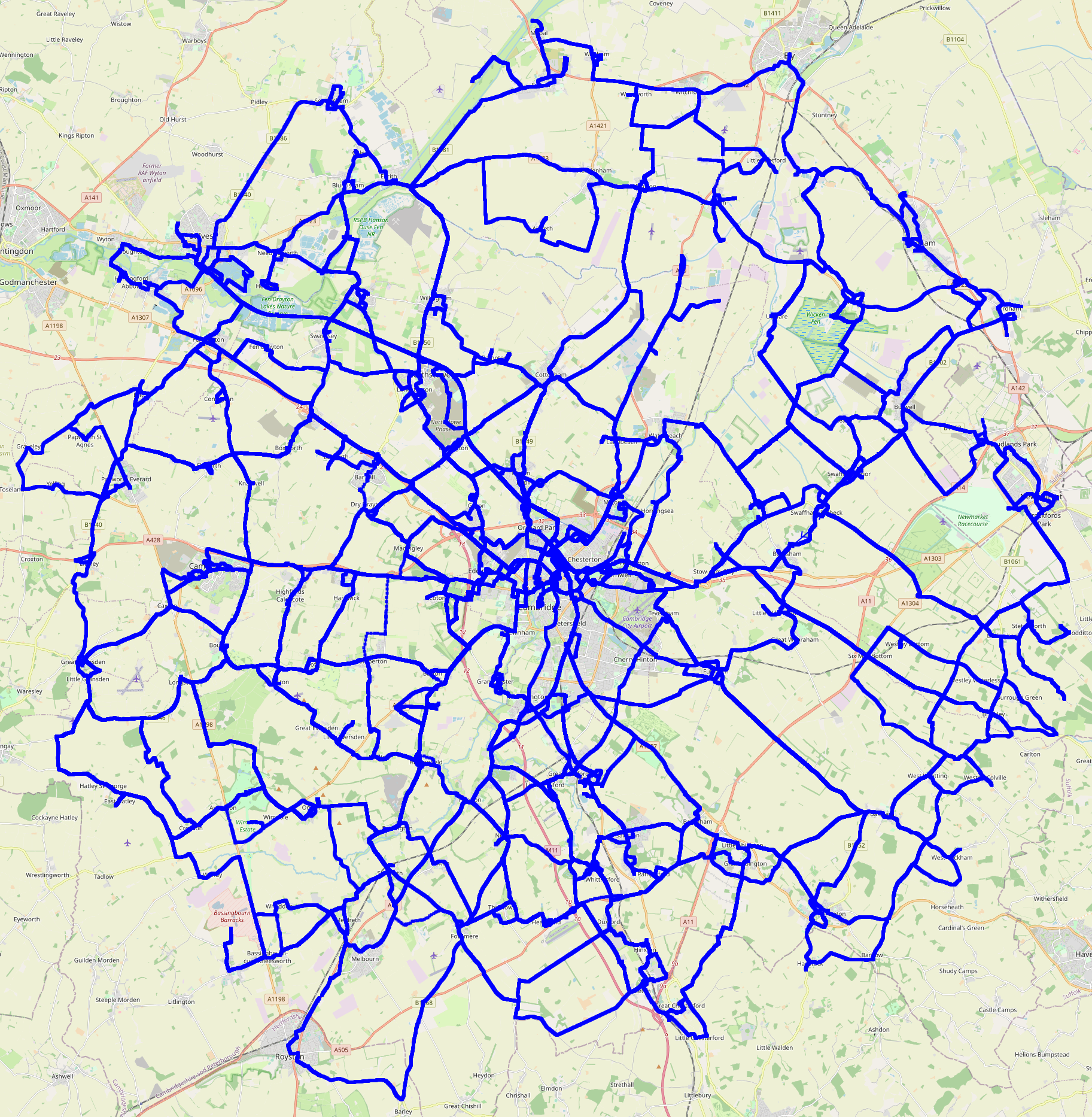

Closing arguments in the trial between various people and

Closing arguments in the trial between various people and  Insert obligatory "not THAT data" comment here

Insert obligatory "not THAT data" comment here

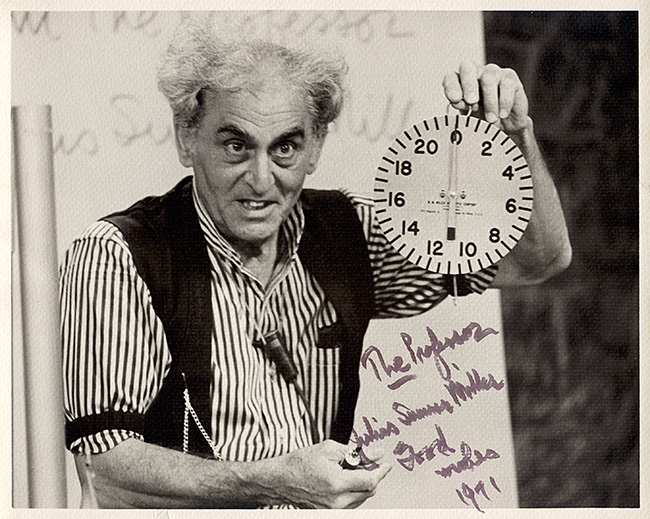

If you don't know who Professor Julius Sumner Miller is, I highly recommend

If you don't know who Professor Julius Sumner Miller is, I highly recommend  No thanks, Bender, I'm busy tonight

No thanks, Bender, I'm busy tonight

Edited 2023-08-25 01:32 BST to correct a slip.
