I must echo John Sullivan's post: GPG keysigning and government identification.

John states some very important reasons for people everywhere to verify the identities of those parties they sign GPG keys with in a meaningful way, and that means not just trusting government-issued IDs. As he says, "It's not the Web of Amateur ID Checking." And I'll take the opportunity to expand, based on what some of us saw in Debian, on what this means.

I know most people (even most people involved in Free Software development; not everybody needs to join a globally distributed, thousand-people-strong project such as Debian) are not that much into GPG or trust keyrings, nor do they understand the value of a strong set of cross-signatures. I know many people have never been part of a key-signing party.

I have been to several. And it was a very interesting experience. Fun, at the beginning at least, but quite tiring at the end. I was part of what could very well constitute the largest KSP ever, at DebConf5 (Finland, 2005). Quite awe-inspiring: we were over 200 people, all lined up with a printed list in one hand and our passport (or ID card for EU citizens) in the other. Actually, we stood face to face, in a ribbon-like ring. And, after the basic explanation was given, it was time to check ID documents.

And so it began.

The rationale of this ring is that every person who signed up for the KSP would verify each of the others' identities. Were anything fishy to happen, somebody would surely raise a voice of alert. Of course, the interaction between every two people had to be quick. More like a game than like a real check. "Hi, I'm #142 on the list. I checked, my ID is OK and my fingerprint is OK." "OK, I'm #35, I also printed the document and checked both my ID and my fingerprint are OK." The passport changes hands, the person in front of me takes the unique opportunity to look at a Mexican passport while I look at a Somewhere-y one. And all is fine and dandy. The first interactions do include some chatter while we pick up speed, so maybe a minute is spent. Later on, we all get a bit tired, and things speed up a bit. But anyway, we were close to 200 people. That means we surely spent over 120 minutes (2 full hours) checking ID documents. Of course, not all of the time under ideal lighting conditions.
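
A rough back-of-the-envelope check of those numbers, as a small Python sketch. It assumes (my simplification, not a record of how the party actually ran) that everybody pairs up in parallel, that each person meets every other person exactly once, and that all checks take the same time. The function name `ksp_estimate` is made up for this illustration.

```python
def ksp_estimate(participants: int, total_minutes: float) -> tuple[int, int, float]:
    """Estimate the workload of a ring-style key-signing party.

    Assumes every participant checks every other participant once and
    that all pairs work in parallel (a simplification).
    """
    # Each participant has to meet everybody else once.
    checks_per_person = participants - 1
    # Number of distinct pairs in the whole group.
    total_pairs = participants * (participants - 1) // 2
    # Average time available for one face-to-face check.
    seconds_per_check = total_minutes * 60 / checks_per_person
    return checks_per_person, total_pairs, seconds_per_check


checks, pairs, seconds = ksp_estimate(200, 120)
print(checks, pairs, round(seconds, 1))  # 199 19900 36.2
```

Under those assumptions, 200 people and two hours leave roughly 36 seconds per check, passport swap included, which gives an idea of how little real verification fits into each encounter.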

After two hours, nobody was checking anything anymore. But yes, as a group where we trust each other more than most social groups I have ever met, we did trust others to raise the alarm were anything fishy to happen. And we all finished happy and got home with a bucketload of signatures on our keys. Yay!

One year later, DebConf happened in Mexico.

My friend Martin Krafft tested the system. His intent was perhaps cheerful and playful, but the flaw he unveiled in key signing parties such as the one I described was huge: people join the KSP just because it's a social ritual, without putting any thought or judgement into it. And, by doing so, we ended up diluting instead of strengthening our web of trust.

Martin identified himself using an official-looking ID. According to his recount of the facts, he did start by presenting a German ID and later switched to this other document. We could say it was a real ID from a fake country, or that it was a fake ID. It is up to each person to judge. But anyway, Martin brought his Transnational Republic ID document, and many tens of people agreed to sign his key based on it. Or rather, based on it plus his outgoing, friendly personality. I did, at least, know perfectly well who he was, after knowing him for three years already. Many among us also did. Until he reached a very diligent person, Manoj, who got disgusted by this experiment and loudly denounced it. Right, Manoj is known to have strong views, and using fake IDs is (or, at least, was) outside his definition of fair play. Some time after DebConf, a huge thread erupted questioning Martin's actions, as well as questioning what we trust when we sign an identity document (a GPG key).

So... We continued having traditional key signing parties for a couple of years, although more carefully and with more buzz regarding these issues. Until we finally decided to switch the protocol to a better one: one that ensures we do get some more talk and inter-personal recognition. We don't need everybody to cross-sign with everyone else. Better trust comes from people chatting with each other and being able to actually pinpoint who a person is and what they do. And yes, at KSPs most people still require ID documents in order to cross-sign.

Now... What do I think about this? First of all, if we have not ever talked for at least enough time for me to recognize you, don't be surprised: I won't sign your key or request you to sign mine (and note, I have quite a bad memory when it comes to faces and names). If it's the first conference (or social occasion) where we come together, I will most likely not look for key exchanges either.

My personal way of verifying identities is by knowing the other person. So, no, I won't trust a government-issued ID. I know I will be signing some people based on something other than their name, but hey, I know many people already who live pseudonymously, and if they choose for whatever reason to forgo their original name, their original name should not mean anything to me either. I know them by their pseudonym, and based on that pseudonym I will sign their identities.

But... *sigh*, this post turned out quite long, and I'm not yet getting anywhere ;-)

But what this means in the end is: we must stop and think about what we mean when we exchange signatures. We are not validating a person's worth. We are not validating that a government believes they are who they claim to be. We are validating that we trust them to be identified with the (name,mail,affiliation) they are presenting to us. And yes, our signature is much more than just a social rite. It is a binding document. I don't know if a GPG signature is legally binding anywhere (I'm tempted to believe it is, as most jurisdictions do accept digital signatures, and the procedure is mathematically sound and cryptographically strong), but it does have a high value for our project, and for many other projects in the Free Software world.

So, wrapping up, I will also invite you (just like John did) to read the E-mail self-defense guide, published by the FSF in honor of today's Reset The Net effort.

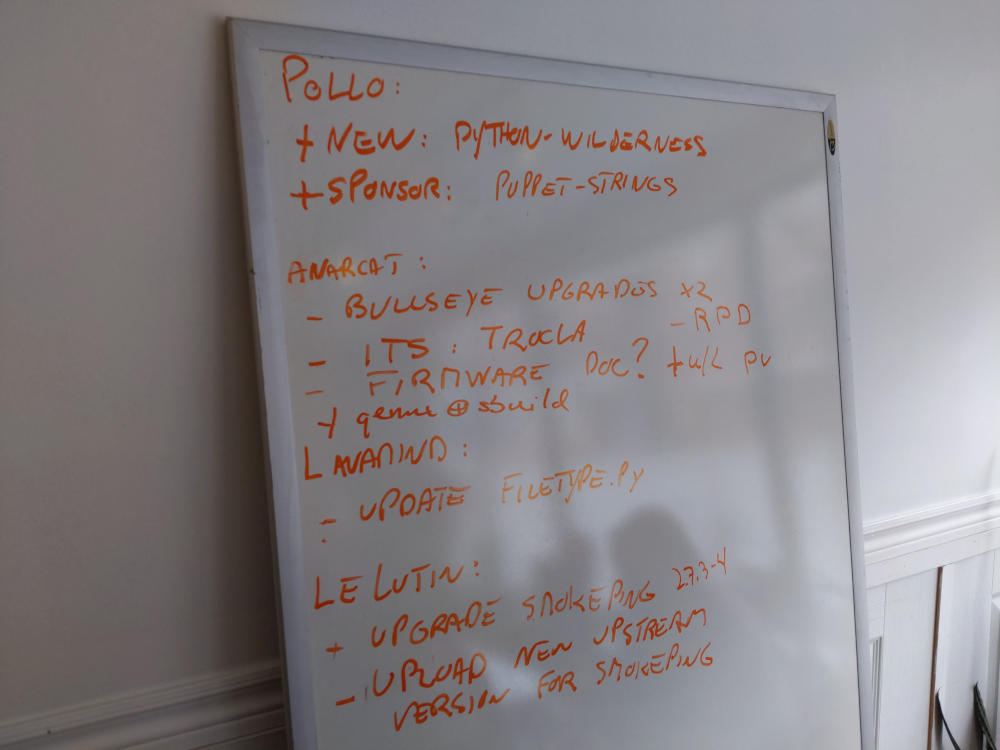

New Year, Same Great People! Our Debian User Group met for the first of our

2024 bi-monthly meetings on February 4th and it was loads of fun. Around

twelve different people made it this time to

This is the second part of how I build a read-only root setup for my router. You might want to read

If everything goes according to plan, our next meeting should be sometime in

June. If you are interested, the best way to stay in touch is either to

Dear Debian Users,

I met last night with

I have spent today making my first ever batch of

It seems to be a new fashion. Instead of tagging software with a normal version number, many upstreams add a one-letter prefix. Instead of version 0.1.2, it becomes version v0.1.2.

This sickness has spread all around GitHub (to mention only the biggest one), from one repository to the next, from one author to the next. It has consequences, because GitHub (and others) conveniently provide tarballs out of Git tags. The tarball name then becomes packagename-v0.1.2.tar.gz instead of packagename-0.1.2.tar.gz. I've even seen worse, like tags called packagename-0.1.2. Then the tarball becomes packagename-packagename-0.1.2. Consequently, we have to work around a lot of problems, like mangling in our debian/watch files and so on (probably in debian/gbp.conf too, if you use that). This is particularly true when upstream doesn't make tarballs and only provides tags on GitHub (which is really fine with me, but then tags have to be made in a logical way). Worse: I've seen this v-prefixing disease used as an example in some howtos.
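
To illustrate the kind of mangling this forces on us downstream, here is a minimal Python sketch. It is not how uscan or debian/watch do it; the function names `upstream_version` and `tarball_name` are made up for this example, and it assumes the real version always starts with a digit.

```python
import re


def upstream_version(tag: str, package: str) -> str:
    """Return the bare version from a tag like 'v0.1.2' or 'packagename-0.1.2'."""
    # Drop an optional '<package>-' prefix, then an optional single 'v'.
    # Assumption: the actual version starts with a digit.
    pattern = rf"^(?:{re.escape(package)}-)?v?(\d.*)$"
    match = re.match(pattern, tag)
    if match is None:
        raise ValueError(f"unrecognised tag: {tag!r}")
    return match.group(1)


def tarball_name(tag: str, package: str) -> str:
    """Build the tarball name we would like to see, whatever the tag style."""
    return f"{package}-{upstream_version(tag, package)}.tar.gz"


for tag in ("0.1.2", "v0.1.2", "packagename-0.1.2"):
    print(tag, "->", tarball_name(tag, "packagename"))
```

All three tag styles end up as packagename-0.1.2.tar.gz, which is the name the plain 0.1.2 tag would have needed no mangling to produce.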

What's wrong with you guys? Where is this "v" sickness coming from? Have you watched too much of the 2009 TV series "V", and are you fans of the Visitors? How come a version number isn't just made of numbers? Or is this just a "v" as in the virus of prefixing release names with a "v"?

So, if you are an upstream author reading Planet Debian, with your software packaged in Debian, and you caught the bad virus of prefixing your version numbers with a "v", please give up on that. Adding a "v" to your tags is meaningless anyway, and it's just annoying us downstream.

Edit: Some people pointed out to me some (IMO wrong) reasons to prefix version numbers. My original post was only half serious, and responding with facts and common sense breaks the fun! :) Anyway, the silliest one is that Linus has been using it. I won't comment on that one; it's obviously not a solid argument. The second one is tab completion. Well, if your bash-completion script is broken, fix it so that it does what you need, rather than working around the problem by polluting your tags. The third argument was about merging 2 repositories. First, it has never happened to me that I had to merge 2 completely different repos, and I very much doubt that this is an operation you have to do often. Second, if you merge the repositories, the tags lose all meaning, and I don't really think you will need them anyway. The last one would be working with submodules. I haven't done it, and that might be the only case where it makes sense, though this has nothing to do with prefixing with "v" (you would need a much smarter approach, like prefixing with project names, which in that case makes sense). So I stand by my post: prefixing with "v" makes no sense.

Most UNIX users have heard of the

After seeing some book descriptions recently on planet debian, let me add a short recommendation, too.

Almost everyone has heard about Gulliver's Travels already, but usually only very cursorily. For example: did you know the book describes 4 journeys and not only the travel to Lilliput?

Given how influential the book has been, that is even more surprising. Words like "endian" or "yahoo" originate from it.

My favorite is the third travel, though, especially the academy of Lagado, from which I want to share two gems:

"

His lordship added, 'That he would not, by any further particulars, prevent the pleasure I

should certainly take in viewing the grand academy, whither he was resolved I should go.' He

only desired me to observe a ruined building, upon the side of a mountain about three miles

distant, of which he gave me this account: 'That he had a very convenient mill within half a

mile of his house, turned by a current from a large river, and sufficient for his own family, as

well as a great number of his tenants; that about seven years ago, a club of those projectors

came to him with proposals to destroy this mill, and build another on the side of that mountain,

on the long ridge whereof a long canal must be cut, for a repository of water, to be conveyed up

by pipes and engines to supply the mill, because the wind and air upon a height agitated the

water, and thereby made it fitter for motion, and because the water, descending down a

declivity, would turn the mill with half the current of a river whose course is more upon a

level.' He said, 'that being then not very well with the court, and pressed by many of his

friends, he complied with the proposal; and after employing a hundred men for two years, the

work miscarried, the projectors went off, laying the blame entirely upon him, railing at him

ever since, and putting others upon the same experiment, with equal assurance of success, as

well as equal disappointment.'

"

"I went into another room, where the walls and ceiling were all hung

round with cobwebs, except a narrow passage for the artist to go in and out.

At my entrance, he called aloud to me, 'not to disturb his webs.' He

lamented 'the fatal mistake the world had been so long in, of using

silkworms, while we had such plenty of domestic insects who infinitely excelled

the former, because they understood how to weave, as well as spin.'

And he proposed further, 'that by employing spiders, the charge of dyeing

silks should be wholly saved;' whereof I was fully convinced, when he

showed me a vast number of flies most beautifully coloured, wherewith he fed

his spiders, assuring us 'that the webs would take a tincture from them; and as

he had them of all hues, he hoped to fit everybody's fancy, as soon as he

could find proper food for the flies, of certain gums, oils, and other

glutinous matter, to give a strength and consistence to the threads.'"
