Debian v11 with codename bullseye is supposed to be released as the new stable release soon-ish (let's hope for June 2021! :)). Similar to what we had with #newinbuster and previous releases, now it's time for #newinbullseye!

I was the driving force at several of my customers to be well prepared for bullseye before its freeze, and since then we're on a good track overall. In my opinion, Debian's release team did (and still does) a great job. I'm very happy about how unblock requests (not only mine but also ones I kept an eye on) have been handled so far.

As usual with major upgrades, there are some things to be aware of, and hereby I'm starting my public notes on bullseye, which might be useful for other folks as well. My focus is primarily on server systems and looking at things from a sysadmin perspective.

Further readings

Of course, start by taking a look at the official Debian release notes, and make sure to especially go through What's new in Debian 11 + Issues to be aware of for bullseye.

Chris published notes on upgrading to Debian bullseye, and anarcat also published upgrade notes for bullseye.

Package versions

As a starting point, let's look at some selected packages and their versions in buster vs. bullseye as of 2021-05-27 (mainly having amd64 in mind):

| Package | buster/v10 | bullseye/v11 |
| --- | --- | --- |
| ansible | 2.7.7 | 2.10.8 |
| apache | 2.4.38 | 2.4.46 |
| apt | 1.8.2.2 | 2.2.3 |
| bash | 5.0 | 5.1 |
| ceph | 12.2.11 | 14.2.20 |
| docker | 18.09.1 | 20.10.5 |
| dovecot | 2.3.4 | 2.3.13 |
| dpkg | 1.19.7 | 1.20.9 |
| emacs | 26.1 | 27.1 |
| gcc | 8.3.0 | 10.2.1 |
| git | 2.20.1 | 2.30.2 |
| golang | 1.11 | 1.15 |
| libc | 2.28 | 2.31 |
| linux kernel | 4.19 | 5.10 |
| llvm | 7.0 | 11.0 |
| lxc | 3.0.3 | 4.0.6 |
| mariadb | 10.3.27 | 10.5.10 |
| nginx | 1.14.2 | 1.18.0 |
| nodejs | 10.24.0 | 12.21.0 |
| openjdk | 11.0.9.1 | 11.0.11+9 + 17~19 |
| openssh | 7.9p1 | 8.4p1 |
| openssl | 1.1.1d | 1.1.1k |
| perl | 5.28.1 | 5.32.1 |
| php | 7.3 | 7.4+76 |
| postfix | 3.4.14 | 3.5.6 |
| postgres | 11 | 13 |
| puppet | 5.5.10 | 5.5.22 |
| python2 | 2.7.16 | 2.7.18 |
| python3 | 3.7.3 | 3.9.2 |
| qemu/kvm | 3.1 | 5.2 |
| ruby | 2.5.1 | 2.7+2 |
| rust | 1.41.1 | 1.48.0 |
| samba | 4.9.5 | 4.13.5 |
| systemd | 241 | 247.3 |
| unattended-upgrades | 1.11.2 | 2.8 |
| util-linux | 2.33.1 | 2.36.1 |
| vagrant | 2.2.3 | 2.2.14 |
| vim | 8.1.0875 | 8.2.2434 |
| zsh | 5.7.1 | 5.8 |

Linux Kernel

The bullseye release will ship a Linux kernel based on v5.10 (v5.10.28 as of 2021-05-27, with v5.10.38 pending in unstable/sid), whereas buster shipped kernel 4.19. As usual there are plenty of changes in the kernel area and this might warrant a separate blog entry, but to highlight some issues:

One surprising change might be that the scrollback buffer (Shift + PageUp) is gone from the Linux console. Make sure to always use screen/tmux or handle output through a pager of your choice if you need all of it and you're on the console.

The kernel provides BTF support (via CONFIG_DEBUG_INFO_BTF, see #973870), which means it's no longer necessary to install LLVM, Clang, etc. (requiring >100MB of disk space) to run BPF-based tools, see Gregg's excellent blog post regarding the underlying rationale. Sadly the libbpf-tools packaging didn't make it into bullseye (#978727), but if you want to use your own self-made Debian packages, my notes might be useful.

With kernel version 5.4, SUBDIRS support was removed from kbuild, so if an out-of-tree kernel module (like a *-dkms package) fails to compile on bullseye, make sure to use a recent version of it which uses M= or KBUILD_EXTMOD= instead.
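To spot affected module sources before the upgrade, one option is to search their Makefiles for the removed SUBDIRS= variable. The following is only a minimal sketch, assuming GNU grep; the function name find_subdirs_usage and the example path are made up for illustration:

```shell
#!/bin/sh
# List Makefiles below the given directory that still mention SUBDIRS=.
# find_subdirs_usage is a hypothetical helper name, not part of any package.
find_subdirs_usage() {
    grep -rl --include='Makefile*' 'SUBDIRS=' "$1"
}

# Example (the path is an assumption, adjust to where your sources live):
#   find_subdirs_usage /usr/src/mymodule-1.0
#
# A build that works with kernels >= 5.4 passes M= instead, e.g. when
# run from the module's source directory:
#   make -C "/lib/modules/$(uname -r)/build" M="$PWD" modules
```

The function prints nothing (and returns non-zero) if no Makefile references SUBDIRS=.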

Unprivileged user namespaces are enabled by default (see #898446 + #987777), so programs can create more restricted sandboxes without the need to run as root or via a setuid-root helper. If you prefer to keep this feature restricted, use sysctl -w kernel.unprivileged_userns_clone=0, but be aware that tools relying on it (like web browsers, WebKitGTK, Flatpak) might no longer work then.
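Note that sysctl -w only changes the running system. To keep the setting across reboots, it can be stored in a drop-in file below /etc/sysctl.d/. A minimal sketch; the function name and the file name 50-userns.conf are arbitrary choices for illustration:

```shell
#!/bin/sh
# Write a sysctl drop-in that disables unprivileged user namespaces.
# $1 is the target directory (defaults to /etc/sysctl.d, which needs root).
# The file name 50-userns.conf is an arbitrary example.
persist_userns_restriction() {
    dir="${1:-/etc/sysctl.d}"
    printf '%s\n' 'kernel.unprivileged_userns_clone = 0' > "$dir/50-userns.conf"
}

# Afterwards, apply all configured settings without rebooting:
#   persist_userns_restriction && sysctl --system
```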

The /boot/System.map file(s) no longer provide the actual data, so you need to switch to the dbg package if you rely on that information:

% cat /boot/System.map-5.10.0-6-amd64

ffffffffffffffff B The real System.map is in the linux-image-<version>-dbg package

Be aware though, that the *-dbg package requires ~5GB of additional disk space.

Systemd

systemd v247 made it into bullseye (updated from v241). Same as for the kernel this might warrant a separate blog entry, but to mention some highlights:

Systemd in bullseye activates its persistent journal functionality by default (storing its files in /var/log/journal/, see #717388).

systemd-timesyncd is no longer part of the systemd binary package itself, but available as a standalone package. This allows usage of ntp, chrony, openntpd, etc. without having systemd-timesyncd installed (which prevents race conditions like #889290, which was biting me more than once).

journalctl gained new options:

--cursor-file=FILE Show entries after cursor in FILE and update FILE

--facility=FACILITY... Show entries with the specified facilities

--image=IMAGE Operate on files in filesystem image

--namespace=NAMESPACE Show journal data from specified namespace

--relinquish-var Stop logging to disk, log to temporary file system

--smart-relinquish-var Similar, but NOP if log directory is on root mount

systemctl gained new options:

clean UNIT... Clean runtime, cache, state, logs or configuration of unit

freeze PATTERN... Freeze execution of unit processes

thaw PATTERN... Resume execution of a frozen unit

log-level [LEVEL] Get/set logging threshold for manager

log-target [TARGET] Get/set logging target for manager

service-watchdogs [BOOL] Get/set service watchdog state

--with-dependencies Show unit dependencies with 'status', 'cat', 'list-units', and 'list-unit-files'

-T --show-transaction When enqueuing a unit job, show full transaction

--what=RESOURCES Which types of resources to remove

--boot-loader-menu=TIME Boot into boot loader menu on next boot

--boot-loader-entry=NAME Boot into a specific boot loader entry on next boot

--timestamp=FORMAT Change format of printed timestamps

If you use systemctl edit to adjust overrides, then you'll now also get the existing configuration file listed as comment, which I consider very helpful.

The MACAddressPolicy behavior with systemd naming schema v241 changed for virtual devices (I plan to write about this in a separate blog post).

There are plenty of new manual pages:

systemd also gained new unit configurations related to security hardening:

Another new unit configuration is SystemCallLog=, which supports listing the system calls to be logged. This is very useful for auditing, or temporarily when constructing system call filters.

The cgroupv2 change is also documented in the release notes, but to explicitly mention it here as well, quoting from /usr/share/doc/systemd/NEWS.Debian.gz:

systemd now defaults to the unified cgroup hierarchy (i.e. cgroupv2).

This change reflects the fact that cgroups2 support has matured

substantially in both systemd and in the kernel.

All major container tools nowadays should support cgroupv2.

If you run into problems with cgroupv2, you can switch back to the previous,

hybrid setup by adding systemd.unified_cgroup_hierarchy=false to the

kernel command line.

You can read more about the benefits of cgroupv2 at

https://www.kernel.org/doc/html/latest/admin-guide/cgroup-v2.html

Note that cgroup-tools (lssubsys + lscgroup etc.) don't work in cgroup2/unified hierarchy yet (see #959022 for the details).
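To check which cgroup hierarchy a running system actually uses, a common approach is to look at the filesystem type mounted at /sys/fs/cgroup. A minimal sketch, assuming GNU stat; cgroup_mode is a hypothetical helper name:

```shell
#!/bin/sh
# Translate the filesystem type of /sys/fs/cgroup into a readable label.
# cgroup_mode is a made-up helper name for illustration.
cgroup_mode() {
    case "$1" in
        cgroup2fs) echo "unified (cgroupv2)" ;;
        tmpfs)     echo "legacy or hybrid (cgroupv1)" ;;
        *)         echo "unknown ($1)" ;;
    esac
}

# On a running system:
#   cgroup_mode "$(stat -fc %T /sys/fs/cgroup)"
```

This is handy for verifying whether the systemd.unified_cgroup_hierarchy=false kernel option mentioned in the quote above took effect after a reboot.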

Configuration management

puppet's upstream doesn't provide packages for bullseye yet (see PA-3624 + MODULES-11060), and sadly neither v6 nor v7 made it into bullseye, so when using the packages from Debian you're still stuck with v5.5 (also see #950182).

ansible is also available, and while it looked like only version 2.9.16 would make it into bullseye (see #984557 + #986213), version 2.10.8 actually made it into bullseye.

chef was removed from Debian and is not available with bullseye (due to trademark issues).

Prometheus stack

Prometheus server was updated from v2.7.1 to v2.24.1, and the prometheus service by default applies some systemd hardening now. All the usual exporters are still there, but bullseye also gained some new ones:

- prometheus-elasticsearch-exporter (v1.1.0)

- prometheus-exporter-exporter (v0.4.0-1)

- prometheus-hacluster-exporter (v1.2.1-1)

- prometheus-homeplug-exporter (v0.3.0-2)

- prometheus-ipmi-exporter (v1.2.0)

- prometheus-libvirt-exporter (v0.2.0-1)

- prometheus-mqtt-exporter (v0.1.4-2)

- prometheus-nginx-vts-exporter (v0.10.3)

- prometheus-postfix-exporter (v0.2.0-3)

- prometheus-redis-exporter (v1.16.0-1)

- prometheus-smokeping-prober (v0.4.1-2)

- prometheus-tplink-plug-exporter (v0.2.0)

Virtualization

docker (v20.10.5), ganeti (v3.0.1), libvirt (v7.0.0), lxc (v4.0.6), openstack, qemu/kvm (v5.2) and xen (v4.14.1) are all still around, though what's new and noteworthy is that podman version 3.0.1 (a tool for managing OCI containers and pods) made it into bullseye.

If you're using the docker packages from upstream, be aware that they still don't seem to understand Debian package version handling. The docker* packages will not be automatically considered for upgrade, as 5:20.10.6~3-0~debian-buster is considered newer than 5:20.10.6~3-0~debian-bullseye:

% apt-cache policy docker-ce

docker-ce:

Installed: 5:20.10.6~3-0~debian-buster

Candidate: 5:20.10.6~3-0~debian-buster

Version table:

*** 5:20.10.6~3-0~debian-buster 100

100 /var/lib/dpkg/status

5:20.10.6~3-0~debian-bullseye 500

500 https://download.docker.com/linux/debian bullseye/stable amd64 Packages
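The reason for the apt-cache output above is Debian's version ordering: both versions are identical up to the codename suffix, and there "buster" sorts after "bullseye" because "s" comes after "l". This can be verified with dpkg itself. A small sketch; deb_version_gt is a hypothetical wrapper name:

```shell
#!/bin/sh
# Succeed if Debian package version $1 sorts higher than version $2.
# deb_version_gt is a made-up wrapper around dpkg's own comparison.
deb_version_gt() {
    dpkg --compare-versions "$1" gt "$2"
}

if deb_version_gt "5:20.10.6~3-0~debian-buster" "5:20.10.6~3-0~debian-bullseye"; then
    echo "buster build sorts higher than the bullseye build"
fi
```

One possible way around this (which apt treats as a downgrade) is to request the bullseye build explicitly, e.g. apt install docker-ce=5:20.10.6~3-0~debian-bullseye.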

Vagrant is available in version 2.2.14, and the package from upstream works perfectly fine on bullseye as well. If you're relying on VirtualBox, be aware that upstream doesn't provide packages for bullseye yet, but the package from Debian/unstable (v6.1.22 as of 2021-05-27) works fine on bullseye (VirtualBox hasn't been shipped with stable releases for quite some time due to lack of cooperation from upstream on security support for older releases, see #794466). If you rely on the virtualbox-guest-additions-iso and its shared folders support, you might be glad to hear that v6.1.22 made it into bullseye (see #988783), properly supporting more recent kernel versions like the one present in bullseye.

debuginfod

There's a new service debuginfod.debian.net (see debian-devel-announce and Debian Wiki), which makes the debugging experience way smoother. You no longer need to download the debugging Debian packages (*-dbgsym/*-dbg), but can instead fetch the debug information on demand by exporting the following variables (before invoking gdb or alike):

% export DEBUGINFOD_PROGRESS=1 # for optional download progress reporting

% export DEBUGINFOD_URLS="https://debuginfod.debian.net"

BTW: if you can't rely on debuginfod (for whatever reason), I'd like to draw your attention to find-dbgsym-packages from the debian-goodies package.

Vim

Sadly, Vim 8.2 once again changes a default for the worse (hello mouse behavior!). When incsearch is set, it also applies to :substitute. This makes it veeeeeeeeeery annoying when running something like :%s/\s\+$// to get rid of trailing whitespace characters, because if there are no matches it jumps to the beginning of the file and then back, sigh. To get the old behavior back, you can use this:

au CmdLineEnter : let s:incs = &incsearch | set noincsearch

au CmdLineLeave : let &incsearch = s:incs

rsync

rsync was updated from v3.1.3 to v3.2.3. It provides various checksum enhancements (see option --checksum-choice). We got new capabilities (hardlink-specials, atimes, optional protect-args, stop-at, no crtimes) and the addition of zstd and lz4 compression algorithms. And we got new options:

- --atimes: preserve access (use) times

- --copy-as=USER: specify user (and optionally group) for the copy

- --crtimes/-N: for preserving the file's create time

- --max-alloc=SIZE: change a limit relating to memory allocation

- --mkpath: create the destination's path component

- --open-noatime: avoid changing the atime on opened files

- --stop-after=MINS: stop rsync after MINS minutes have elapsed

- --write-devices: write to devices as files (implies inplace)

OpenSSH

OpenSSH was updated from v7.9p1 to 8.4p1, so if you're interested in all the changes, check out the release notes between those versions (8.0, 8.1, 8.2, 8.3 + 8.4). Let's highlight some notable new features:

- It now defers creation of ~/.ssh until there's something to write (e.g. the known_hosts file), so the good old admin trick to run ssh localhost and cancel immediately to create ~/.ssh with proper permissions no longer works

- v8.2 brought FIDO/U2F + FIDO2 resident keys support

- The new Include sshd_config keyword allows including additional configuration files via glob(3) patterns

- ssh now allows %n to be expanded in ProxyCommand strings

- The scp and sftp command-lines now accept the -J option as an alias to ProxyJump

- The scp and sftp command-lines allow the -A flag to explicitly enable agent forwarding
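As a replacement for the ssh localhost trick mentioned above, the directory can simply be created by hand with the permissions ssh expects. A minimal sketch; ensure_ssh_dir is a hypothetical helper name:

```shell
#!/bin/sh
# Create the given directory (default: ~/.ssh) and restrict it to its owner.
# ensure_ssh_dir is a made-up helper name for illustration.
ensure_ssh_dir() {
    dir="${1:-$HOME/.ssh}"
    mkdir -p "$dir" && chmod 700 "$dir"
}

# Example:
#   ensure_ssh_dir
```

Running chmod explicitly (instead of relying on the umask) also corrects the mode if the directory already exists with looser permissions.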

Misc unsorted

),

and from time-to-time I ve made sure that my code runs on OpenBSD

),

and from time-to-time I ve made sure that my code runs on OpenBSD

too.

Platforms beyond that will likely not be supported at the expense of either

of those two. I ll take fixes for bugs that fix a problem on another

platform, but not damage the code to work around issues / lack of features

on other platforms (like Windows).

too.

Platforms beyond that will likely not be supported at the expense of either

of those two. I ll take fixes for bugs that fix a problem on another

platform, but not damage the code to work around issues / lack of features

on other platforms (like Windows). ),

and from time-to-time I ve made sure that my code runs on OpenBSD

),

and from time-to-time I ve made sure that my code runs on OpenBSD

too.

Platforms beyond that will likely not be supported at the expense of either

of those two. I ll take fixes for bugs that fix a problem on another

platform, but not damage the code to work around issues / lack of features

on other platforms (like Windows).

too.

Platforms beyond that will likely not be supported at the expense of either

of those two. I ll take fixes for bugs that fix a problem on another

platform, but not damage the code to work around issues / lack of features

on other platforms (like Windows).

This is part of a series of posts on compiling a custom version of Qt5 in order

to develop for both amd64 and a Raspberry Pi.

This is part of a series of posts on compiling a custom version of Qt5 in order

to develop for both amd64 and a Raspberry Pi.

About me

Hello, world! For those who are meeting me for the first time, I am a 31 year old History teacher from Porto Alegre, Brazil.

Some people might know me from the Python community, because I have been leading

About me

Hello, world! For those who are meeting me for the first time, I am a 31 year old History teacher from Porto Alegre, Brazil.

Some people might know me from the Python community, because I have been leading  Often, when I mention how things work in the interactive theorem prover [Isabelle/HOL] (in the following just Isabelle

Often, when I mention how things work in the interactive theorem prover [Isabelle/HOL] (in the following just Isabelle  I've installed 1 kilowatt of solar panels on my roof, using professional

grade eqipment. The four panels are Astronergy 260 watt panels, and they're

mounted on IronRidge XR100 rails. Did it all myself, without help.

I've installed 1 kilowatt of solar panels on my roof, using professional

grade eqipment. The four panels are Astronergy 260 watt panels, and they're

mounted on IronRidge XR100 rails. Did it all myself, without help.

For each node, the prefix is known by its path from the root node and

the prefix length is the current depth.

A lookup in such a trie is quite simple: at each step, fetch the

nth bit of the

For each node, the prefix is known by its path from the root node and

the prefix length is the current depth.

A lookup in such a trie is quite simple: at each step, fetch the

nth bit of the  Since some bits have been ignored, on a match, a final check is

executed to ensure all bits from the found entry are matching the

input

Since some bits have been ignored, on a match, a final check is

executed to ensure all bits from the found entry are matching the

input  The reduction on the average depth of the tree compensates the

necessity to handle those false positives. The insertion and deletion

of a routing entry is still easy enough.

Many routing systems are using Patricia trees:

The reduction on the average depth of the tree compensates the

necessity to handle those false positives. The insertion and deletion

of a routing entry is still easy enough.

Many routing systems are using Patricia trees:

Such a trie is called

Such a trie is called  There are several structures involved:

There are several structures involved:

The lookup time is loosely tied to the maximum depth. When the routing

table is densily populated, the maximum depth is low and the lookup

times are fast.

When forwarding at 10 Gbps, the time budget for a packet would be

about 50 ns. Since this is also the time needed for the route lookup

alone in some cases, we wouldn t be able to forward at line rate with

only one core. Nonetheless, the results are pretty good and they are

expected to scale linearly with the number of cores.

The measurements are done with a Linux kernel 4.11 from Debian

unstable. I have gathered performance metrics accross kernel versions

in

The lookup time is loosely tied to the maximum depth. When the routing

table is densily populated, the maximum depth is low and the lookup

times are fast.

When forwarding at 10 Gbps, the time budget for a packet would be

about 50 ns. Since this is also the time needed for the route lookup

alone in some cases, we wouldn t be able to forward at line rate with

only one core. Nonetheless, the results are pretty good and they are

expected to scale linearly with the number of cores.

The measurements are done with a Linux kernel 4.11 from Debian

unstable. I have gathered performance metrics accross kernel versions

in

The results are quite good. With only 256 MiB, about 2 million routes

can be stored!

The results are quite good. With only 256 MiB, about 2 million routes

can be stored!

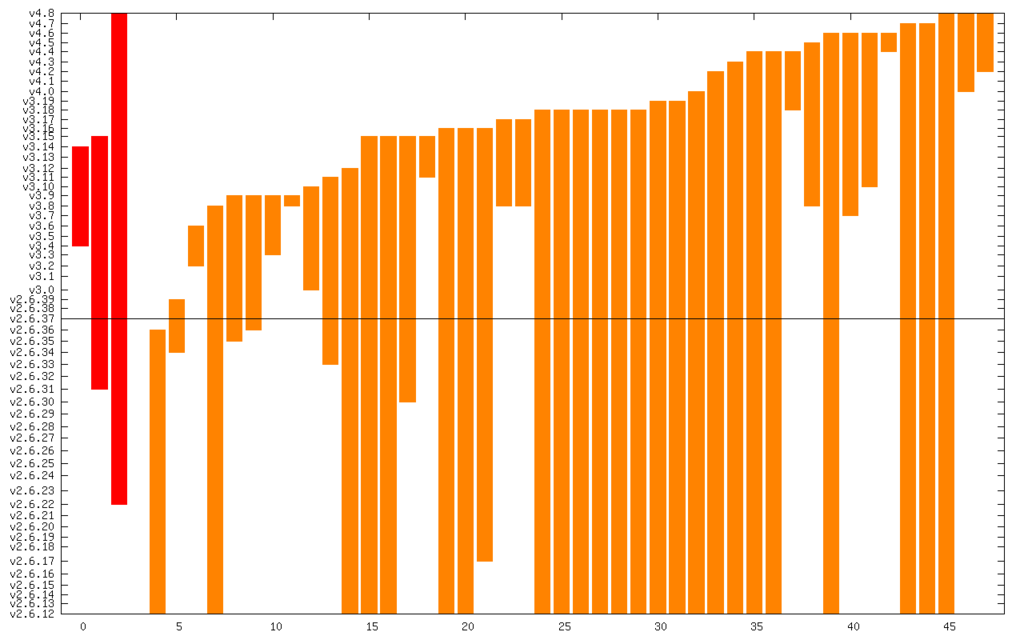

For some reason, the relation is linear when the number of rules is

between 1 and 100 but the slope increases noticeably past this

threshold. The second graph highlights the negative impact of the

first rule (about 30 ns).

A common use of rules is to create virtual routers: interfaces

are segregated into domains and when a datagram enters through an

interface from domain A, it should use routing table A:

For some reason, the relation is linear when the number of rules is

between 1 and 100 but the slope increases noticeably past this

threshold. The second graph highlights the negative impact of the

first rule (about 30 ns).

A common use of rules is to create virtual routers: interfaces

are segregated into domains and when a datagram enters through an

interface from domain A, it should use routing table A:

Reverse engineering protocols is a great deal easier when they're not encrypted. Thankfully most apps I've dealt with have been doing something convenient like using AES with a key embedded in the app, but others use remote protocols over HTTPS and that makes things much less straightforward.

Reverse engineering protocols is a great deal easier when they're not encrypted. Thankfully most apps I've dealt with have been doing something convenient like using AES with a key embedded in the app, but others use remote protocols over HTTPS and that makes things much less straightforward.

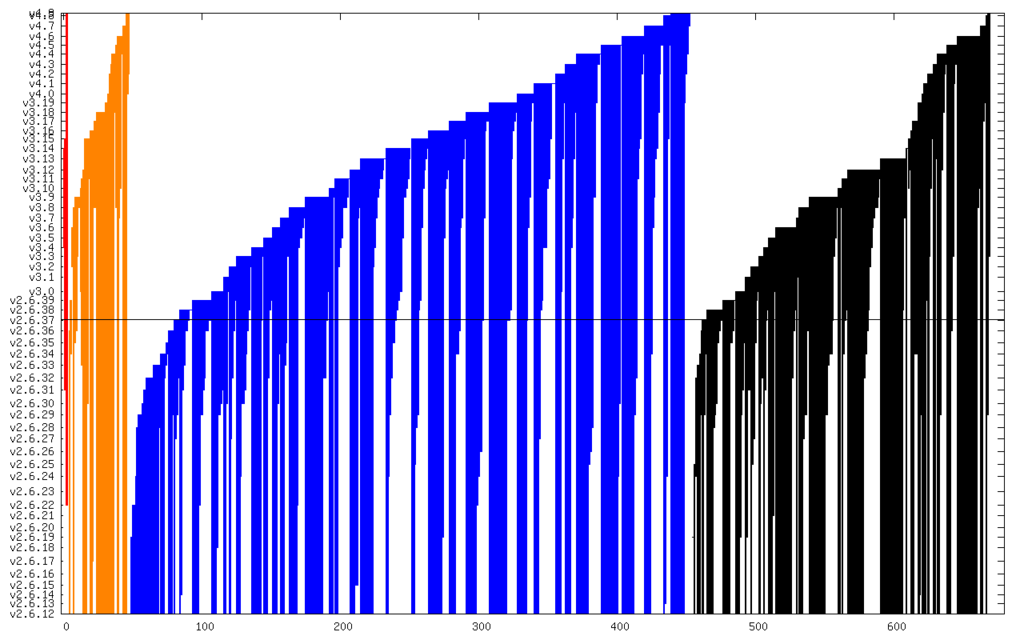

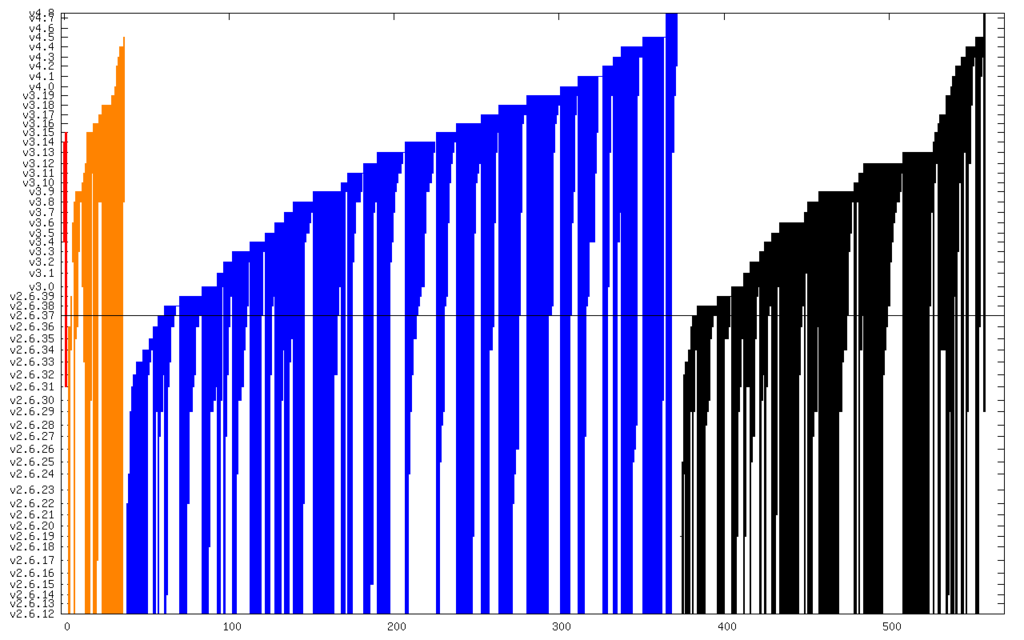

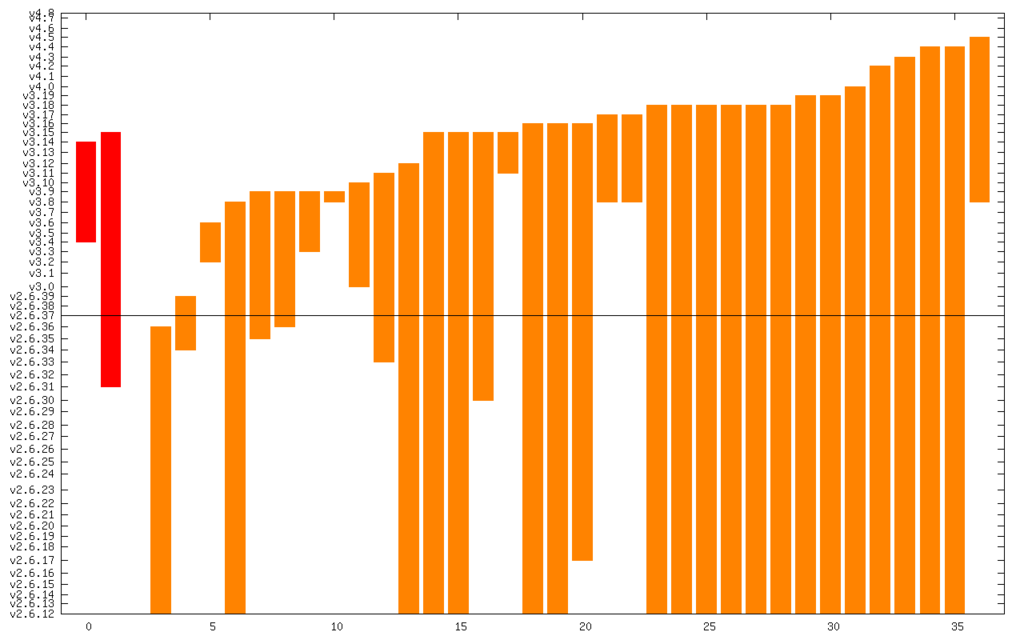

And here it is zoomed in to just Critical and High:

And here it is zoomed in to just Critical and High:

The line in the middle is the date from which I started the CVE search (2011). The vertical axis is actually linear time, but it s labeled with kernel releases (which are pretty regular). The numerical summary is:

The line in the middle is the date from which I started the CVE search (2011). The vertical axis is actually linear time, but it s labeled with kernel releases (which are pretty regular). The numerical summary is:

In order to monitor some of the

In order to monitor some of the