Review:

Going Infinite, by Michael Lewis

| Publisher: |

W.W. Norton & Company |

| Copyright: |

2023 |

| ISBN: |

1-324-07434-5 |

| Format: |

Kindle |

| Pages: |

255 |

My first reaction when I heard that Michael Lewis had been embedded with

Sam Bankman-Fried working on a book when Bankman-Fried's cryptocurrency

exchange FTX collapsed into bankruptcy after losing billions of dollars of

customer deposits was "holy shit, why would you talk to

Michael

Lewis about your dodgy cryptocurrency company?" Followed immediately by

"I have to read this book."

This is that book.

I wasn't sure how Lewis would approach this topic. His normal (although

not exclusive) area of interest is financial systems and crises, and there

is lots of room for multiple books about cryptocurrency fiascoes using

someone like Bankman-Fried as a pivot. But

Going Infinite is not

like

The Big Short or Lewis's other

financial industry books. It's a nearly straight biography of Sam

Bankman-Fried, with just enough context for the reader to follow his life.

To understand what you're getting in

Going Infinite, I think it's

important to understand what sort of book Lewis likes to write. Lewis is

not exactly a reporter, although he does explain complicated things for a

mass audience. He's primarily a storyteller who collects people he finds

fascinating. This book was therefore never going to be like, say,

Carreyrou's

Bad Blood or Isaac's

Super Pumped. Lewis's interest is not

in a forensic account of how FTX or Alameda Research were structured. His

interest is in what makes Sam Bankman-Fried tick, what's going on inside

his head.

That's not a question Lewis directly answers, though. Instead, he shows

you Bankman-Fried as Lewis saw him and was able to reconstruct from

interviews and sources and lets you draw your own conclusions. Boy did I

ever draw a lot of conclusions, most of which were highly unflattering.

However, one conclusion I didn't draw, and had been dubious about even

before reading this book, was that Sam Bankman-Fried was some sort of

criminal mastermind who intentionally plotted to steal customer money.

Lewis clearly doesn't believe this is the case, and with the caveat that

my study of the evidence outside of this book has been spotty and

intermittent, I think Lewis has the better of the argument.

I am utterly fascinated by this, and I'm afraid this review is going to

turn into a long summary of my take on the argument, so here's the capsule

review before you get bored and wander off: This is a highly entertaining

book written by an excellent storyteller. I am also inclined to believe

most of it is true, but given that I'm not on the jury, I'm not that

invested in whether Lewis is too credulous towards the explanations of the

people involved. What I do know is that it's a fantastic yarn with

characters who are too wild to put in fiction, and I thoroughly enjoyed

it.

There are a few things that everyone involved appears to agree on, and

therefore I think we can take as settled. One is that Bankman-Fried, and

most of the rest of FTX and Alameda Research, never clearly distinguished

between customer money and all of the other money. It's not obvious that

their home-grown accounting software (written entirely by one person! who

never spoke to other people! in code that no one else could understand!)

was even capable of clearly delineating between their piles of money.

Another is that FTX and Alameda Research were thoroughly intermingled.

There was no official reporting structure and possibly not even a coherent

list of employees. The environment was so chaotic that lots of people,

including Bankman-Fried, could have stolen millions of dollars without

anyone noticing. But it was also so chaotic that they could, and did,

literally misplace millions of dollars by accident, or because

Bankman-Fried had problems with object permanence.

Something that was previously less obvious from news coverage but that

comes through very clearly in this book is that Bankman-Fried seriously

struggled with normal interpersonal and societal interactions. We know

from multiple sources that he was diagnosed with ADHD and depression

(Lewis describes it specifically as anhedonia, the inability to feel

pleasure). The ADHD in Lewis's account is quite severe and does not sound

controlled, despite medication; for example, Bankman-Fried routinely

played timed video games while he was having important meetings, forgot

things the moment he stopped dealing with them, was constantly on his

phone or seeking out some other distraction, and often stimmed (by

bouncing his leg) to a degree that other people found it distracting.

Perhaps more tellingly, Bankman-Fried repeatedly describes himself in

diary entries and correspondence to other people (particularly Caroline

Ellison, his employee and on-and-off secret girlfriend) as being devoid of

empathy and unable to access his own emotions, which Lewis supports with

stories from former co-workers. I'm very hesitant to diagnose someone via

a book, but, at least in Lewis's account, Bankman-Fried nearly walks down

the symptom list of antisocial personality disorder in his own description

of himself to other people. (The one exception is around physical

violence; there is nothing in this book or in any of the other reporting

that I've seen to indicate that Bankman-Fried was violent or physically

abusive.) One of the recurrent themes of this book is that Bankman-Fried

never saw the point in following rules that didn't make sense to him or

worrying about things he thought weren't important, and therefore simply

didn't.

By about a third of the way into this book, before FTX is even properly

started, very little about its eventual downfall will seem that

surprising. There was no way that Sam Bankman-Fried was going to be able

to run a successful business over time. He was extremely good at

probabilistic trading and spotting exploitable market inefficiencies, and

extremely bad at essentially every other aspect of living in a society

with other people, other than a hit-or-miss ability to charm that worked

much better with large audiences than one-on-one. The real question was

why anyone would ever entrust this man with millions of dollars or decide

to work for him for longer than two weeks.

The answer to those questions changes over the course of this story.

Later on, it was timing. Sam Bankman-Fried took the techniques of high

frequency trading he learned at Jane Street Capital and applied them to

exploiting cryptocurrency markets at precisely the right time in the

cryptocurrency bubble. There was far more money than sense, the most

ruthless financial players were still too leery to get involved, and a

rising tide was lifting all boats, even the ones that were piles of

driftwood. When cryptocurrency inevitably collapsed, so did his

businesses. In retrospect, that seems inevitable.

The early answer, though, was effective altruism.

A full discussion of effective altruism is beyond the scope of this

review, although Lewis offers a decent introduction in the book. The

short version is that a sensible and defensible desire to use stronger

standards of evidence in evaluating charitable giving turned into a

bizarre navel-gazing exercise in making up statistical risks to

hypothetical future people and treating those made-up numbers as if they

should be the bedrock of one's personal ethics. One of the people most

responsible for this turn is an Oxford philosopher named

Will MacAskill.

Sam Bankman-Fried was already obsessed with utilitarianism, in part due to

his parents' philosophical beliefs, and it was a presentation by Will

MacAskill that converted him to the effective altruism variant of extreme

utilitarianism.

In Lewis's presentation, this was like joining a cult. The impression I

came away with feels like something out of a

science fiction novel: Bankman-Fried knew there was some serious gap in

his thought processes where most people had empathy, was deeply troubled

by this, and latched on to effective altruism as the ethical framework to

plug into that hole. So much of effective altruism sounds like a con game

that it's easy to think the participants are lying, but Lewis clearly

believes Bankman-Fried is a true believer. He appeared to be sincerely

trying to make money in order to use it to solve existential threats to

society, he does not appear to be motivated by money apart from that goal,

and he was following through (in bizarre and mostly ineffective ways).

I find this particularly believable because effective altruism as a belief

system seems designed to fit Bankman-Fried's personality and justify the

things he wanted to do anyway. Effective altruism says that empathy is

meaningless, emotion is meaningless, and ethical decisions should be made

solely on the basis of expected value: how much return (usually in safety)

does society get for your investment. Effective altruism says that all

the things that Sam Bankman-Fried was bad at were useless and unimportant,

so he could stop feeling bad about his apparent lack of normal human

morality. The only thing that mattered was the thing that he was

exceptionally good at: probabilistic reasoning under uncertainty. And,

critically to the foundation of his business career, effective altruism

gave him access to investors and a recruiting pool of employees, things he

was entirely unsuited to acquiring the normal way.

There's a ton more of this book that I haven't touched on, but this review

is already quite long, so I'll leave you with one more point.

I don't know how true Lewis's portrayal is in all the details. He took

the approach of getting very close to most of the major players in this

drama and largely believing what they said happened, supplemented by

startling access to sources like Bankman-Fried's personal diary and

Caroline Ellis's personal diary. (He also seems to have gotten extensive

information from the personal psychiatrist of most of the people involved;

I'm not sure if there's some reasonable explanation for this, but based

solely on the material in this book, it seems to be a shocking breach of

medical ethics.) But Lewis is a storyteller more than he's a reporter,

and his bias is for telling a great story. It's entirely possible that

the events related here are not entirely true, or are skewed in favor of

making a better story. It's certainly true that they're not the complete

story.

But, that said, I think a book like this is a useful counterweight to the

human tendency to believe in moral villains. This is, frustratingly, a

counterweight extended almost exclusively to higher-class white people

like Bankman-Fried. This is infuriating, but that doesn't make it wrong.

It means we should extend that analysis to more people.

Once FTX collapsed, a lot of people became very invested in the idea that

Bankman-Fried was a straightforward embezzler. Either he intended from

the start to steal everyone's money or, more likely, he started losing

money, panicked, and stole customer money to cover the hole. Lots of

people in history have done exactly that, and lots of people involved in

cryptocurrency have tenuous attachments to ethics, so this is a believable

story. But people are complicated, and there's also truth in the maxim

that every villain is the hero of their own story. Lewis is after a less

boring story than "the crook stole everyone's money," and that leads to

some bias. But sometimes the less boring story is also true.

Here's the thing: even if Sam Bankman-Fried never intended to take any

money, he clearly did intend to mix customer money with Alameda Research

funds. In Lewis's account, he never truly believed in them as separate

things. He didn't care about following accounting or reporting rules; he

thought they were boring nonsense that got in his way. There is obvious

criminal intent here in any reading of the story, so I don't think Lewis's

more complex story would let him escape prosecution. He refused to follow

the rules, and as a result a lot of people lost a lot of money. I think

it's a useful exercise to leave mental space for the possibility that he

had far less obvious reasons for those actions than that he was a simple

thief, while still enforcing the laws that he quite obviously violated.

This book was great. If you like Lewis's style, this was some of the best

entertainment I've read in a while. Highly recommended; if you are at all

interested in this saga, I think this is a must-read.

Rating: 9 out of 10

I work from home these days, and my nearest office is over 100 miles away, 3 hours door to door if I travel by train (and, to be honest, probably not a lot faster given rush hour traffic if I drive). So I m reliant on a functional internet connection in order to be able to work. I m lucky to have access to Openreach FTTP, provided by Aquiss, but I worry about what happens if there s a cable cut somewhere or some other long lasting problem. Worst case I could tether to my work phone, or try to find some local coworking space to use while things get sorted, but I felt like arranging a backup option was a wise move.

Step 1 turned out to be sorting out recursive DNS. It s been many moons since I had to deal with running DNS in a production setting, and I ve mostly done my best to avoid doing it at home too. dnsmasq has done a decent job at providing for my needs over the years, covering DHCP, DNS (+ tftp for my test device network). However I just let it slave off my ISP s nameservers, which means if that link goes down it ll no longer be able to resolve anything outside the house.

One option would have been to either point to a different recursive DNS server (Cloudfare s 1.1.1.1 or Google s Public DNS being the common choices), but I ve no desire to share my lookup information with them. As another approach I could have done some sort of failover of

I work from home these days, and my nearest office is over 100 miles away, 3 hours door to door if I travel by train (and, to be honest, probably not a lot faster given rush hour traffic if I drive). So I m reliant on a functional internet connection in order to be able to work. I m lucky to have access to Openreach FTTP, provided by Aquiss, but I worry about what happens if there s a cable cut somewhere or some other long lasting problem. Worst case I could tether to my work phone, or try to find some local coworking space to use while things get sorted, but I felt like arranging a backup option was a wise move.

Step 1 turned out to be sorting out recursive DNS. It s been many moons since I had to deal with running DNS in a production setting, and I ve mostly done my best to avoid doing it at home too. dnsmasq has done a decent job at providing for my needs over the years, covering DHCP, DNS (+ tftp for my test device network). However I just let it slave off my ISP s nameservers, which means if that link goes down it ll no longer be able to resolve anything outside the house.

One option would have been to either point to a different recursive DNS server (Cloudfare s 1.1.1.1 or Google s Public DNS being the common choices), but I ve no desire to share my lookup information with them. As another approach I could have done some sort of failover of

This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts In lieu of this, however, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) have spurred you into picking something up yourself

This post should have marked the beginning of my yearly roundups of the favourite books and movies I read and watched in 2023.

However, due to coming down with a nasty bout of flu recently and other sundry commitments, I wasn't able to undertake writing the necessary four or five blog posts In lieu of this, however, I will simply present my (unordered and unadorned) highlights for now. Do get in touch if this (or any of my previous posts) have spurred you into picking something up yourself

It's August Bank Holiday Weekend, we're in Cambridge. It must be

the Debian

UK

It's August Bank Holiday Weekend, we're in Cambridge. It must be

the Debian

UK  Back in June 2018,

Back in June 2018,  So This is basically a call for adoption for the Raspberry Debian images

building service. I do intend to stick around and try to help. It s not only me

(although I m responsible for the build itself) we have a nice and healthy

group of Debian people hanging out in the

So This is basically a call for adoption for the Raspberry Debian images

building service. I do intend to stick around and try to help. It s not only me

(although I m responsible for the build itself) we have a nice and healthy

group of Debian people hanging out in the  About a week back Jio launched a

About a week back Jio launched a

Unlike the Americans who chose the path to have more competition, we have chosen the path to have more monopolies. So even though, I very much liked Louis es

Unlike the Americans who chose the path to have more competition, we have chosen the path to have more monopolies. So even though, I very much liked Louis es

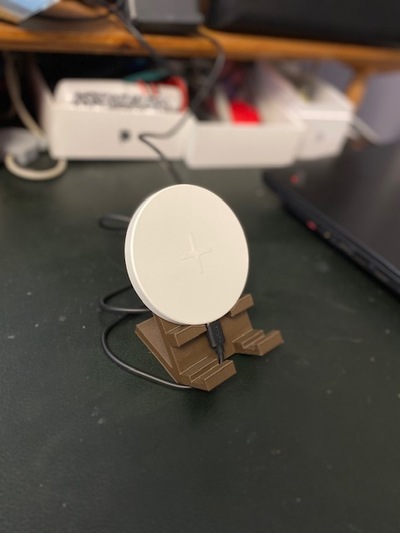

I've got a Qi-charging phone cradle at home which orients the phone up at an angle

which works with Apple's Face ID. At work, I've got a simpler "puck"-shaped one

which is less convenient, so I designed a basic cradle to raise both the charger

and the phone up.

I've got a Qi-charging phone cradle at home which orients the phone up at an angle

which works with Apple's Face ID. At work, I've got a simpler "puck"-shaped one

which is less convenient, so I designed a basic cradle to raise both the charger

and the phone up.