DebConf20 starts in about 5 weeks

DebConf20 starts in about 5 weeks, and as always, the DebConf

Videoteam is working hard to make sure it'll be a success. As such, we held a

sprint from July 9th to 13th to work on our new infrastructure.

A remote sprint certainly ain't as fun as an in-person one, but we nonetheless

managed to enjoy ourselves. Many thanks to those who participated, namely:

- Carl Karsten (CarlFK)

- Ivo De Decker (ivodd)

- Kyle Robbertze (paddatrapper)

- Louis-Philippe Véronneau (pollo)

- Nattie Mayer-Hutchings (nattie)

- Nicolas Dandrimont (olasd)

- Stefano Rivera (tumbleweed)

- Wouter Verhelst (wouter)

We also wish to extend our thanks to Thomas Goirand and

Infomaniak for

providing us with virtual machines to experiment on and host the video

infrastructure for DebConf20.

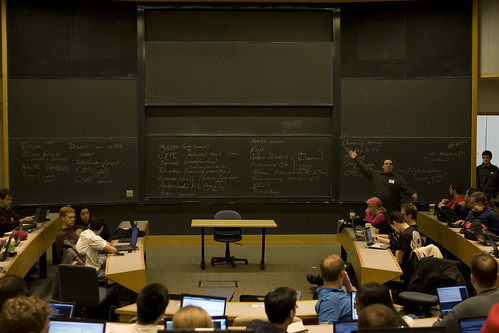

Advice for presenters

For DebConf20, we strongly encourage presenters to record their talks in

advance and send us the resulting video. We understand this is more work,

but we think it'll make for a more agreeable conference for everyone. Video

conferencing is still pretty wonky and there is nothing worse than a talk

ruined by a flaky internet connection or hardware failures.

As such, if you are giving a talk at DebConf this year, we are asking you to

read and follow our guide on

how to record your presentation.

Fear not: we are not getting rid of the Q&A period at the end of talks.

Attendees will ask their questions either on IRC or on a collaborative pad

and the Talkmeister will relay them to the speaker once the pre-recorded

video has finished playing.

New infrastructure, who dis?

Organising a virtual DebConf implies migrating from our battle-tested

on-premise workflow to a completely new remote one.

One of the major changes this means for us is the addition of Jitsi Meet to our

infrastructure. We normally have 3 different video sources in a room: two

cameras and a slides grabber. With the new online workflow, directors will be

able to play pre-recorded videos as a source, will get a feed from a Jitsi room

and will see the audience questions as a third source.

This might seem simple at first, but it is in fact a major change to our

workflow and required a lot of work to implement.

          == On-premise ==                                       == Online ==

              Camera 1                                              Jitsi
                 v                 ---> Frontend                      v                 ---> Frontend
    Slides -> Voctomix -> Backend -+--> Frontend      Questions -> Voctomix -> Backend -+--> Frontend
                 ^                 ---> Frontend                      ^                 ---> Frontend
              Camera 2                                        Pre-recorded video

In our tests, playing back pre-recorded videos to voctomix worked well, but was

sometimes unreliable due to inconsistent encoding settings. Presenters will

thus upload their pre-recorded talks to

SReview so we can make sure there

aren't any obvious errors. Videos will then be re-encoded to ensure a

consistent encoding and to normalise audio levels.

This process will also let us stitch the Q&As at the end of the pre-recorded

videos more easily prior to publication.
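To give an idea of what such a re-encoding step can look like, here is a minimal sketch that builds an `ffmpeg` command line. It is not SReview's actual implementation: the function name, file names, codec, frame rate and sample rate are all assumptions picked for illustration. The only point is that every upload goes through the same video settings and through ffmpeg's `loudnorm` audio filter.

```python
import shlex
from pathlib import Path


def build_reencode_command(src: Path, dst: Path, fps: int = 25) -> list[str]:
    """Build an ffmpeg command that re-encodes a talk to uniform settings.

    All concrete values (codec, frame rate, sample rate) are illustrative
    assumptions, not the Videoteam's real settings.
    """
    return [
        "ffmpeg",
        "-i", str(src),
        # Video: one codec, one pixel format, one constant frame rate.
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        "-r", str(fps),
        # Audio: loudness normalisation, then a fixed sample rate and
        # a stereo layout.
        "-af", "loudnorm",
        "-c:a", "aac",
        "-ar", "48000",
        "-ac", "2",
        str(dst),
    ]


if __name__ == "__main__":
    cmd = build_reencode_command(Path("talk-upload.mkv"), Path("talk-normalised.mp4"))
    # Print the command instead of running it, so the sketch has no side effects.
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell quoting problems with unusual file names, and the result can be passed to `subprocess.run` unchanged.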

Reducing the stream latency

One of the pitfalls of the streaming infrastructure we have been using since

2016 is high video latency. In a worst-case scenario, remote attendees could

get up to 45 seconds of latency, making participation in events like BoFs

arduous.

In preparation for DebConf20, we added a new way to stream our talks: RTMP.

Attendees will thus have the option of using either an HLS stream with higher

latency or an RTMP stream with lower latency.

Here is a comparative table that can help you decide between the two protocols:

|      | HLS | RTMP |
|------|-----|------|
| Pros | Can be watched from a browser; auto-selects a stream encoding; single URL to remember | Lower latency |
| Cons | Higher latency (up to 45s) | Requires a dedicated video player (VLC, mpv); specific URLs for each encoding setting |
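The trade-offs listed above boil down to a small decision rule. The sketch below is only one way to read them; the function and parameter names are made up for this example.

```python
def pick_stream(has_dedicated_player: bool, needs_low_latency: bool) -> str:
    """Return "RTMP" or "HLS" following the pros and cons listed above."""
    # RTMP only helps if the viewer can open it in a player such as VLC or mpv.
    if needs_low_latency and has_dedicated_player:
        return "RTMP"
    # HLS works in a browser and auto-selects an encoding, at the cost of latency.
    return "HLS"
```

In other words, someone taking part in a BoF and running mpv would lean towards RTMP, while someone casually watching a talk in a browser is better served by HLS.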

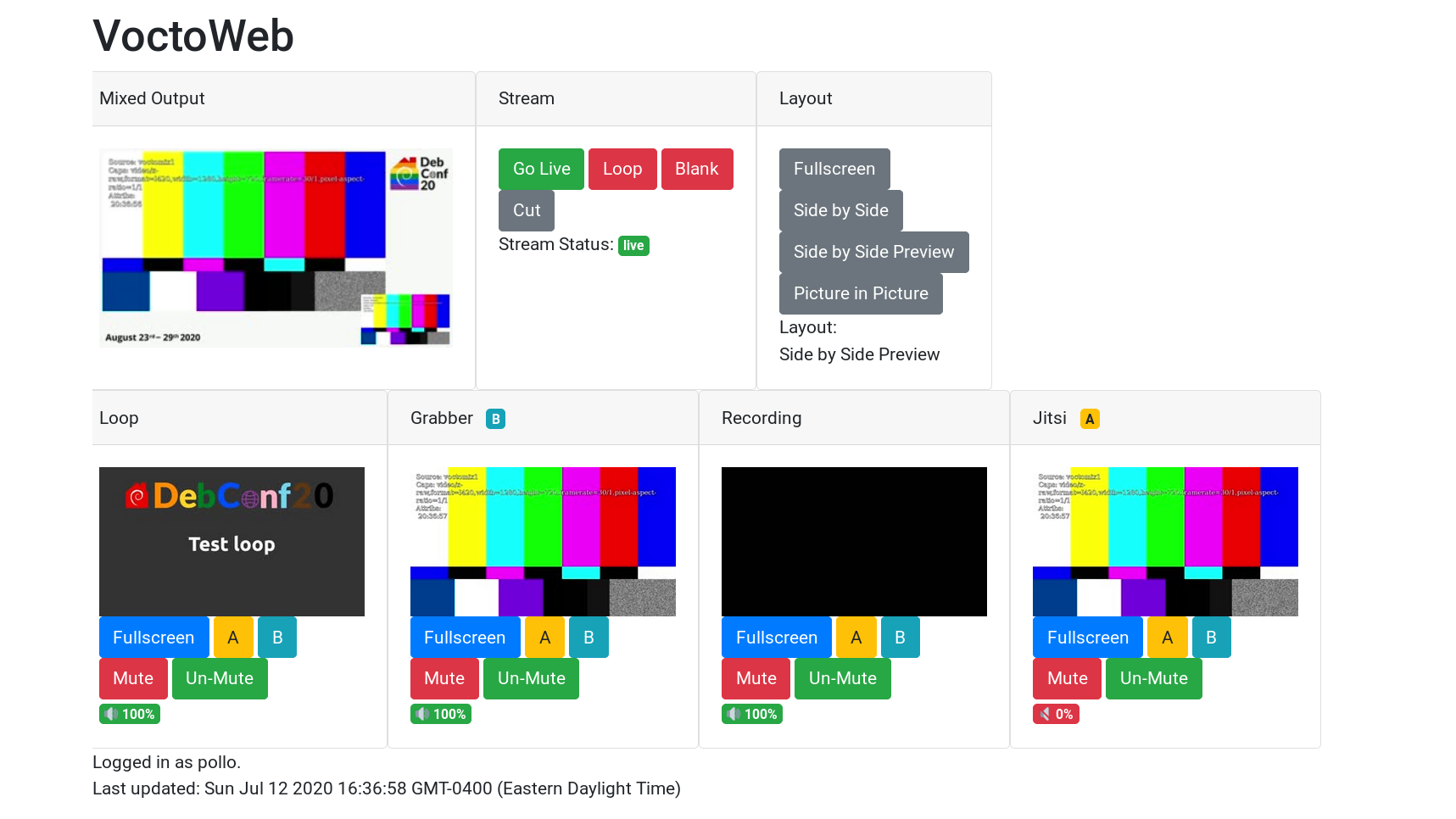

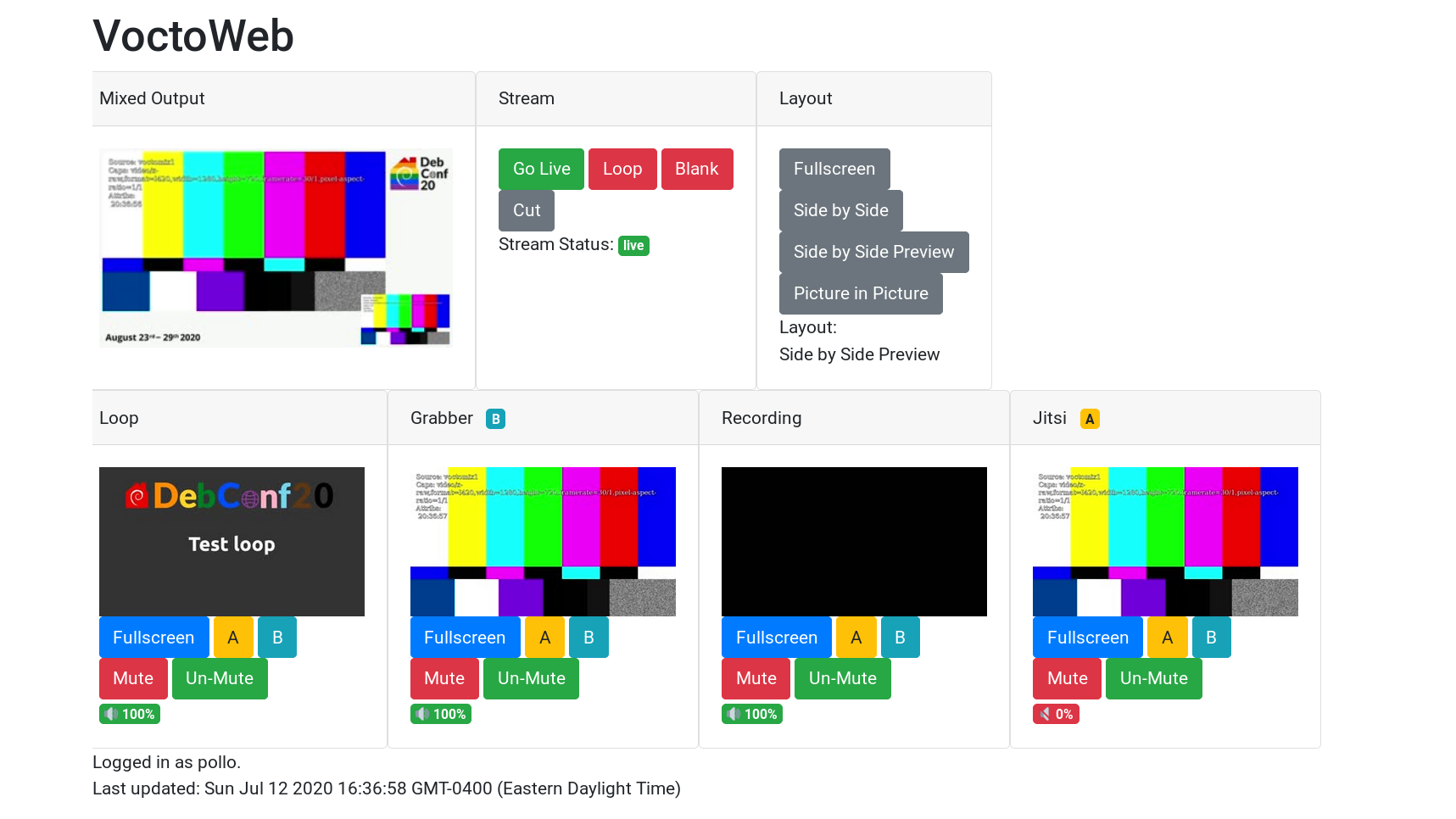

Live mixing from home with VoctoWeb

Since DebConf16, we have been using

voctomix, a live video mixer developed

by the CCC VOC. voctomix is conveniently divided in two: voctocore is the

backend server while voctogui is a GTK+ UI frontend directors can use to

live-mix.

Although voctogui can connect to a remote server, it was primarily designed to

run either on the same machine as voctocore or on the same LAN. Trying to use

voctogui from a machine at home to connect to a voctocore running in a

datacenter proved unreliable, especially for high-latency and low-bandwidth

connections.

Inspired by the setup FOSDEM uses, we instead decided to go with a web frontend

for voctocore. We initially used

FOSDEM's code as a proof of

concept, but quickly reimplemented it in Python, a language we are more

familiar with as a team.

Compared to the FOSDEM PHP implementation, voctoweb implements A / B source

selection (akin to voctogui) as well as audio control, two very useful

features. In the following screen captures, you can see the old PHP UI on the

left and the new shiny Python one on the right.
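For the curious, here is a rough sketch of how a web frontend could drive A / B source selection on voctocore. This is not voctoweb's code. It assumes that voctocore exposes a line-based TCP control server on port 9999 and understands `set_video_a` and `set_video_b` commands, so check this against the voctomix version you run. The source names are hypothetical.

```python
import socket

# Assumptions: voctocore's control server is reachable on TCP port 9999 and
# accepts newline-terminated text commands such as "set_video_a <source>".
CONTROL_PORT = 9999


def ab_commands(source_a: str, source_b: str) -> list[str]:
    """Return the control lines selecting the A and B video sources."""
    for name in (source_a, source_b):
        # Source names are single tokens; reject anything that would break
        # the one-command-per-line format.
        if not name or any(ch.isspace() for ch in name):
            raise ValueError(f"invalid source name: {name!r}")
    return [f"set_video_a {source_a}", f"set_video_b {source_b}"]


def send_commands(host: str, commands: list[str], port: int = CONTROL_PORT) -> None:
    """Send each command as one line over a short-lived TCP connection."""
    with socket.create_connection((host, port), timeout=5) as conn:
        for line in commands:
            conn.sendall(line.encode("utf-8") + b"\n")


if __name__ == "__main__":
    # Source names are hypothetical; nothing is sent over the network here.
    print(ab_commands("jitsi", "recording"))
```

A web application would typically run next to voctocore and call something like `send_commands("localhost", ab_commands("jitsi", "recording"))` when a director clicks a button, so only small HTTP requests travel over the director's home connection.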

Voctoweb

Voctoweb is still under development and is likely to change quite a bit

until DebConf20. Still, the current version seems to work well enough to be

used in production if you ever need to.

Python GeoIP redirector

We run multiple geographically-distributed streaming frontend servers to

minimize the load on our streaming backend and to reduce overall latency.

Although users can connect to the frontends directly, we typically point them

to

live.debconf.org and redirect connections to the nearest server.

Sadly,

6 months ago MaxMind decided to change the licence on their

GeoLite2 database and left us scrambling. To fix this annoying issue, Stefano

Rivera wrote a Python program that uses the new database and reworked

our

ansible frontend server role. Since the new database cannot be

redistributed freely, you'll have to get a (free) license key from MaxMind if

you want to use this role.
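The core idea of a GeoIP redirector can be sketched in a few lines. The example below is not Stefano's program: it assumes the client's coordinates have already been looked up in the GeoLite2 database, and the frontend hostnames and coordinates are invented. It simply picks the frontend with the smallest great-circle distance to the client.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical frontends with approximate (latitude, longitude) coordinates.
FRONTENDS = {
    "frontend-eu.example.org": (48.86, 2.35),    # Paris
    "frontend-na.example.org": (45.50, -73.57),  # Montreal
    "frontend-af.example.org": (-33.92, 18.42),  # Cape Town
}


def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(h))


def nearest_frontend(client, frontends=FRONTENDS):
    """Return the hostname of the frontend closest to the client."""
    return min(frontends, key=lambda host: haversine_km(client, frontends[host]))


def redirect_url(client, path="/"):
    """Build the URL a redirector could send in an HTTP redirect."""
    return f"https://{nearest_frontend(client)}{path}"
```

Geographic distance is only a proxy for network distance, so a real redirector may also want to account for server load or let users override the choice.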

Ansible & CI improvements

Infrastructure as code is a living process and needs constant care to fix bugs,

follow changes in DSL and to implement new features. All that to say a large

part of the sprint was spent making our ansible roles and continuous

integration setup more reliable, less buggy and more featureful.

All in all, we merged 26 separate ansible-related merge requests during the

sprint! As always, if you are good with ansible and wish to help, we accept

merge requests on our

ansible repository :)

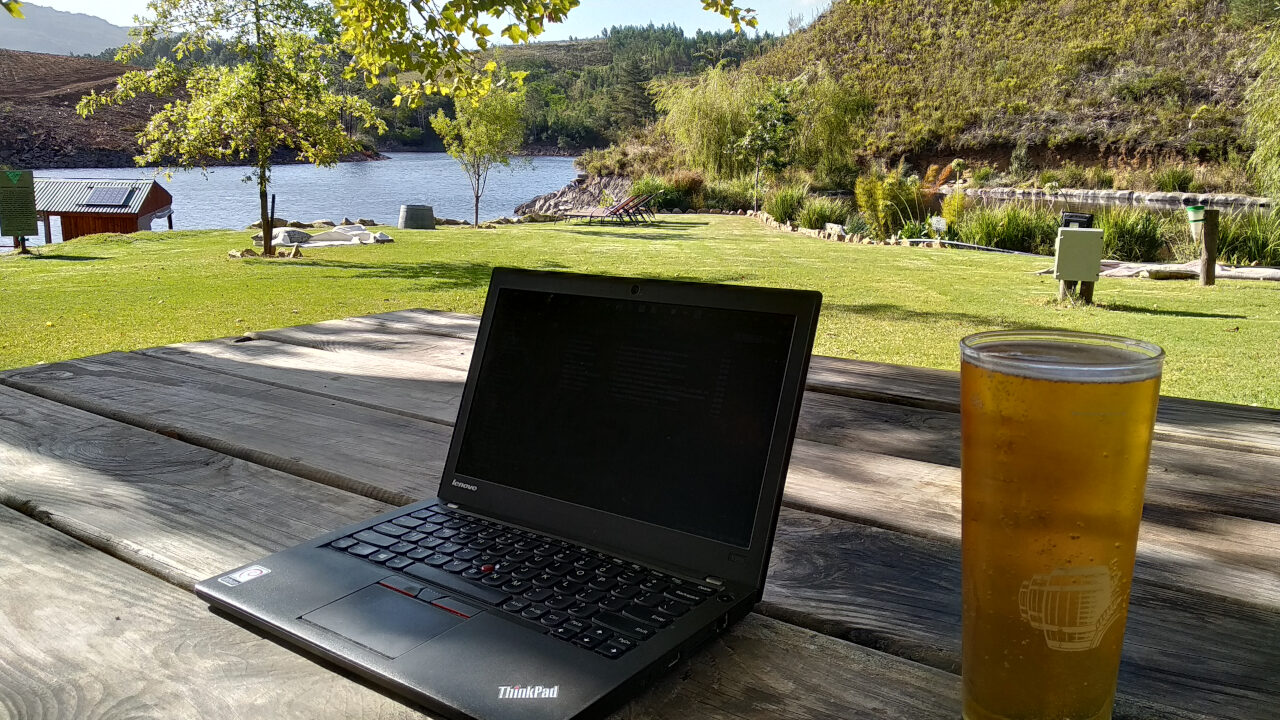

The Debian Videoteam has been

sprinting in

Cape Town, South Africa -- mostly because with Stefano here for a few

months, four of us (Jonathan, Kyle, Stefano, and myself) actually are in

the country on a regular basis. In addition to that, two more members of

the team (Nicolas and Louis-Philippe) are joining the sprint remotely

(from Paris and Montreal).


(Kyle and Stefano working on things, with me behind the camera and

Jonathan busy elsewhere.)

We've made loads of

progress!

Some highlights:


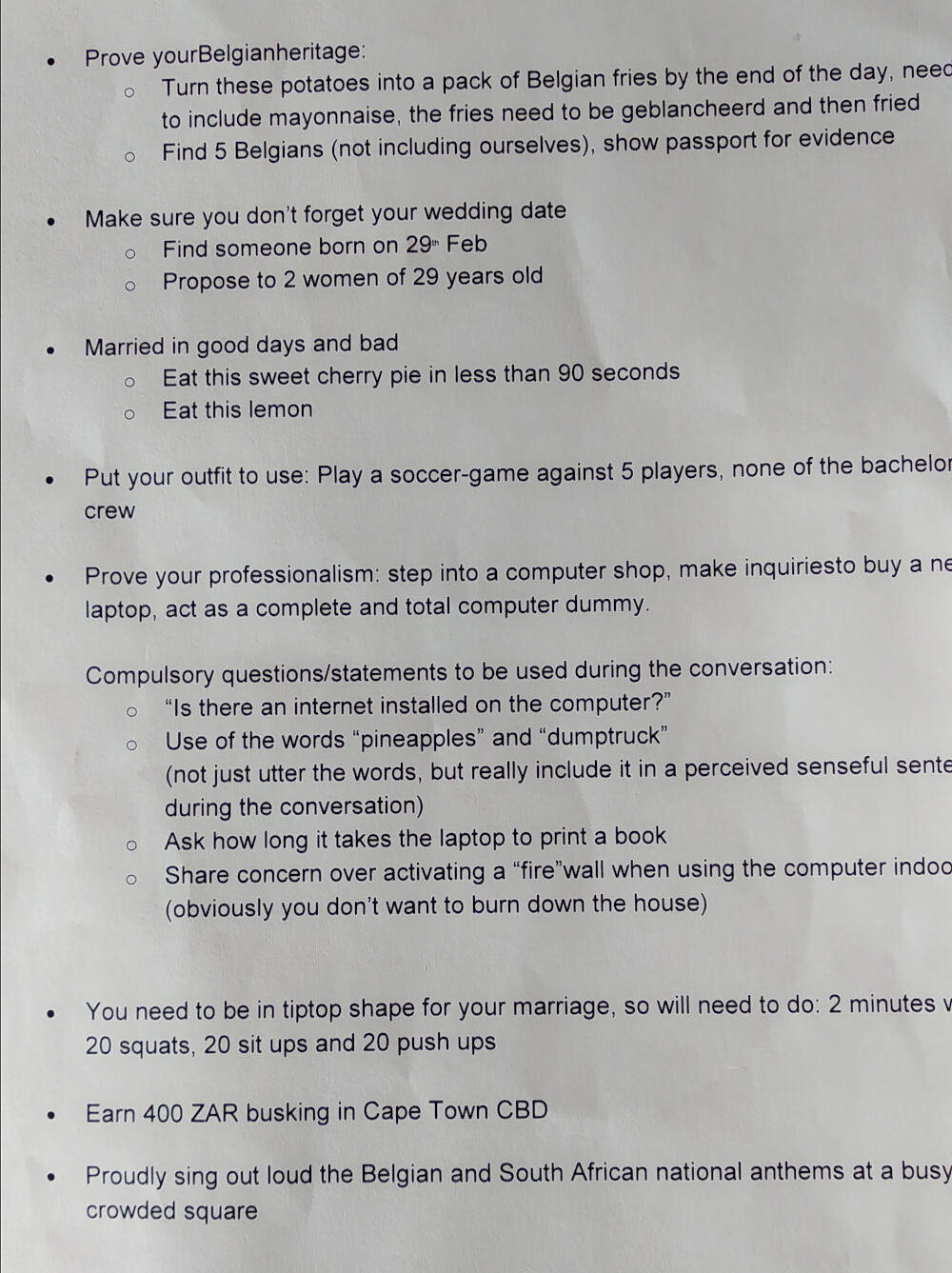

Wouter's tasks

Wouter's props, needed to complete his tasks

Bike tour leg at Cape Town Stadium.

Seeking out 29 year olds.

Wouter finishing his lemon and actually seemingly enjoying it.

Reciting South African national anthem notes and lyrics.

The national anthem, as performed by Wouter (I was actually impressed by how good his pitch was).

Accommodation at the lodge

Debian swirls everywhere

I took a canoe ride on the river and look what I found, a paddatrapper!

A bit of digital zoomage of previous image.

Time to say the vows.

Just married. Thanks to Sue Fuller-Good for the photo.

Except for one character being out of place, this was a perfect fairy tale wedding, but I pointed Wouter to

In a few days, I'll be attending

I have just released version 1.19 of Obnam, the backup program. See

the website at

I will be speaking at the

As I am attempting to focus on writing projects that are more scholarly

and academic on the one hand (i.e., work for

Reading Kyle's view on

two words^W^Wone command.

# echo blacklist pcspkr >/etc/modprobe.d/blacklist-pcspkr

That is all.
