TLDR: With the help of Helmut Grohne I finally figured out most of the bits necessary to unshare everything without becoming root (though one might say that this is still cheating because the suid root tools newuidmap and newgidmap are used). I wrote a Perl script which documents how this is done in practice. This script is nearly equivalent to using the existing commands lxc-usernsexec [opts] -- unshare [opts] -- COMMAND, except that these two together cannot be used to mount a new proc. Apart from this problem, this Perl script might also be useful by itself because it is architecture independent and easily inspectable for the curious mind without resorting to sources.debian.net (it is heavily documented, with nearly 2 lines of comments per line of code on average). It can be retrieved here:

https://gitlab.mister-muffin.de/josch/user-unshare/blob/master/user-unshare

Long story: Nearly two years after my last rant about everything needing superuser privileges in Linux, I'm still interested in techniques that let me do more things without becoming root. Helmut Grohne had been telling me for a while about unshare(), or rather user namespaces, as the right way to have things like chroot without root. There are also reports of LXC containers working without root privileges, but they are hard to come by. A couple of days ago I had some time again, so Helmut helped me get through the major blockers that had so far stopped me from using unshare in a meaningful way without executing everything with sudo.

My main motivation at that point was to let dpkg-buildpackage, when executed by sbuild, run with an unshared network namespace and thus without network access (except for the loopback interface), because, like pbuilder, I wanted sbuild to enforce the rule not to access any remote resources during the build.

After several evenings of investigating and tinkering with the Perl script I mentioned initially, I came to the conclusion that the only place that can unshare the network namespace without disrupting anything is schroot itself. Unsharing inside the chroot will fail because dpkg-buildpackage is run with non-root privileges, so the user namespace has to be unshared as well, and that in turn destroys all ownership information. But even if that weren't the case, the chroot itself is unlikely to have (and also should not have) tools like ip or newuidmap and newgidmap installed. Unsharing the schroot call itself will not work either: again, we first need to unshare the user namespace, and then schroot will complain about wrong ownership of its configuration file /etc/schroot/schroot.conf. Luckily, when contacting Roger Leigh about this wishlist feature in bug#802849, I was told that this was already implemented in its git master \o/. So this particular problem seems to be taken care of, and once the next schroot release happens, sbuild will make use of it and have unshare --net capabilities just like pbuilder, which has had them since last year.
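To make the ownership problem a bit more concrete: inside a user namespace the kernel translates every uid through the namespace's uid map (readable from /proc/self/uid_map as lines of "first id inside, first id outside, length"), and any uid without a mapping shows up as the overflow uid, 65534 ("nobody") by default. That is also why parts of /proc appear as owned by nobody in the demonstration further down. The following POSIX shell sketch only mimics that translation; map_host_uid is a hypothetical helper for illustration, not something from user-unshare or the kernel.

```shell
#!/bin/sh
# Sketch: translate a uid of the parent namespace into the uid a process
# inside the user namespace would see, given a uid_map-style file.
# Each line has three fields: first id inside the namespace, first id
# outside (in the parent namespace) and the length of the range.
# map_host_uid is a hypothetical helper, not part of user-unshare.
map_host_uid() {
    host_uid=$1
    map_file=${2:-/proc/self/uid_map}
    while read -r inside outside count; do
        if [ "$host_uid" -ge "$outside" ] && [ "$host_uid" -lt $((outside + count)) ]; then
            echo $((inside + host_uid - outside))
            return 0
        fi
    done < "$map_file"
    # ids without a mapping appear as the overflow uid (65534 by default)
    echo 65534
}
```

For example, with a map file containing the single line "0 1000 1", map_host_uid 1000 FILE prints 0 while map_host_uid 0 FILE prints 65534: the host's root owns nothing recognizable inside the namespace anymore.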

With the sbuild case taken care of, the rest of this post will introduce the Perl script I wrote.

The name user-unshare is really arbitrary. I just needed some identifier for the git repository and a filename.

The most important discovery I made was that Debian disables unprivileged user namespaces by default with the patch add-sysctl-to-disallow-unprivileged-CLONE_NEWUSER-by-default.patch to the Linux kernel. To enable them, one first has to run either

echo 1 | sudo tee /proc/sys/kernel/unprivileged_userns_clone > /dev/null

or

sudo sysctl -w kernel.unprivileged_userns_clone=1
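To find out which state a system is in before trying any of this, the knob can simply be read back. A small POSIX shell sketch, assuming the Debian-specific path from above; kernels without the Debian patch do not have this file at all, so the sketch reports "unknown" in that case instead of guessing. userns_clone_status is a hypothetical name.

```shell
#!/bin/sh
# Report whether unprivileged user namespaces are enabled via the
# Debian-specific kernel.unprivileged_userns_clone sysctl.
# The optional argument overrides the path (hypothetical, handy for testing).
userns_clone_status() {
    file=${1:-/proc/sys/kernel/unprivileged_userns_clone}
    if [ ! -r "$file" ]; then
        # kernel without the Debian patch: this knob does not exist
        echo unknown
        return 0
    fi
    case $(cat "$file") in
        1) echo enabled ;;
        0) echo disabled ;;
        *) echo unknown ;;
    esac
}

userns_clone_status
```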

The tool tries to be like unshare(1) but with the power of lxc-usernsexec(1) to map more than one id into the new user namespace by using the programs newgidmap and newuidmap. Or in other words: this tool tries to be like lxc-usernsexec(1) but with the power of unshare(1) to unshare more than just the user and mount namespaces. It is nearly equal to calling:

lxc-usernsexec [opts] -- unshare [opts] -- COMMAND
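To show what mapping more than one id means in practice: newuidmap and newgidmap take a target PID followed by triples of (id inside the namespace, id outside, count), and they check the outside ranges against what /etc/subuid and /etc/subgid grant to the calling user (lines of the form name:start:count). The following POSIX shell sketch only prints, and does not run, a newuidmap command that maps the caller's own uid to 0 and the caller's first subordinate range to ids starting at 1, roughly the layout of the lxc-usernsexec example further down. The function name and its optional file argument are hypothetical.

```shell
#!/bin/sh
# Print (do not execute) a newuidmap command line that maps the caller's
# uid to 0 inside the new user namespace and the caller's first
# subordinate uid range to ids starting at 1.
# newuidmap syntax: newuidmap PID INSIDE OUTSIDE COUNT [INSIDE OUTSIDE COUNT]...
# build_newuidmap_cmd is a hypothetical helper, not part of user-unshare.
build_newuidmap_cmd() {
    pid=$1
    subuid_file=${2:-/etc/subuid}
    uid=$(id -u)
    user=$(id -un 2>/dev/null)
    # entries look like name:start:count; the first field may also be a
    # numeric uid, so accept either form and use the first match
    range=$(awk -F: -v u="$user" -v n="$uid" \
        '$1 != "" && ($1 == u || $1 == n) { print $2 ":" $3; exit }' \
        "$subuid_file")
    if [ -z "$range" ]; then
        echo "no subordinate uids for ${user:-$uid} in $subuid_file" >&2
        return 1
    fi
    start=${range%%:*}
    count=${range#*:}
    echo "newuidmap $pid 0 $uid 1 1 $start $count"
}
```

The same pattern applies to newgidmap with /etc/subgid. In a real tool the PID would presumably be that of a child process which has already unshared its user namespace and waits until the parent has written its maps, since /proc/PID/uid_map can only be written once.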

Its main reasons for existence are:

- as a project for me to learn how unprivileged namespaces work
- written in Perl, which means:
  - architecture independent (same executable on any architecture)
  - easily inspectable by other curious minds
- tons of code comments to let others understand how things work
- no need to install the lxc package in a minimal environment (perl itself might not be called minimal either, but it is present in every Debian installation)
- not suffering from being unable to mount proc

I hoped that systemd-nspawn could do what I wanted, but it seems that its requirement for being run as root will not change any time soon.

Another tool in Debian that offers to do chroot without superuser privileges is linux-user-chroot, but that one cheats by being suid root.

Had I found lxc-usernsexec earlier, I probably would not have written this. But after I found it, I happily used it to get an even better understanding of the matter and to further improve the comments in my code. I started writing my own tool in Perl because that's the language sbuild is written in and, as mentioned initially, I intended to use this script with sbuild. Now that the sbuild problem is taken care of, this is not so important anymore, but I like being able to read the code of simple programs I run directly from /usr/bin without having to retrieve the source code first or use sources.debian.net.

The only thing I wasn't able to figure out is how to properly mount proc into my new mount namespace. I found a workaround that works by first mounting a new proc to /proc and then bind-mounting /proc to whatever new location for proc is requested. I didn't figure out how to do this without mounting to /proc first, partly also because this doesn't work at all when using lxc-usernsexec and unshare together. In this respect, this Perl script is a bit more powerful than those two tools together. I suppose the reason is that unshare wasn't written with being called without superuser privileges in mind. If you have an idea what could be wrong, the code has a big FIXME about this issue.
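The workaround described above boils down to two mount calls executed inside the new mount and PID namespaces. Here is a rough shell sketch of that order of operations; it is not the code from user-unshare (which is Perl), and the DRYRUN switch as well as the function names are hypothetical additions so that the commands can be printed instead of executed outside such namespaces.

```shell
#!/bin/sh
# Sketch of the /proc workaround: mount a fresh proc on /proc first,
# then bind-mount /proc to the requested location.
# Only meaningful inside freshly unshared mount and PID namespaces (with
# private mount propagation, so nothing leaks to the host). Set DRYRUN=1
# to print the commands instead of running them.
# run and mount_proc_at are hypothetical helpers, not user-unshare code.
run() {
    if [ -n "${DRYRUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

mount_proc_at() {
    target=$1
    # new proc instance that reflects the new PID namespace
    run mount -t proc proc /proc
    # make the same instance visible at the requested location
    run mount --bind /proc "$target"
}
```

Setting DRYRUN=1 before calling mount_proc_at /tmp/buildroot/proc prints the two mount commands instead of running them.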

Finally, here is a demonstration of what my script can do. Because of the /proc bug, lxc-usernsexec and unshare together are not able to do this, but it might also be that I'm just not using these tools in the right way. The following will give you an interactive shell in an environment created from one of my sbuild chroot tarballs:

$ mkdir -p /tmp/buildroot/proc
$ ./user-unshare --mount-proc=/tmp/buildroot/proc --ipc --pid --net \
    --uts --mount --fork -- sh -c 'ip link set lo up && ip addr && \
    hostname hoothoot-chroot && \
    tar -C /tmp/buildroot -xf /srv/chroot/unstable-amd64.tar.gz; \
    /usr/sbin/chroot /tmp/buildroot /sbin/runuser -s /bin/bash - josch && \
    umount /tmp/buildroot/proc && rm -rf /tmp/buildroot'
(unstable-amd64-sbuild)josch@hoothoot-chroot:/$ whoami
josch
(unstable-amd64-sbuild)josch@hoothoot-chroot:/$ hostname
hoothoot-chroot
(unstable-amd64-sbuild)josch@hoothoot-chroot:/$ ls -lha /proc | head
total 0
dr-xr-xr-x 218 nobody nogroup 0 Oct 25 19:06 .
drwxr-xr-x 22 root root 440 Oct 1 08:42 ..
dr-xr-xr-x 9 root root 0 Oct 25 19:06 1
dr-xr-xr-x 9 josch josch 0 Oct 25 19:06 15
dr-xr-xr-x 9 josch josch 0 Oct 25 19:06 16
dr-xr-xr-x 9 root root 0 Oct 25 19:06 7
dr-xr-xr-x 9 josch josch 0 Oct 25 19:06 8
dr-xr-xr-x 4 nobody nogroup 0 Oct 25 19:06 acpi
dr-xr-xr-x 6 nobody nogroup 0 Oct 25 19:06 asound

Of course, instead of running this long command, we can also write a small shell script (called chroot.sh here) and execute that. The following does the same things as the long command above but adds some comments for further explanation:

#!/bin/sh
set -exu

# I'm using /tmp because I have it mounted as a tmpfs
rootdir="/tmp/buildroot"

# bring the loopback interface up
ip link set lo up
# show that the loopback interface is really up
ip addr

# make use of the UTS namespace being unshared
hostname hoothoot-chroot

# extract the chroot tarball. This must be done inside the user namespace for
# the file permissions to be correct.
#
# tar will fail to call mknod and to change the permissions of /proc, but we
# ignore that (otherwise "set -e" would abort the script here)
tar -C "$rootdir" -xf /srv/chroot/unstable-amd64.tar.gz || true

# run chroot and inside, immediately drop privileges to the user "josch" and
# start an interactive shell
/usr/sbin/chroot "$rootdir" /sbin/runuser -s /bin/bash - josch

# unmount /proc and remove the temporary directory
umount "$rootdir/proc"
rm -rf "$rootdir"

and then:

$ mkdir -p /tmp/buildroot/proc
$ ./user-unshare --mount-proc=/tmp/buildroot/proc --ipc --pid --net --uts --mount --fork -- ./chroot.sh
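To verify from inside the resulting environment that the network namespace really only contains the loopback interface (which is what the ip addr call in the script is meant to show), one can also look at /proc/net/dev, which (via /proc/self/net) describes the network namespace of the reading process. A minimal shell sketch; list_ifaces and only_loopback are hypothetical helper names:

```shell
#!/bin/sh
# List the network interfaces visible in the current network namespace by
# parsing /proc/net/dev (two header lines, then one "name: counters" line
# per interface). list_ifaces and only_loopback are hypothetical helpers.
list_ifaces() {
    dev_file=${1:-/proc/net/dev}
    # skip the two header lines, keep the text before the first colon,
    # and strip the padding spaces in front of the interface name
    tail -n +3 "$dev_file" | cut -d: -f1 | tr -d ' '
}

# succeeds only if "lo" is the one and only interface
only_loopback() {
    [ "$(list_ifaces "${1:-}")" = lo ]
}
```

Inside the chroot, something like only_loopback && echo "no network" would then confirm that the build cannot reach any remote resources.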

As mentioned in the beginning, the tool is nearly equivalent to calling lxc-usernsexec [opts] -- unshare [opts] -- COMMAND, but because of the problem with mounting proc (mentioned earlier), lxc-usernsexec and unshare cannot be used with the above example. If one tries anyway, one will only get:

$ lxc-usernsexec -m b:0:1000:1 -m b:1:558752:1 -- unshare --mount-proc=/tmp/buildroot/proc --ipc --pid --net --uts --mount --fork -- ./chroot.sh
unshare: mount /tmp/buildroot/proc failed: Invalid argument

I'd be interested in finding out why that is and how to fix it.

Contributing to Debian is part of Freexian's mission. This article covers the latest achievements of Freexian and their collaborators. All of this is made possible by organizations subscribing to our Long Term Support contracts and consulting services.


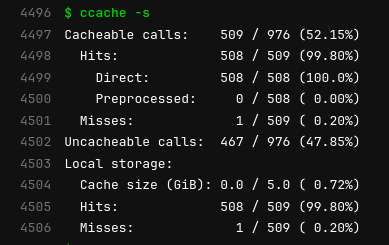

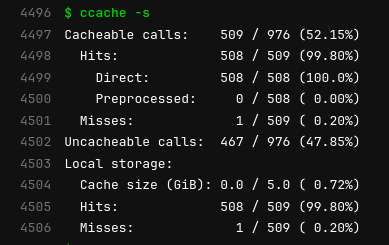

The image here comes from an example of building

The image here comes from an example of building  The image here comes from an example of building

The image here comes from an example of building

The owncloud package was

The owncloud package was  What happened in the

What happened in the

Thanks to akira for the confetti to celebrate the occasion!

Thanks to akira for the confetti to celebrate the occasion!