Following up on yesterday's article about how NNCP rehabilitates asynchronous communication with modern encryption and onion routing, here is the first of my posts showing how to put it into action.

Email is a natural fit for async; in fact, much of early email was carried by UUCP. It is useful for an airgapped machine to be able to send back messages: errors from cron, results of handling incoming data, disk space alerts, etc. (Of course, this would apply to a non-airgapped machine also.)

The NNCP documentation already describes how to do this for Postfix. Here I will show how to do it for Exim.

A quick detour to UUCP land

When you encounter a system such as email that has instructions for doing something via UUCP, take that as a sign that the same information is very relevant for doing the same thing via NNCP. The syntax is different, but broadly, here's a table of similar NNCP commands:

| Purpose | UUCP | NNCP |
|---|---|---|
| Connect to remote system | uucico -s, uupoll | nncp-call, nncp-caller |
| Receive connection (pipe, daemon, etc) | uucico (-l or similar) | nncp-daemon |
| Request remote execution, stdin piped in | uux | nncp-exec |
| Copy file to remote machine | uucp | nncp-file |
| Copy file from remote machine | uucp | nncp-freq |
| Process received requests | uuxqt | nncp-toss |
| Move outbound requests to dir (for USB stick, airgap, etc) | N/A | nncp-xfer |
| Create streaming package of outbound requests | N/A | nncp-bundle |

If you used UUCP back in the day, you surely remember bang paths. I will not be using those here. NNCP handles routing itself, rather than making the MTA be aware of the network topology, so this simplifies things considerably.

Sending from Exim to a smarthost

One common use for async email is from a satellite system: one that doesn't receive mail, or have local mailboxes, but just needs to get email out to the Internet. This is a common situation even for conventionally-connected systems; in Exim speak, this is a satellite system that routes mail via a smarthost. That is, every outbound message goes to a specific target, which then is responsible for eventual delivery (over the Internet, LAN, whatever).

This is fairly simple in Exim.

We actually have two choices for how to do this: bsmtp or rmail mode. bsmtp (batch SMTP) is the more modern way, and is essentially a derivative of SMTP that can explicitly be queued asynchronously. Basically, it's a set of SMTP commands that can be saved in a file. The alternative is rmail (which is just an alias for sendmail these days), where the data is piped to rmail/sendmail with the recipients given on the command line. Both can work with Exim and NNCP, but because we're doing shiny new things, we'll use bsmtp.
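To make "a set of SMTP commands saved in a file" concrete, here is a minimal Python sketch that renders messages as a batch. The function name `build_bsmtp` and the example addresses are hypothetical, and the HELO/QUIT framing is my assumption about a typical batch; Exim generates its own batches when `use_bsmtp` is set, so this is purely illustrative.

```python
from typing import Iterable, List, Tuple


def build_bsmtp(helo: str, messages: Iterable[Tuple[str, List[str], str]]) -> str:
    """Render (sender, recipients, message_text) tuples as one BSMTP batch."""
    lines = [f"HELO {helo}"]
    for sender, recipients, text in messages:
        lines.append(f"MAIL FROM:<{sender}>")
        lines.extend(f"RCPT TO:<{rcpt}>" for rcpt in recipients)
        lines.append("DATA")
        for line in text.splitlines():
            # Dot-stuffing: double a leading "." so it is not read as end-of-data.
            lines.append("." + line if line.startswith(".") else line)
        lines.append(".")  # end of this message's data
    lines.append("QUIT")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_bsmtp(
        "airgap.example",
        [("cron@airgap.example", ["admin@example.org"],
          "Subject: disk space alert\n\n/var is 95% full")],
    ), end="")
```

Because the whole conversation is just text with no replies expected, it can sit in a queue, travel on a USB stick, and be replayed on the far side whenever it arrives.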

These instructions are loosely based on the Using outgoing BSMTP with Exim HOWTO. Some of these may assume Debianness in the configuration, but should be easily enough extrapolated to other configs as well.

First, configure Exim to use satellite mode with minimal DNS lookups (assuming that you may not have working DNS anyhow).

Then, in the Exim primary router section for smarthost (router/200_exim4-config_primary in Debian split configurations), just change transport = remote_smtp_smarthost to transport = nncp.

Now, define the NNCP transport. If you are on Debian, you might name this transports/40_exim4-config_local_nncp:

    nncp:
      debug_print = "T: nncp transport for $local_part@$domain"
      driver = pipe
      user = nncp
      batch_max = 100
      use_bsmtp
      command = /usr/local/nncp/bin/nncp-exec -noprogress -quiet hostname_goes_here rsmtp
    .ifdef REMOTE_SMTP_HEADERS_REWRITE
      headers_rewrite = REMOTE_SMTP_HEADERS_REWRITE
    .endif
    .ifdef REMOTE_SMTP_RETURN_PATH
      return_path = REMOTE_SMTP_RETURN_PATH
    .endif

This is pretty straightforward. We pipe to nncp-exec, running it as the nncp user. nncp-exec sends the data to a target node and asks it to run whatever that node has defined as rsmtp (the command to receive bsmtp data). When the target node processes the request, it will run the configured command and pipe the data into it.
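For illustration only, here is roughly what the pipe transport amounts to, written as a small Python sketch. The helper names `nncp_exec_argv` and `queue_batch` are hypothetical; the binary path and flags are copied from the transport definition, and the node name is whatever you would put in place of hostname_goes_here.

```python
import subprocess
from typing import List

# Path and flags copied from the Exim transport definition; adjust for your install.
NNCP_EXEC = "/usr/local/nncp/bin/nncp-exec"


def nncp_exec_argv(node: str, handle: str = "rsmtp") -> List[str]:
    """Build the nncp-exec command line for a target node and exec handle."""
    return [NNCP_EXEC, "-noprogress", "-quiet", node, handle]


def queue_batch(node: str, batch: bytes) -> None:
    """Pipe a BSMTP batch into nncp-exec on stdin, as the pipe transport does.

    Raises subprocess.CalledProcessError if nncp-exec exits non-zero.
    """
    subprocess.run(nncp_exec_argv(node), input=batch, check=True)
```

Nothing is delivered at this point: nncp-exec only queues an encrypted packet, and the command actually runs later, when the target node processes what it has received.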

More complicated: Routing to various NNCP nodes

Perhaps you would like to be able to send mail directly to various NNCP nodes. There are a lot of ways to do that.

Fundamentally, you will need a setup similar to the UUCP example in Exim's manualroute manual, which lets you define how to reach various hosts via UUCP/NNCP. Perhaps you have a star topology (every NNCP node exchanges email with a central hub). In the NNCP world, you have two choices for how to do this. You could, at the Exim level, make the central hub the smarthost for all the side nodes, and let it redistribute mail. That would work, but requires decrypting messages at the hub so that Exim can process them. The alternative is to configure NNCP to just send to the destinations via the central hub; that takes advantage of onion routing and doesn't require any Exim processing at the central hub at all.

Receiving mail from NNCP

On the receiving side, first you need to configure NNCP to authorize the execution of a mail program. In the section of your receiving host where you set the permissions for the client, include something like this:

    exec:
      rsmtp: ["/usr/sbin/sendmail", "-bS"]

The -bS option is what tells Exim to receive BSMTP on stdin.

Now, you need to tell Exim that nncp is a trusted user (able to set From headers arbitrarily). Assuming you are running NNCP as the nncp user, then add MAIN_TRUSTED_USERS = nncp to a file such as /etc/exim4/conf.d/main/01_exim4-config_local-nncp. That's it!

Some hosts, of course, both send and receive mail via NNCP and will need configurations for both.

Because

Because

In a project I recently worked on we wanted to provide a

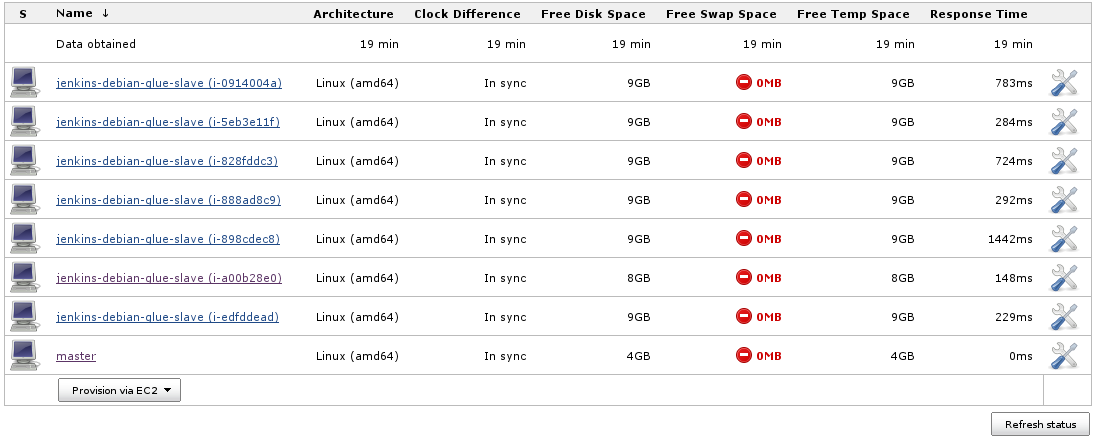

In a project I recently worked on we wanted to provide a  As you can see the configuration also includes a launch script. This script ensures that slaves are set up as needed (provide all the packages and scripts that are required for building) and always get the latest configuration and scripts before starting to serve as Jenkins slave.

Now your setup should be ready for launching Jenkins slaves as needed:

As you can see the configuration also includes a launch script. This script ensures that slaves are set up as needed (provide all the packages and scripts that are required for building) and always get the latest configuration and scripts before starting to serve as Jenkins slave.

Now your setup should be ready for launching Jenkins slaves as needed:

NOTE: you can use the Instance Cap configuration inside the advanced Amazon EC2 Jenkins configuration section to place an upward limit to the number of EC2 instances that Jenkins may launch. This can be useful for avoiding surprises in your AWS invoices.

NOTE: you can use the Instance Cap configuration inside the advanced Amazon EC2 Jenkins configuration section to place an upward limit to the number of EC2 instances that Jenkins may launch. This can be useful for avoiding surprises in your AWS invoices.