I won't reveal precisely how many books I read in 2020, but it was definitely an improvement on

74 in 2019,

53 in 2018 and

50 in 2017. But not only did I read more in a quantitative sense, the

quality seemed higher as well. There were certainly fewer disappointments: given its cultural resonance, I was nonplussed by Nick Hornby's

Fever Pitch and whilst Ian Fleming's

The Man with the Golden Gun was a little thin (again, given the obvious influence of the Bond franchise) the booked lacked 'thinness' in a way that made it interesting to critique. The weakest novel I read this year was probably J. M. Berger's

Optimal, but even this hybrid of

Ready Player One late-period

Black Mirror wasn't that cringeworthy, all things considered. Alas, graphic novels continue to not quite be my thing, I'm afraid.

I perhaps experienced more disappointments in the non-fiction section. Paul Bloom's

Against Empathy was frustrating, particularly in that it expended unnecessary energy battling its misleading title and accepted terminology, and it could so easily have been an

20-minute video essay instead). (Elsewhere in the social sciences,

David and Goliath will likely be the last Malcolm Gladwell book I voluntarily read.) After so many positive citations, I was also more than a little underwhelmed by Shoshana Zuboff's

The Age of Surveillance Capitalism, and after Ryan Holiday's

many engaging reboots of Stoic philosophy, his

Conspiracy (on

Peter Thiel and Hulk Hogan taking on Gawker) was slightly wide of the mark for me.

Anyway, here follows a selection of my favourites from 2020, in no particular order:

Fiction

Wolf Hall &

Bring Up the Bodies &

The Mirror and the Light

Hilary Mantel

During the early weeks of 2020, I re-read the first two parts of Hilary Mantel's

Thomas Cromwell trilogy in time for the March release of

The Mirror and the Light. I had actually spent the last few years eagerly following any news of the final instalment, feigning outrage whenever Mantel appeared to be spending time on other projects.

Wolf Hall turned out to be an even better book than I remembered, and when

The Mirror and the Light finally landed at midnight on 5th March, I began in earnest the next morning. Note that date carefully; this was early 2020, and the book swiftly became something of a heavy-handed allegory about the world at the time. That is to say and without claiming that I am

Monsieur Cromuel in any meaningful sense it was an uneasy experience to be reading about a man whose confident grasp on his world, friends and life was slipping beyond his control, and at least in Cromwell's case, was heading inexorably towards its denouement.

The final instalment in Mantel's trilogy is not perfect, and despite my love of her writing I would concur with the judges who decided against awarding her a third Booker Prize. For instance, there is something of the longueur that readers dislike in the second novel, although this might not be entirely Mantel's fault after all, the rise of the "ugly" Anne of Cleves and laborious trade negotiations for an uninspiring mineral (this is no Herbertian '

spice') will never match the court intrigues of Anne Boleyn, Jane Seymour and that

man for all seasons, Thomas More. Still, I am already looking forward to returning to the verbal sparring between King Henry and Cromwell when I read the entire trilogy once again, tentatively planned for 2022.

The Fault in Our Stars

John Green

I came across John Green's

The Fault in Our Stars via a

fantastic video by Lindsay Ellis discussing

Roland Barthes famous 1967

essay on authorial intent. However, I might have eventually come across

The Fault in Our Stars regardless, not because of Green's status as an internet celebrity of sorts but because I'm a complete sucker for this kind of emotionally-manipulative

bildungsroman, likely due to reading Philip Pullman's

His Dark Materials a few too many times in my teens.

Although its title is taken from Shakespeare's

Julius Caesar,

The Fault in Our Stars is actually more

Romeo & Juliet. Hazel, a 16-year-old cancer patient falls in love with Gus, an equally ill teen from her cancer support group. Hazel and Gus share the same acerbic (and distinctly unteenage) wit and a love of books, centred around Hazel's obsession of

An Imperial Affliction, a novel by the meta-fictional author Peter Van Houten. Through a kind of American version of

Jim'll Fix It, Gus and Hazel go and visit Van Houten in Amsterdam.

I'm afraid it's even cheesier than I'm describing it. Yet just as there is a time and a place for Michelin stars and Haribo Starmix, there's surely a place for this kind of well-constructed but altogether maudlin literature. One test for emotionally manipulative works like this is how well it can mask its internal contradictions while Green's story focuses on the universalities of love, fate and the shortness of life (as do almost all of his works, it seems),

The Fault in Our Stars manages to hide, for example, that this is an exceedingly favourable treatment of terminal illness that is only possible for the better off. The

2014 film adaptation does somewhat worse in peddling this fantasy (and has a much weaker treatment of the relationship between the teens' parents too, an underappreciated subtlety of the book).

The novel, however, is pretty slick stuff, and it is difficult to fault it for what it is. For some comparison, I later read Green's

Looking for Alaska and

Paper Towns which, as I mention, tug at many of the same strings, but they don't come together nearly as well as

The Fault in Our Stars. James Joyce claimed that "sentimentality is unearned emotion", and in this respect,

The Fault in Our Stars really does earn it.

The Plague

Albert Camus

P. D. James'

The Children of Men, George Orwell's

Nineteen Eighty-Four, Arthur Koestler's

Darkness at Noon ... dystopian fiction was already a theme of my reading in 2020, so given world events it was an inevitability that I would end up with Camus's novel about a plague that swept through the Algerian city of

Oran.

Is

The Plague an allegory about the Nazi occupation of France during World War Two? Where are all the female characters? Where are the Arab ones? Since its original publication in 1947, there's been so much written about

The Plague that it's hard to say anything new today. Nevertheless, I was taken aback by how well it captured so much of the nuance of 2020. Whilst we were saying just how 'unprecedented' these times were, it was eerie how a novel written in the 1940s could accurately how many of us were feeling well over seventy years on later: the attitudes of the people; the confident declarations from the institutions; the misaligned conversations that led to accidental misunderstandings. The disconnected lovers.

The only thing that perhaps did not work for me in

The Plague was the 'character' of the church. Although I could appreciate most of the allusion and metaphor, it was difficult for me to relate to the significance of Father Paneloux, particularly regarding his change of view on the doctrinal implications of the virus, and spoiler alert that he finally died of a "doubtful case" of the disease, beyond the idea that Paneloux's beliefs are in themselves "doubtful". Answers on a postcard, perhaps.

The Plague even seemed to predict how we, at least speaking of the UK, would react when the waves of the virus waxed and waned as well:

The disease stiffened and carried off three or four patients who were expected to recover. These were the unfortunates of the plague, those whom it killed when hope was high

It somehow captured the nostalgic yearning for

high-definition videos of cities and public transport; one character even visits the completely deserted railway station in Oman simply to read the timetables on the wall.

Tinker, Tailor, Soldier, Spy

John le Carr

There's absolutely none of the

Mad Men glamour of James Bond in John le Carr 's icy world of Cold War spies:

Small, podgy, and at best middle-aged, Smiley was by appearance one of London's meek who do not inherit the earth. His legs were short, his gait anything but agile, his dress costly, ill-fitting, and extremely wet.

Almost a direct rebuttal to Ian Fleming's 007,

Tinker, Tailor has broken-down cars, bad clothes, women with their own internal and external lives (!), pathetically primitive gadgets, and (contra

Mad Men) hangovers that significantly longer than ten minutes. In fact, the main aspect that the

mostly excellent 2011 film adaption doesn't really capture is the smoggy and run-down nature of 1970s London this is not your proto-

Cool Britannia of

Austin Powers or

GTA:1969, the city is truly 'gritty' in the sense there is a thin film of dirt and grime on every surface imaginable.

Another angle that the film cannot capture well is just how purposefully the novel does

not mention the United States. Despite the US obviously being the dominant power, the British vacillate between pretending it doesn't exist or implying its irrelevance to the matter at hand. This is no mistake on Le Carr 's part, as careful readers are rewarded by finding this denial of US hegemony in metaphor throughout --

pace Ian Fleming, there is no obvious

Felix Leiter to loudly throw money at the problem or a

Sheriff Pepper to serve as cartoon racist for the Brits to feel superior about. By contrast, I recall that a clever allusion to "

dusty teabags" is subtly mirrored a few paragraphs later with a reference to the installation of a coffee machine in the office, likely symbolic of the omnipresent and unavoidable influence of America. (The officer class convince themselves that coffee is a European import.) Indeed, Le Carr communicates a feeling of being surrounded on all sides by the peeling wallpaper of Empire.

Oftentimes, the writing style matches the graceless and inelegance of the world it depicts. The sentences are dense and you find your brain performing a fair amount of mid-flight sentence reconstruction, reparsing clauses, commas and conjunctions to interpret Le Carr 's intended meaning. In fact, in his

eulogy-cum-analysis of Le Carr 's writing style, William Boyd, himself a

ventrioquilist of Ian Fleming, named this intentional technique 'staccato'. Like the musical term, I suspect the effect of this literary staccato is as much about the impact it makes on a sentence as the imperceptible space it generates after it.

Lastly, the large cast in this sprawling novel is completely believable, all the way from the Russian spymaster Karla to minor schoolboy Roach the latter possibly a stand-in for Le Carr himself. I got through the 500-odd pages in just a few days, somehow managing to hold the almost-absurdly complicated plot in my head. This is one of those classic books of the genre that made me wonder why I had not got around to it before.

The Nickel Boys

Colson Whitehead

According to the judges who awarded it the Pulitzer Prize for Fiction,

The Nickel Boys is "a devastating exploration of abuse at a reform school in Jim Crow-era Florida" that serves as a "powerful tale of human perseverance, dignity and redemption". But whilst there is plenty of this perseverance and dignity on display, I found little redemption in this deeply cynical novel.

It could almost be read as a follow-up book to Whitehead's popular

The Underground Railroad, which itself won the Pulitzer Prize in 2017. Indeed, each book focuses on a young protagonist who might be euphemistically referred to as 'downtrodden'. But

The Nickel Boys is not only far darker in tone, it feels much closer and more connected to us today. Perhaps this is unsurprising, given that it is based on the story of the

Dozier School in northern Florida which operated for over a century before its long history of institutional abuse and racism was exposed a 2012 investigation. Nevertheless, if you liked the social commentary in

The Underground Railroad, then there is much more of that in

The Nickel Boys:

Perhaps his life might have veered elsewhere if the US government had opened the country to colored advancement like they opened the army. But it was one thing to allow someone to kill for you and another to let him live next door.

Sardonic aper us of this kind are pretty relentless throughout the book, but it never tips its hand too far into on nihilism, especially when some of the visual metaphors are often first-rate: "An American flag sighed on a pole" is one I can easily recall from memory. In general though,

The Nickel Boys is not only more world-weary in tenor than his previous novel, the United States it describes seems almost too beaten down to have the energy conjure up the

Swiftian magical realism that prevented

The Underground Railroad from being overly lachrymose. Indeed, even we Whitehead transports us a present-day New York City, we can't indulge in another kind of fantasy, the one where America has solved its problems:

The Daily News review described the [Manhattan restaurant] as nouveau Southern, "down-home plates with a twist." What was the twist that it was soul food made by white people?

It might be overly reductionist to connect Whitehead's tonal downshift with the racial justice movements of the past few years, but whatever the reason, we've ended up with a hard-hitting, crushing and frankly excellent book.

True Grit &

No Country for Old Men

Charles Portis &

Cormac McCarthy

It's one of the most tedious cliches to claim the book is better than the film, but these two books are of such high quality that even the Coen Brothers at their best cannot transcend them. I'm grouping these books together here though, not because their respective adaptations will exemplify some of the best cinema of the 21st century, but because of their superb treatment of language.

Take the use of dialogue. Cormac McCarthy famously

does not use any punctuation "I believe in periods, in capitals, in the occasional comma, and that's it" but the conversations in

No Country for Old Men together feel familiar and commonplace, despite being relayed through this unconventional technique. In lesser hands, McCarthy's written-out Texan drawl would be the novelistic equivalent of white rap or Jar Jar Binks, but not only is the effect entirely gripping, it helps you to believe you are physically present in the many intimate and domestic conversations that hold this book together. Perhaps the cinematic familiarity helps, as you can almost hear Tommy Lee Jones' voice as Sheriff Bell from the opening page to the last.

Charles Portis'

True Grit excels in its dialogue too, but in this book it is not so much in how it flows (although that is delightful in its own way) but in how forthright and sardonic Maddie Ross is:

"Earlier tonight I gave some thought to stealing a kiss from you, though you are very young, and sick and unattractive to boot, but now I am of a mind to give you five or six good licks with my belt."

"One would be as unpleasant as the other."

Perhaps this should be unsurprising. Maddie, a fourteen-year-old girl from Yell County, Arkansas, can barely fire her father's heavy pistol, so she can only has words to wield as her weapon. Anyway, it's not just me who treasures this book. In her

encomium that presages most modern editions, Donna Tartt of

The Secret History fame traces the novels origins through

Huckleberry Finn, praising its elegance and economy: "The plot of

True Grit is uncomplicated and as pure in its way as one of the

Canterbury Tales". I've read any Chaucer, but I am inclined to agree.

Tartt also recalls that

True Grit vanished almost entirely from the public eye after the release of

John Wayne's flimsy cinematic vehicle in 1969 this earlier film was, Tartt believes, "good enough, but doesn't do the book justice". As it happens, reading a book with its big screen adaptation as a chaser has been a minor theme of my 2020, including P. D. James'

The Children of Men, Kazuo Ishiguro's

Never Let Me Go, Patricia Highsmith's

Strangers on a Train, James Ellroy's

The Black Dahlia, John Green's

The Fault in Our Stars, John le Carr 's

Tinker, Tailor Soldier, Spy and even a staged production of

Charles Dicken's A Christmas Carol streamed from The Old Vic. For an autodidact with no academic background in literature or cinema, I've been finding this an effective and enjoyable means of getting closer to these fine books and films it is precisely where they deviate (or

perhaps where they are deficient) that offers a means by which one can see how they were constructed. I've also found that adaptations can also tell you a lot about the culture in which they were made: take the 'straightwashing' in the film version of

Strangers on a Train (1951) compared to the original novel, for example. It is certainly true that adaptions rarely (as Tartt put it) "do the book justice", but she might be also right to alight on a legal metaphor, for as the saying goes, to judge a movie in comparison to the book is to do both a disservice.

The Glass Hotel

Emily St. John Mandel

In

The Glass Hotel, Mandel somehow pulls off the impossible; writing a loose

roman- -clef on Bernie Madoff, a Ponzi scheme and the ephemeral nature of finance capital that is tranquil and shimmeringly beautiful. Indeed, don't get the wrong idea about the subject matter; this is no over over-caffeinated

The Big Short, as

The Glass Hotel is less about a Madoff or coked-up financebros but the fragile unreality of the late 2010s, a time which was, as we indeed discovered in 2020, one event away from almost shattering completely.

Mandel's prose has that translucent, phantom quality to it where the chapters slip through your fingers when you try to grasp at them, and the plot is like a ghost ship that that slips silently, like the Mary Celeste, onto

the Canadian water next to which the eponymous 'Glass Hotel' resides. Indeed, not unlike

The Overlook Hotel, the novel so overflows with symbolism so that even the title needs to evoke the idea of impermanence permanently living in a hotel might serve as a house, but it won't provide a home. It's risky to generalise about such things post-2016, but the whole story sits in that the infinitesimally small distance between perception and reality, a self-constructed culture that is not so much 'post truth' but between them.

There's something to consider in almost every character too. Take the stand-in for Bernie Madoff: no caricature of Wall Street out of a 1920s political cartoon or Brechtian satire, Jonathan Alkaitis has none of the oleaginous sleaze of a Dominic Strauss-Kahn, the cold sociopathy of a

Marcus Halberstam nor the well-exercised sinuses of, say,

Jordan Belford. Alkaitis is dare I say it? eminently likeable, and the book is all the better for it. Even the C-level characters have something to say: Enrico, trivially escaping from the regulators (who are pathetically late to the fraud without Mandel ever telling us explicitly), is daydreaming about the girlfriend he abandoned in New York: "He wished he'd realised he loved her before he left". What was in his previous life that prevented him from doing so? Perhaps he was never in love at all, or is love itself just as transient as the imaginary money in all those bank accounts? Maybe he fell in love just as he crossed safely into Mexico? When, precisely, do we fall in love anyway?

I went on to read Mandel's

Last Night in Montreal, an early work where you can feel her reaching for that other-worldly quality that she so masterfully achieves in

The Glass Hotel. Her f ted

Station Eleven is on my must-read list for 2021. "What is truth?" asked Pontius Pilate. Not even Mandel cannot give us the answer, but this will certainly do for now.

Running the Light

Sam Tallent

Although it trades in all of the clich s and stereotypes of the stand-up comedian (the triumvirate of drink, drugs and divorce), Sam Tallent's debut novel depicts an extremely convincing fictional account of a touring road comic.

The comedian Doug Stanhope (who himself released a fairly decent

No Encore for the Donkey memoir in 2020) hyped Sam's book relentlessly on his podcast during lockdown... and justifiably so. I ripped through

Running the Light in a few short hours, the only disappointment being that I can't seem to find videos online of Sam that come anywhere close to match up to his writing style. If you liked the rollercoaster energy of Paul Beatty's

The Sellout, the cynicism of George Carlin and the car-crash invertibility of final season

Breaking Bad, check this great book out.

Non-fiction

Inside Story

Martin Amis

This was my first introduction to Martin Amis's work after hearing that his "novelised autobiography" contained a fair amount about Christopher Hitchens, an author with whom I had a one of those rather clich d parasocial relationship with in the early days of YouTube. (Hey, it

could have been much worse.) Amis calls his book a "novelised autobiography", and just as much has been made of its quasi-fictional nature as the many diversions into didactic writing advice that betwixt each chapter: "Not content with being a novel, this book also wants to tell you how to write novels", complained

Tim Adams in The Guardian.

I suspect that reviewers who grew up with Martin since his debut book in 1973 rolled their eyes at yet another demonstration of his manifest cleverness, but as my first exposure to Amis's gift of observation, I confess that I was thought it was actually kinda clever. Try, for example, "it remains a maddening truth that both sexual success and sexual failure are steeply self-perpetuating" or "a hospital gym is a contradiction like a young Conservative", etc. Then again, perhaps I was experiencing a form of nostalgia for a pre-

Gamergate YouTube, when everything in the world was a lot simpler... or at least things could be solved by articulate gentlemen who honed their art of rhetoric at the Oxford Union.

I went on to read Martin's first novel,

The Rachel Papers (is it 'arrogance' if you are, indeed, that confident?), as well as his 1997

Night Train. I plan to read more of him in the future.

The Collected Essays, Journalism and Letters:

Volume 1 &

Volume 2 &

Volume 3 &

Volume 4

George Orwell

These deceptively bulky four volumes contain all of George Orwell's essays, reviews and correspondence, from his teenage letters sent to local newspapers to notes to his literary executor on his deathbed in 1950. Reading this was part of a larger, multi-year project of mine to cover the entirety of his output.

By including this here, however, I'm not recommending that you read everything that came out of Orwell's typewriter. The letters to friends and publishers will only be interesting to biographers or hardcore fans (although I would recommend Dorian Lynskey's

The Ministry of Truth: A Biography of George Orwell's 1984 first). Furthermore, many of his book reviews will be of little interest today. Still, some insights can be gleaned; if there is any inconsistency in this huge corpus is that his best work is almost 'too' good and

too impactful, making his merely-average writing appear like hackwork. There are some gems that don't make the usual essay collections too, and some of Orwell's most astute social commentary came out of

series of articles he wrote for the left-leaning newspaper

Tribune, related in many ways to the US

Jacobin. You can also see some of his most famous ideas start to take shape years if not decades before they appear in his novels in these prototype blog posts.

I also read Dennis Glover's novelised account of the writing of

Nineteen-Eighty Four called

The Last Man in Europe, and I plan to re-read some of Orwell's earlier novels during 2021 too, including

A Clergyman's Daughter and his 'antebellum'

Coming Up for Air that he wrote just before the Second World War; his most

under-rated novel in my estimation. As it happens, and with the exception of the US and Spain, copyright in the works published in his lifetime ends on 1st January 2021. Make of that what you will.

Capitalist Realism &

Chavs: The Demonisation of the Working Class

Mark Fisher &

Owen Jones

These two books are not natural companions to one another and there is likely much that Jones and Fisher would vehemently disagree on, but I am pairing these books together here because they represent the best of the 'political' books I read in 2020.

Mark Fisher was a dedicated leftist whose first book,

Capitalist Realism, marked an important contribution to political philosophy in the UK. However, since his suicide in early 2017, the currency of his writing has markedly risen, and Fisher is now frequently referenced due to his belief that the prevalence of mental health conditions in modern life is a side-effect of various material conditions, rather than a natural or unalterable fact "like weather". (Of course, our 'weather' is being increasingly determined by a combination of politics, economics and petrochemistry than pure randomness.) Still, Fisher wrote on all manner of topics, from

the 2012 London Olympics and "weird and eerie" electronic music that

yearns for a lost future that will never arrive, possibly prefiguring or influencing the

Fallout video game series.

Saying that, I suspect Fisher will resonate better with a UK audience more than one across the Atlantic, not necessarily because he was minded to write about the parochial politics and culture of Britain, but because his writing often carries some exasperation at the suppression of class in favour of identity-oriented politics, a viewpoint not entirely prevalent in the United States outside of, say, Tour F. Reed or the late Michael Brooks. (Indeed, Fisher is likely best known in the US as the author of his controversial 2013 essay,

Exiting the Vampire Castle, but that does not figure greatly in this book). Regardless,

Capitalist Realism is an insightful, damning and deeply unoptimistic book, best enjoyed in the warm sunshine I found it an ironic compliment that I had quoted so many paragraphs that my Kindle's copy protection routines prevented me from clipping any further.

Owen Jones needs no introduction to anyone who regularly reads a British newspaper, especially since 2015 where he unofficially served as a proxy and punching bag for expressing frustrations with the then-Labour leader, Jeremy Corbyn. However, as the subtitle of Jones' 2012 book suggests,

Chavs attempts to reveal the "demonisation of the working class" in post-financial crisis Britain. Indeed, the timing of the book is central to Jones' analysis, specifically that the stereotype of the "chav" is used by government and the media as a convenient figleaf to avoid meaningful engagement with economic and social problems on an austerity ridden island. (I'm not quite sure what the US equivalent to 'chav' might be. Perhaps

Florida Man without the implications of mental health.)

Anyway, Jones certainly has a point. From

Vicky Pollard to the attacks on

Jade Goody, there is an ignorance and prejudice at the heart of the 'chav' backlash, and that would be bad enough even if it was not being co-opted or criminalised for ideological ends.

Elsewhere in political science, I also caught Michael Brooks'

Against the Web and David Graeber's

Bullshit Jobs, although they are not quite methodical enough to recommend here. However, Graeber's award-winning

Debt: The First 5000 Years will be read in 2021. Matt Taibbi's

Hate Inc: Why Today's Media Makes Us Despise One Another is worth a brief mention here though, but its sprawling nature felt very much like I was reading a set of

Substack articles loosely edited together. And, indeed, I was.

The Golden Thread: The Story of Writing

Ewan Clayton

A recommendation from a dear friend, Ewan Clayton's

The Golden Thread is a journey through the long history of the writing from the

Dawn of Man to present day. Whether you are a linguist, a graphic designer, a visual artist, a typographer, an archaeologist or 'just' a reader, there is probably something in here for you. I was already dipping my quill into calligraphy this year so I suspect I would have liked this book in any case, but highlights would definitely include the changing role of writing due to the influence of textual forms in the workplace as well as digression on ergonomic desks employed by monks and scribes in the Middle Ages.

A lot of books by otherwise-sensible authors overstretch themselves when they write about computers or other technology from the Information Age, at best resulting in bizarre non-sequiturs and dangerously

Panglossian viewpoints at worst. But Clayton surprised me by writing extremely cogently and accurate on the role of text in this new and unpredictable era. After finishing it I realised why for a number of years, Clayton was a consultant for the legendary

Xerox PARC where he worked in a group focusing on documents and contemporary communications whilst his colleagues were busy inventing the graphical user interface, laser printing, text editors and the computer mouse.

New Dark Age &

Radical Technologies: The Design of Everyday Life

James Bridle &

Adam Greenfield

I struggled to describe these two books to friends, so I doubt I will suddenly do a better job here. Allow me to quote from Will Self's

review of James Bridle's New Dark Age in the Guardian:

We're accustomed to worrying about AI systems being built that will either "go rogue" and attack us, or succeed us in a bizarre evolution of, um, evolution what we didn't reckon on is the sheer inscrutability of these manufactured minds. And minds is not a misnomer. How else should we think about the neural network Google has built so its translator can model the interrelation of all words in all languages, in a kind of three-dimensional "semantic space"?

New Dark Age also turns its attention to the weird, algorithmically-derived products offered for sale on Amazon as well as the disturbing and abusive videos that are automatically uploaded by bots to YouTube. It should, by rights, be a mess of disparate ideas and concerns, but Bridle has a flair for introducing topics which reveals he comes to computer science from another discipline altogether; indeed, on a

four-part series he made for Radio 4, he's primarily referred to as "an artist".

Whilst

New Dark Age has rather abstract section topics, Adam Greenfield's

Radical Technologies is a rather different book altogether. Each chapter dissects one of the so-called 'radical' technologies that condition the choices available to us, asking how do they work, what challenges do they present to us and who ultimately benefits from their adoption. Greenfield takes his scalpel to smartphones, machine learning, cryptocurrencies, artificial intelligence, etc., and I don't think it would be unfair to say that starts and ends with a cynical point of view. He is no reactionary Luddite, though, and this is both informed and extremely well-explained, and it also lacks the lazy, affected and

Private Eye-like cynicism of, say,

Attack of the 50 Foot Blockchain.

The books aren't a natural pair, for Bridle's writing contains quite a bit of air in places, ironically mimics the very 'clouds' he inveighs against. Greenfield's book, by contrast, as little air and

much lower pH value. Still, it was more than refreshing to read two technology books that do not limit themselves to platitudinal booleans, be those dangerously naive (e.g. Kevin Kelly's

The Inevitable) or relentlessly nihilistic (Shoshana Zuboff's

The Age of Surveillance Capitalism). Sure, they are both anti-technology screeds, but they tend to make arguments about systems of power rather than specific companies and avoid being too anti-'Big Tech' through a narrower, Silicon Valley obsessed lens for that (dipping into some other 2020 reading of mine) I might suggest Wendy Liu's

Abolish Silicon Valley or Scott Galloway's

The Four.

Still, both books are superlatively written. In fact, Adam Greenfield has some of the best non-fiction writing around, both in terms of how he can explain complicated concepts (particularly the smart contract mechanism of the Ethereum cryptocurrency) as well as in the extremely finely-crafted sentences I often felt that the writing style almost had no need to be that poetic, and I particularly enjoyed his fictional scenarios at the end of the book.

The Algebra of Happiness &

Indistractable: How to Control Your Attention and Choose Your Life

Scott Galloway &

Nir Eyal

A cocktail of insight, informality and abrasiveness makes NYU Professor

Scott Galloway uncannily appealing to guys around my age. Although Galloway definitely has his own wisdom and experience, similar to Joe Rogan I suspect that a crucial part of Galloway's appeal is that you feel you are learning right alongside him. Thankfully, 'Prof G' is far less err problematic than Rogan (Galloway is more of a well-meaning, spirited centrist), although he, too, has some

pretty awful takes at time. This is a shame, because removed from the whirlwind of social media he can be really quite considered, such as in

this long-form interview with Stephanie Ruhle.

In fact, it is this kind of sentiment that he captured in his 2019

Algebra of Happiness. When I look over my highlighted sections, it's clear that it's rather schmaltzy out of context ("Things you hate become just inconveniences in the presence of people you love..."), but his one-two punch of cynicism and saccharine ("Ask somebody who purchased a home in 2007 if their 'American Dream' came true...") is weirdly effective, especially when he uses his own family experiences as part of his story:

A better proxy for your life isn't your first home, but your last. Where you draw your last breath is more meaningful, as it's a reflection of your success and, more important, the number of people who care about your well-being. Your first house signals the meaningful your future and possibility. Your last home signals the profound the people who love you. Where you die, and who is around you at the end, is a strong signal of your success or failure in life.

Nir Eyal's

Indistractable, however, is a totally different kind of 'self-help' book. The important background story is that Eyal was the author of the widely-read

Hooked which turned into a secular Bible of so-called 'addictive design'. (If you've ever been cornered by a techbro wielding a Wikipedia-thin knowledge of B. F. Skinner's behaviourist psychology and how it can get you to click 'Like' more often, it ultimately came from

Hooked.) However, Eyal's latest effort is actually an extended

mea culpa for his previous sin and he offers both high and low-level palliative advice on how to avoid falling for the tricks he so studiously espoused before. I suppose we should be thankful to capitalism for selling both cause and cure.

Speaking of markets, there appears to be a growing appetite for books in this 'anti-distraction' category, and whilst I cannot claim to have done an exhausting study of this nascent field,

Indistractable argues its points well without relying on accurate-but-dry "studies show..." or, worse,

Gladwellian gotchas. My main criticism, however, would be that Eyal doesn't acknowledge the limits of a self-help approach to this problem; it seems that many of the issues he outlines are an inescapable part of the alienation in modern Western society, and the only way one can really avoid distraction is to move up the income ladder or move

out to a 500-acre ranch.

Very few highly-anticipated movies appear in January and February, as the bigger releases are timed so they can be considered for the Golden Globes in January and the Oscars in late February or early March, so film fans have the advantage of a few weeks after the New Year to collect their thoughts on the year ahead. In other words, I'm not actually late in outlining below the films I'm most looking forward to in 2023...

Very few highly-anticipated movies appear in January and February, as the bigger releases are timed so they can be considered for the Golden Globes in January and the Oscars in late February or early March, so film fans have the advantage of a few weeks after the New Year to collect their thoughts on the year ahead. In other words, I'm not actually late in outlining below the films I'm most looking forward to in 2023...

Desde de setembro de 2015, o

Desde de setembro de 2015, o

Although I still read a lot, during my college sophomore years my reading

habits shifted from novels to more academic works. Indeed, reading dry

textbooks and economic papers for classes often kept me from reading anything

else substantial. Nowadays, I tend to binge read novels: I won't touch a book

for months on end, and suddenly, I'll read 10 novels back to back

Although I still read a lot, during my college sophomore years my reading

habits shifted from novels to more academic works. Indeed, reading dry

textbooks and economic papers for classes often kept me from reading anything

else substantial. Nowadays, I tend to binge read novels: I won't touch a book

for months on end, and suddenly, I'll read 10 novels back to back

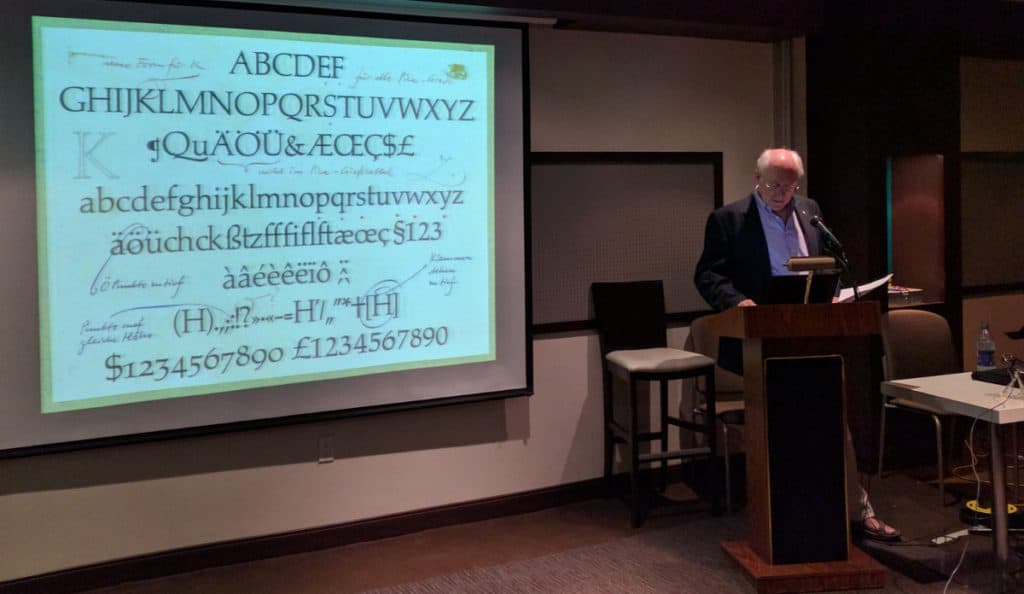

The second day of TUG 2016 was again full of interesting talks spanning from user experiences to highly technical details about astrological chart drawing, and graphical user interfaces to TikZ to the invited talk by Robert Bringhurst on the Palatino family of fonts.

The second day of TUG 2016 was again full of interesting talks spanning from user experiences to highly technical details about astrological chart drawing, and graphical user interfaces to TikZ to the invited talk by Robert Bringhurst on the Palatino family of fonts.

The idea is simply to have one coherent place with pointers to all the stuff we have and provide, without repeating nor replacing other documentation.

The idea is simply to have one coherent place with pointers to all the stuff we have and provide, without repeating nor replacing other documentation. For ages I've been advocating for the dissolution of the tech-ctte,

one of the biggest bugs in the Constitution. However, for the longest

time I was unable to suggest a suitable replacement; there will always

be people whose characters are so flawed that they will appeal to

authority as a matter of first recourse, and those people need some

sort of forum within which to waste time.

Then

For ages I've been advocating for the dissolution of the tech-ctte,

one of the biggest bugs in the Constitution. However, for the longest

time I was unable to suggest a suitable replacement; there will always

be people whose characters are so flawed that they will appeal to

authority as a matter of first recourse, and those people need some

sort of forum within which to waste time.

Then