In this blog post, I will cover how debputy parses its manifest and the conceptual improvements I made to make parsing of the manifest easier.

All instructions to debputy are provided via the debian/debputy.manifest file and said manifest is written in the YAML format. After the YAML parser has read the basic file structure, debputy does another pass over the data to extract the information from the basic structure. As an example, the following YAML file:

    manifest-version: "0.1"
    installations:
      - install:
          source: foo
          dest-dir: usr/bin

would be transformed by the YAML parser into a structure resembling:

    {
        "manifest-version": "0.1",
        "installations": [
            {
                "install": {
                    "source": "foo",
                    "dest-dir": "usr/bin",
                }
            }
        ]
    }

debputy then does a pass over this structure to translate it into an even higher level format, where the "install" part is translated into an InstallRule.

In the original prototype of debputy, I would hand-write functions to extract the data that should be transformed into the internal in-memory high level format. However, it was quite tedious, especially because I wanted to catch every possible error condition and report "You are missing the required field X at Y" rather than the opaque KeyError: X message that would have been the default.
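To give a feel for why this was tedious, here is a minimal sketch of what one such hand-written extractor might look like. It is not the code from the prototype: `ManifestParseError`, `parse_install_rule` and the `path` argument are names made up for this illustration.

```python
from typing import Any, Dict


class ManifestParseError(ValueError):
    """Hypothetical error type that carries a user-facing message."""


def parse_install_rule(raw: Any, path: str) -> Dict[str, str]:
    # `path` describes where in the manifest we are, so the error can say
    # "at Y" instead of leaving the user with a bare KeyError.
    if not isinstance(raw, dict):
        raise ManifestParseError(
            f"Expected a mapping at {path}, got {type(raw).__name__}"
        )
    if "source" not in raw:
        raise ManifestParseError(
            f'You are missing the required field "source" at {path}'
        )
    source = raw["source"]
    if not isinstance(source, str):
        raise ManifestParseError(f'The field "source" at {path} must be a string')
    result = {"source": source}
    # Every optional field needs the same dance again.
    dest_dir = raw.get("dest-dir")
    if dest_dir is not None:
        if not isinstance(dest_dir, str):
            raise ManifestParseError(
                f'The field "dest-dir" at {path} must be a string'
            )
        result["dest-dir"] = dest_dir
    return result
```

Two fields already take around twenty lines, and every new attribute repeats the same checks by hand.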

Beyond being tedious, it was also quite error prone. As an example, in debputy/0.1.4 I added support for the install rule and you should allegedly have been able to add a dest-dir: or an as: inside it. Except I screwed up the code and debputy was attempting to look up these keywords from a dict that could never have them.

Hand-writing these parsers was so annoying that it demotivated me from making manifest related changes to debputy simply because I did not want to code the parsing logic. When I realized this, I figured I had to solve this problem better.

While reflecting on this, I also considered that I eventually wanted plugins

to be able to add vocabulary to the manifest. If the API was "provide a

callback to extract the details of whatever the user provided here", then the

result would be bad.

- Most plugins would probably throw KeyError: X or ValueError style errors for quite a while. Worst case, they would end up on my table because the user would have a hard time telling where debputy ends and where the plugin starts. "Best" case, I would teach debputy to say "This poor error message was brought to you by plugin foo. Go complain to them". Either way, it would be a bad user experience.
- This even assumes plugin providers would actually bother writing manifest parsing code. If it is that difficult, then just providing a custom file in debian might tempt plugin providers and that would undermine the idea of having the manifest be the sole input for debputy.

So beyond me being unsatisfied with the current situation, it was also clear

to me that I needed to come up with a better solution if I wanted externally

provided plugins for

debputy. To put a bit more perspective on what I

expected from the end result:

1) It had to cover as many parsing errors as possible. An error case this code would handle for you would be an error where I could ensure a sufficient degree of detail and context for the user.
2) It should be type-safe / provide typing support such that IDEs/mypy could help you when you work on the parsed result.
3) It had to support "normalization" of the input, such as

       # User provides
       - install: "foo"
       # Which is normalized into:
       - install:
           source: "foo"

4) It must be simple to tell debputy what input you expected.

At this point, I remembered that I had seen a Python (PyPI) package where you could give it a TypedDict and an arbitrary input (sadly, I do not remember the name). The package would then validate said input against the TypedDict. If the match was successful, you would get the result back cast as the TypedDict. If the match was unsuccessful, the code would raise an error for you. Conceptually, this seemed to be a good starting point for where I wanted to be.

Then I looked a bit at the normalization requirement (point 3).

What is really going on here is that you have two "schemas" for the input.

One is what the programmer will see (the normalized form) and the other is

what the user can input (the manifest form). The problem is providing an

automatic normalization from the user input to the simplified programmer

structure. To expand a bit on the following example:

    # User provides
    - install: "foo"
    # Which is normalized into:
    - install:
        source: "foo"

Given that install has the attributes source, sources, dest-dir, as, into, and when, how exactly would you automatically normalize "foo" (str) into source: "foo"? Even if the code filtered by "type" for these attributes, you would end up with at least source, dest-dir, and as as candidates. Turns out that TypedDict actually has this covered. But the Python package was not going in this direction, so I parked it here and started looking into doing my own.

At this point, I had a general idea of what I wanted. When defining an extension to the manifest, the plugin would provide debputy with one or two definitions of TypedDict. The first one would be the "parsed" or "target" format, which would be the normalized form that the plugin provider wanted to work on. For this example, let's look at an earlier version of the install-examples rule:

    # NotRequired is in typing from Python 3.11 (typing_extensions before that).
    from typing import List, NotRequired, TypedDict

    # Example input matching this typed dict.
    #
    #     "sources": ["foo"]
    #     "into": ["pkg"]
    #
    class InstallExamplesTargetFormat(TypedDict):
        # Which source files to install (dest-dir is fixed)
        sources: List[str]
        # Which package(s) that should have these files installed.
        into: NotRequired[List[str]]

In this form, install-examples has two attributes - both are lists of strings. On the flip side, what the user can input would look something like this:

    # Example input matching this typed dict.
    #
    #     "source": "foo"
    #     "into": "pkg"
    #
    class InstallExamplesManifestFormat(TypedDict):
        # Note that sources here is split into source (str) vs. sources (List[str])
        sources: NotRequired[List[str]]
        source: NotRequired[str]
        # We allow the user to write into: foo in addition to into: [foo]
        into: Union[str, List[str]]


    FullInstallExamplesManifestFormat = Union[
        InstallExamplesManifestFormat,
        List[str],
        str,
    ]

The idea was that the plugin provider would use these two definitions to tell

debputy how to parse

install-examples. Pseudo-registration code could

look something like:

    def _handler(
        normalized_form: InstallExamplesTargetFormat,
    ) -> InstallRule:
        ...  # Do something with the normalized form and return an InstallRule.


    concept_debputy_api.add_install_rule(
        keyword="install-examples",
        target_form=InstallExamplesTargetFormat,
        manifest_form=FullInstallExamplesManifestFormat,
        handler=_handler,
    )

This was my conceptual target and while the current actual API ended up being slightly different,

the core concept remains the same.

From concept to basic implementation

Building this code is kind of like swallowing an elephant. There was no way I would just sit down

and write it from one end to the other. So the first prototype of this did not have all the

features it has now.

Spoiler warning, these next couple of sections will contain some Python typing details. When reading this, it might be helpful to know things such as Union[str, List[str]] being the Python type for either a str (string) or a List[str] (list of strings). If typing makes your head spin, these sections might be less interesting for you.

To build this required a lot of playing around with Python's introspection and

typing APIs. My very first draft only had one "schema" (the normalized form) and

had the following features:

- Read TypedDict.__required_keys__ and TypedDict.__optional_keys__ to determine which attributes were present and which were required. This was used for reporting errors when the input did not match.
- Read the types of the provided TypedDict, strip the Required / NotRequired markers and use basic isinstance checks based on the resulting type for str and List[str]. Again, used for reporting errors when the input did not match.
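The two features above can be sketched roughly as follows. This is a simplified illustration rather than debputy's actual code: `check_against` and `_matches` are hypothetical names, and the required/optional split is expressed through `total=False` inheritance so the sketch avoids `NotRequired`. The standard TypedDict attributes for this are named `__required_keys__` and `__optional_keys__`.

```python
from typing import Any, List, TypedDict, get_type_hints


class _RequiredPart(TypedDict):
    sources: List[str]


class InstallExamplesTargetFormat(_RequiredPart, total=False):
    into: List[str]


def _matches(value: Any, expected: Any) -> bool:
    # Only the two types the first draft handled: str and List[str].
    if expected is str:
        return isinstance(value, str)
    if expected == List[str]:
        return isinstance(value, list) and all(isinstance(v, str) for v in value)
    raise NotImplementedError(f"Unsupported type: {expected!r}")


def check_against(schema: Any, raw: Any, path: str) -> List[str]:
    """Return human-readable problems; an empty list means `raw` matches."""
    if not isinstance(raw, dict):
        return [f"Expected a mapping at {path}"]
    required = set(schema.__required_keys__)
    optional = set(schema.__optional_keys__)
    hints = get_type_hints(schema)
    problems = []
    for key in sorted(required - set(raw)):
        problems.append(f'Missing required attribute "{key}" at {path}')
    for key in sorted(set(raw) - required - optional):
        problems.append(f'Unknown attribute "{key}" at {path}')
    for key, value in raw.items():
        if key in hints and not _matches(value, hints[key]):
            problems.append(f'Attribute "{key}" at {path} has the wrong type')
    return problems
```

Calling `check_against(InstallExamplesTargetFormat, {"into": "pkg"}, "install-examples")` reports both the missing `sources` and the wrongly typed `into`, with no attribute-specific code written.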

This prototype did not take long (I remember it being within a day) and worked surprisingly well, though with some poor error messages here and there. Now came the first challenge, adding the manifest format schema plus relevant normalization rules. The very first normalization I did was transforming into: Union[str, List[str]] into into: List[str]. At that time, source was not a separate attribute. Instead, sources was a Union[str, List[str]], so it was the only normalization I needed for all my use-cases at the time.

There are two problems when writing a normalization. First is determining what the "source"

type is, what the target type is and how they relate. The second is providing a runtime

rule for normalizing from the manifest format into the target format. Keeping it simple,

the runtime normalizer for

Union[str, List[str]] -> List[str] was written as:

    def normalize_into_list(x: Union[str, List[str]]) -> List[str]:
        return x if isinstance(x, list) else [x]

This basic form works for all types (assuming none of the types will have List[List[...]]).

The logic for determining when this rule is applicable is slightly more involved. My current code is about 100 lines of Python code that would probably lose most of the casual readers. For the interested, you are looking for _union_narrowing in declarative_parser.py.

With this, when the manifest format had

Union[str, List[str]] and the target format

had

List[str] the generated parser would silently map a string into a list of strings

for the plugin provider.
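As a rough idea of the "when is this rule applicable" check, here is a much reduced sketch that only recognizes the exact `Union[X, List[X]]` to `List[X]` case. It is not the `_union_narrowing` logic itself, which covers more ground; `find_normalizer` is a hypothetical name.

```python
from typing import Any, Callable, List, Optional, Union, get_args, get_origin


def normalize_into_list(x: Any) -> List[Any]:
    return x if isinstance(x, list) else [x]


def find_normalizer(
    manifest_type: Any,
    target_type: Any,
) -> Optional[Callable[[Any], Any]]:
    """Return a normalizer from manifest_type to target_type, if we know one."""
    if manifest_type == target_type:
        # Same type on both sides, nothing to normalize.
        return lambda x: x
    if get_origin(target_type) is not list:
        return None
    target_args = get_args(target_type)
    if len(target_args) != 1:
        return None
    item_type = target_args[0]
    # Accept exactly Union[X, List[X]] (in either order) for a List[X] target.
    if get_origin(manifest_type) is Union and set(get_args(manifest_type)) == {
        item_type,
        target_type,
    }:
        return normalize_into_list
    return None
```

With this, `find_normalizer(Union[str, List[str]], List[str])` hands back `normalize_into_list`, while a mismatched pair yields `None` so the parser generator can reject the definition up front.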

But with that in place, I had covered the basics of what I needed to get started. I was

quite excited about this milestone of having my first keyword parsed without handwriting

the parser logic (at the expense of writing a more generic parse-generator framework).

Adding the first parse hint

With the basic implementation done, I looked at what to do next. As mentioned, at the time sources in the manifest format was Union[str, List[str]] and I considered splitting it into a source: str and a sources: List[str] on the manifest side while keeping the normalized form as sources: List[str]. I ended up committing to this change and that meant I had to solve the problem of getting my parser generator to understand the situation:

    # Map from
    class InstallExamplesManifestFormat(TypedDict):
        # Note that sources here is split into source (str) vs. sources (List[str])
        sources: NotRequired[List[str]]
        source: NotRequired[str]
        # We allow the user to write into: foo in addition to into: [foo]
        into: Union[str, List[str]]


    # ... into
    class InstallExamplesTargetFormat(TypedDict):
        # Which source files to install (dest-dir is fixed)
        sources: List[str]
        # Which package(s) that should have these files installed.
        into: NotRequired[List[str]]

There are two related problems to solve here:

- How will the parser generator understand that source should be normalized and then mapped into sources?
- Once that is solved, the parser generator has to understand that while source and sources are declared as NotRequired, they are part of an "exactly one of" rule (since sources in the target form is Required). This mainly came down to extra bookkeeping and an extra layer of validation once the previous step is solved.

While working on all of this type introspection for Python, I had noted the Annotated[X, ...] type. It is basically a fake type that enables you to attach metadata to the type system. A very random example:

    # For all intents and purposes, foo is a string despite all the Annotated stuff.
    foo: Annotated[str, "hello world"] = "my string here"

The exciting thing is that you can put arbitrary details into the type field and read it

out again in your introspection code. Which meant, I could add "parse hints" into the type.
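Reading the metadata back out could look like the sketch below. The `TargetAttribute` class and `parse_hints_of` function are stand-ins invented for this example (they are not debputy's `DebputyParseHint`); the typing calls themselves (`get_type_hints` with `include_extras=True`, `get_origin`, `get_args`) are standard library.

```python
from typing import Annotated, Any, Dict, List, Tuple, TypedDict
from typing import get_args, get_origin, get_type_hints


class TargetAttribute:
    """Hypothetical parse hint: 'store this value under another attribute name'."""

    def __init__(self, name: str) -> None:
        self.name = name


class ManifestFormat(TypedDict, total=False):
    sources: List[str]
    source: Annotated[str, TargetAttribute("sources")]


def parse_hints_of(schema: Any) -> Dict[str, Tuple[Any, List[Any]]]:
    """Map attribute name -> (underlying type, attached metadata)."""
    found = {}
    # include_extras=True keeps the Annotated wrapper; without it the
    # metadata is stripped and only the plain type remains.
    for attr, hint in get_type_hints(schema, include_extras=True).items():
        if get_origin(hint) is Annotated:
            base_type, *metadata = get_args(hint)
            found[attr] = (base_type, metadata)
    return found
```

Here `parse_hints_of(ManifestFormat)` only contains an entry for `source`, whose underlying type is `str` and whose metadata holds the `TargetAttribute("sources")` instance.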

Some "quick" prototyping later (a day or so), I got the following to work:

    # Map from
    #
    #     "source": "foo"  # (or "sources": ["foo"])
    #     "into": "pkg"
    #
    class InstallExamplesManifestFormat(TypedDict):
        # Note that sources here is split into source (str) vs. sources (List[str])
        sources: NotRequired[List[str]]
        source: NotRequired[
            Annotated[
                str,
                DebputyParseHint.target_attribute("sources")
            ]
        ]
        # We allow the user to write into: foo in addition to into: [foo]
        into: Union[str, List[str]]


    # ... into
    #
    #     "sources": ["foo"]
    #     "into": ["pkg"]
    #
    class InstallExamplesTargetFormat(TypedDict):
        # Which source files to install (dest-dir is fixed)
        sources: List[str]
        # Which package(s) that should have these files installed.
        into: NotRequired[List[str]]

Without me (as a plugin provider) writing a line of code, I can have debputy rename or "merge" attributes from the manifest form into the normalized form. Obviously, this required me (as the debputy maintainer) to write a lot of code so that the other me and future plugin providers did not have to write it.
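At runtime, the rename plus the "exactly one of" bookkeeping could be sketched like this. It assumes the attribute mapping has already been extracted from the parse hints, and it normalizes every value into a list only because both target attributes in this example are lists. `merge_into_target` and its parameters are hypothetical names, not debputy's API.

```python
from typing import Any, Dict, Iterable, List, Mapping


def normalize_into_list(x: Any) -> List[Any]:
    return x if isinstance(x, list) else [x]


def merge_into_target(
    raw: Mapping[str, Any],
    attribute_map: Mapping[str, str],
    required_targets: Iterable[str],
    path: str,
) -> Dict[str, List[Any]]:
    result: Dict[str, List[Any]] = {}
    provided_by: Dict[str, str] = {}  # target attribute -> manifest attribute
    for manifest_attr, value in raw.items():
        # Attributes without a hint keep their own name.
        target_attr = attribute_map.get(manifest_attr, manifest_attr)
        if target_attr in provided_by:
            raise ValueError(
                f'"{provided_by[target_attr]}" and "{manifest_attr}" '
                f"cannot both be used at {path}"
            )
        provided_by[target_attr] = manifest_attr
        result[target_attr] = normalize_into_list(value)
    for target_attr in required_targets:
        if target_attr not in result:
            names = sorted(
                {target_attr}
                | {m for m, t in attribute_map.items() if t == target_attr}
            )
            raise ValueError(
                f"Exactly one of {', '.join(names)} is required at {path}"
            )
    return result
```

For instance, `merge_into_target({"source": "foo", "into": "pkg"}, {"source": "sources"}, ["sources"], "install-examples")` gives `{"sources": ["foo"], "into": ["pkg"]}`, while providing both `source` and `sources`, or neither, raises an error.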

High level typing

At this point, basic normalization between one mapping to another mapping form worked.

But one thing irked me with these install rules. The

into was a list of strings

when the parser handed them over to me. However, I needed to map them to the actual

BinaryPackage (for technical reasons). While I felt I was careful with my manual

mapping, I knew this was exactly the kind of case where a busy programmer would skip

the "is this a known package name" check and some user would typo their package

resulting in an opaque

KeyError: foo.

Side note: "Some user" was me today and I was super glad to see debputy tell me that I had typoed a package name (I would have been happier if I had remembered to use debputy check-manifest, so I did not have to wait through the upstream part of the build that happened before debhelper passed control to debputy...)

I thought adding this feature would be simple enough. It basically needs two things:

- A conversion table where the parser generator can tell that BinaryPackage requires an input of str and a callback to map from str to BinaryPackage. (That is probably a lie. I think the conversion table came later, but honestly I do not remember and I am not digging into the git history for this one)
- At runtime, said callback needed access to the list of known packages, so it could resolve the provided string.
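A conversion table of that kind might be sketched as below. Everything here is a stand-in for illustration: the `BinaryPackage` dataclass is a toy replacement for debputy's real class, and `ParseContext`, `CONVERSIONS` and `convert` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Tuple


@dataclass(frozen=True)
class BinaryPackage:
    """Toy stand-in for debputy's BinaryPackage."""

    name: str


@dataclass
class ParseContext:
    """Hypothetical runtime context holding the known packages."""

    known_packages: Dict[str, BinaryPackage]


def _to_binary_package(value: str, path: str, ctx: ParseContext) -> BinaryPackage:
    try:
        return ctx.known_packages[value]
    except KeyError:
        known = ", ".join(sorted(ctx.known_packages))
        raise ValueError(
            f'Unknown package "{value}" at {path}. Known packages: {known}'
        ) from None


# Target type -> (required input type, callback doing the conversion).
CONVERSIONS: Dict[type, Tuple[type, Callable[[Any, str, ParseContext], Any]]] = {
    BinaryPackage: (str, _to_binary_package),
}


def convert(value: Any, target_type: type, path: str, ctx: ParseContext) -> Any:
    input_type, converter = CONVERSIONS[target_type]
    if not isinstance(value, input_type):
        raise ValueError(f"Expected {input_type.__name__} at {path}")
    return converter(value, path, ctx)
```

The point of the table is that the "is this a known package name" check lives in one place, so a typo produces the same standardized message everywhere instead of a bare KeyError.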

It was not super difficult given the existing infrastructure, but it did take some hours

of coding and debugging. Additionally, I added a parse hint to support making the

into conditional based on whether it was a single binary package. With this done,

you could now write something like:

    # Map from
    class InstallExamplesManifestFormat(TypedDict):
        # Note that sources here is split into source (str) vs. sources (List[str])
        sources: NotRequired[List[str]]
        source: NotRequired[
            Annotated[
                str,
                DebputyParseHint.target_attribute("sources")
            ]
        ]
        # We allow the user to write into: foo in addition to into: [foo]
        into: Union[BinaryPackage, List[BinaryPackage]]


    # ... into
    class InstallExamplesTargetFormat(TypedDict):
        # Which source files to install (dest-dir is fixed)
        sources: List[str]
        # Which package(s) that should have these files installed.
        into: NotRequired[
            Annotated[
                List[BinaryPackage],
                DebputyParseHint.required_when_multi_binary()
            ]
        ]

Code-wise, I still had to check for into being absent and provide a default for that case (that is still true in the current codebase - I will hopefully fix that eventually). But I now had less room for mistakes and a standardized error message when you misspell the package name, which was a plus.

The added side-effect - Introspection

A lovely side-effect of all the parsing logic being provided to debputy in a declarative form was that the generated parser snippets had fields containing all expected attributes with their types, which attributes were required, etc. This meant that adding an introspection feature where you can ask debputy "What does an install rule look like?" was quite easy. The code base already knew all of this, so the "hard" part was resolving the input to the concrete rule and then rendering it to the user.

I added this feature recently along with the ability to provide online documentation for parser rules. I covered that in more detail in my blog post Providing online reference documentation for debputy in case you are interested. :)

Wrapping it up

This was a short insight into how

debputy parses your input. With this declarative

technique:

- The parser engine handles most of the error reporting, meaning users get most of the errors in a standard format without the plugin provider having to spend any effort on it. There will be some effort in more complex cases. But the common cases are done for you.
- It is easy to provide flexibility to users while avoiding having to write code to normalize the user input into a simplified programmer oriented format.
- The parser handles mapping from basic types into higher forms for you. These days, we have high level types like FileSystemMode (either an octal or a symbolic mode), different kinds of file system matches depending on whether globs should be performed, etc. These types include their own validation and parsing rules that debputy handles for you.
- Introspection and support for providing online reference documentation. Also, debputy checks that the provided attribute documentation covers all the attributes in the manifest form. If you add a new attribute, debputy will remind you if you forget to document it as well. :)

In this way everybody wins. Yes, writing this parser generator code was more enjoyable than

writing the ad-hoc manual parsers it replaced. :)

Having set up recursive DNS it was time to actually sort out a backup internet connection. I live in a Virgin Media area, but I still haven't forgiven them for my terrible Virgin experiences when moving here. Plus it involves a bigger contractual commitment. There are no altnets locally (though I'm watching youfibre who have already rolled out in a few Belfast exchanges), so I decided to go for a 5G modem. That gives some flexibility, and is a bit easier to get up and running.

I started by purchasing a ZTE MC7010. This had the advantage of being reasonably cheap off eBay, not having any wifi functionality I would just have to disable (it's going to plug into the same router the FTTP connection terminates on), being outdoor mountable should I decide to go that way, and, finally, being powered via PoE.

For now this device sits on the window sill in my study, which is at the top of the house. I printed a table stand for it which mostly does the job (though not as well with a normal, rather than flat, network cable). The router lives downstairs, so I've extended a dedicated VLAN through the study switch, down to the core switch and out to the router. The PoE study switch can only do GigE, not 2.5Gb/s, but at present that's far from the limiting factor on the speed of the connection.

The device is 3 branded, and, as it happens, I've ended up with a 3 SIM in it. Up until recently my personal phone was with them, but they've kicked me off Go Roam, so I've moved. Going with 3 for the backup connection provides some slight extra measure of resiliency; we now have devices on all 4 major UK networks in the house. The SIM is a preloaded data only SIM good for a year; I don't expect to use all of the data allowance, but I didn't want to have to worry about unexpected excess charges.

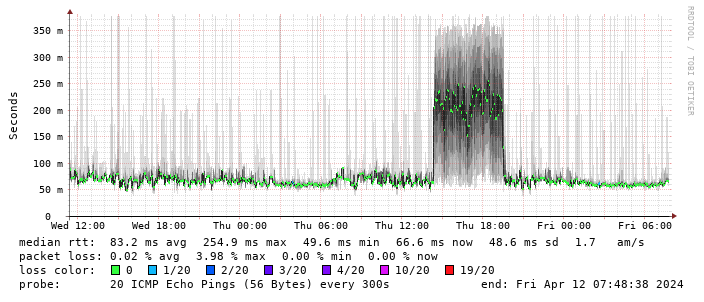

Performance turns out to be disappointing; I end up locking the device to 4G as the 5G signal is marginal - leaving it enabled results in constantly switching between 4G + 5G and a significant extra latency. The smokeping graph below shows a brief period where I removed the 4G lock and allowed 5G:

(There's a handy zte.js script to allow doing this from the device web interface.)

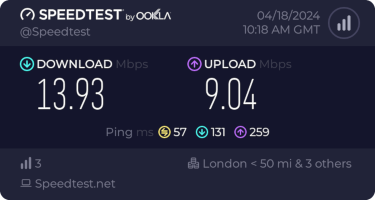

I get about 10Mb/s sustained downloads out of it. EE/Vodafone did not lead to significantly better results, so for now I'm accepting it is what it is. I tried relocating the device to another part of the house (a little tricky while still providing switch-based PoE, but I have an injector), without much improvement. Equally pinning the 4G to certain bands provided a short term improvement (I got up to 40-50Mb/s sustained), but not reliably so.

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

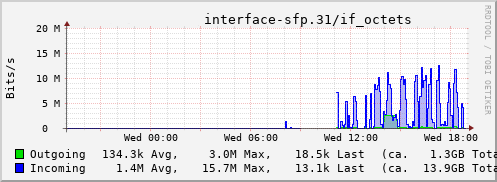

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I'm reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an ad hoc video call arranged. I'm pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

Years ago, at what I think I remember was DebConf 15, I hacked for a while

on debhelper to

The Debian Project Developers will shortly vote for a new Debian Project Leader

known as the DPL.

The DPL is the official representative of The Debian Project tasked with managing the overall project, its vision, direction, and finances.

The DPL is also responsible for the selection of Delegates, defining areas of

responsibility within the project, the coordination of Developers, and making

decisions required for the project.

Our outgoing and present DPL Jonathan Carter served 4 terms, from 2020

through 2024. Jonathan shared his last

I recently became a maintainer of/committer to

A welcome sign at Bangkok's Suvarnabhumi airport.

Bus from Suvarnabhumi Airport to Jomtien Beach in Pattaya.

Road near Jomtien beach in Pattaya

Photo of a songthaew in Pattaya. There are shared songthaews which run along Jomtien Second Road and cost 10 baht to anywhere on the route.

Jomtien Beach in Pattaya.

A welcome sign at Pattaya Floating market.

This Korean Vegetasty noodles pack was yummy and was available at many 7-Eleven stores.

Wat Arun temple stamps your hand upon entry

Wat Arun temple

Khao San Road

A food stall at Khao San Road

Chao Phraya Express Boat

Banana with yellow flesh

Fruits at a stall in Bangkok

Trimmed pineapples from Thailand.

Corn in Bangkok.

A board showing coffee menu at a 7-Eleven store along with rates in Pattaya.

In this section of 7-Eleven, you can buy a premix coffee and mix it with hot water provided at the store to prepare.

Red wine being served in Air India

If you've perused the

Insert obligatory "not THAT data" comment here

If you don't know who Professor Julius Sumner Miller is, I highly recommend

No thanks, Bender, I'm busy tonight

Probably a trait of my family's origins as migrants from East Europe, probably part of the collective trauma of Jews throughout the world or probably because that's just who I turned out to be, I hold in high regard the preservation of memory of my family's photos, movies and such items. And it's a trait shared by many people in my family group.

Shortly after my grandmother died 24 years ago, my mother did a large, loving work of digitization and restoration of my grandparents' photos. Sadly, the higher resolution copies of said photos are lost, but she took on the work of not just scanning the photos, but assembling them in presentations, telling a story, introducing my older relatives, many of them missing 40 or more years before my birth.

But said presentations were built using Flash. Right, not my choice of tool, and I told her back in the day, but given I wasn't around to do the work in what I'd chosen (a standards-abiding format, naturally), and given my graphic design skills are nonexistent... Several years ago, when Adobe pulled the plug on the Flash format, we realized they would no longer be accessible. I managed to get the photos out of the presentations, but lost the narration, which is a great part of the work.

Three days ago, however, I read a post on

Apparently I got an
