Anuradha Weeraman: This is a test

Testing 1 2 3

Testing 1 2 3

Testing 1 2 3

Testing 1 2 3

A few days ago CISPE, a trade association of European cloud providers, published a press release complaining about the new VMware licensing scheme and asking for regulators and legislators to intervene.

But VMware does not have a monopoly on virtualization software: I think that asking regulators to interfere is unnecessary and unwise, unless, of course, they wish to question the entire foundations of copyright. Which, on the other hand, could be an intriguing position that I would support...

I believe that over-reliance on a single supplier is a typical enterprise risk: in the past decade some companies have invested in developing their own virtualization infrastructure using free software, while others have decided to rely entirely on a single proprietary software vendor.

My only big concern is that many public sector organizations will continue to use VMware and pay the huge fees designed by Broadcom to extract the maximum amount of money from their customers. However, it is ultimately the citizens who pay these bills, and blaming the evil US corporation is a great way to avoid taking responsibility for these choices.

A few days ago CISPE, a trade association of European cloud providers, published a press release complaining about the new VMware licensing scheme and asking for regulators and legislators to intervene.

But VMware does not have a monopoly on virtualization software: I think that asking regulators to interfere is unnecessary and unwise, unless, of course, they wish to question the entire foundations of copyright. Which, on the other hand, could be an intriguing position that I would support...

I believe that over-reliance on a single supplier is a typical enterprise risk: in the past decade some companies have invested in developing their own virtualization infrastructure using free software, while others have decided to rely entirely on a single proprietary software vendor.

My only big concern is that many public sector organizations will continue to use VMware and pay the huge fees designed by Broadcom to extract the maximum amount of money from their customers. However, it is ultimately the citizens who pay these bills, and blaming the evil US corporation is a great way to avoid taking responsibility for these choices.

"Several CISPE members have stated that without the ability to license and use VMware products they will quickly go bankrupt and out of business."Insert here the Jeremy Clarkson "Oh no! Anyway..." meme.

inn2 uses ephemeral UNIX domain sockets in /run/news/ to communicate with the ctlinnd program. Since the directory is only writeable by the "news" user, other unprivileged users are not able to use the command.

I solved this by extending the inn2.service systemd unit with a drop-in file which uses setfacl to give access to my user "md" to the RuntimeDirectory created by systemd. This is the content of /etc/systemd/system/inn2.service.d/md-ctlinnd.conf:

inn2 uses ephemeral UNIX domain sockets in /run/news/ to communicate with the ctlinnd program. Since the directory is only writeable by the "news" user, other unprivileged users are not able to use the command.

I solved this by extending the inn2.service systemd unit with a drop-in file which uses setfacl to give access to my user "md" to the RuntimeDirectory created by systemd. This is the content of /etc/systemd/system/inn2.service.d/md-ctlinnd.conf:

[Service] # innd will change the permissions of /run/news/ when started: without # creating it now with mode 0775 then that will change the ACL mask. RuntimeDirectoryMode=0775 # allow user md to run ctlinnd(8), which creates sockets in /run/news/ ExecStartPost=/usr/bin/setfacl --modify user:md:rwx $RUNTIME_DIRECTORYThe non-obvious issue here is that the innd daemon on startup will change the directory permissions in a way which sets a more restrictive (non group-writeable) ACL mask, and this would make the newly created user ACL ineffective. The solution is to create the directory group-writeable from start. (Beware: this creates a trivial privileges escalation from md to news.)

Contributing to Debian

is part of Freexian s mission. This article

covers the latest achievements of Freexian and their collaborators. All of this

is made possible by organizations subscribing to our

Long Term Support contracts and

consulting services.

Contributing to Debian

is part of Freexian s mission. This article

covers the latest achievements of Freexian and their collaborators. All of this

is made possible by organizations subscribing to our

Long Term Support contracts and

consulting services.

extract-source job, used to

produce a debianize source tree of the project. This job was introduced to make

it possible to build the projects on different architectures, on the subsequent

build jobs. However, that extract-source approach is sub-optimal: not only it

increases the execution time of the pipeline by some minutes, but also projects

whose source tree is too large are not able to use the pipeline. The debianize

source tree is passed as an artifact to the build jobs, and for those large

projects, the size of their source tree exceeds the Salsa s limits. This is

specific issue is documented as

issue #195, and

the proposed solution is to get rid of the extract-source job, relying on

sbuild in the very build job (see

issue #296).

Switching to sbuild would also help to improve the build source job,

solving issues such as

#187 and

#298.

The

current work-in-progress

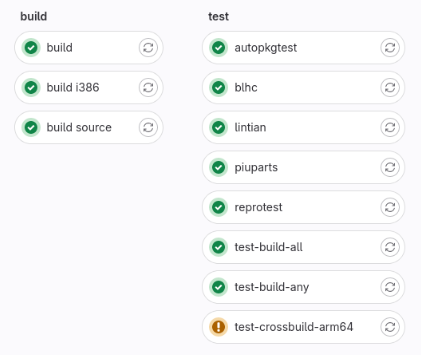

is very preliminary, but it has already been possible to run the build (amd64),

build-i386 and build-source job using sbuild with the

The

current work-in-progress

is very preliminary, but it has already been possible to run the build (amd64),

build-i386 and build-source job using sbuild with the unshare mode. The image

on the right shows a pipeline that builds grep. All the test jobs use the

artifacts of the new build job. There is a lot of remaining work, mainly making

the integration with ccache work. This change could break some things, it will

also be important to test how the new pipeline works with complex projects.

Also, thanks to Emmanuel Arias, we are proposing a

Google Summer of Code 2024 project

to improve Salsa CI. As part of the ongoing work in preparation for the GSoC

2024 project, Santiago has proposed a

merge request

to make more efficient how contributors can test their changes on the Salsa CI

pipeline.

debootstrap. Notably missing is glibc, which turns out

harder than anticipated via dumat, because

it has Conflicts between different architectures, which dumat does not analyze.

Patches for diversion mitigations have been updated in a way to not exhibit any

loss anymore.

The main change here is that packages which are being diverted now support the

diverting packages in transitioning their diversions. We also supported a few

packages with non-trivial changes such as

netplan.io. dumat has been enhanced to

better support derivatives such as Ubuntu.

-for-host support to gcc-defaults.dput-ng enabling dcut migrate and merging two MRs of Ben

Hutchings. Contributing to Debian

is part of Freexian s mission. This article

covers the latest achievements of Freexian and their collaborators. All of this

is made possible by organizations subscribing to our

Long Term Support contracts and

consulting services.

Contributing to Debian

is part of Freexian s mission. This article

covers the latest achievements of Freexian and their collaborators. All of this

is made possible by organizations subscribing to our

Long Term Support contracts and

consulting services.

udev rules, dh_installudev now installs rules to

/usr.

The story around diversions took another detour. We learned that

conflicts do not reliably prevent concurrent unpack

and the reiterated mitigation for molly-guard triggered this. After a bit of

back and forth and consultation with the developer mailing list, we concluded

that avoiding the problematic behavior when using apt or an apt-based

upgrader combined with a loss mitigation would be good enough. The involved

packages bfh-container, molly-guard, progress-linux-container and

systemd have since been uploaded to unstable and the matter seems finally

solved except that it

doesn t quite work with sysvinit yet. The

same approach is now being proposed for the diversions of

zutils for

gzip. We thank involved maintainers for

their timely cooperation.

Build-Depends of the linux source

package for instance. While this has been solved for binutils a while back,

the patches for gcc have been unfinished. With lots of constructive feedback

from gcc package maintainer Matthias Klose, Helmut worked on finalizing and

testing these patches. Patch stacks are now available for

gcc-13 and

gcc-14 and

Matthias already included parts of them in test builds for Ubuntu noble.

Finishing this work would enable us to resolve around 1000 cross build

dependency satisfiability issues in unstable.

gcc-for-host work.rebootstrap. In December, blt started

depending on libjpeg and this poses a

dependency loop. Ideally, Python would

stop depending on blt. Also linux-libc-dev having become

Multi-Arch: foreign poses non-trivial issues that are not fully resolved

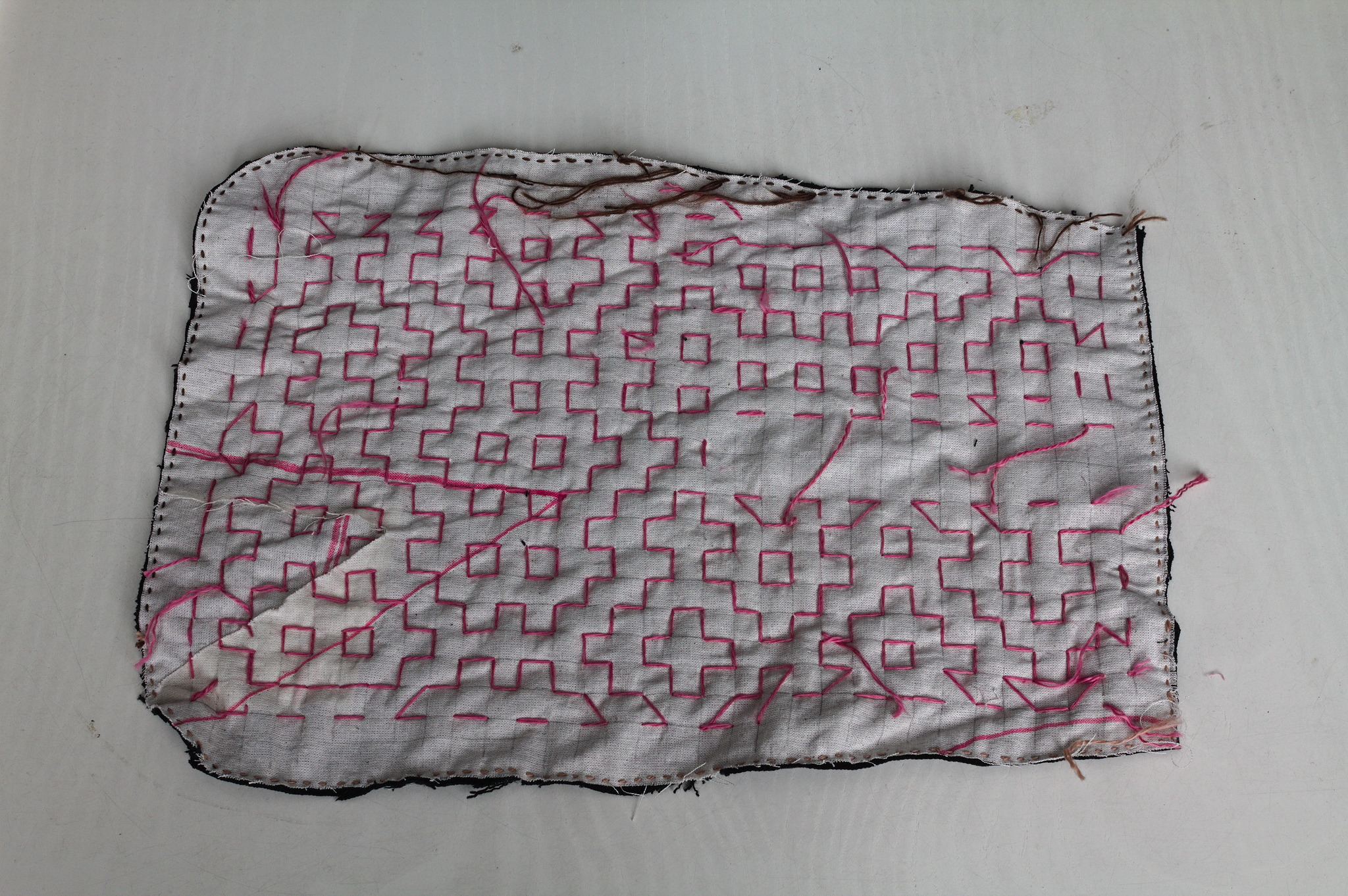

yet. Lately I ve seen people on the internet talking about victorian crazy

quilting. Years ago I had watched a Numberphile video about Hitomezashi

Stitch Patterns based on numbers, words or randomness.

Few weeks ago I had cut some fabric piece out of an old pair of jeans

and I had a lot of scraps that were too small to do anything useful on

their own.

It easy to see where this can go, right?

Lately I ve seen people on the internet talking about victorian crazy

quilting. Years ago I had watched a Numberphile video about Hitomezashi

Stitch Patterns based on numbers, words or randomness.

Few weeks ago I had cut some fabric piece out of an old pair of jeans

and I had a lot of scraps that were too small to do anything useful on

their own.

It easy to see where this can go, right?

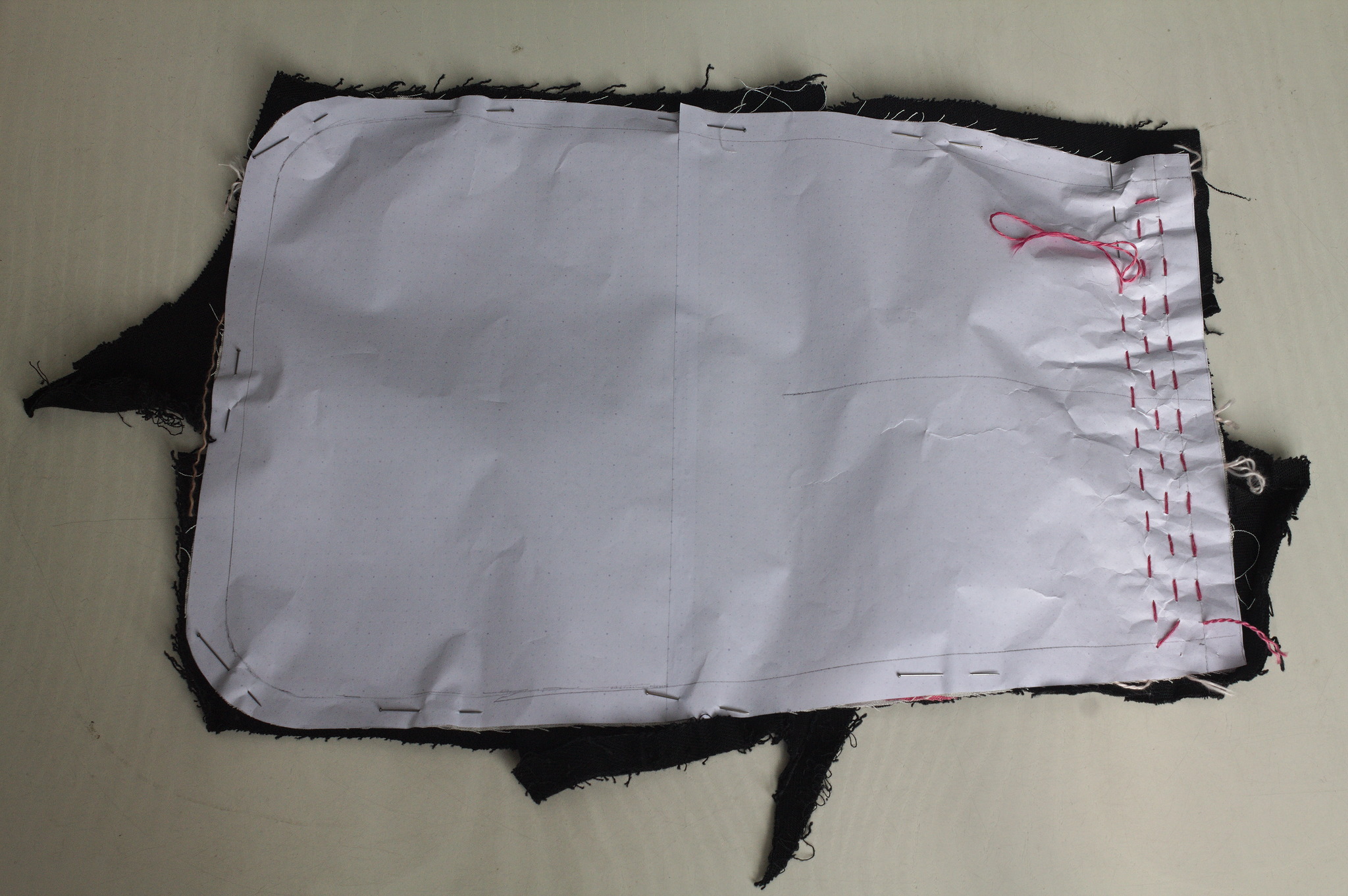

I cut a pocket shape out of old garment mockups (this required some

piecing), drew a square grid, arranged scraps of jeans to cover the

other side, kept everything together with a lot of pins, carefully

avoided basting anything, and started covering everything in sashiko /

hitomezashi stitches, starting each line with a stitch on the front or

the back of the work based on the result of:

I cut a pocket shape out of old garment mockups (this required some

piecing), drew a square grid, arranged scraps of jeans to cover the

other side, kept everything together with a lot of pins, carefully

avoided basting anything, and started covering everything in sashiko /

hitomezashi stitches, starting each line with a stitch on the front or

the back of the work based on the result of:

import random

random.choice(["front", "back"]) For the second piece I tried to use a piece of paper with the square

grid instead of drawing it on the fabric: it worked, mostly, I would not

do it again as removing the paper was more of a hassle than drawing the

lines in the first place. I suspected it, but had to try it anyway.

For the second piece I tried to use a piece of paper with the square

grid instead of drawing it on the fabric: it worked, mostly, I would not

do it again as removing the paper was more of a hassle than drawing the

lines in the first place. I suspected it, but had to try it anyway.

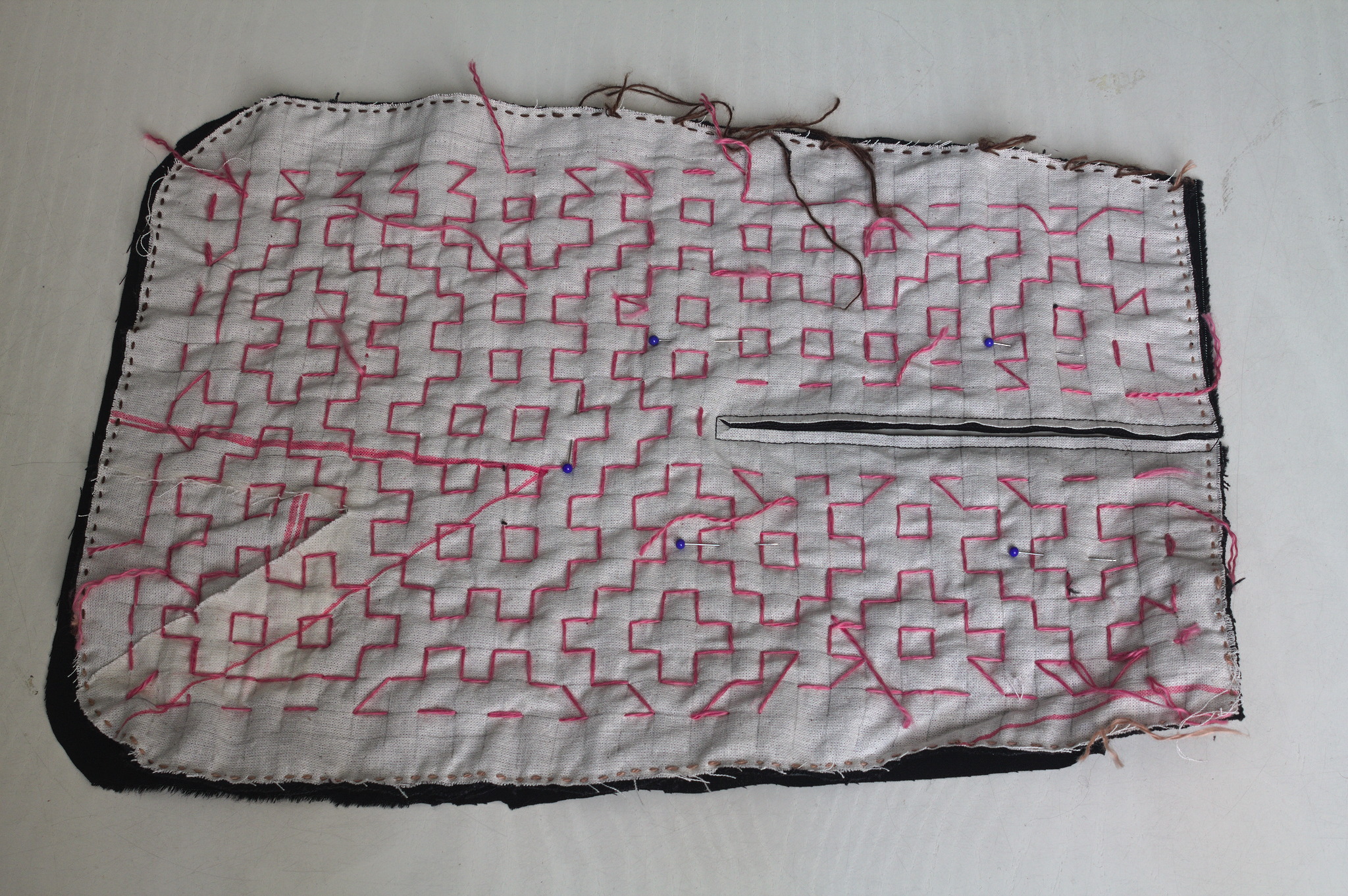

Then I added a lining from some plain black cotton from the stash; for

the slit I put the lining on the front right sides together, sewn

at 2 mm from the marked slit, cut it, turned the lining to the back

side, pressed and then topstitched as close as possible to the slit from

the front.

Then I added a lining from some plain black cotton from the stash; for

the slit I put the lining on the front right sides together, sewn

at 2 mm from the marked slit, cut it, turned the lining to the back

side, pressed and then topstitched as close as possible to the slit from

the front.

I bound everything with bias tape, adding herringbone tape loops at the

top to hang it from a belt (such as one made from the waistband of one

of the donor pair of jeans) and that was it.

I bound everything with bias tape, adding herringbone tape loops at the

top to hang it from a belt (such as one made from the waistband of one

of the donor pair of jeans) and that was it.

I like the way the result feels; maybe it s a bit too stiff for a

pocket, but I can see it work very well for a bigger bag, and maybe even

a jacket or some other outer garment.

I like the way the result feels; maybe it s a bit too stiff for a

pocket, but I can see it work very well for a bigger bag, and maybe even

a jacket or some other outer garment.

| Series: | Deep Witches #1 |

| Publisher: | A Girl and Her Fed Books |

| Copyright: | March 2021 |

| ISBN: | blackwing-war |

| Format: | Kindle |

| Pages: | 284 |

Daniel Kahn Gillmor<dkg@debian.org><dkg@fifthhorseman.net>-----BEGIN PGP PUBLIC KEY BLOCK-----

xjMEZXEJyxYJKwYBBAHaRw8BAQdA5BpbW0bpl5qCng/RiqwhQINrplDMSS5JsO/Y

O+5Zi7HCwAsEHxYKAH0FgmVxCcsDCwkHCRC7fpEBSV5r90cUAAAAAAAeACBzYWx0

QG5vdGF0aW9ucy5zZXF1b2lhLXBncC5vcmfUAgfN9tyTSxpxhmHA1r63GiI4v6NQ

mrrWVLOBRJYuhQMVCggCmwECHgEWIQTUdwQMcMIValwphUm7fpEBSV5r9wAAmaEA

/3MvYJMxQdLhIG4UDNMVd2bsovwdcTrReJhLYyFulBrwAQD/j/RS+AXQIVtkcO9b

l6zZTAO9x6yfkOZbv0g3eNyrAs0QPGRrZ0BkZWJpYW4ub3JnPsLACwQTFgoAfQWC

ZXEJywMLCQcJELt+kQFJXmv3RxQAAAAAAB4AIHNhbHRAbm90YXRpb25zLnNlcXVv

aWEtcGdwLm9yZ4l+Z3i19Uwjw3CfTNFCDjRsoufMoPOM7vM8HoOEdn/vAxUKCAKb

AQIeARYhBNR3BAxwwhVqXCmFSbt+kQFJXmv3AAALZQEAhJsgouepQVV98BHUH6Sv

WvcKrb8dQEZOvHFbZQQPNWgA/A/DHkjYKnUkCg8Zc+FonqOS/35sHhNA8CwqSQFr

tN4KzRc8ZGtnQGZpZnRoaG9yc2VtYW4ubmV0PsLACgQTFgoAfQWCZXEJywMLCQcJ

ELt+kQFJXmv3RxQAAAAAAB4AIHNhbHRAbm90YXRpb25zLnNlcXVvaWEtcGdwLm9y

ZxLvwkgnslsAuo+IoSa9rv8+nXpbBdab2Ft7n4H9S+d/AxUKCAKbAQIeARYhBNR3

BAxwwhVqXCmFSbt+kQFJXmv3AAAtFgD4wqcUfQl7nGLQOcAEHhx8V0Bg8v9ov8Gs

Y1ei1BEFwAD/cxmxmDSO0/tA+x4pd5yIvzgfGYHSTxKS0Ww3hzjuZA7NE0Rhbmll

bCBLYWhuIEdpbGxtb3LCwA4EExYKAIAFgmVxCcsDCwkHCRC7fpEBSV5r90cUAAAA

AAAeACBzYWx0QG5vdGF0aW9ucy5zZXF1b2lhLXBncC5vcmd7X4TgiINwnzh4jar0

Pf/b5hgxFPngCFxJSmtr/f0YiQMVCggCmQECmwECHgEWIQTUdwQMcMIValwphUm7

fpEBSV5r9wAAMuwBAPtMonKbhGOhOy+8miAb/knJ1cIPBjLupJbjM+NUE1WyAQD1

nyGW+XwwMrprMwc320mdJH9B0jdokJZBiN7++0NoBM4zBGVxCcsWCSsGAQQB2kcP

AQEHQI19uRatkPSFBXh8usgciEDwZxTnnRZYrhIgiFMybBDQwsC/BBgWCgExBYJl

cQnLCRC7fpEBSV5r90cUAAAAAAAeACBzYWx0QG5vdGF0aW9ucy5zZXF1b2lhLXBn

cC5vcmfCopazDnq6hZUsgVyztl5wmDCmxI169YLNu+IpDzJEtQKbAr6gBBkWCgBv

BYJlcQnLCRB3LRYeNc1LgUcUAAAAAAAeACBzYWx0QG5vdGF0aW9ucy5zZXF1b2lh

LXBncC5vcmcQglI7G7DbL9QmaDkzcEuk3QliM4NmleIRUW7VvIBHMxYhBHS8BMQ9

hghL6GcsBnctFh41zUuBAACwfwEAqDULksr8PulKRcIP6N9NI/4KoznyIcuOHi8q

Gk4qxMkBAIeV20SPEnWSw9MWAb0eKEcfupzr/C+8vDvsRMynCWsDFiEE1HcEDHDC

FWpcKYVJu36RAUlea/cAAFD1AP0YsE3Eeig1tkWaeyrvvMf5Kl1tt2LekTNWDnB+

FUG9SgD+Ka8vfPR8wuV8D3y5Y9Qq9xGO+QkEBCW0U1qNypg65QHOOARlcQnLEgor

BgEEAZdVAQUBAQdAWTLEa0WmnhUmDBdWXX0ZlYAa4g1CK/fXg0NPOQSteA4DAQgH

wsAABBgWCgByBYJlcQnLCRC7fpEBSV5r90cUAAAAAAAeACBzYWx0QG5vdGF0aW9u

cy5zZXF1b2lhLXBncC5vcmexrMBZe0QdQ+ZJOZxFkAiwCw2I7yTSF2Ox9GVFWKmA

mAKbDBYhBNR3BAxwwhVqXCmFSbt+kQFJXmv3AABcJQD/f4ltpSvLBOBEh/C2dIYa

dgSuqkCqq0B4WOhFRkWJZlcA/AxqLWG4o8UrrmwrmM42FhgxKtEXwCSHE00u8wR4

Up8G

=9Yc8

-----END PGP PUBLIC KEY BLOCK-----

|

| Photo by Pixabay |

Given a typical install of 3 generic kernel ABIs in the default configuration on a regular-sized VM (2 CPU cores 8GB of RAM) the following metrics are achieved in Ubuntu 23.10 versus Ubuntu 22.04 LTS:

2x less disk space used (1,417MB vs 2,940MB, including initrd)

3x less peak RAM usage for the initrd boot (68MB vs 204MB)

0.5x increase in download size (949MB vs 600MB)

2.5x faster initrd generation (4.5s vs 11.3s)

approximately the same total time (103s vs 98s, hardware dependent)

For minimal cloud images that do not install either linux-firmware or modules extra the numbers are:

1.3x less disk space used (548MB vs 742MB)

2.2x less peak RAM usage for initrd boot (27MB vs 62MB)

0.4x increase in download size (207MB vs 146MB)

Hopefully, the compromise of download size, relative to the disk space & initrd savings is a win for the majority of platforms and use cases. For users on extremely expensive and metered connections, the likely best saving is to receive air-gapped updates or skip updates.

This was achieved by precompressing kernel modules & firmware files with the maximum level of Zstd compression at package build time; making actual .deb files uncompressed; assembling the initrd using split cpio archives - uncompressed for the pre-compressed files, whilst compressing only the userspace portions of the initrd; enabling in-kernel module decompression support with matching kmod; fixing bugs in all of the above, and landing all of these things in time for the feature freeze. Whilst leveraging the experience and some of the design choices implementations we have already been shipping on Ubuntu Core. Some of these changes are backported to Jammy, but only enough to support smooth upgrades to Mantic and later. Complete gains are only possible to experience on Mantic and later.

The discovered bugs in kernel module loading code likely affect systems that use LoadPin LSM with kernel space module uncompression as used on ChromeOS systems. Hopefully, Kees Cook or other ChromeOS developers pick up the kernel fixes from the stable trees. Or you know, just use Ubuntu kernels as they do get fixes and features like these first.

The team that designed and delivered these changes is large: Benjamin Drung, Andrea Righi, Juerg Haefliger, Julian Andres Klode, Steve Langasek, Michael Hudson-Doyle, Robert Kratky, Adrien Nader, Tim Gardner, Roxana Nicolescu - and myself Dimitri John Ledkov ensuring the most optimal solution is implemented, everything lands on time, and even implementing portions of the final solution.

Hi, It's me, I am a Staff Engineer at Canonical and we are hiring https://canonical.com/careers.

Lots of additional technical details and benchmarks on a huge range of diverse hardware and architectures, and bikeshedding all the things below:

My previously mentioned C.H.I.P. repurposing has been partly successful; I ve found a use for it (which I still need to write up), but unfortunately it s too useful and the fact it s still a bit flaky has become a problem. I spent a while trying to isolate exactly what the problem is (I m still seeing occasional hard hangs with no obvious debug output in the logs or on the serial console), then realised I should just buy one of the cheap ARM SBC boards currently available.

The C.H.I.P. is based on an Allwinner R8, which is a single ARM v7 core (an A8). So it s fairly low power by today s standards and it seemed pretty much any board would probably do. I considered a Pi 2 Zero, but couldn t be bothered trying to find one in stock at a reasonable price (I ve had one on backorder from CPC since May 2022, and yes, I know other places have had them in stock since but I don t need one enough to chase and I m now mostly curious about whether it will ever ship). As the title of this post gives away, I settled on a Banana Pi BPI-M2 Zero, which is based on an Allwinner H3. That s a quad-core ARM v7 (an A7), so a bit more oompfh than the C.H.I.P. All in all it set me back 25, including a set of heatsinks that form a case around it.

I started with the vendor provided Debian SD card image, which is based on Debian 9 (stretch) and so somewhat old. I was able to dist-upgrade my way through buster and bullseye, and end up on bookworm. I then discovered the bookworm 6.1 kernel worked just fine out of the box, and even included a suitable DTB. Which got me thinking about whether I could do a completely fresh Debian install with minimal tweaking.

First thing, a boot loader. The Allwinner chips are nice in that they ll boot off SD, so I just needed a suitable u-boot image. Rather than go with the vendor image I had a look at mainline and discovered it had support! So let s build a clean image:

My previously mentioned C.H.I.P. repurposing has been partly successful; I ve found a use for it (which I still need to write up), but unfortunately it s too useful and the fact it s still a bit flaky has become a problem. I spent a while trying to isolate exactly what the problem is (I m still seeing occasional hard hangs with no obvious debug output in the logs or on the serial console), then realised I should just buy one of the cheap ARM SBC boards currently available.

The C.H.I.P. is based on an Allwinner R8, which is a single ARM v7 core (an A8). So it s fairly low power by today s standards and it seemed pretty much any board would probably do. I considered a Pi 2 Zero, but couldn t be bothered trying to find one in stock at a reasonable price (I ve had one on backorder from CPC since May 2022, and yes, I know other places have had them in stock since but I don t need one enough to chase and I m now mostly curious about whether it will ever ship). As the title of this post gives away, I settled on a Banana Pi BPI-M2 Zero, which is based on an Allwinner H3. That s a quad-core ARM v7 (an A7), so a bit more oompfh than the C.H.I.P. All in all it set me back 25, including a set of heatsinks that form a case around it.

I started with the vendor provided Debian SD card image, which is based on Debian 9 (stretch) and so somewhat old. I was able to dist-upgrade my way through buster and bullseye, and end up on bookworm. I then discovered the bookworm 6.1 kernel worked just fine out of the box, and even included a suitable DTB. Which got me thinking about whether I could do a completely fresh Debian install with minimal tweaking.

First thing, a boot loader. The Allwinner chips are nice in that they ll boot off SD, so I just needed a suitable u-boot image. Rather than go with the vendor image I had a look at mainline and discovered it had support! So let s build a clean image:

noodles@buildhost:~$ mkdir ~/BPI

noodles@buildhost:~$ cd ~/BPI

noodles@buildhost:~/BPI$ ls

noodles@buildhost:~/BPI$ git clone https://source.denx.de/u-boot/u-boot.git

Cloning into 'u-boot'...

remote: Enumerating objects: 935825, done.

remote: Counting objects: 100% (5777/5777), done.

remote: Compressing objects: 100% (1967/1967), done.

remote: Total 935825 (delta 3799), reused 5716 (delta 3769), pack-reused 930048

Receiving objects: 100% (935825/935825), 186.15 MiB 2.21 MiB/s, done.

Resolving deltas: 100% (785671/785671), done.

noodles@buildhost:~/BPI$ mkdir u-boot-build

noodles@buildhost:~/BPI$ cd u-boot

noodles@buildhost:~/BPI/u-boot$ git checkout v2023.07.02

...

HEAD is now at 83cdab8b2c Prepare v2023.07.02

noodles@buildhost:~/BPI/u-boot$ make O=../u-boot-build bananapi_m2_zero_defconfig

HOSTCC scripts/basic/fixdep

GEN Makefile

HOSTCC scripts/kconfig/conf.o

YACC scripts/kconfig/zconf.tab.c

LEX scripts/kconfig/zconf.lex.c

HOSTCC scripts/kconfig/zconf.tab.o

HOSTLD scripts/kconfig/conf

#

# configuration written to .config

#

make[1]: Leaving directory '/home/noodles/BPI/u-boot-build'

noodles@buildhost:~/BPI/u-boot$ cd ../u-boot-build/

noodles@buildhost:~/BPI/u-boot-build$ make CROSS_COMPILE=arm-linux-gnueabihf-

GEN Makefile

scripts/kconfig/conf --syncconfig Kconfig

...

LD spl/u-boot-spl

OBJCOPY spl/u-boot-spl-nodtb.bin

COPY spl/u-boot-spl.bin

SYM spl/u-boot-spl.sym

MKIMAGE spl/sunxi-spl.bin

MKIMAGE u-boot.img

COPY u-boot.dtb

MKIMAGE u-boot-dtb.img

BINMAN .binman_stamp

OFCHK .config

noodles@buildhost:~/BPI/u-boot-build$ ls -l u-boot-sunxi-with-spl.bin

-rw-r--r-- 1 noodles noodles 494900 Aug 8 08:06 u-boot-sunxi-with-spl.bin

noodles@buildhost:~/BPI$ wget https://deb.debian.org/debian/dists/bookworm/main/installer-armhf/20230607%2Bdeb12u1/images/netboot/netboot.tar.gz

...

2023-08-08 10:15:03 (34.5 MB/s) - netboot.tar.gz saved [37851404/37851404]

noodles@buildhost:~/BPI$ tar -axf netboot.tar.gz

noodles@buildhost:~/BPI$ sudo fdisk /dev/sdb

Welcome to fdisk (util-linux 2.38.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): o

Created a new DOS (MBR) disklabel with disk identifier 0x793729b3.

Command (m for help): n

Partition type

p primary (0 primary, 0 extended, 4 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (1-4, default 1):

First sector (2048-60440575, default 2048):

Last sector, +/-sectors or +/-size K,M,G,T,P (2048-60440575, default 60440575): +500M

Created a new partition 1 of type 'Linux' and of size 500 MiB.

Command (m for help): t

Selected partition 1

Hex code or alias (type L to list all): c

Changed type of partition 'Linux' to 'W95 FAT32 (LBA)'.

Command (m for help): n

Partition type

p primary (1 primary, 0 extended, 3 free)

e extended (container for logical partitions)

Select (default p):

Using default response p.

Partition number (2-4, default 2):

First sector (1026048-60440575, default 1026048):

Last sector, +/-sectors or +/-size K,M,G,T,P (534528-60440575, default 60440575):

Created a new partition 2 of type 'Linux' and of size 28.3 GiB.

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

$ sudo mkfs -t vfat -n BPI-UBOOT /dev/sdb1

mkfs.fat 4.2 (2021-01-31)

noodles@buildhost:~/BPI$ sudo dd if=u-boot-build/u-boot-sunxi-with-spl.bin of=/dev/sdb bs=1024 seek=8

483+1 records in

483+1 records out

494900 bytes (495 kB, 483 KiB) copied, 0.0282234 s, 17.5 MB/s

noodles@buildhost:~/BPI$ cp -r debian-installer/ /media/noodles/BPI-UBOOT/

U-Boot SPL 2023.07.02 (Aug 08 2023 - 09:05:44 +0100)

DRAM: 512 MiB

Trying to boot from MMC1

U-Boot 2023.07.02 (Aug 08 2023 - 09:05:44 +0100) Allwinner Technology

CPU: Allwinner H3 (SUN8I 1680)

Model: Banana Pi BPI-M2-Zero

DRAM: 512 MiB

Core: 60 devices, 17 uclasses, devicetree: separate

WDT: Not starting watchdog@1c20ca0

MMC: mmc@1c0f000: 0, mmc@1c10000: 1

Loading Environment from FAT... Unable to read "uboot.env" from mmc0:1...

In: serial

Out: serial

Err: serial

Net: No ethernet found.

Hit any key to stop autoboot: 0

=> setenv dibase /debian-installer/armhf

=> fatload mmc 0:1 $ kernel_addr_r $ dibase /vmlinuz

5333504 bytes read in 225 ms (22.6 MiB/s)

=> setenv bootargs "console=ttyS0,115200n8"

=> fatload mmc 0:1 $ fdt_addr_r $ dibase /dtbs/sun8i-h2-plus-bananapi-m2-zero.dtb

25254 bytes read in 7 ms (3.4 MiB/s)

=> fdt addr $ fdt_addr_r 0x40000

Working FDT set to 43000000

=> fatload mmc 0:1 $ ramdisk_addr_r $ dibase /initrd.gz

31693887 bytes read in 1312 ms (23 MiB/s)

=> bootz $ kernel_addr_r $ ramdisk_addr_r :$ filesize $ fdt_addr_r

Kernel image @ 0x42000000 [ 0x000000 - 0x516200 ]

## Flattened Device Tree blob at 43000000

Booting using the fdt blob at 0x43000000

Working FDT set to 43000000

Loading Ramdisk to 481c6000, end 49fffc3f ... OK

Loading Device Tree to 48183000, end 481c5fff ... OK

Working FDT set to 48183000

Starting kernel ...

firmware-brcm80211 once installation completed allowed the built-in wifi to work fine.

After install you need to configure u-boot to boot without intervention. At the u-boot prompt (i.e. after hitting a key to stop autoboot):

=> setenv bootargs "console=ttyS0,115200n8 root=LABEL=BPI-ROOT ro"

=> setenv bootcmd 'ext4load mmc 0:2 $ fdt_addr_r /boot/sun8i-h2-plus-bananapi-m2-zero.dtb ; fdt addr $ fdt_addr_r 0x40000 ; ext4load mmc 0:2 $ kernel_addr_r /boot/vmlinuz ; ext4load mmc 0:2 $ ramdisk_addr_r /boot/initrd.img ; bootz $ kernel_addr_r $ ramdisk_addr_r :$ filesize $ fdt_addr_r '

=> saveenv

Saving Environment to FAT... OK

=> reset

e2label /dev/sdb2 BPI-ROOT to label / appropriately; otherwise I occasionally saw the SD card appear as mmc1 for Linux (I m guessing due to asynchronous boot order with the wifi). You should now find the device boots without intervention.

You can find all my photos on:

Also, don't forget to explore the rest of the Git LFS share content - there are very many great photos

by others this year as well!

You can find all my photos on:

Also, don't forget to explore the rest of the Git LFS share content - there are very many great photos

by others this year as well!

DebConf23, the 24th edition of the Debian

conference is taking place in Infopark at Kochi, Kerala, India.

Thanks to the hard work of its organizers, it will be, this year as well, an

interesting and fruitful event for attendees.

We would like to warmly welcome the sponsors of DebConf23, and

introduce them to you.

We have three Platinum sponsors.

DebConf23, the 24th edition of the Debian

conference is taking place in Infopark at Kochi, Kerala, India.

Thanks to the hard work of its organizers, it will be, this year as well, an

interesting and fruitful event for attendees.

We would like to warmly welcome the sponsors of DebConf23, and

introduce them to you.

We have three Platinum sponsors.

Autocrypt: addr=anarcat@torproject.org; prefer-encrypt=nopreference;

keydata=xsFNBEogKJ4BEADHRk8dXcT3VmnEZQQdiAaNw8pmnoRG2QkoAvv42q9Ua+DRVe/yAEUd03EOXbMJl++YKWpVuzSFr7IlZ+/lJHOCqDeSsBD6LKBSx/7uH2EOIDizGwfZNF3u7X+gVBMy2V7rTClDJM1eT9QuLMfMakpZkIe2PpGE4g5zbGZixn9er+wEmzk2mt20RImMeLK3jyd6vPb1/Ph9+bTEuEXi6/WDxJ6+b5peWydKOdY1tSbkWZgdi+Bup72DLUGZATE3+Ju5+rFXtb/1/po5dZirhaSRZjZA6sQhyFM/ZhIj92mUM8JJrhkeAC0iJejn4SW8ps2NoPm0kAfVu6apgVACaNmFb4nBAb2k1KWru+UMQnV+VxDVdxhpV628Tn9+8oDg6c+dO3RCCmw+nUUPjeGU0k19S6fNIbNPRlElS31QGL4H0IazZqnE+kw6ojn4Q44h8u7iOfpeanVumtp0lJs6dE2nRw0EdAlt535iQbxHIOy2x5m9IdJ6q1wWFFQDskG+ybN2Qy7SZMQtjjOqM+CmdeAnQGVwxowSDPbHfFpYeCEb+Wzya337Jy9yJwkfa+V7e7Lkv9/OysEsV4hJrOh8YXu9a4qBWZvZHnIO7zRbz7cqVBKmdrL2iGqpEUv/x5onjNQwpjSVX5S+ZRBZTzah0w186IpXVxsU8dSk0yeQskblrwARAQABzSlBbnRvaW5lIEJlYXVwcsOpIDxhbmFyY2F0QHRvcnByb2plY3Qub3JnPsLBlAQTAQgAPgIbAwULCQgHAwUVCgkICwUWAgMBAAIeAQIXgBYhBI3JAc5kFGwEitUPu3khUlJ7dZIeBQJihnFIBQkacFLiAAoJEHkhUlJ7dZIeXNAP/RsX+27l9K5uGspEaMH6jabAFTQVWD8Ch1om9YvrBgfYtq2k/m4WlkMh9IpT89Ahmlf0eq+V1Vph4wwXBS5McK0dzoFuHXJa1WHThNMaexgHhqJOs

S60bWyLH4QnGxNaOoQvuAXiCYV4amKl7hSuDVZEn/9etDgm/UhGn2KS3yg0XFsqI7V/3RopHiDT+k7+zpAKd3st2V74w6ht+EFp2Gj0sNTBoCdbmIkRhiLyH9S4B+0Z5dUCUEopGIKKOSbQwyD5jILXEi7VTZhN0CrwIcCuqNo7OXI6e8gJd8McymqK4JrVoCipJbLzyOLxZMxGz8Ki0b9O844/DTzwcYcg9I1qogCsGmZfgVze2XtGxY+9zwSpeCLeef6QOPQ0uxsEYSfVgS+onCesSRCgwAPmppPiva+UlGuIMun87gPpQpV2fqFg/V8zBxRvs6YTGcfcQjfMoBHmZTGb+jk1//QAgnXMO7fGG38YH7iQSSzkmodrH2s27ZKgUTHVxpBL85ptftuRqbR7MzIKXZsKdA88kjIKKXwMmez9L1VbJkM4k+1Kzc5KdVydwi+ujpNegF6ZU8KDNFiN9TbDOlRxK5R+AjwdS8ZOIa4nci77KbNF9OZuO3l/FZwiKp8IFJ1nK7uiKUjmCukL0od/6X2rJtAzJmO5Co93ZVrd5r48oqUvjklzzsBNBFmeC3oBCADEV28RKzbv3dEbOocOsJQWr1R0EHUcbS270CrQZfb9VCZWkFlQ/1ypqFFQSjmmUGbNX2CG5mivVsW6Vgm7gg8HEnVCqzL02BPY4OmylskYMFI5Bra2wRNNQBgjg39L9XU4866q3BQzJp3r0fLRVH8gHM54Jf0FVmTyHotR/Xiw5YavNy2qaQXesqqUv8HBIha0rFblbuYI/cFwOtJ47gu0QmgrU0ytDjlnmDNx4rfsNylwTIHS0Oc7Pezp7MzLmZxnTM9b5VMprAXnQr4rewXCOUKBSto+j4rD5/77DzXw96bbueNruaupb2Iy2OHXNGkB0vKFD3xHsXE2x75NBovtABEBAAHCwqwEGAEIACAWIQSNyQHOZBRsBIrVD7t5IVJSe3WSHgUCWZ4LegIbAgFACRB5IV

JSe3WSHsB0IAQZAQgAHRYhBHsWQgTQlnI7AZY1qz6h3d2yYdl7BQJZngt6AAoJED6h3d2yYdl7CowH/Rp7GHEoPZTSUK8Ss7crwRmuAIDGBbSPkZbGmm4bOTaNs/gealc2tsVYpoMx7aYgqUW+t+84XciKHT+bjRv8uBnHescKZgDaomDuDKc2JVyx6samGFYuYPcGFReRcdmH0FOoPCn7bMW5mTPztV/wIA80LZD9kPKIXanfUyI3HLP0BPwZG4WTpKzJaalR1BNwu2oF6kEK0ymH3LfDiJ5Sr6emI2jrm4gH+/19ux/x+ST4tvm2PmH3BSQOPzgiqDiFd7RZoAIhmwr3FW4epsK9LtSxsi9gZ2vATBKO1oKtb6olW/keQT6uQCjqPSGojwzGRT2thEANH+5t6Vh0oDPZhrKUXRAAxHMBNHEaoo/M0sjZo+5OF3Ig1rMnI6XbKskLv6hu13cCymW0w/5E4XuYnyQ1cNC3pLvqDQbDx5mAPfBVHuqxJdRLQ3yDM/D2QIsxnkzQwi0FsJuni4vuJzWK/NHHDCvxMCh0YmSgbptUtgW8/niatd2Y6MbfRGxUHoctKtzqzivC8hKMTFrj4AbZhg/e9QVCsh5zSXtpWP0qFDJsxRMx0/432n9d4XUiy4U672r9Q09SsynB3QN6nTaCTWCIxGxjIb+8kJrRqTGwy/PElHX6kF0vQUWZNf2ITV1sd6LK/s/7sH+x4rzgUEHrsKr/qPvY3rUY/dQLd+owXesY83ANOu6oMWhSJnPMksbNa4tIKKbjmw3CFIOfoYHOWf3FtnydHNXoXfj4nBX8oSnkfhLILTJgf6JDFXfw6mTsv/jMzIfDs7PO1LK2oMK0+prSvSoM8bP9dmVEGIurzsTGjhTOBcb0zgyCmYVD3S48vZlTgHszAes1zwaCyt3/tOwrzU5JsRJVns+B/TUYaR/u3oIDMDygvE5ObWxXaFVnCC59r+zl0FazZ0ouyk2AYIR

zHf+n1n98HCngRO4FRel2yzGDYO2rLPkXRm+NHCRvUA/i4zGkJs2AV0hsKK9/x8uMkBjHAdAheXhY+CsizGzsKjjfwvgqf84LwAzSDdZqLVE2yGTOwU0ESiArJwEQAJhtnC6pScWjzvvQ6rCTGAai6hrRiN6VLVVFLIMaMnlUp92EtgVSNpw6kANtRTpKXUB5fIPZVUrVdfEN06t96/6LE42tgifDAFyFTZY5FdHHri1GG/Cr39MpW2VqCDCtTTPVWHTUlU1ZG631BJ+9NB+ce58TmLr6wBTQrT+W367eRFBC54EsLNb7zQAspCn9pw1xf1XNHOGnrAQ4r9BXhOW5B8CzRd4nLRQwVgtw/c5M/bjemAOoq2WkwN+0mfJe4TSfHwFUozXuN274X+0Gr10fhp8xEDYuQM0qu6W3aDXMBBwIu0jTNudEELsTzhKUbqpsBc9WjwNMCZoCuSw/RTpFBV35mXbqQoQgbcU7uWZslLl9Wvv/C6rjXgd+GeX8SGBjTqq1ZkTv5UXLHTNQzPnbkNEExzqToi/QdSjFMIACnakeOSxc0ckfnsd9pfGv1PUyPyiwrHiqWFzBijzGIZEHxhNGFxAkXwTJR7Pd40a7RDxwbO6p/TSIIum41JtteehLHwTRDdQNMoyfLxuNLEtNYS0uR2jYI1EPQfCNWXCdT2ZK/l6GVP6jyB/olHBIOr+oVXqJh+48ki8cATPczhq3fUr7UivmguGwD67/4omZ4PCKtz1hNndnyYFS9QldEGo+AsB3AoUpVIA0XfQVkxD9IZr+Zu6aJ6nWq4M2bsoxABEBAAHCwXYEGAEIACACGwwWIQSNyQHOZBRsBIrVD7t5IVJSe3WSHgUCWPerZAAKCRB5IVJSe3WSHkIgEACTpxdn/FKrwH0/LDpZDTKWEWm4416l13RjhSt9CUhZ/Gm2GNfXcVTfoF/jKXXgjHcV1DHjfLUPmPVwMdqlf5ACOiFqIUM2ag/OEARh356w

YG7YEobMjX0CThKe6AV2118XNzRBw/S2IO1LWnL5qaGYPZONUa9Pj0OaErdKIk/V1wge8Zoav2fQPautBcRLW5VA33PH1ggoqKQ4ES1hc9HC6SYKzTCGixu97mu/vjOa8DYgM+33TosLyNy+bCzw62zJkMf89X0tTSdaJSj5Op0SrRvfgjbC2YpJOnXxHr9qaXFbBZQhLjemZi6zRzUNeJ6A3Nzs+gIc4H7s/bYBtcd4ugPEhDeCGffdS3TppH9PnvRXfoa5zj5bsKFgjqjWolCyAmEvd15tXz5yNXtvrpgDhjF5ozPiNp/1EeWX4DxbH2i17drVu4fXwauFZ6lcsAcJxnvCA28RlQlmEQu/gFOx1axVXf6GIuXnQSjQN6qJbByUYrdc/cFCxPO2/lGuUxnufN9Tvb51Qh54laPgGLrlD2huQeSD9Sxa0MNUjNY0qLqaReT99Ygb2LPYGSLoFVx9iZz6sZNt07LqCx9qNgsJwsdmwYsNpMuFbc7nkWjtlEqzsXZHTvYN654p43S+hcAhmmOzQZcew6h71fAJLciiqsPBnCEdgCGFAWhZZdPkMA==

Autocrypt: addr=anarcat@torproject.org; prefer-encrypt=nopreference;

keydata=xjMEZHZPzhYJKwYBBAHaRw8BAQdAWdVzOFRW6FYVpeVaDo3sC4aJ2kUW4ukdEZ36UJLAHd7NKUFudG9pbmUgQmVhdXByw6kgPGFuYXJjYXRAdG9ycHJvamVjdC5vcmc+wpUEExYIAD4WIQS7ts1MmNdOE1inUqYCKTpvpOU0cwUCZHZgvwIbAwUJAeEzgAULCQgHAwUVCgkICwUWAgMBAAIeAQIXgAAKCRACKTpvpOU0c47SAPdEqfeHtFDx9UPhElZf7nSM69KyvPWXMocu9Kcu/sw1AQD5QkPzK5oxierims6/KUkIKDHdt8UcNp234V+UdD/ZB844BGR2UM4SCisGAQQBl1UBBQEBB0CYZha2IMY54WFXMG4S9/Smef54Pgon99LJ/hJ885p0ZAMBCAfCdwQYFggAIBYhBLu2zUyY104TWKdSpgIpOm+k5TRzBQJkdlDOAhsMAAoJEAIpOm+k5TRzBg0A+IbcsZhLx6FRIqBJCdfYMo7qovEo+vX0HZsUPRlq4HkBAIctCzmH3WyfOD/aUTeOF3tY+tIGUxxjQLGsNQZeGrQI

sq autocrypt decode gpg --import

Reduce the size of your c: partition to the smallest it can be and then turn off windows with the understanding that you will never boot this system on the iron ever again.

Reduce the size of your c: partition to the smallest it can be and then turn off windows with the understanding that you will never boot this system on the iron ever again.dd if=/dev/sda of=/dev/disk/by-id/usb0 status=progressIf you confirm that dd is available on the netinst image and the previous command runs successfully, test that your windows partition is visible in the new disk s partition table. The start block of the windows partition on each should match, as should the partition size.

fdisk -l /dev/disk/by-id/usb0 fdisk -l /dev/sdaIf the output from the first is the same as the output from the second, then you are probably safe to proceed. Once you confirm that you have made and tested a full copy of the blocks from your windows drive saved on your usb disk, nuke your windows partition table from orbit.

dd if=/dev/zero of=/dev/sda bs=1M count=42You can press alt-f1 to return to the Debian installer now. Follow the instructions to install Debian. Don t forget to remove all attached USB drives. Once you install Debian, press ctrl-alt-f3 to get a root shell. Add your user to the sudoers group:

# adduser cjac sudoerslog out

# exitlog in as your user and confirm that you have sudo

$ sudo lsDon t forget to read the spider man advice enter your password you ll need to install virt-manager. I think this should help:

$ sudo apt-get install virt-manager libvirt-daemon-driver-qemu qemu-system-x86insert the USB drive. You can now create a qcow2 file for your virtual machine.

$ sudo qemu-img convert -O qcow2 \ /dev/disk/by-id/usb0 \ /var/lib/libvirt/images/windows.qcow2I personally create a volume group called /dev/vg00 for the stuff I want to run raw and instead of converting to qcow2 like all of the other users do, I instead write it to a new logical volume.

sudo lvcreate /dev/vg00 -n windows -L 42G # or however large your drive was sudo dd if=/dev/disk/by-id/usb0 of=/dev/vg00/windows status=progressNow that you ve got the qcow2 file created, press alt-left until you return to your GDM session. The apt-get install command above installed virt-manager, so log in to your system if you haven t already and open up gnome-terminal by pressing the windows key or moving your mouse/gesture to the top left of your screen. Type in gnome-terminal and either press enter or click/tap on the icon. I like to run this full screen so that I feel like I m in a space ship. If you like to feel like you re in a spaceship, too, press F11. You can start virt-manager from this shell or you can press the windows key and type in virt-manager and press enter. You ll want the shell to run commands such as virsh console windows or virsh list When virt-manager starts, right click on QEMU/KVM and select New.

Now that Debian 12 has been released with proprietary firmwares on the official media, non-optional merged-/usr and systemd adopted by everybody, I want to take a moment to list, not without some pride, a few things that I was right about over the last 20 years:

Accepting the obvious solution about firmwares took 18 years. My work on the merged-/usr transition started in 2014, and the first discussions about replacing sysvinit are from 2011. The general adoption of udev (and dynamic device names, and persistent network interface names...) took less time in comparison and no large-scale flame wars, since people could enable it at their own pace. But it required countless little debates in the Debian Bug Tracking System: I still remember the people insisting that they would never use this newfangled dynamic /dev/, or complaining about their beloved /dev/cdrom symbolic link and persistent network interface names.

So follow me for more rants about inevitable technologies.

Now that Debian 12 has been released with proprietary firmwares on the official media, non-optional merged-/usr and systemd adopted by everybody, I want to take a moment to list, not without some pride, a few things that I was right about over the last 20 years:

Accepting the obvious solution about firmwares took 18 years. My work on the merged-/usr transition started in 2014, and the first discussions about replacing sysvinit are from 2011. The general adoption of udev (and dynamic device names, and persistent network interface names...) took less time in comparison and no large-scale flame wars, since people could enable it at their own pace. But it required countless little debates in the Debian Bug Tracking System: I still remember the people insisting that they would never use this newfangled dynamic /dev/, or complaining about their beloved /dev/cdrom symbolic link and persistent network interface names.

So follow me for more rants about inevitable technologies.

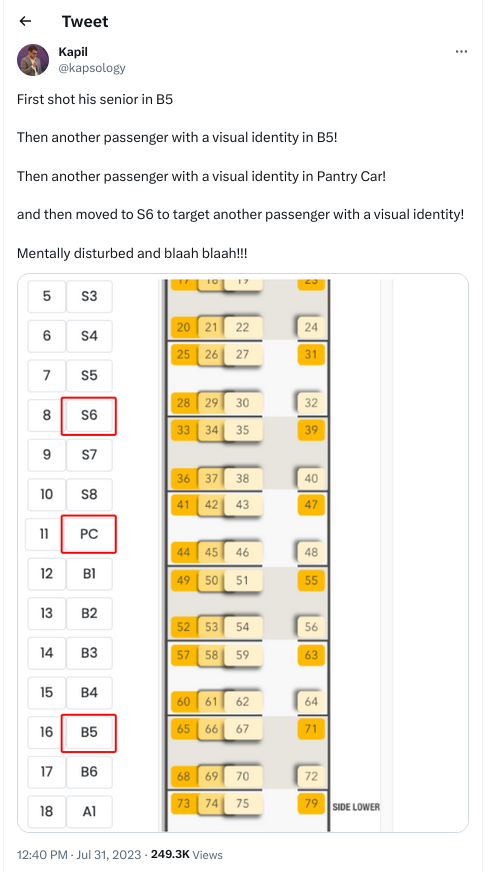

Just a few days back we came to know about the horrific Train Crash that happened in Odisha (Orissa). There are some things that are known and somethings that can be inferred by observance. Sadly, it seems the incident is going to be covered up

Just a few days back we came to know about the horrific Train Crash that happened in Odisha (Orissa). There are some things that are known and somethings that can be inferred by observance. Sadly, it seems the incident is going to be covered up  . Some of the facts that have not been contested in the public domain are that there were three lines. One loop line on which the Goods Train was standing and there was an up and a down line. So three lines were there. Apparently, the signalling system and the inter-locking system had issues as highlighted by an official about a month back. That letter, thankfully is in the public domain and I have downloaded it as well. It s a letter that goes to 4 pages. The RW is incensed that the letter got leaked and is in public domain. They are blaming everyone and espousing conspiracy theories rather than taking the minister to task. Incidentally, the Minister has three ministries that he currently holds. Ministry of Communication, Ministry of Electronics and Information Technology (MEIT), and Railways Ministry. Each Ministry in itself is important and has revenues of more than 6 lakh crore rupees. How he is able to do justice to all the three ministries is beyond me

. Some of the facts that have not been contested in the public domain are that there were three lines. One loop line on which the Goods Train was standing and there was an up and a down line. So three lines were there. Apparently, the signalling system and the inter-locking system had issues as highlighted by an official about a month back. That letter, thankfully is in the public domain and I have downloaded it as well. It s a letter that goes to 4 pages. The RW is incensed that the letter got leaked and is in public domain. They are blaming everyone and espousing conspiracy theories rather than taking the minister to task. Incidentally, the Minister has three ministries that he currently holds. Ministry of Communication, Ministry of Electronics and Information Technology (MEIT), and Railways Ministry. Each Ministry in itself is important and has revenues of more than 6 lakh crore rupees. How he is able to do justice to all the three ministries is beyond me  The other thing is funds both for safety and relaying of tracks has been either not sanctioned or unutilized. In fact, CAG and the Railway Brass had shared how derailments have increased and unfulfilled vacancies but they were given no importance

The other thing is funds both for safety and relaying of tracks has been either not sanctioned or unutilized. In fact, CAG and the Railway Brass had shared how derailments have increased and unfulfilled vacancies but they were given no importance  In fact, not talking about safety in the recently held Chintan Shivir (brainstorming session) tells you how much the Govt. is serious about safety. In fact, most of the programme was on high speed rail which is a white elephant. I have shared a whitepaper done by RW in the U.S. that tells how high-speed rail doesn t make economic sense. And that is an economy that is 20 times + the Indian Economy. Even the Chinese are stopping with HSR as it doesn t make economic sense.

Incidentally, Air Fares again went up 200% yesterday. Somebody shared in the region of 20k + for an Air ticket from their place to Bangalore

In fact, not talking about safety in the recently held Chintan Shivir (brainstorming session) tells you how much the Govt. is serious about safety. In fact, most of the programme was on high speed rail which is a white elephant. I have shared a whitepaper done by RW in the U.S. that tells how high-speed rail doesn t make economic sense. And that is an economy that is 20 times + the Indian Economy. Even the Chinese are stopping with HSR as it doesn t make economic sense.

Incidentally, Air Fares again went up 200% yesterday. Somebody shared in the region of 20k + for an Air ticket from their place to Bangalore  Coming back to the story itself. the Goods Train was on the loopline. Some say it was a little bit on the outer, some say otherwise, but it is established that it was on the loopline. This is standard behavior on and around Railway Stations around the world. Whether it was in the Inner or Outer doesn t make much of a difference with what happened next. The first train that collided with the goods train was the 12864 (SMVB-HWH) Yashwantpur Howrah Express and got derailed on to the next track where from the opposite direction 12841 (Shalimar- Bangalore) Coramandel Express was coming. Now they have said that around 300 people have died and that seems to be part of the cover-up. Both the trains are long trains, having between 23 odd coaches each. Even if you have reserved tickets you have 80 odd people in a coach and usually in most of these trains, it is at least double of that. Lot of money goes to TC and then above (Corruption). The Railway fares have gone up enormously but that s a question for perhaps another time

Coming back to the story itself. the Goods Train was on the loopline. Some say it was a little bit on the outer, some say otherwise, but it is established that it was on the loopline. This is standard behavior on and around Railway Stations around the world. Whether it was in the Inner or Outer doesn t make much of a difference with what happened next. The first train that collided with the goods train was the 12864 (SMVB-HWH) Yashwantpur Howrah Express and got derailed on to the next track where from the opposite direction 12841 (Shalimar- Bangalore) Coramandel Express was coming. Now they have said that around 300 people have died and that seems to be part of the cover-up. Both the trains are long trains, having between 23 odd coaches each. Even if you have reserved tickets you have 80 odd people in a coach and usually in most of these trains, it is at least double of that. Lot of money goes to TC and then above (Corruption). The Railway fares have gone up enormously but that s a question for perhaps another time  . So at the very least, we could be looking at more than 1000 people having died. The numbers are being under-reported so that nobody has to take responsibility. The Railways itself has told that it is unable to identify 80% of the people who have died. This means that 80% were unreserved ticket holders or a majority of them. There have been disturbing images as how bodies have been flung over on tractors and whatnot to be either buried or cremated without a thought. We are in peak summer season so bodies will start to rot within 24-48 hours

. So at the very least, we could be looking at more than 1000 people having died. The numbers are being under-reported so that nobody has to take responsibility. The Railways itself has told that it is unable to identify 80% of the people who have died. This means that 80% were unreserved ticket holders or a majority of them. There have been disturbing images as how bodies have been flung over on tractors and whatnot to be either buried or cremated without a thought. We are in peak summer season so bodies will start to rot within 24-48 hours  No arrangements made to cool the bodies and take some information and identifying marks or whatever. The whole thing being done in a very callous manner, not giving dignity to even those who have died for no fault of their own. The dissent note also tells that a cover-up is also in the picture. Apparently, India doesn t have nor does it feel to have a need for something like the NTSB that the U.S. used when it hauled both the plane manufacturer (Boeing) and the FAA when the 737 Max went down due to improper data collection and sharing of data with pilots. And with no accountability being fixed to Minister or any of the senior staff, a small junior staff person may be fired. Perhaps the same official that actually told them about the signal failures almost 3 months back

No arrangements made to cool the bodies and take some information and identifying marks or whatever. The whole thing being done in a very callous manner, not giving dignity to even those who have died for no fault of their own. The dissent note also tells that a cover-up is also in the picture. Apparently, India doesn t have nor does it feel to have a need for something like the NTSB that the U.S. used when it hauled both the plane manufacturer (Boeing) and the FAA when the 737 Max went down due to improper data collection and sharing of data with pilots. And with no accountability being fixed to Minister or any of the senior staff, a small junior staff person may be fired. Perhaps the same official that actually told them about the signal failures almost 3 months back  There were and are also some reports that some jugaadu /temporary fixes were applied to signalling and inter-locking just before this incident happened. I do not know nor confirm one way or the other if the above happened. I can however point out that if such a thing happened, then usually a traffic block is announced and all traffic on those lines are stopped. This has been the thing I know for decades. Traveling between Mumbai and Pune multiple times over the years am aware about traffic block. If some repair work was going on and it wasn t able to complete the work within the time-frame then that may well have contributed to the accident. There is also a bit muddying of the waters where it is being said that one of the trains was 4 hours late, which one is conflicting stories.

On top of the whole thing, they have put the case to be investigated by CBI and hinting at sabotage. They also tried to paint a religious structure as mosque, later turned out to be a temple. The RW says done by Muslims as it was Friday not taking into account as shared before that most Railway maintenance works are usually done between Friday Monday. This is a practice followed not just in India but world over.

There has been also move over a decade to remove wooden sleepers and have concrete sleepers. Unlike the wooden ones they do not expand and contract as much and their life is much more longer than the wooden ones. Funds had been marked (although lower than last few years) but not yet spent. As we know in case of any accident, it is when all the holes in cheese line up it happens. Fukushima is a great example of that, no sea wall even though Japan is no stranger to Tsunamis. External power at the same level as the plant. (10 meters above sea-level), no training for cascading failures scenarios which is what happened. The Days mini-series shares some but not all the faults that happened at Fukushima and the Govt. response to it. There is a difference though, the Japanese Prime Minister resigned on moral grounds. Here, nor the PM, nor the Minister would be resigning on moral grounds or otherwise :(. Zero accountability and that was partly a natural disaster, here it s man-made. In fact, both the Minister and the Prime Minister arrived with their entourages, did a PR blitzkrieg showing how concerned they are. Within 50 hours, the lines were cleared. The part-time Railway Minister shared that he knows the root cause and then few hours later has given the case to CBI. All are saying, wait for the inquiry report. To date, none of the accidents even in this Govt. has produced an investigation report. And even if it did, I am sure it will whitewash as it did in case of Adani as I had shared before in the previous blog post. Incidentally, it is reported that Adani paid off some of its debt, but when questioned as to where they got the money, complete silence on that part :(. As can be seen cover-up after cover-up

There were and are also some reports that some jugaadu /temporary fixes were applied to signalling and inter-locking just before this incident happened. I do not know nor confirm one way or the other if the above happened. I can however point out that if such a thing happened, then usually a traffic block is announced and all traffic on those lines are stopped. This has been the thing I know for decades. Traveling between Mumbai and Pune multiple times over the years am aware about traffic block. If some repair work was going on and it wasn t able to complete the work within the time-frame then that may well have contributed to the accident. There is also a bit muddying of the waters where it is being said that one of the trains was 4 hours late, which one is conflicting stories.

On top of the whole thing, they have put the case to be investigated by CBI and hinting at sabotage. They also tried to paint a religious structure as mosque, later turned out to be a temple. The RW says done by Muslims as it was Friday not taking into account as shared before that most Railway maintenance works are usually done between Friday Monday. This is a practice followed not just in India but world over.

There has been also move over a decade to remove wooden sleepers and have concrete sleepers. Unlike the wooden ones they do not expand and contract as much and their life is much more longer than the wooden ones. Funds had been marked (although lower than last few years) but not yet spent. As we know in case of any accident, it is when all the holes in cheese line up it happens. Fukushima is a great example of that, no sea wall even though Japan is no stranger to Tsunamis. External power at the same level as the plant. (10 meters above sea-level), no training for cascading failures scenarios which is what happened. The Days mini-series shares some but not all the faults that happened at Fukushima and the Govt. response to it. There is a difference though, the Japanese Prime Minister resigned on moral grounds. Here, nor the PM, nor the Minister would be resigning on moral grounds or otherwise :(. Zero accountability and that was partly a natural disaster, here it s man-made. In fact, both the Minister and the Prime Minister arrived with their entourages, did a PR blitzkrieg showing how concerned they are. Within 50 hours, the lines were cleared. The part-time Railway Minister shared that he knows the root cause and then few hours later has given the case to CBI. All are saying, wait for the inquiry report. To date, none of the accidents even in this Govt. has produced an investigation report. And even if it did, I am sure it will whitewash as it did in case of Adani as I had shared before in the previous blog post. Incidentally, it is reported that Adani paid off some of its debt, but when questioned as to where they got the money, complete silence on that part :(. As can be seen cover-up after cover-up  FWIW, the Coramandel Express is known as the Migrant train so has a huge number of passengers, the other one which was collided with is known as sick train as huge number of cancer patients use it to travel to Chennai and come back

FWIW, the Coramandel Express is known as the Migrant train so has a huge number of passengers, the other one which was collided with is known as sick train as huge number of cancer patients use it to travel to Chennai and come back

The main takeaway is if you do not have their voice, you won t make policies for them. They won t go away but you will make life hell for them. One thing to keep in mind that most people assume that most people are disabled from birth. This may or may not be true. For e.g. in the above triple Railways accidents, there are bound to be disabled people or newly disabled people who were healthy before the accident. The most common accident is road accidents, some involving pedestrians and vehicles or both, the easiest is Ministry of Road Transport data that says 4,00,000 people sustained injuries in 2021 alone in road mishaps. And this is in a country where even accidents are highly under-reported, for more than one reason. The biggest reason especially in 2 and 4 wheeler is the increased premium they would have to pay if in an accident, so they usually compromise with the other and pay off the Traffic Inspector.

Sadly, I haven t read a new book, although there are a few books I m looking forward to have. People living in India and neighbors please be careful as more heat waves are expected. Till later.

The main takeaway is if you do not have their voice, you won t make policies for them. They won t go away but you will make life hell for them. One thing to keep in mind that most people assume that most people are disabled from birth. This may or may not be true. For e.g. in the above triple Railways accidents, there are bound to be disabled people or newly disabled people who were healthy before the accident. The most common accident is road accidents, some involving pedestrians and vehicles or both, the easiest is Ministry of Road Transport data that says 4,00,000 people sustained injuries in 2021 alone in road mishaps. And this is in a country where even accidents are highly under-reported, for more than one reason. The biggest reason especially in 2 and 4 wheeler is the increased premium they would have to pay if in an accident, so they usually compromise with the other and pay off the Traffic Inspector.

Sadly, I haven t read a new book, although there are a few books I m looking forward to have. People living in India and neighbors please be careful as more heat waves are expected. Till later.

Next.