Day trip on the Olympic Peninsula

TL;DR: drove many kilometres on very nice roads, took lots of pictures, saw sunshine and fog and clouds, an angry ocean and a calm one, a quiet lake and lots and lots of trees: a very well-spent day. Pictures at http://photos.k1024.org/Daytrips/Olympic-Peninsula-2014/.

Sometimes I travel to the US on business, and as such I've been to the Seattle area a few times. Until this summer, when I had my last trip there, I was content to spend any extra days (weekends or such) just visiting Seattle itself, or shopping (I can spend hours in the REI store!), or working on my laptop in the hotel.

This summer though, I thought - I should do something a bit different. Not too much, but still - no sense in wasting both days of the weekend. So I thought of maybe driving to Mount Rainier, or something like that.

On the Wednesday of my first week in Kirkland, as I was preparing my drive to the mountain, I made the mistake of scrolling the map westwards, and I saw the Olympic Peninsula for the first time; furthermore, I was zoomed in enough to see that there was a small road right up to the north-west corner. Intrigued, I zoomed in further and learned about Cape Flattery (the northwesternmost point of the contiguous United States!), so after spending a bit of time reading about it, I was determined to go there.

Easier said than done - from Kirkland, it's a 4h 40m drive (according to Google Maps), so it would be a full day on the road. I was thinking of maybe spending the night somewhere on the peninsula, in order to actually explore the area a bit, but Wednesday to Saturday was too short notice - all the hotels that seemed OK-ish were fully booked. I spent some time trying to find something, even places not directly on my way, but I failed to find any room.

What I did manage to do, though, was learn a bit about the area, and realise that there's a nice loop around the whole peninsula: the 104 from Kirkland up to where it meets the 101N on the eastern side, then the 101 all the way to Port Angeles, Lake Crescent, near Lake Pleasant, then south toward Forks, crossing the Hoh River, down to Ruby Beach, down along the coast, crossing the Queets River, east toward Lake Quinault, south toward Aberdeen, then east towards Olympia and back out of the wilderness, into the highway network and back to Kirkland. This looked like an awesome road trip, but it is as long as it sounds - around 8 hours of (continuous) driving, and that's even skipping Cape Flattery. Well, I said to myself, something to keep in mind for a future trip to this area, with a night in between. I was still planning to go just to Cape Flattery and back, without realising at that point that even this trip would actually take longer than the estimate (as you drive on smaller, lower-speed roads).

Preparing my route, I read about the queues at the Edmonds-Kingston ferry, so I was planning to wake up early on the weekend, go to Cape Flattery, and go right back (maybe stopping by Lake Crescent).

Saturday comes, I - of course - sleep longer than my trip schedule said, and start the day in somewhat cloudy weather, driving north from my hotel on Simonds Road, which was quite a bit nicer than the usual East-West or North-South roads in this area. The weather was getting nicer; however, as I neared the ferry terminal and the traffic grew denser, I started suspecting that I'd spend quite a bit of time waiting to board the ferry.

And unfortunately so it was (photo altered to hide some personal information):

The weather at least was nice, so I tried to enjoy it and simply observe the crowd - people were looking forward to a relaxing weekend, so nobody seemed annoyed by the wait. After almost half an hour, it was time to get on the ferry - my first time on a ferry in the US, yay! But it was pretty much the same as in Europe, except that the ship was much larger.

Once I had secured the car, I went up on deck, and was very surprised to be treated to some excellent views:

The crossing was not very short, but it seemed so, because of the view, the sun, the water and the wind. Soon we were nearing the other shore; also, see how well panorama software deals with waves :P!

And I was finally on the "real" part of the trip.

The road was quite interesting: I took the 104 North, crossed the "Hood Canal Floating Bridge" (my, what a boring name), then finally joined the 101 North. The environment was quite varied, from bare plains and hills, to wooded areas, to quite dense forests, then into inhabited areas - quite a long stretch of human presence, from Sequim Bay to Port Angeles.

Port Angeles surprised me: it had nice views of the ocean and an interesting port (a few big ships), but it was much smaller than I expected. The 101 crosses it, and in 10 minutes or so it was already over. Based on the name, I expected something nicer, but... Anyway, onwards!

Soon I was at a crossroads and had to decide: I could either follow the 101, crossing the Elwha River and continuing to Lake Crescent, then go north on the 113/112, or turn right off the 101 onto the 112 and follow it until close to my goal. I took the 112, because on the map it looked "nicer", and closer to the shore.

Well, the road itself was nice, but quite narrow and twisty here and there, and there was some annoying traffic, so I didn't enjoy this segment very much. At least it had the very interesting property (to me) that whenever I got closer to the ocean, the sun suddenly disappeared, and I found myself in the fog:

So my plan to drive nicely along the coast failed. At one point, there was even heavy smoke (not fog!), and I wondered for a moment how safe it was to drive out there in the wilderness (there were other cars, though, so I was not alone).

Only quite a bit later, close to Neah Bay, did I finally see the ocean: I saw a small parking spot, stopped, and after crossing a thin line of trees I found myself in a small cove? bay? In any case, I had the impression that I had stepped out of daily city life and into the far, far wilderness:

There was a couple sitting on chairs, just enjoying the view. I felt very much like an intruder, behaving as tourists do: running in, taking pictures, etc., so I at least tried to be quiet. I then quickly moved on, since I still had some road ahead of me.

Soon I entered Neah Bay, and was surprised to see blue once more, and even more blue. I'm a sucker for blue, whether sky blue or sea blue, so I took a few more pictures (watch out for the evil fog in the second one):

Well, the town had some event, and there were lots of people, so I just drove on, now on the last stretch towards the cape. The road here was also very interesting, yet another environment - I was driving on Cape Flattery Road, which cuts across the tip of the peninsula (quite narrow here) along the Waatch River and through its floodplains (at least this is how it looked to me). Then it finally starts climbing through the dense forest until it reaches the parking lot, and from there, one goes on foot towards the cape. It's a very easy and nice walk (not a hike), and the sun was shining very nicely through the trees:

But as I reached the highest point of the walk and started descending towards the coast, I was surprised, yet again, by fog:

I realised that this probably meant the cape was fully in fog, so I wouldn't have any chance to enjoy the view.

Boy, was I wrong! There are three viewpoints on the cape, and at each one I just went "wow" and "aah" at the view. Even though it was not a sunny summer view, and there was no blue in sight, the combination of the fog (which was hiding the horizon and even the closer islands), the angry ocean throwing wave after wave at the shore with a loud noise, and the fact that even this seemingly inhospitable area was just teeming with life, was both unexpected and awesome. I took waay too many pictures here; here are just a couple inlined:

I spent around half an hour here, just enjoying the rawness of nature. It was so amazing to see life encroaching on each bit of land, even though it was not what I would consider a nice place. Ah, how we see everything through our own eyes!

The walk back was through fog again, until at one point it switched back to sunshine. Driving back on the same road was quite different, knowing what lay at its end. On this side, the road had some parking spots, so I managed to stop and take a picture - even though this area was much less wild, it still had that outdoors flavour, at least for me:

Back in Neah Bay, I stopped to eat. I had a place in mind from TripAdvisor, and indeed - I was able to get a custom-order pizza at "Linda's Woodfired Kitchen". It was quite good, and I ate without hurrying, watching the people walking outside as they were coming back from the fair or event that was taking place.

While eating, a somewhat disturbing thought was going through my mind. It was still early, around two to half past two, so if I went straight back to Kirkland I would be back at the hotel early. But it was also early enough that I could - in theory at least - still do the "big round-trip". I was still ruminating on the thought as I left.

On the drive back I once more passed near Sekiu, Washington, which is a very small place, but the map tells me it even has an airport! Fun, and the view was quite nice (a bit of blue before the sea is swallowed by the fog):

After passing Sekiu and Clallam Bay, the 112 curves inland and goes on a bit until you reach a crossroads: to the left the 112 continues, back the way I came; to the right, it's the 113, going south until it meets the 101. I looked left, remembering the not-so-nice road back; I looked south, where a very appealing early-afternoon sun was beckoning; so I said, let's take the long way home!

It's just a short stretch on the 113, and then you're on the 101. The 101 is a very nice road, wide enough, and it goes through very, very nice areas. Here, west to south-west of the Olympic Mountains, the atmosphere is very different from the 112/101 that I drove on in the morning: much warmer colours, slightly different types of trees (I think), and flatter terrain. I soon passed through Forks, which was one of the places I had looked at when searching for hotels. I did so without any knowledge of the town itself (its Wikipedia page is quite drab), so imagine my surprise when, a month later, I learned from a colleague that it is actually a very important place for vampire-book fans. Oh my, and I didn't even stop! This town also had some event, so I just drove on, enjoying the (mostly empty) road.

My next planned waypoint was Ruby Beach, and I was looking forward to relaxing a bit under the warm sun - the drive was excellent, the weather perfect, so I was watching the distance countdown on my Garmin. At two miles out, the "Near waypoint Ruby Beach" message appeared, and two seconds later the sun went out. What the...? I was hoping this was something temporary, but as I slowly drove the remaining distance I couldn't believe my eyes: I was, yet again, in the fog.

I parked the car, thinking that asking for a refund would at least make me feel better - but it was I who had planned the trip! So I resigned myself, thinking that possibly this beach was another special location that is always in the fog. However, as I got near the beach it was clear that it was not so - some people were still in their bathing suits, just getting dressed, so it seems I was simply unlucky with the timing. Still, the beach itself was nice, even in the fog (I later saw sunny pictures online, and it is quite beautiful), with the lush trees reaching almost to the shore and the rocks sitting on the beach:

Since the weather was not that nice, I took only a few more pictures, then headed back and started driving again. I was soo happy that the weather didn't clear at the 2-mile mark (it was not just Ruby Beach!), but alas - it cleared as soon as the 101 turns left and leaves the shore, crossing the Queets River. The drive towards my next planned stop was again a nice one in the afternoon sun, so I think it simply was not a sunny day on the Pacific shore. Maybe seas and oceans have something to do with fog and clouds! In Switzerland, I'm very happy when I see fog, since it's a somewhat rare event (and seeing mountains disappear into the fog is nice, since it gives the impression of a wider space). After this day, though, I was a bit fed up with fog for a while.

Along the 101 one reaches Lake Quinault, which seemed pretty nice on the map, and, driving a bit along the lake, a local symbol: the "World's largest spruce tree". I don't know what a spruce tree is, but I like trees, so I was planning to go there, weather allowing. And the weather did cooperate, except that the tree was not as imposing as I thought! In any case, I was glad to stretch my legs a bit:

However, the most interesting thing here in Quinault was not this tree, but rather the quiet little town and the view of the lake in the late-afternoon sun:

The entire town was very, very quiet, and the sun shining down on the lake gave an even stronger sense of tranquillity. No wind, only a few noises that told of human presence, and an overall sense of peace. It was quite the opposite of Cape Flattery, and a very nice way to end the trip.

Well, almost the end - I still had a bit of driving ahead: from Quinault back onto the 101, down to Aberdeen, then east towards Olympia, and back onto the highways.

As for Aberdeen and Olympia, I just drove through, so I couldn't form any impression of them. The old harbour and the rusted things in Aberdeen were a bit interesting, but it was getting late, so I didn't stop.

And since the day shouldn't end without any surprises: during the last profile change between walking and driving in Quinault, my GPS decided to reset its active maps list, and I ended up with all maps activated. This usually is not a problem, at least if you follow a pre-calculated route, but I did trigger a recalculation as I started driving again, so the Montana kept trying to decide which map to route me on, the Garmin North America map or the OpenStreetMap one, and the result was that it never understood which road I was on. It kept saying "Drive to I5", even though I was on I5. Anyway, thanks to road signs, and no thanks to "just this evening ramp closures", I was able to arrive safely at my hotel.

Overall, a very successful, if long, trip: around 725 kilometres, 10h:30m moving, 13h:30m total:

There were many individual good parts, but my overall takeaway from this road trip was that I was able to experience lots of different environments of the peninsula on the same day, and that overall it's a very, very nice area.

The downside was that I was in a rush, without being able to actually stop and enjoy the locations I visited. And there's still so much to see! A two-night trip sounds just about right, with some long hikes in the rain forest, and afternoons spent on a lake somewhere.

Another less-than-optimal part was that I only had my "travel" camera (a Nikon 1 series camera, with a small sensor), which was a bit overwhelmed by the situation here and there. Fortunately the light was more or less good, but looking back at the pictures, how I wish I had had my "serious" DSLR!

So, that means I have two reasons to go back! Not too soon though, since Mount Rainier is also a good location to visit.

If the pictures haven't bored you yet, the entire gallery is on my SmugMug site. In any case, thanks for reading!

It didn't drop packets at random, though. Some traffic would be deterministically dropped, such as the parallel A/AAAA DNS lookups generated by the glibc DNS stub resolver. For instance, in the following

It seemed unlikely that Arris would fix the firmware issues in the SB6190 before the end of my 30-day return window, so I returned the SB6190 and purchased a Netgear CM600. This modem appears to be based on the Broadcom BCM3384, and looking at an older release of the CM600 firmware source reveals that the device runs the open source eCos embedded operating system.

The Netgear CM600 so far hasn't exhibited any of the issues I found with the

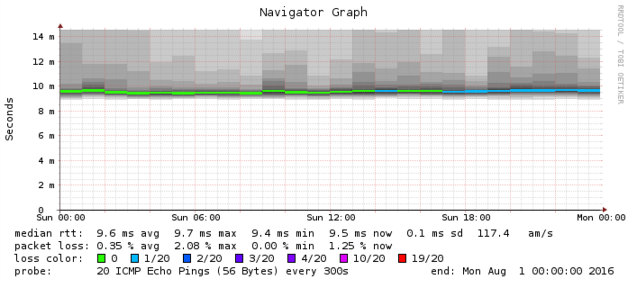

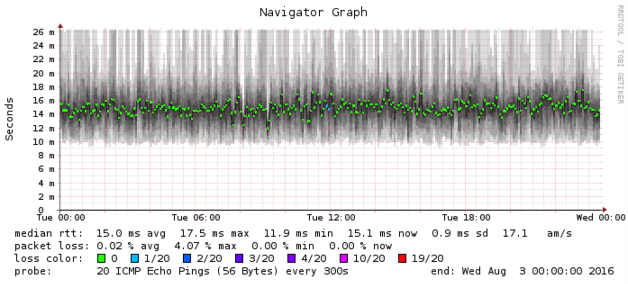

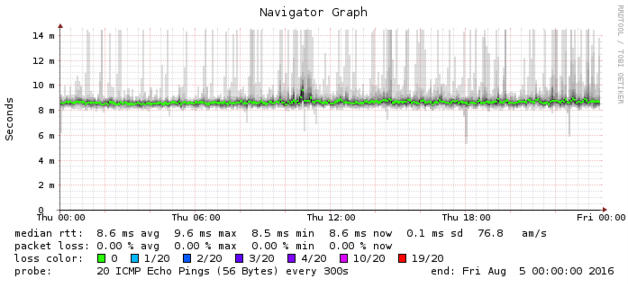

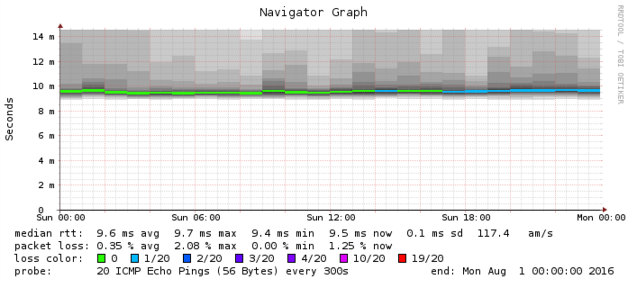

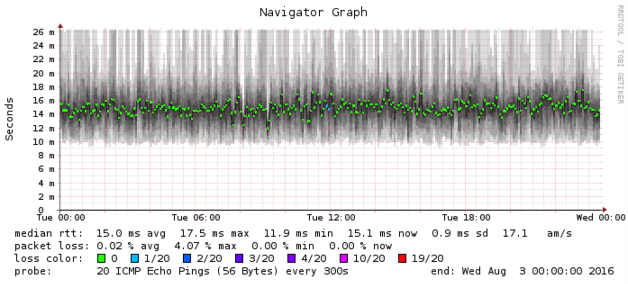

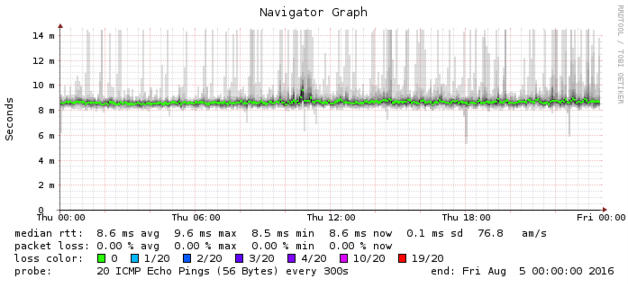

Arris SB6190 modem. Here is a SmokePing graph for the CM600, which shows median

latency about 1 ms lower than the Arris modem:

It's not clear which company is to blame for the problems in the Arris modem. Looking at the DOCSIS drivers in the SB6190 firmware source reveals copyright statements from ARRIS Group, Texas Instruments, and Intel. However, I would recommend avoiding cable modems produced by Arris in particular, and cable modems based on the Intel Puma SoC in general.

I've lately been working on support for

What happened in the

Portability toward other systems has been improved: old versions of GNU diff are now supported (Mike McQuaid), suggestion of the appropriate locale is now the more generic

I feel a sense of pride when I think that I was involved in the development and maintenance of what was probably the first piece of software accepted into Debian which then had and still has direct up-stream support from Microsoft. The world is a better place for having Microsoft in it. The first operating system I ever ran on an

The Debian Python Modules Team is