There is intense interest in communications privacy at the moment thanks to the Snowden scandal. Open source software has offered credible solutions for privacy and encryption for many years. Sadly, making these solutions work together is not always plug-and-play.

In fact, secure networking, like VoIP, has been plagued by problems with interoperability and firewall/filtering issues, although the solutions are now starting to become apparent. Here I will look at some of them, such as the use of firewall zones to simplify management or the use of ECDSA certificates to avoid UDP fragmentation problems. I've drawn together a lot of essential tips from different documents and mailing list discussions to demonstrate how to solve a real-world networking problem.

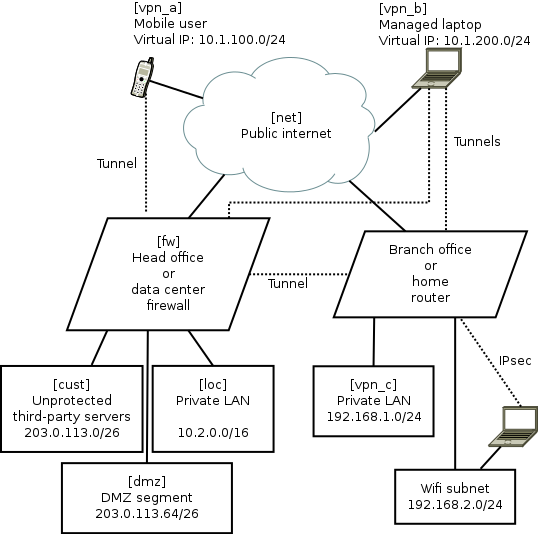

A typical network scenario and requirements

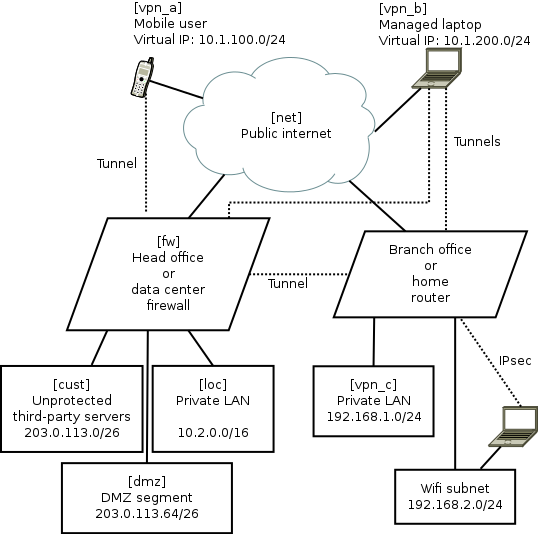

Here is a diagram of the network that will be used to help us examine the capabilities of these open source solutions.

Some comments about the diagram:

- The names in square brackets are the zones for Shorewall; they are explained later.

- The dotted lines are IPsec tunnels over the untrusted Internet.

- The road-warrior users (mobiles and laptops) get virtual IP addresses from the VPN gateway. The branch offices/home routers do not use virtual IPs.

- The mobile phones are largely untrusted: easily lost or stolen, many apps have malware, they can only tunnel to the central VPN and only access a very limited range of services.

- The laptops are managed devices so they are trusted with access to more services. For efficiency they can connect directly to branch office/home VPNs as well as the central server.

- Smart-phone user browsing habits are systematically monitored by mobile companies with insidious links into mass-media and advertising. Road-warriors sometimes plug in at client sites or hotels where IT staff may monitor their browsing. Therefore, all these users want to tunnel all their browsing through the VPN.

- The central VPN gateway/firewall is running strongSwan VPN and Shorewall firewall on Linux. It could be Debian, Fedora or Ubuntu. Other open source platforms such as OpenBSD are also very well respected for building firewall and VPN solutions, but Shorewall, which is one of the key ingredients in this recipe, only works on Linux at present.

- The branch office/home network could be another strongSwan/Shorewall server or it could also be an OpenWRT router.

- The default configuration for most wifi routers creates a bridge joining wifi users with wired LAN users. Not here: the wifi has been deliberately configured as an independent network. Road-warriors who attach to the wifi must use VPN tunnelling to access the local wired network. In OpenWRT, it is relatively easy to make the wifi network an independent subnet. This is an essential security precaution because wifi passwords should not be considered secure: they are often transmitted to third parties, for example, by the cloud backup service in many newer smart phones.

Package mayhem

The major components are packaged on all the major Linux distributions. Nonetheless, in every case, I found that it is necessary to re-compile fresh strongSwan packages from sources. It is not so hard to do but it is necessary and worth the effort. Here are related blog entries where I provide the details about how to re-build fresh versions of the official packages with all necessary features enabled:

Using X.509 certificates as a standard feature of the VPN

For convenience, many people building a point-to-point VPN start with passwords (sometimes referred to as pre-shared keys (PSK)) as a security mechanism. As the VPN grows, passwords become unmanageable.

In this solution, we only look at how to build a VPN secured by X.509 certificates. The certificate concept is not hard.

In this scenario, there are a few things that make it particularly easy to work with certificates:

- strongSwan comes with a convenient command line tool, ipsec pki. Many of its functions are equivalent to things you can do with OpenSSL or GnuTLS. However, the ipsec pki syntax is much more lightweight and simply provides a convenient way to do the things you need to do when maintaining a VPN.

- Many of the routine activities involved in certificate maintenance can be scripted. My recent blog about using Android clients with strongSwan gives a sample script demonstrating how ipsec pki commands can be used to prepare a PKCS#12 (.p12) file that can be easily loaded into an Android device using the SD-card.

- For building a VPN, there is no need to use a public certificate authority such as Verisign. Consequently, there is no need to fill in all their forms or make any payments for each device/certificate. Some larger organisations do choose to outsource their certificate management to such firms. For smaller organisations, an effective and sometimes better solution can be achieved by maintaining the process in-house with a private root CA.

UDP fragmentation during IPsec IKEv2 key exchange and ECDSA

A common problem for IPsec VPNs using X.509 certificates is the fragmentation of key exchange datagrams during session setup. Sometimes it works, sometimes it doesn't. Various workarounds exist, such as keeping copies of all certificates from potential peers on every host. As the network grows, this becomes inconvenient to maintain and to some extent it eliminates the benefits of using PKI.

Fortunately, there is a solution: Elliptic Curve Cryptography (ECC). Many people currently use RSA key-pairs. Best practice suggests using RSA keys of at least 2048 bits and often 4096 bits. Using ECC with a smaller 384-bit key is considered to be equivalent to a 7680 bit RSA key pair. Consequently, ECDSA certificates are much smaller than RSA certificates. Furthermore, at these key sizes, the key exchange packets are almost always smaller than the typical 1500 byte MTU.
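To put rough numbers on the size difference, here is a back-of-the-envelope sketch in Python. It only counts the raw public key and one signature, which are the parts of a certificate that scale with key size. It assumes the CA signs with the same key type as the certificate it issues, and it ignores the ASN.1 wrapping, names, validity dates and extensions, so real certificates are somewhat larger than these figures.

```python
def rsa_sizes(modulus_bits):
    """Raw byte sizes of an RSA modulus and of one RSA signature."""
    n = modulus_bits // 8
    # The small public exponent (typically 3 bytes) is ignored here.
    return {"public_key": n, "signature": n}


def ecdsa_sizes(curve_bits):
    """Raw byte sizes for an ECDSA key on a prime curve of the given size."""
    coord = (curve_bits + 7) // 8
    return {
        "public_key": 1 + 2 * coord,  # 0x04 || X || Y (uncompressed point)
        "signature": 2 * coord,       # r || s, before DER encoding
    }


def key_dependent_bytes(sizes):
    """One public key plus one CA signature, as carried in a certificate."""
    return sizes["public_key"] + sizes["signature"]


if __name__ == "__main__":
    for label, sizes in [
        ("RSA 2048", rsa_sizes(2048)),
        ("RSA 4096", rsa_sizes(4096)),
        ("ECDSA P-384", ecdsa_sizes(384)),
    ]:
        print(f"{label:12s} {key_dependent_bytes(sizes):5d} bytes")
```

On these assumptions the key-dependent part of a 4096-bit RSA certificate is about 1 KB, while the P-384 equivalent is under 200 bytes. An IKEv2 authentication message also carries its own signature and often more than one certificate, which is why RSA setups are the ones that tend to cross the 1500 byte MTU.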

A further demand for ECDSA is arising due to the use of ECC within smart cards. Many smart cards don't support any RSA key larger than 2048 bits. The highly secure 384-bit ECC key is implemented in quite a few common smart cards. Smart card vendors have shown a preference for ECC keys, both because of the US Government's preference for ECC and because the lower computational overheads make them more suitable for constrained execution environments. Anyone who wants to use smart cards as part of their VPN or general IT security now, or in the future, needs to consider ECC/ECDSA.

Making the network simple with Shorewall zones

For this example, we are not just taking some easy point-to-point example. We have a real-world, multi-site, multi-device network with road warriors. Simplifying this architecture is important to help us understand and secure it. The solution? Each class of network or device is abstracted to a "zone" in Shorewall. In the diagram above, the zone names are in square brackets. The purpose of each zone is described below:

| Zone name | Description |
|---|---|
| loc | This is the private LAN and contains servers like databases, private source code repositories and NFS file servers |
| dmz | This is the DMZ and contains web servers that are accessible from the public internet. Some of these servers talk to databases or message queues in the LAN network loc |
| vpn_a | These are road warriors that are not very trustworthy, such as mobile devices. They are occasionally stolen and usually full of spyware (referred to by users as "apps"). They have limited access to ports on some DMZ servers, e.g. for sending and receiving mail using SMTP and IMAP (those ports are not exposed to the public Internet at large). They use the VPN tunnel for general internet access/browsing, to avoid surveillance by their mobile carrier. |
| vpn_b | These are managed laptops that have a low probability of malware infection. They may well be using smart cards for access control. Consequently, they are more trusted than the vpn_a users and have access to some extra intranet pages and file servers. Like the smart-phone users, they use the VPN tunnel for general internet access/browsing, to avoid surveillance by third-party wifi hotspot operators. |
| vpn_c | This firewall zone represents remote sites with managed hardware, such as branch offices or home networks with IPsec routers running OpenWRT. |
| cust | These are servers hosted for third-parties or collaborative testing/development purposes. They have their own firewall arrangements if necessary. |
| net | This zone represents traffic from the public Internet. |

A practical Shorewall configuration

Shorewall is chosen to manage the iptables and ip6tables firewall rules. Shorewall provides a level of abstraction that makes netfilter much more manageable than manual iptables scripting. The Shorewall concept of zones is very similar to the zones implemented in OpenWRT and this is an extremely useful paradigm for firewall management.

Practical configuration of Shorewall is very well explained in the Shorewall quick start. The one thing that is not immediately obvious is a strategy for planning the contents of the /etc/shorewall/policy and /etc/shorewall/rules files. The exact details for making it work effectively with a modern IPsec VPN are not explained in a single document, so I've gathered those details below as well.

An effective way to plan the Shorewall zone configuration is with a table like this:

| Source zone (rows) / Destination zone (columns) | loc | dmz | vpn_a | vpn_b | vpn_c | cust | net |
|---|---|---|---|---|---|---|---|
| loc | \ | | | | | | |
| dmz | ? | \ | X | X | X | | |
| vpn_a | ? | ? | \ | X | X | | |
| vpn_b | ? | ? | X | \ | X | | |
| vpn_c | X | | | | \ | | |
| cust | X | ? | X | X | X | \ | |
| net | X | ? | X | X | X | | \ |

The symbols in the table are defined as follows:

| Symbol | Meaning |
|---|---|
| (blank) | ACCEPT in policy file |
| X | REJECT or DROP in policy file |
| ? | REJECT or DROP in policy file, but ACCEPT some specific ports in the rules file |
| \ | Source and destination are the same zone |

Naturally, this modelling technique is valid for both IPv4 and IPv6 firewalling (with Shorewall6).

Looking at the table in two dimensions, it is easy to spot patterns. Each pattern can be condensed into a single entry in the policy or rules file. For example, it is clear from the first row that the loc zone can access all other zones. That can be expressed very concisely with a single line in the policy file:

    loc all ACCEPT
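The same condensing step can be done mechanically. The following Python sketch is only an illustration: the `MATRIX` encoding and the `policy_lines` helper are my own invention, not part of Shorewall. It transcribes the planning table, using `A` for the blank (ACCEPT) cells and `-` for the diagonal, and prints candidate policy lines.

```python
ZONES = ["loc", "dmz", "vpn_a", "vpn_b", "vpn_c", "cust", "net"]

# One row per source zone, one cell per destination zone (same order as ZONES).
# "A" = blank cell (ACCEPT), "X" = REJECT, "?" = REJECT plus specific rules,
# "-" = source and destination are the same zone.
MATRIX = {
    "loc":   "- A A A A A A",
    "dmz":   "? - X X X A A",
    "vpn_a": "? ? - X X A A",
    "vpn_b": "? ? X - X A A",
    "vpn_c": "X A A A - A A",
    "cust":  "X ? X X X - A",
    "net":   "X ? X X X A -",
}


def policy_lines(zones, matrix):
    """Turn the planning table into policy entries, most specific first."""
    lines = []
    for src in zones:
        cells = dict(zip(zones, matrix[src].split()))
        accepted = [dst for dst in zones if cells[dst] == "A"]
        others = [dst for dst in zones if dst != src]
        if accepted == others:
            # The whole row is ACCEPT, so one "all" entry is enough.
            lines.append(f"{src} all ACCEPT")
        else:
            lines.extend(f"{src} {dst} ACCEPT" for dst in accepted)
    # Everything else ("X" and "?" cells) falls through to the catch-all.
    lines.append("all all REJECT")
    return lines


if __name__ == "__main__":
    print("\n".join(policy_lines(ZONES, MATRIX)))
```

Because it follows the table cell by cell, the output is longer than a hand-written policy file is likely to be. The `?` cells still need matching ACCEPT entries in the rules file; the sketch only covers the policy side.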

Specific Shorewall tips for use with IPsec VPNs and strongSwan

Shorewall has several web pages dedicated to VPNs, including the IPsec specific documentation. Personally, I found that I had to gather a few details from several of these pages to make an optimal solution. Here are those tips:

- Ignore everything about the /etc/shorewall/tunnels file. It is not needed and not used any more.

- Name the VPN zones (we call them vpn_a, vpn_b and vpn_c) in the zones file but there is no need to put them in the /etc/shorewall/interfaces file.

- The /etc/shorewall/hosts file is not just for hosts and can be used to specify network ranges, such as those associated with the VPN virtual IP addresses. The ranges you put in this file should match the rightsourceip pool assignments in strongSwan's /etc/ipsec.conf.

- One of the examples suggests using mss=1400 in the /etc/shorewall/zones file. I found that is too big and leads to packets being dropped in some situations. To start with, try a small value such as 1024 and only try larger values once you have proved that everything works. Setting mss for IPsec appears to be essential.

- Do not use the routefilter feature in the /etc/shorewall/interfaces file as it is not compatible with IPsec.

Otherwise, just follow the typical examples from the Shorewall quick start guide and configure it to work the way you want.

Here is an example /etc/shorewall/zones file:

    fw      firewall
    net     ipv4
    dmz     ipv4
    loc     ipv4
    cust    ipv4
    vpn_a   ipsec   mode=tunnel mss=1024
    vpn_b   ipsec   mode=tunnel mss=1024
    vpn_c   ipsec   mode=tunnel mss=1024

Here is an example /etc/shorewall/hosts file describing the VPN ranges from the diagram:

    vpn_a   eth0:10.1.100.0/24      ipsec
    vpn_b   eth0:10.1.200.0/24      ipsec
    vpn_c   eth0:192.168.1.0/24     ipsec
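Since the ranges in the hosts file need to agree with what strongSwan hands out, it can be worth checking them with a few lines of Python. This is a minimal sketch using only the standard `ipaddress` module. The two dictionaries are typed in by hand from the example files in this article, and the helper name `pools_outside_zone` is hypothetical. Nothing here parses the real configuration files.

```python
import ipaddress

# zone -> range listed in /etc/shorewall/hosts (IPv4 only in this sketch)
SHOREWALL_HOSTS = {
    "vpn_a": "10.1.100.0/24",
    "vpn_b": "10.1.200.0/24",
    "vpn_c": "192.168.1.0/24",
}

# zone -> IPv4 ranges from the gateway's ipsec.conf
# (rightsourceip for road warriors, rightsubnet for the branch office)
STRONGSWAN_RANGES = {
    "vpn_a": ["10.1.100.0/24"],
    "vpn_b": ["10.1.200.0/24"],
    "vpn_c": ["192.168.1.0/24"],
}


def pools_outside_zone(zone_ranges, vpn_ranges):
    """Return (zone, range) pairs that are not covered by the zone's hosts entry."""
    problems = []
    for zone, ranges in vpn_ranges.items():
        zone_net = ipaddress.ip_network(zone_ranges[zone])
        for text in ranges:
            net = ipaddress.ip_network(text)
            # subnet_of() only compares networks of the same IP version.
            if net.version != zone_net.version or not net.subnet_of(zone_net):
                problems.append((zone, text))
    return problems


if __name__ == "__main__":
    for zone, text in pools_outside_zone(SHOREWALL_HOSTS, STRONGSWAN_RANGES):
        print(f"{text} is not inside the {zone} range in the hosts file")
```

With the values from this article the check prints nothing. The IPv6 pools would be compared against the Shorewall6 hosts file in the same way.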

Here is an example /etc/shorewall/policy file based on the table above:

    loc     all     ACCEPT
    vpn_c   all     ACCEPT
    cust    net     ACCEPT
    net     cust    ACCEPT
    all     all     REJECT

Here is an example /etc/shorewall/rules file based on the network:

    SECTION ALL

    # allow connections to the firewall itself to start VPNs:
    # Rule  source              dest            protocol/port details
    ACCEPT  all                 fw              ah
    ACCEPT  all                 fw              esp
    ACCEPT  all                 fw              udp     500
    ACCEPT  all                 fw              udp     4500

    # allow access to HTTP servers in DMZ:
    ACCEPT  all                 dmz             tcp     80

    # allow connections from HTTP servers to MySQL database in private LAN:
    ACCEPT  dmz                 loc:10.2.0.43   tcp     3306

    # allow connections from all VPN users to IMAPS server in private LAN:
    ACCEPT  vpn_a,vpn_b,vpn_c   loc:10.2.0.58   tcp     993

    # allow VPN users (but not the smartphones in vpn_a) to the
    # PostgreSQL database for PostBooks accounting system:
    ACCEPT  vpn_b,vpn_c         loc:10.2.0.48   tcp     5432

    SECTION ESTABLISHED
    ACCEPT  all                 all

    SECTION RELATED
    ACCEPT  all                 all

Once the files are created, Shorewall can be easily activated with:

    # shorewall compile && shorewall restart

strongSwan IPsec VPN setup

Like Shorewall, strongSwan is also very well documented and I'm just going to focus on those specific areas that are relevant to this type of VPN project.

- Allowing the road-warriors to send all browsing traffic over the VPN means including leftsubnet=0.0.0.0/0 in the VPN server's /etc/ipsec.conf file. Be wary though: sometimes the road-warriors start sending the DHCP renewal over the tunnel instead of to their local DHCP server.

- As we are using Shorewall zones for firewalling, you must set the options leftfirewall=no and lefthostaccess=no in ipsec.conf. Shorewall already knows about the remote networks as they are defined in the /etc/shorewall/hosts file and so firewall rules don't need to be manipulated each time a tunnel goes up or down.

- As discussed above, X.509 certificates are used for peer authentication. In the certificate Distinguished Name (DN), store the zone name in the Organizational Unit (OU) component, for example, OU=vpn_c, CN=gw.branch1.example.org

- In the ipsec.conf file, match the users to connections using wildcard specifications such as rightid="OU=vpn_a, CN=*"

- Put a subjectAltName with hostname in every certificate. The --san option to the ipsec pki commands adds the subjectAltName.

- Keep the certificate distinguished names (DN) short: this makes the certificate smaller and reduces the risk of fragmented authentication packets. Many examples show a long and verbose DN such as C=GB, O=Acme World Wide Widget Corporation, OU=Engineering, CN=laptop1.eng.acme.example.org. On a private VPN, it is rarely necessary to have all that in the DN; just include OU for the zone name and CN for the host name.

- As discussed above, use the ECDSA scheme for keypairs (not RSA) to ensure that the key exchange datagrams don't suffer from fragmentation problems. For example, generate a keypair with the command ipsec pki --gen --type ecdsa --size 384 > user1Key.der

- Road warriors should have leftid=%fromcert in their ipsec.conf file. This forces them to use the Distinguished Name and not the subjectAltName (SAN) to identify themselves.

- For road warriors who require virtual tunnel IPs, configure them to request both IPv4 and IPv6 addresses (dual stack) with leftsourceip=%config4,%config6 and on the VPN gateway, configure the ranges as arguments to rightsourceip.

- To ensure that road-warriors query the LAN DNS, add the DNS settings to strongswan.conf and make sure road warriors are using a more recent strongSwan version that can dynamically update /etc/resolv.conf. Protecting DNS traffic is important for privacy reasons. It also makes sure that the road-warriors can discover servers that are not advertised in public DNS records.
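Several of these tips (short DN, zone name in the OU, a subjectAltName, ECDSA keys) come together when a certificate is issued. As a sketch of how that could be scripted, the Python helper below only builds the command lines and prints them; it does not run anything. The CA file names `caCert.der` and `caKey.der`, the output file naming and the helper names are assumptions for illustration. Check the options against the help output of your own ipsec pki version before relying on them.

```python
import shlex


def zone_dn(zone, hostname):
    """Short DN: OU carries the Shorewall zone name, CN the host name."""
    return f"OU={zone}, CN={hostname}"


def pki_commands(zone, hostname, ca_cert="caCert.der", ca_key="caKey.der"):
    """Return shell command lines that create an ECDSA key and a certificate."""
    key_file = f"{hostname}Key.der"
    cert_file = f"{hostname}Cert.der"
    gen = ["ipsec", "pki", "--gen", "--type", "ecdsa", "--size", "384"]
    pub = ["ipsec", "pki", "--pub", "--in", key_file, "--type", "ecdsa"]
    issue = [
        "ipsec", "pki", "--issue",
        "--cacert", ca_cert, "--cakey", ca_key,
        "--dn", zone_dn(zone, hostname),
        "--san", hostname,
    ]
    return [
        # shlex.join() quotes the DN, which contains a space and a comma.
        f"{shlex.join(gen)} > {shlex.quote(key_file)}",
        f"{shlex.join(pub)} | {shlex.join(issue)} > {shlex.quote(cert_file)}",
    ]


if __name__ == "__main__":
    for line in pki_commands("vpn_c", "gw.branch1.example.org"):
        print(line)
```

Printing the commands rather than executing them makes it easy to review them, or paste them into a shell on the machine that holds the CA key.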

Central firewall/VPN gateway configuration

Although they are not present in the diagram, IPv6 networks are also configured in these strongSwan examples. It is very easy to combine IPv4 and IPv6 into a single /etc/ipsec.conf file. As long as the road-warriors have leftsourceip=%config4,%config6 in their own configurations, they will operate dual-stack IPv4/IPv6 whenever they connect to the VPN.

Here is an example /etc/ipsec.conf for the central VPN gateway:

    config setup
        charonstart=yes
        charondebug=all
        plutostart=no

    conn %default
        ikelifetime=60m
        keylife=20m
        rekeymargin=3m
        keyingtries=1
        keyexchange=ikev2

    conn branch1
        left=198.51.100.1
        leftsubnet=203.0.113.0/24,10.0.0.0/8,2001:DB8:12:80::/64
        leftcert=fw1Cert.der
        leftid=@fw1.example.org
        leftfirewall=no
        lefthostaccess=no
        right=%any
        rightid=@branch1-fw.example.org
        rightsubnet=192.168.1.0/24
        auto=add

    conn rw_vpn_a
        left=198.51.100.1
        leftsubnet=0.0.0.0/0,::0/0
        leftcert=fw1Cert.der
        leftid=@fw1.example.org
        leftfirewall=no
        lefthostaccess=no
        right=%any
        rightid="OU=vpn_a, CN=*"
        rightsourceip=10.1.100.0/24,2001:DB8:1000:100::/64
        auto=add

    conn rw_vpn_b
        left=198.51.100.1
        leftsubnet=0.0.0.0/0,::0/0
        leftcert=fw1Cert.der
        leftid=@fw1.example.org
        leftfirewall=no
        lefthostaccess=no
        right=%any
        rightid="OU=vpn_b, CN=*"
        rightsourceip=10.1.200.0/24,2001:DB8:1000:200::/64
        auto=add
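To see why the wildcard rightid values depend on the short DN convention, here is a deliberately simplified model in Python. It is not strongSwan's matching code. It assumes that components must line up one-to-one and in order, with `*` standing for any value, and it splits naively on commas. The connection names come from the configuration above; the device DNs are made up.

```python
CONNS = {
    "rw_vpn_a": "OU=vpn_a, CN=*",
    "rw_vpn_b": "OU=vpn_b, CN=*",
}


def parse_dn(dn):
    """Split 'OU=vpn_a, CN=phone1' into [('OU', 'vpn_a'), ('CN', 'phone1')]."""
    parts = []
    for rdn in dn.split(","):  # naive: breaks if a value contains a comma
        key, _, value = rdn.strip().partition("=")
        parts.append((key.strip().upper(), value.strip()))
    return parts


def dn_matches(pattern, dn):
    """True if every component matches in order; '*' matches any value."""
    want, have = parse_dn(pattern), parse_dn(dn)
    if len(want) != len(have):
        return False
    return all(
        wk == hk and (wv == "*" or wv == hv)
        for (wk, wv), (hk, hv) in zip(want, have)
    )


def select_conn(dn, conns):
    """Return the first connection whose rightid pattern matches the DN."""
    for name, pattern in conns.items():
        if dn_matches(pattern, dn):
            return name
    return None


if __name__ == "__main__":
    for dn in ["OU=vpn_a, CN=phone1.example.org",
               "OU=vpn_b, CN=laptop1.example.org",
               "C=GB, O=Acme, OU=vpn_a, CN=phone2.example.org"]:
        print(dn, "->", select_conn(dn, CONNS))
```

In this model the verbose DN in the last line selects no connection at all, which is one more reason to keep the DN down to OU and CN.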

Sample branch office or home router VPN configuration

Here is an example /etc/ipsec.conf for the Linux server or OpenWRT VPN at the branch office or home:

    conn head_office
        left=%defaultroute
        leftid=@branch1-fw.example.org
        leftcert=branch1-fwCert.der
        leftsubnet=192.168.1.0/24,2001:DB8:12:80::/64
        leftfirewall=no
        lefthostaccess=no
        right=fw1.example.org
        rightid=@fw1.example.org
        rightsubnet=203.0.113.0/24,10.0.0.0/8,2001:DB8:1000::/52
        auto=start

    # notice we only allow vpn_b users, not vpn_a
    # these users are given virtual IPs from our own
    # 192.168.1.0 subnet
    conn rw_vpn_b
        left=branch1-fw.example.org
        leftsubnet=192.168.1.0/24,2001:DB8:12:80::/64
        leftcert=branch1-fwCert.der
        leftid=@branch1-fw.example.org
        leftfirewall=no
        lefthostaccess=no
        right=%any
        rightid="OU=vpn_b, CN=*"
        rightsourceip=192.168.1.160/27,2001:DB8:12:80::8000/116
        auto=add

Further topics

Shorewall and Shorewall6 don't currently support a unified configuration. This can make it slightly tedious to duplicate rules between the two IP variations. However, the syntax for IPv4 and IPv6 configuration is virtually identical.

Shorewall only currently supports Linux netfilter rules. In theory it could be extended to support other types of firewall API, such as pf used by OpenBSD and the related BSD family of systems.

A more advanced architecture would split the single firewall into multiple firewall hosts, like the inner and outer walls of a large castle. The VPN gateway would also become a standalone host in the DMZ. This would require more complex routing table entries.

Smart cards with PINs provide an effective form of two-factor authentication that can protect certificates for remote users. Smart cards are documented well by strongSwan already so I haven't repeated any of that material in this article.

Managing a private X.509 certificate authority in practice may require slightly more effort than I've described, especially when an organisation grows. Small networks and home users don't need to worry about these details too much, but for most deployments it is necessary to consider things like certificate revocation lists and special schemes to protect the root certificate's private key. EJBCA is one open source project that might help.

Some users may want to consider ways to prevent the road-warriors from accidentally browsing the Internet when the IPsec tunnel is not active. Such policies could be implemented with some basic iptables firewall rules on the road-warrior devices.

Summary

Using these strategies and configuration tips, planning and building a VPN will hopefully be much simpler. Please feel free to ask questions on the mailing lists for any of the projects discussed in this blog.

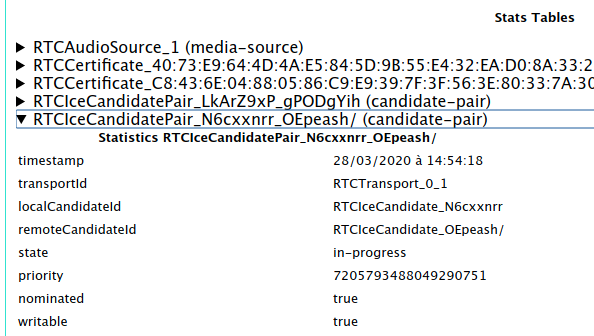

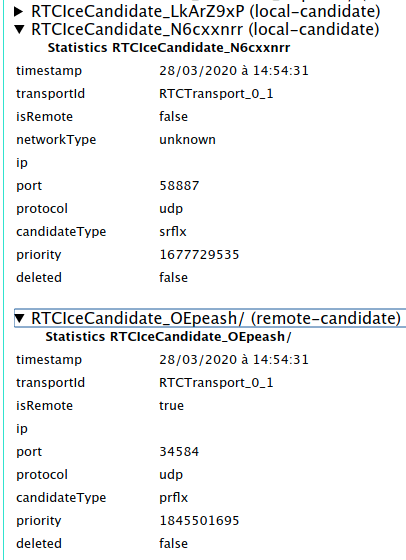

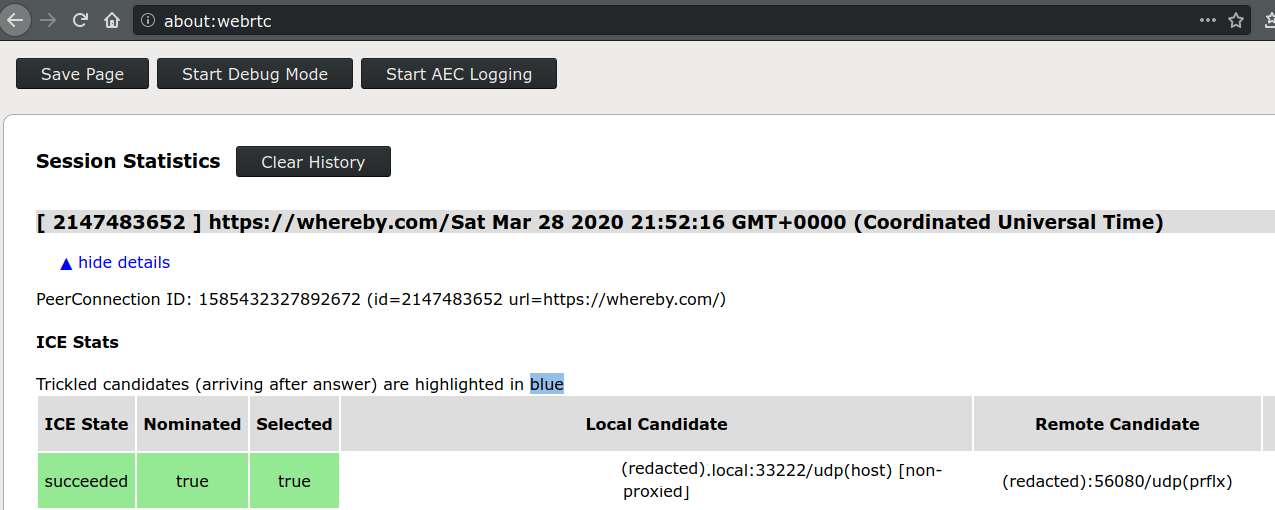
