Reproducible Builds: Reproducible Builds in June 2023

Welcome to the June 2023 report from the Reproducible Builds project.

In our reports, we outline the most important things that we have been up to over the past month. As always, if you are interested in contributing to the project, please visit our Contribute page on our website.

We are very happy to announce the upcoming Reproducible Builds Summit, which is set to take place from October 31st to November 2nd 2023 in the vibrant city of Hamburg, Germany.

Our summits are a unique gathering that brings together attendees from diverse projects, united by a shared vision of advancing the Reproducible Builds effort. During this enriching event, participants will have the opportunity to engage in discussions, establish connections and exchange ideas to drive progress in this vital field. Our aim is to create an inclusive space that fosters collaboration, innovation and problem-solving. We are thrilled to host the seventh edition of this exciting event, following the success of previous summits in various iconic locations around the world, including Venice, Marrakesh, Paris, Berlin and Athens.

If you're interested in joining us this year, please make sure to read the event page, which has more details about the event and location. (You may also be interested in attending PackagingCon 2023, held a few days before in Berlin.)

This month, Vagrant Cascadian will present at FOSSY 2023 on the topic of Breaking the Chains of Trusting Trust:

"Corrupted build environments can deliver compromised cryptographically signed binaries. Several exploits in critical supply chains have been demonstrated in recent years, proving that this is not just theoretical. The most well secured build environments are still single points of failure when they fail. […] This talk will focus on the state of the art from several angles in related Free and Open Source Software projects, what works, current challenges and future plans for building trustworthy toolchains you do not need to trust."

Hosted by the Software Freedom Conservancy and taking place in Portland, Oregon, FOSSY aims to be a community-focused event: "Whether you are a long time contributing member of a free software project, a recent graduate of a coding bootcamp or university, or just have an interest in the possibilities that free and open source software bring, FOSSY will have something for you". More information on the event is available on the FOSSY 2023 website, including the full programme schedule.

Marcel Fourné, Dominik Wermke, William Enck, Sascha Fahl and Yasemin Acar recently published an academic paper in the 44th IEEE Symposium on Security and Privacy titled "It's like flossing your teeth: On the Importance and Challenges of Reproducible Builds for Software Supply Chain Security". The abstract reads as follows:

"The 2020 Solarwinds attack was a tipping point that caused a heightened awareness about the security of the software supply chain and in particular the large amount of trust placed in build systems. Reproducible Builds (R-Bs) provide a strong foundation to build defenses for arbitrary attacks against build systems by ensuring that given the same source code, build environment, and build instructions, bitwise-identical artifacts are created."

However, in contrast to other papers that touch on some theoretical aspect of reproducible builds, the authors' paper takes a different approach. Starting with the observation that "much of the software industry believes R-Bs are too far out of reach for most projects", and conjoining that with a goal "to help identify a path for R-Bs to become a commonplace property", the paper has a different methodology:

"We conducted a series of 24 semi-structured expert interviews with participants from the Reproducible-Builds.org project, and iterated on our questions with the reproducible builds community. We identified a range of motivations that can encourage open source developers to strive for R-Bs, including indicators of quality, security benefits, and more efficient caching of artifacts. We identify experiences that help and hinder adoption, which heavily include communication with upstream projects. We conclude with recommendations on how to better integrate R-Bs with the efforts of the open source and free software community."

A PDF of the paper is now available, as is an entry on the CISPA Helmholtz Center for Information Security website and an entry under the TeamUSEC Human-Centered Security research group.
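The property described in the abstract (same source, environment and instructions yielding bitwise-identical artifacts) can be checked mechanically by comparing cryptographic digests of two independently built files. The following is only a minimal sketch of that idea, not a tool used by the project; the function names `sha256_of` and `is_reproducible` are made up for illustration.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_reproducible(first: Path, second: Path) -> bool:
    """True if two independently built artifacts are bitwise identical.

    Hypothetical helper: equal SHA-256 digests are treated as identity.
    """
    return sha256_of(first) == sha256_of(second)
```

A digest comparison only says whether two artifacts differ; a tool such as diffoscope is what helps explain why.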

On our mailing list this month:

- Martin Monperrus asked whether there are any "project[s] where reproducibility is checked in a continuous integration pipeline?", which received a number of replies from various projects.

- Vagrant Cascadian mentioned that PackagingCon 2023 is being held in Berlin the weekend before the Reproducible Builds Summit later this year. In particular, Vagrant noticed that the Call for Proposals (CFP) closes at the end of July.

- Larry Doolittle was searching Usenet archives and discovered a thread from December 1999 titled "Time independent checksum(cksum)" on comp.unix.programming. Larry notes that it starts with Jayan asking about comparing binaries that might have differences in their embedded timestamps (that is, perhaps, "Foreshadowing diffoscope, amiright?") and goes on to observe that:

  "The antagonist is David Schwartz, who correctly says 'There are dozens of complex reasons why what seems to be the same sequence of operations might produce different end results,' but goes on to say 'I totally disagree with your general viewpoint that compilers must provide for reproducability [sic].' Dwight Tovey and I (Larry Doolittle) argue for reproducible builds. I assert 'Any program, especially a mission-critical program like a compiler, that cannot reproduce a result at will is broken.' Also 'it's commonplace to take a binary from the net, and check to see if it was trojaned by attempting to recreate it from source.'"

Lastly, there were a few changes to our website this month too, including Bernhard M. Wiedemann adding a simplified Rust example to our documentation about the SOURCE_DATE_EPOCH environment variable […], Chris Lamb made it easier to parse our summit announcement at a glance […], Mattia Rizzolo added the summit announcement at a glance […] itself […][…][…] and Rahul Bajaj added a taxonomy of variations in build environments […].
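For readers unfamiliar with SOURCE_DATE_EPOCH: it carries a Unix timestamp that build tools are expected to use in place of the current time whenever they embed a date in their output. The sketch below shows the usual pattern in Python rather than Rust, and is not the example that was added to the website; the function name `build_timestamp` is an assumption made for illustration.

```python
import os
import time
from datetime import datetime, timezone


def build_timestamp() -> datetime:
    """Return the timestamp to embed in build output.

    Uses SOURCE_DATE_EPOCH (seconds since the Unix epoch) when it is set,
    and falls back to the current time otherwise.
    """
    epoch = int(os.environ.get("SOURCE_DATE_EPOCH", time.time()))
    return datetime.fromtimestamp(epoch, tz=timezone.utc)
```

Formatting the result in UTC, rather than in the local timezone, avoids reintroducing a variation between build machines.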

Distribution work

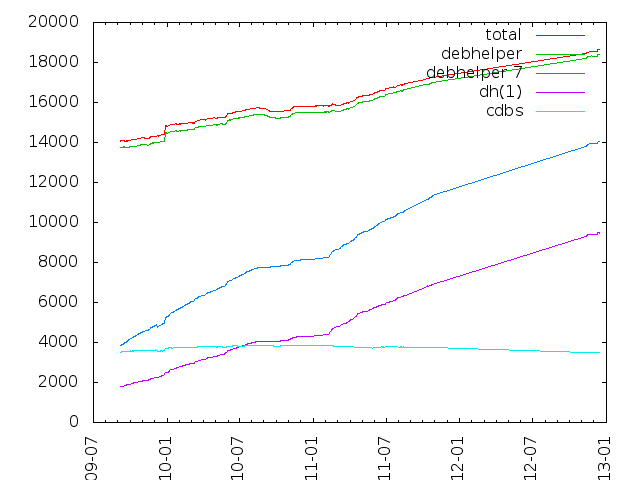

27 reviews of Debian packages were added, 40 were updated and 8 were removed this month, adding to our knowledge about identified issues. A new randomness_in_documentation_generated_by_mkdocs toolchain issue was added by Chris Lamb […], and the deterministic flag on the paths_vary_due_to_usrmerge issue was updated, as we are not currently testing usrmerge issues […].

Roland Clobus posted his 18th update of the status of reproducible Debian ISO images on our mailing list. Roland reported that "all major desktops build reproducibly with bullseye, bookworm, trixie and sid", but he also mentioned, amongst many changes, that not only are the non-free images being built (and are reproducible) but that the live images are generated officially by Debian itself. […]

Jan-Benedict Glaw noticed a problem when building NetBSD for the VAX architecture. Noting that "Reproducible builds [are] probably not as reproducible as we thought", Jan-Benedict goes on to describe that two builds from different source directories won't produce the same result, and adds various notes about sub-optimal handling of the CFLAGS environment variable. […]

F-Droid added 21 new reproducible apps in June, resulting in a new record of 145 reproducible apps in total […]. (This page now sports missing data for March to May 2023.) F-Droid contributors also reported an issue with broken resources in APKs making some builds unreproducible. […]

Bernhard M. Wiedemann published another monthly report about reproducibility within openSUSE.

Upstream patches

- Bernhard M. Wiedemann:

  - bcachefs (sort find / filesys)
  - build-compare (reports files as identical)
  - build-time (toolchain date)
  - cockpit (merged, gzip mtime)
  - gcc13 (gcc13 toolchain LTO parallelism)
  - ghc-rpm-macros (toolchain parallelism)
  - golangcli-lint (date)
  - gutenprint (date+time)
  - mage (date (golang))
  - mumble (filesys)
  - pcr (date)
  - python-nss (drop sphinx .doctrees)
  - python310 (merged, bisected+backported)
  - warpinator (merged, date)
  - xroachng (date)

- Chris Lamb:

  - #1037159 filed against elinks.
  - #1037205 filed against multipath-tools.
  - #1037216 filed against mkdocstrings-python-handlers.
  - #1038730 filed against fribidi.
  - #1038957 filed against jtreg7.
  - #1039932 filed against python-bitstring (forwarded upstream).

- Vagrant Cascadian:

  - #1037235 filed against gradle-kotlin-dsl.
  - #1037240 filed against libsdl-console.
  - #1037267 filed against kawari8.
  - #1037269 filed against freetds.
  - #1037272 filed against gbrowse.
  - #1037276 filed against bglibs.
  - #1037277 filed against advi.
  - #1037278 filed against afterstep.
  - #1037280 filed against simstring.
  - #1037296 filed against manderlbot.
  - #1037298 filed against erlang-proper.
  - #1037300 filed against comedilib.
  - #1037309 filed against libint.
  - #1037427 filed against newlib.
  - #1038908 filed against binutils-msp430.
  - #1038970 & #1038971 filed against c-munipack.
  - #1039044 filed against python-marshmallow-sqlalchemy.
  - #1039457 filed against mplayer.
  - #1039493 & #1039500 filed against menu.
  - #1039506 filed against mini-buildd.
  - #1039610 filed against pnetcdf.
  - #1039617 & #1039618 filed against liblopsub.
  - #1039619 filed against wcc.
  - #1039623 filed against shotcut.
  - #1039624 filed against icu.
  - #1039741 filed against libapache-poi-java.
  - #1039808 filed against atf.
  - #1039865 filed against valgrind.
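Several of the patch notes above name recurring causes of unreproducibility, such as filesystem ordering ("sort find / filesys") and timestamps in compressed output ("gzip mtime"). As a rough illustration of what fixes of this kind tend to look like, and not a reproduction of any of the patches listed, the sketch below sorts a directory walk and writes gzip data with a fixed header timestamp. The helper names `sorted_files` and `gzip_deterministic` are hypothetical.

```python
import gzip
import io
import os


def sorted_files(root: str) -> list[str]:
    """List files under root in a stable order, independent of the filesystem."""
    found = []
    for directory, subdirs, files in os.walk(root):
        subdirs.sort()  # sorting in place fixes the order of the traversal
        for name in sorted(files):
            found.append(os.path.join(directory, name))
    return found


def gzip_deterministic(data: bytes) -> bytes:
    """Compress data with the gzip header mtime field fixed at zero."""
    buffer = io.BytesIO()
    with gzip.GzipFile(fileobj=buffer, mode="wb", mtime=0) as stream:
        stream.write(data)
    return buffer.getvalue()
```

Without `mtime=0`, `gzip.GzipFile` records the current time in the header, so two otherwise identical builds would produce different bytes.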

Testing framework

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In June, a number of changes were made by Holger Levsen, including:

- Additions to a (relatively) new Documented Jenkins Maintenance (djm) script to automatically shrink a cache & save a backup of old data […], automatically split out previous months' data from logfiles into specially-named files […], prevent concurrent remote logfile fetches by using a lock file […] and to add/remove various debugging statements […].

- Updates to the automated system health checks to, for example, correctly detect new kernel warnings due to a wording change […] and to explicitly observe which old/unused kernels should be removed […]. This was related to an improvement so that various kernel issues on Ubuntu-based nodes are automatically fixed. […]
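The lock-file technique mentioned in the first item above is a common way to stop two copies of a job from running at once. The sketch below illustrates the general idea in Python on a Unix-like system; it is not the djm script's implementation, which is not shown in this report, and the name `exclusive_lock` and the lock path in the usage note are assumptions.

```python
import fcntl
from contextlib import contextmanager


@contextmanager
def exclusive_lock(path: str):
    """Hold an exclusive, non-blocking lock on path for the duration of the block.

    Raises BlockingIOError if the lock is already held elsewhere.
    """
    with open(path, "w") as lock_file:
        # LOCK_NB makes the call fail immediately instead of waiting.
        fcntl.flock(lock_file, fcntl.LOCK_EX | fcntl.LOCK_NB)
        try:
            yield
        finally:
            fcntl.flock(lock_file, fcntl.LOCK_UN)
```

A job would wrap its work in `with exclusive_lock("/tmp/fetch-logs.lock"):` (a hypothetical path) and treat `BlockingIOError` as a signal that another fetch is already in progress.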

Holger and Vagrant Cascadian updated all thirty-five hosts running Debian on the amd64, armhf, and i386 architectures to Debian bookworm, with the exception of the Jenkins host itself, which will be upgraded after the release of Debian 12.1. In addition, Mattia Rizzolo updated the email configuration for the @reproducible-builds.org domain to correctly accept incoming mails from jenkins.debian.net […] as well as to set up DomainKeys Identified Mail (DKIM) signing […]. Working together with Holger, Mattia also updated the Jenkins configuration to start testing Debian trixie, which resulted in testing of Debian buster being stopped. Finally, Jan-Benedict Glaw contributed patches for improved NetBSD testing.

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. However, you can get in touch with us via:

- IRC: #reproducible-builds on irc.oftc.net.

- Mailing list: rb-general@lists.reproducible-builds.org

- Twitter: @ReproBuilds
