Antoine Beaupré: My free software activities, September 2017

Debian Long Term Support (LTS)

This is my monthly Debian LTS report. I mostly worked on the git,

git-annex and ruby packages this month but didn't have time to

completely use my allocated hours because I started too late in the

month.

Ruby

I was hoping someone would pick up the Ruby work I submitted in

August, but it seems no one wanted to touch that mess,

understandably. Since then, new issues came up, and not only did I

have to work on the rubygems and ruby1.9 package, but now the ruby1.8

package also had to get security updates. Yes: it's bad enough that

the rubygems code is duplicated in one other package, but wheezy had

the misfortune of having two Ruby versions supported.

The Ruby 1.9 package also failed to build from source because of test suite

issues, for which I haven't found a clean and easy fix, so I ended up

making test suite failures non-fatal in 1.9, which they were already

in 1.8. I did keep a close eye on changes in the test suite output to

make sure tests introduced in the security fixes would pass and that I

wouldn't introduce new regressions as well.

So I published the following advisories:

- ruby 1.8: DLA-1113-1, fixing CVE-2017-0898 and

CVE-2017-10784. 1.8 doesn't seem to be affected by CVE-2017-14033, as the provided test does not fail (but it does

fail in 1.9.1). The test suite was, before patch:

2199 tests, 1672513 assertions, 18 failures, 51 errors

and after patch:

2200 tests, 1672514 assertions, 18 failures, 51 errors

- rubygems: uploaded the package prepared in August as is

in DLA-1112-1, fixing CVE-2017-0899, CVE-2017-0900, CVE-2017-0901. Here the test suite

passed normally.

- ruby 1.9: here I used the 2.2.8 release tarball to generate

a patch that would cover all issues and published DLA-1114-1

that fixes the CVEs of the two packages above. The test suite was,

before patches:

10179 tests, 2232711 assertions, 26 failures, 23 errors, 51 skips

and after patches:

10184 tests, 2232771 assertions, 26 failures, 23 errors, 53 skips
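
The before/after comparison of test suite summaries can be mechanized. Here is a minimal sketch in Python, assuming the summary lines have exactly the "N name, N name" format quoted above; parse_summary and delta are hypothetical helper names, not part of any Ruby or Debian tooling:

```python
import re


def parse_summary(line):
    """Turn '2199 tests, 18 failures' into {'tests': 2199, 'failures': 18}."""
    return {name: int(count) for count, name in re.findall(r"(\d+) (\w+)", line)}


def delta(before, after):
    """Return, for each counter in the 'after' summary, how much it changed."""
    b, a = parse_summary(before), parse_summary(after)
    return {name: a[name] - b.get(name, 0) for name in a}


if __name__ == "__main__":
    # the ruby 1.8 summaries quoted in the advisory list
    before = "2199 tests, 1672513 assertions, 18 failures, 51 errors"
    after = "2200 tests, 1672514 assertions, 18 failures, 51 errors"
    for name, change in delta(before, after).items():
        print(f"{name}: {change:+d}")
```

For the 1.8 numbers, this reports one more test and one more assertion with failures and errors unchanged, which is what a single added security test that passes should look like.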

Git

I also quickly issued an advisory (DLA-1120-1) for CVE-2017-14867, an odd issue affecting git in wheezy. The backport

was tricky because the patch wouldn't apply cleanly and the git package had a

custom patching system which made it awkward to work on.

Git-annex

I did a quick stint on git-annex as well: I was able

to reproduce the issue and confirm an approach to fixing

it in wheezy, although I didn't have time to complete the work

before the end of the month.

Other free software work

New project: feed2exec

I should probably make a separate blog post about this, but

ironically, I don't want to spend too much time writing those reports,

so this will be quick.

I wrote a new program, called feed2exec. It's basically a

combination of feed2imap, rss2email and feed2tweet: it

allows you to fetch RSS feeds and deliver them to a mailbox, but what's

special about it, compared to the other programs above, is that it is

more generic: you can basically make it do whatever you want on new

feed items. I have, for example, replaced my feed2tweet instance

with it, using this simple configuration:

[anarcat]

url = https://anarc.at/blog/index.rss

output = feed2exec.plugins.exec

args = tweet "%(title)0.70s %(link)0.70s"
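
The args line looks like Python %-style formatting with named fields, where the .70 precision truncates each value to 70 characters. Here is a minimal sketch of that expansion, assuming this is roughly what happens to each new feed item; the render helper and the sample item are hypothetical, not feed2exec's actual code:

```python
def render(template, item):
    """Expand a %(name)s-style template with the fields of one feed item."""
    return template % item


if __name__ == "__main__":
    # hypothetical feed item, with a title longer than 70 characters
    item = {
        "title": "A very long title that keeps going " * 3,
        "link": "https://anarc.at/blog/",
    }
    command = render('tweet "%(title)0.70s %(link)0.70s"', item)
    # the title is cut at 70 characters, the short link is left untouched
    print(command)
```

Truncating both fields keeps the resulting message within a fixed budget (at most 141 characters between the quotes here) regardless of how long the feed's titles are.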

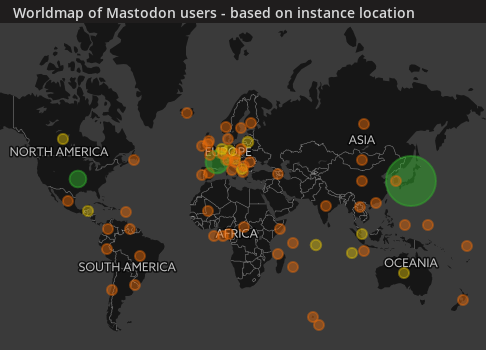

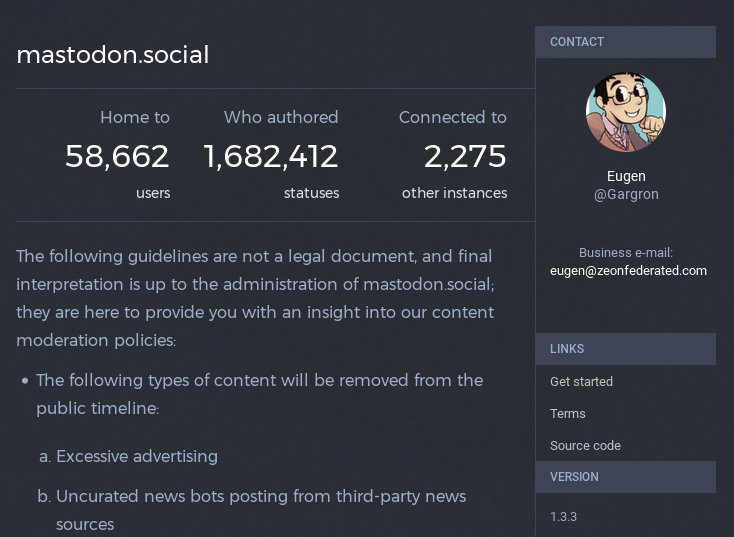

The sample configuration file also has examples to talk with Mastodon,

Pump.io and, why not, a torrent server to download torrent files

available over RSS feeds. A trivial configuration can also make it

work as a crude podcast client. My main motivation to work on this was

that it was difficult to extend feed2imap to do what I needed (which

was to talk to transmission to download torrent files) and rss2email

didn't support my workflow (which is delivering to feed-specific mail

folders). Because both projects also seemed abandoned, it seemed like

a good idea at the time to start a new one, although the rss2email

community has now restarted the project and may produce interesting

results.

As an experiment, I tracked my time working on this project. It turns

out it took about 45 hours to write that software. Considering

feed2exec is about 1400 SLOC, that's about 30 lines of code per hour (1400 / 45 ≈ 31). I

don't know if that's slow or fast, but it's an interesting metric for

future projects. It sure seems slow to me, but we need to keep in mind

those 30 lines of code don't include documentation and repeated head

banging on the keyboard. For example, I found two issues

with the upstream feedparser package, which I use to parse feeds

and which also seems unmaintained, unfortunately.

Feed2exec is beta software at this point, but it's working well enough

for me and the design is much simpler than the other programs of the

kind. The main issues people can expect from it at this point are

formatting issues or parse errors on exotic feeds, and noisy error

messages on network errors, all of which should be fairly easy to fix

in the test suite. I hope it will be useful for the community and, as

usual, I welcome contributions, help and suggestions on how to improve

the software.

More Python templates

As part of the work on feed2exec, I cleaned up a few things in

the ecdysis project, mostly to hook tests up in the CI, improve

on the advancedConfig logger and clean up more stuff.

While I was there, it turns out that I built a pretty decent

basic CI configuration for Python on GitLab. Whereas the previous

templates only had a non-working Django example, you should now be

able to choose a Python template when you configure CI on GitLab 10

and above, which should hook you up with normal Python setup

procedures like setup.py install and setup.py test.

Selfspy

I mentioned working on a monitoring tool in my last post, because it

was a feature from Workrave missing in SafeEyes. It turns

out there is already such a tool called selfspy. I did an

extensive review of the software to make sure it wouldn't leak

confidential information before using it, and it looks,

well... kind of okay. It crashed on me at least once so far, which is

too bad because then it loses track of the precious activity. I have

used it at least once to figure out what the heck I worked on during

the day, so it's pretty useful. I particularly used it to backtrack my

work on feed2exec as I didn't originally track my time on the project.

Unfortunately, selfspy seems unmaintained. I have proposed a

maintenance team and hopefully the project maintainer will respond

and at least share access so we don't end up in a situation like

linkchecker. I also sent a bunch of pull requests to fix some issues

like making it secure by default and fixing

the build. Apart from the crash, the main issue I have found with

the software is that it doesn't detect idle time, which means

certain apps are disproportionately represented in statistics. There

are also some weaknesses in the crypto that should be addressed

for people who encrypt their database.

Next step is to package selfspy in Debian which should hopefully

be simple enough...

Restic documentation security

As part of a documentation patch on the Restic backup software, I

have improved on my previous Perl script to snoop on process

commandline arguments. A common flaw in shell scripts and cron jobs is

to pass secret material not only in the environment (usually safe) but also

through commandline arguments (definitely not safe). The challenge, in

this particular case, was the env binary, but the last time I

encountered such an issue was with the Drush commandline

tool, which was passing database credentials in clear to the mysql

binary. Using my Perl sniffer, I could get to 60 checks per

second (or 60Hz). After reimplementing it in Python, this number

went up to 160Hz, which still wasn't enough to catch the elusive

env command, which is much faster at hiding arguments than MySQL, in

large part because it simply does an execve() once the environment

is set up.

Eventually, I just went crazy and rewrote the whole thing in C,

which was able to get 700-900Hz and did catch the env command

about 10-20% of the time. I could probably have rewritten this by

simply walking /proc myself (since this is what all those libraries

do in the end) to get better results, but then my point was made. I was

able to prove to the restic author the security issues that warranted

the warning. It's too bad I need to repeat this again and again, but

then my tools are getting better at proving that issue... I suspect

it's not the last time I have to deal with this issue and I am happy

to think that I can come up with an even more efficient proof of

concept tool the next time around.
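
The idea of walking /proc directly can be sketched in Python. This is not the Perl, Python or C sniffer described above, only a minimal illustration of the technique, assuming a Linux-style /proc where each numeric directory holds a NUL-separated cmdline file; the function names and the proc_root parameter are hypothetical:

```python
import os
import time


def read_cmdline(pid_dir):
    """Return the argument list of one process, or None if it is gone."""
    try:
        with open(os.path.join(pid_dir, "cmdline"), "rb") as f:
            raw = f.read()
    except OSError:
        # the process exited (or is unreadable) between listdir() and open()
        return None
    # arguments are NUL-separated; empty chunks are dropped
    return [arg.decode("utf-8", "replace") for arg in raw.split(b"\0") if arg]


def snapshot(proc_root="/proc"):
    """Map each numeric PID directory under proc_root to its argument list."""
    found = {}
    for name in os.listdir(proc_root):
        if not name.isdigit():
            continue
        argv = read_cmdline(os.path.join(proc_root, name))
        if argv:
            found[int(name)] = argv
    return found


def sniff(needle, duration=1.0, proc_root="/proc"):
    """Poll for `duration` seconds; return (matching argvs, polls per second)."""
    hits = set()
    polls = 0
    start = time.monotonic()
    while time.monotonic() - start < duration:
        for argv in snapshot(proc_root).values():
            if any(needle in arg for arg in argv):
                hits.add(tuple(argv))
        polls += 1
    elapsed = max(time.monotonic() - start, 1e-9)
    return hits, polls / elapsed


if __name__ == "__main__":
    # look for anything that resembles a password passed as an argument
    matches, rate = sniff("PASSWORD", duration=2.0)
    print(f"{rate:.0f} polls per second")
    for argv in sorted(matches):
        print(" ".join(argv))
```

The polls per second figure is the same kind of metric as the Hz numbers quoted above: a short-lived process is only caught if a poll happens to land between its execve() and the moment it replaces or hides its arguments.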

Ansible 101

After working on documentation last month, I ended up writing my first

Ansible playbook this month, converting my

tasksel list to a working Ansible configuration. This was a useful

exercise: it allowed me to find a bunch of packages which have been

removed from Debian and provides much better usability than

tasksel. For example, it provides a --diff argument that shows which

packages are missing from a given setup.

I am still unsure about Ansible. Manifests do seem really verbose and

I still can't get used to the YAML DSL. I could probably have done the

same thing with Puppet and just run puppet apply on the resulting

config. But I must admit my bias towards Python is showing here: I

can't help but think Puppet is going to be way less accessible with

its rewrite in Clojure and C (!)... But then again, I really like

Puppet's approach of having generic types like package or service

rather than Ansible's clunky apt/yum/dnf/package/win_package

types...

Pat and Ham radio

After responding (too late) to

a request for volunteers to help in Puerto Rico, I realized that

my amateur radio skills were somewhat lacking in the "packet"

(data transmission in ham jargon) domain, as I wasn't used to operating

a Winlink node. Such a node can receive and transmit actual

emails over the airwaves, for free, without direct access to the

internet, which is very useful in disaster relief efforts. Through

cursory research, I stumbled upon the new and very promising Pat

project which provides one of the first user-friendly Linux-compatible

Winlink programs. I provided improvements to the documentation

and asked some questions regarding compatibility issues which are still

pending.

But my pet issue is the establishment of pat as a normal internet

citizen by using standard protocols for receiving and sending

email. Not sure how that can be implemented, but we'll see. I am also

hoping to upload an official Debian package and hopefully write

more about this soon. Stay tuned!

Random stuff

I ended up fixing my Kodi issue by starting it as a standalone systemd

service, instead of from gdm3, which is now completely disabled on the

media box. I simply used the following

/etc/systemd/system/kodi.service file:

[Unit]

Description=Kodi Media Center

After=systemd-user-sessions.service network.target sound.target

[Service]

User=xbmc

Group=video

Type=simple

TTYPath=/dev/tty7

StandardInput=tty

ExecStart=/usr/bin/xinit /usr/bin/dbus-launch --exit-with-session /usr/bin/kodi-standalone -- :1 -nolisten tcp vt7

Restart=on-abort

RestartSec=5

[Install]

WantedBy=multi-user.target

The downside of this is that it needs Xorg to run as root, whereas

modern Xorg can now run rootless. Not sure how to fix this or

where... But if I put needs_root_rights=no in Xwrapper.config,

I get the following error in .local/share/xorg/Xorg.1.log:

[ 2502.533] (EE) modeset(0): drmSetMaster failed: Permission denied

After fooling around with IPython, I ended up trying

the xonsh shell, which is supposed to provide a bash-compatible

Python shell environment. Unfortunately, I found it pretty unusable as

a shell: it works fine to do Python stuff, but then all my environment

and legacy bash configuration files were basically ignored so I

couldn't get to work quickly. This is too bad because the project

looked very promising...

Finally, one of my TLS hosts using a Let's Encrypt certificate

wasn't renewing properly, and I figured out why. It turns out the

ProxyPass directive was passing everything to the backend, including

the /.well-known requests, which obviously broke ACME

verification. The fix was simply to disable the proxy

for that directory:

ProxyPass /.well-known/ !

Other free software work

New project: feed2exec

I should probably make a separate blog post about this, but

ironically, I don't want to spend too much time writing those reports,

so this will be quick.

I wrote a new program, called feed2exec. It's basically a

combination of feed2imap, rss2email and feed2tweet: it

allows you to fetch RSS feeds and send them in a mailbox, but what's

special about it, compared to the other programs above, is that it is

more generic: you can basically make it do whatever you want on new

feed items. I have, for example, replaced my feed2tweet instance

with it, using this simple configuration:

[anarcat]

url = https://anarc.at/blog/index.rss

output = feed2exec.plugins.exec

args = tweet "%(title)0.70s %(link)0.70s"

The sample configuration file also has examples to talk with Mastodon,

Pump.io and, why not, a torrent server to download torrent files

available over RSS feeds. A trivial configuration can also make it

work as a crude podcast client. My main motivation to work on this was

that it was difficult to extend feed2imap to do what I needed (which

was to talk to transmission to download torrent files) and rss2email

didn't support my workflow (which is delivering to feed-specific mail

folders). Because both projects also seemed abandoned, it seemed like

a good idea at the time to start a new one, although the rss2email

community has now restarted the project and may produce interesting

results.

As an experiment, I tracked my time working on this project. It turns

out it took about 45 hours to write that software. Considering

feed2exec is about 1400 SLOC, that's 30 lines of code per hour. I

don't know if that's slow or fast, but it's an interesting metric for

future projects. It sure seems slow to me, but we need to keep in mind

those 30 lines of code don't include documentation and repeated head

banging on the keyboard. For example, I found two issues

with the upstream feedparser package which I use to parse feeds

which also seems unmaintained, unfortunately.

Feed2exec is beta software at this point, but it's working well enough

for me and the design is much simpler than the other programs of the

kind. The main issue people can expect from it at this point is

formatting issues or parse errors on exotic feeds, and noisy error

messages on network errors, all of which should be fairly easy to fix

in the test suite. I hope it will be useful for the community and, as

usual, I welcome contributions, help and suggestions on how to improve

the software.

More Python templates

As part of the work on feed2exec, I did cleanup a few things in

the ecdysis project, mostly to hook tests up in the CI, improve

on the advancedConfig logger and cleanup more stuff.

While I was there, it turns out that I built a pretty decent

basic CI configuration for Python on GitLab. Whereas the previous

templates only had a non-working Django example, you should now be

able to chose a Python template when you configure CI on GitLab 10

and above, which should hook you up with normal Python setup

procedures like setup.py install and setup.py test.

Selfspy

I mentioned working on a monitoring tool in my last post, because it

was a feature from Workrave missing in SafeEyes. It turns

out there is already such a tool called selfspy. I did an

extensive review of the software to make sure it wouldn't leak

out confidential information out before using it, and it looks,

well... kind of okay. It crashed on me at least once so far, which is

too bad because then it loses track of the precious activity. I have

used it at least once to figure out what the heck I worked on during

the day, so it's pretty useful. I particularly used it to backtrack my

work on feed2exec as I didn't originally track my time on the project.

Unfortunately, selfspy seems unmaintained. I have proposed a

maintenance team and hopefully the project maintainer will respond

and at least share access so we don't end up in a situation like

linkchecker. I also sent a bunch of pull requests to fix some issues

like being secure by default and fixing

the build. Apart from the crash, the main issue I have found with

the software is that it doesn't detect idle time which means

certain apps are disproportionatly represented in statistics. There

are also some weaknesses in the crypto that should be adressed

for people that encrypt their database.

Next step is to package selfspy in Debian which should hopefully

be simple enough...

Restic documentation security

As part of a documentation patch on the Restic backup software, I

have improved on my previous Perl script to snoop on process

commandline arguments. A common flaw in shell scripts and cron jobs is

to pass secret material in the environment (usually safe) but often

through commandline arguments (definitely not safe). The challenge, in

this peculiar case, was the env binary, but the last time I

encountered such an issue was with the Drush commandline

tool, which was passing database credentials in clear to the mysql

binary. Using my Perl sniffer, I could get to 60 checks per

second (or 60Hz). After reimplementing it in Python, this number

went up to 160Hz, which still wasn't enough to catch the elusive

env command, which is much faster at hiding arguments than MySQL, in

large part because it simply does an execve() once the environment

is setup.

Eventually, I just went crazy and rewrote the whole thing in C

which was able to get 700-900Hz and did catch the env command

about 10-20% of the time. I could probably have rewritten this by

simply walking /proc myself (since this is what all those libraries

do in the end) to get better result, but then my point was made. I was

able to prove to the restic author the security issues that warranted

the warning. It's too bad I need to repeat this again and again, but

then my tools are getting better at proving that issue... I suspect

it's not the last time I have to deal with this issue and I am happy

to think that I can come up with an even more efficient proof of

concept tool the next time around.

Ansible 101

After working on documentation last month, I ended up writing my first

Ansible playbook this month, converting my

tasksel list to a working Ansible configuration. This was a useful

exercise: it allow me to find a bunch of packages which have been

removed from Debian and provides much better usability than

tasksel. For example, it provides a --diff argument that shows which

packages are missing from a given setup.

I am still unsure about Ansible. Manifests do seem really verbose and

I still can't get used to the YAML DSL. I could probably have done the

same thing with Puppet and just run puppet apply on the resulting

config. But I must admit my bias towards Python is showing here: I

can't help but think Puppet is going to be way less accessible with

its rewrite in Clojure and C (!)... But then again, I really like

Puppet's approach of having generic types like package or service

rather than Ansible's clunky apt/yum/dnf/package/win_package

types...

Pat and Ham radio

After responding (too late) to

a request for volunteers to help in Puerto Rico, I realized that

my amateur radio skills were somewhat lacking in the "packet"

(data transmission in ham jargon) domain, as I wasn't used to operate

a Winlink node. Such a node can receive and transmit actual

emails over the airwaves, for free, without direct access to the

internet, which is very useful in disaster relief efforts. Through

summary research, I stumbled upon the new and very promising Pat

project which provides one of the first user-friendly Linux-compatible

Winlink programs. I provided improvements on the documentation

and some questions regarding compatibility issues which are still

pending.

But my pet issue is the establishment of pat as a normal internet

citizen by using standard protocols for receiving and sending

email. Not sure how that can be implemented, but we'll see. I am also

hoping to upload an official Debian package and hopefully write

more about this soon. Stay tuned!

Random stuff

I ended up fixing my Kodi issue by starting it as a standalone systemd

service, instead of gdm3, which is now completely disabled on the

media box. I simply used the following

/etc/systemd/system/kodi.service file:

[Unit]

Description=Kodi Media Center

After=systemd-user-sessions.service network.target sound.target

[Service]

User=xbmc

Group=video

Type=simple

TTYPath=/dev/tty7

StandardInput=tty

ExecStart=/usr/bin/xinit /usr/bin/dbus-launch --exit-with-session /usr/bin/kodi-standalone -- :1 -nolisten tcp vt7

Restart=on-abort

RestartSec=5

[Install]

WantedBy=multi-user.target

The downside of this is that it needs Xorg to run as root, whereas

modern Xorg can now run rootless. Not sure how to fix this or

where... But if I put needs_root_rights=no in Xwrapper.config,

I get the following error in .local/share/xorg/Xorg.1.log:

[ 2502.533] (EE) modeset(0): drmSetMaster failed: Permission denied

After fooling around with IPython, I ended up trying

the xonsh shell, which is supposed to provide a bash-compatible

Python shell environment. Unfortunately, I found it pretty unusable as

a shell: it works fine to do Python stuff, but then all my environment

and legacy bash configuration files were basically ignored so I

couldn't get to work quickly. This is too bad because the project

looked very promising...

Finally, one of my TLS hosts using a Let's Encrypt certificate

wasn't renewing properly, and I figured out why. It turns out the

ProxyPass command was passing everything to the backend, including

the /.well-known requests, which obviously broke ACME

verification. The solution was simple enough: disable the proxy

for that directory:

ProxyPass /.well-known/ !

feed2tweet instance

with it, using this simple configuration:

[anarcat]

url = https://anarc.at/blog/index.rss

output = feed2exec.plugins.exec

args = tweet "%(title)0.70s %(link)0.70s"
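The 0.70s bits in the args line above look like Python's %-style formatting, where a precision on a string conversion truncates the value. Here is a minimal sketch of that truncation, assuming the args string is expanded with a plain % operator against the feed item; the render_args helper and the sample item are hypothetical and only there for illustration, this is not feed2exec's actual code:

```python
def render_args(template, item):
    """Expand a %-style template against a dict of feed item fields.

    Hypothetical helper: it only demonstrates Python's own % operator.
    """
    return template % item


# made-up feed item with an overly long title
item = {
    "title": "A" * 100,
    "link": "https://anarc.at/blog/",
}

# same template as in the configuration above: the ".70" precision
# keeps at most 70 characters of each field
command = render_args('tweet "%(title)0.70s %(link)0.70s"', item)
print(command)
```

The title is cut down to 70 characters while the shorter link goes through untouched, which is a crude way to keep the whole command from growing without bound.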

More Python templates

As part of the work on feed2exec, I cleaned up a few things in

the ecdysis project, mostly to hook tests up in the CI, improve

on the advancedConfig logger and clean up more stuff.

While I was there, it turns out that I built a pretty decent

basic CI configuration for Python on GitLab. Whereas the previous

templates only had a non-working Django example, you should now be

able to choose a Python template when you configure CI on GitLab 10

and above, which should hook you up with normal Python setup

procedures like setup.py install and setup.py test.

Selfspy

I mentioned working on a monitoring tool in my last post, because it

was a feature from Workrave missing in SafeEyes. It turns

out there is already such a tool called selfspy. I did an

extensive review of the software to make sure it wouldn't leak

confidential information before using it, and it looks,

well... kind of okay. It crashed on me at least once so far, which is

too bad because then it loses track of the precious activity. I have

used it at least once to figure out what the heck I worked on during

the day, so it's pretty useful. I particularly used it to backtrack my

work on feed2exec as I didn't originally track my time on the project.

Unfortunately, selfspy seems unmaintained. I have proposed a

maintenance team and hopefully the project maintainer will respond

and at least share access so we don't end up in a situation like

linkchecker. I also sent a bunch of pull requests to fix some issues

like being secure by default and fixing

the build. Apart from the crash, the main issue I have found with

the software is that it doesn't detect idle time, which means

certain apps are disproportionately represented in statistics. There

are also some weaknesses in the crypto that should be addressed

for people that encrypt their database.

Next step is to package selfspy in Debian which should hopefully

be simple enough...

Restic documentation security

As part of a documentation patch on the Restic backup software, I

have improved on my previous Perl script to snoop on process

commandline arguments. A common flaw in shell scripts and cron jobs is

to pass secret material not through the environment (usually safe) but

through commandline arguments (definitely not safe). The challenge, in

this particular case, was the env binary, but the last time I

encountered such an issue was with the Drush commandline

tool, which was passing database credentials in clear to the mysql

binary. Using my Perl sniffer, I could get to 60 checks per

second (or 60Hz). After reimplementing it in Python, this number

went up to 160Hz, which still wasn't enough to catch the elusive

env command, which is much faster at hiding arguments than MySQL, in

large part because it simply does an execve() once the environment

is set up.

Eventually, I just went crazy and rewrote the whole thing in C

which was able to get 700-900Hz and did catch the env command

about 10-20% of the time. I could probably have rewritten this by

simply walking /proc myself (since this is what all those libraries

do in the end) to get better results, but then my point was made. I was

able to prove to the restic author the security issues that warranted

the warning. It's too bad I need to repeat this again and again, but

then my tools are getting better at proving that issue... I suspect

it's not the last time I have to deal with this issue and I am happy

to think that I can come up with an even more efficient proof of

concept tool the next time around.
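For the curious, the "walking /proc myself" idea can be sketched in a few lines of Python. This is not the Perl, Python or C sniffer described above, just a rough illustration under a few assumptions: a Linux-style /proc where each process has a numeric directory with a NUL-separated cmdline file, and function names (read_cmdline, snapshot, watch) that I made up for this example:

```python
import os
import time


def read_cmdline(pid, proc_root="/proc"):
    """Return the argument list of a process, or None if it is gone."""
    try:
        with open(os.path.join(proc_root, str(pid), "cmdline"), "rb") as f:
            raw = f.read()
    except OSError:
        # the process exited (or is unreadable) between listing and reading
        return None
    # arguments are separated by NUL bytes; empty chunks are dropped
    return [arg.decode("utf-8", "replace") for arg in raw.split(b"\0") if arg]


def snapshot(proc_root="/proc"):
    """Map every numeric entry of proc_root to its current argument list."""
    procs = {}
    for entry in os.listdir(proc_root):
        if entry.isdigit():
            args = read_cmdline(entry, proc_root)
            if args:
                procs[int(entry)] = args
    return procs


def watch(needle, duration=1.0, proc_root="/proc"):
    """Poll for `duration` seconds; return (passes, matching command lines)."""
    seen = set()
    passes = 0
    deadline = time.monotonic() + duration
    while time.monotonic() < deadline:
        for args in snapshot(proc_root).values():
            if any(needle in arg for arg in args):
                seen.add(tuple(args))
        passes += 1
    return passes, seen


if __name__ == "__main__":
    # "password" is only an example needle
    passes, hits = watch("password", duration=1.0)
    print("%d passes in one second, %d matching command lines" % (passes, len(hits)))
```

Dividing the number of passes by the duration gives the polling rate in Hz, the same kind of figure as the ones quoted above. A short-lived process that calls execve() between two passes will still slip through, which is exactly the race that made the env command so hard to catch.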
