Paulo Henrique de Lima Santana: Bits from FOSDEM 2023 and 2024

Link to the Portuguese version

Intro

Since 2019, I have traveled to Brussels at the beginning of each year to join FOSDEM, considered the largest and most important Free Software event in Europe. The 2024 edition was the fourth in-person edition in a row that I joined (the 2021 and 2022 editions were not held in person due to COVID-19), always with the financial help of Debian, which kindly paid for my flight tickets after I submitted a travel funding request and the Debian Leader approved it.

In 2020 I wrote several posts with a very complete report of the days I spent in Brussels. But in 2023 I didn't write anything, and because last year and this year I coordinated a room dedicated to translations of Free Software and Open Source projects, I'm going to take the opportunity to write about these two years and what my experience was like.

After my first trip to FOSDEM, I started to think that I could take part in a more active way than as a regular attendee, so I wanted to propose a talk to one of the rooms. But then I thought that instead of proposing a talk, I could organize a room for talks :-) on the topic of translations, which is something I'm very interested in, because I've been helping to translate Debian into Portuguese for a few years now.

Joining FOSDEM 2023

In the second half of 2022 I did some research and saw that there had never been a room dedicated to translations, so when the FOSDEM organization opened the call for room proposals (called DevRooms) for the 2023 edition, I sent a proposal for a translation room and it was accepted!

After the room was confirmed, the next step was for me, as room coordinator, to publicize the call for talk proposals. I spent a few weeks waiting to find out whether I would receive a good number of proposals or whether it would be a failure. But to my happiness, I received eight proposals, and due to time constraints I had to select six to fill the room's schedule.

FOSDEM 2023 took place from February 4th to 5th and the translation devroom was scheduled on the second day in the afternoon.

The talks held in the room are listed below, and a video recording is available for each of them.

- Welcome to the Translations DevRoom.

- Paulo Henrique de Lima Santana

- Translate All The Things! An Introduction to LibreTranslate.

- Piero Toffanin

- Bringing your project closer to users - translating libre with Weblate. News, features and plans of the project.

- Benjamin Alan Jamie

- 20 years with Gettext. Experiences from the PostgreSQL project.

- Peter Eisentraut

- Building an atractive way in an old infra for new translators.

- Texou

- Managing KDE's translation project. Are we the biggest FLOSS translation project?

- Albert Astals Cid

- Translating documentation with cloud tools and scripts. Using cloud tools and scripts to translate, review and update documents.

- Nilo Coutinho Menezes

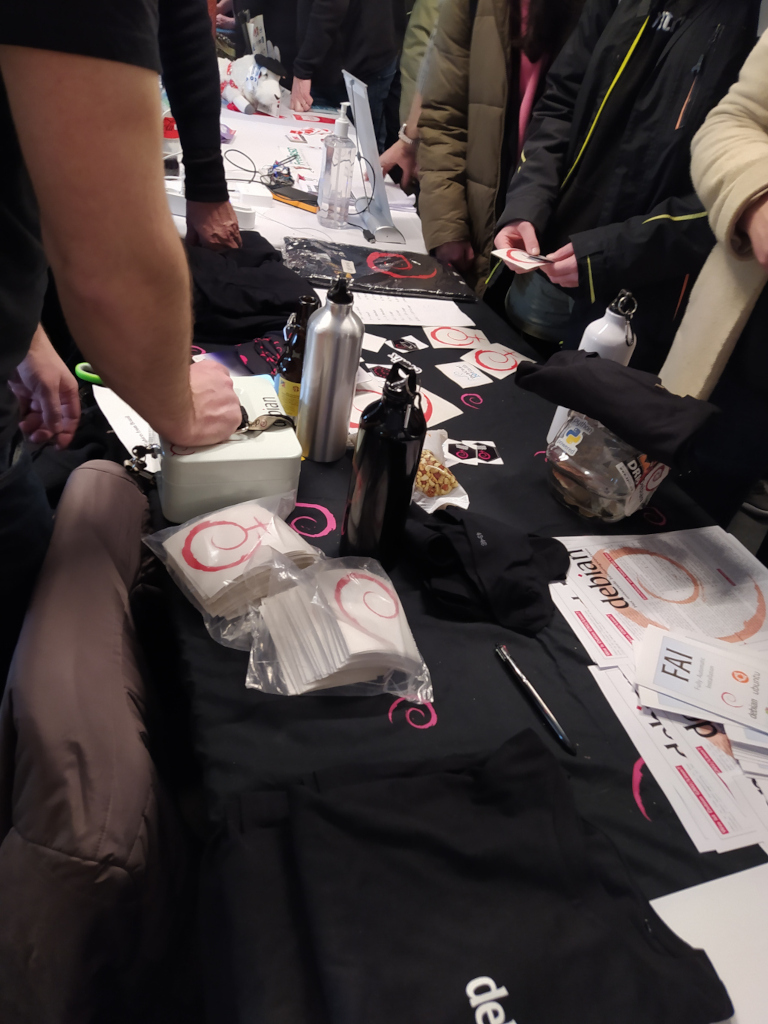

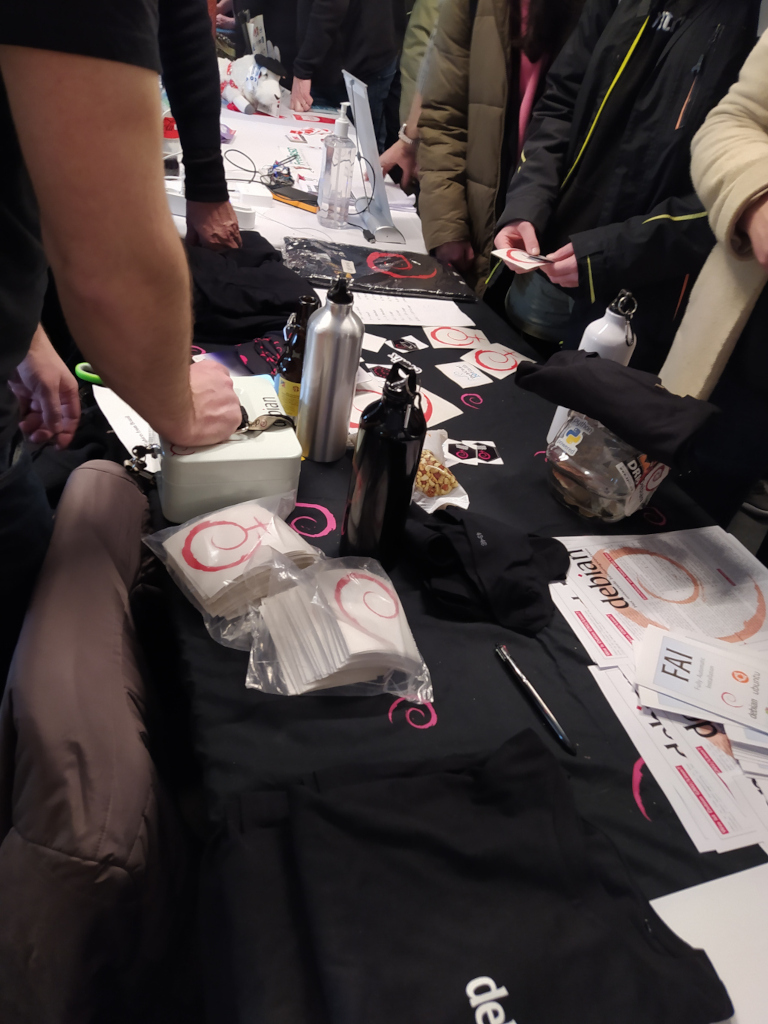

On the first day of FOSDEM I was at the Debian stand selling the t-shirts that I had brought from Brazil. People from France were also there selling other products, and it was cool to interact with the people who visited the stand to buy something and/or talk about Debian.

Photos

Joining FOSDEM 2024

The 2023 result motivated me to propose the translation devroom again when the FOSDEM 2024 organization opened the call for rooms. I was waiting to find out if the FOSDEM organization would accept a room on this topic for the second year in a row and, to my delight, my proposal was accepted again :-)

This time I received 11 proposals! And again, due to time constraints, I had to select six to fill the room's schedule.

FOSDEM 2024 took place from February 3rd to 4th and the translation devroom was scheduled for the second day again, but this time in the morning.

The talks held in the room are listed below, and a video recording is available for each of them.

- Welcome to the Translations DevRoom.

- Paulo Henrique de Lima Santana

- Localization of Open Source Tools into Swahili.

- Cecilia Maundu

- A universal data model for localizable messages.

- Eemeli Aro

- Happy translating! It is possible to overcome the language barrier in Open Source!

- Wentao Liu

- Lessons learnt as a translation contributor the past 4 years.

- Tom De Moor

- Long Term Effort to Keep Translations Up-To-Date.

- Andika Triwidada

- Using Open Source AIs for Accessibility and Localization.

- Nevin Daniel

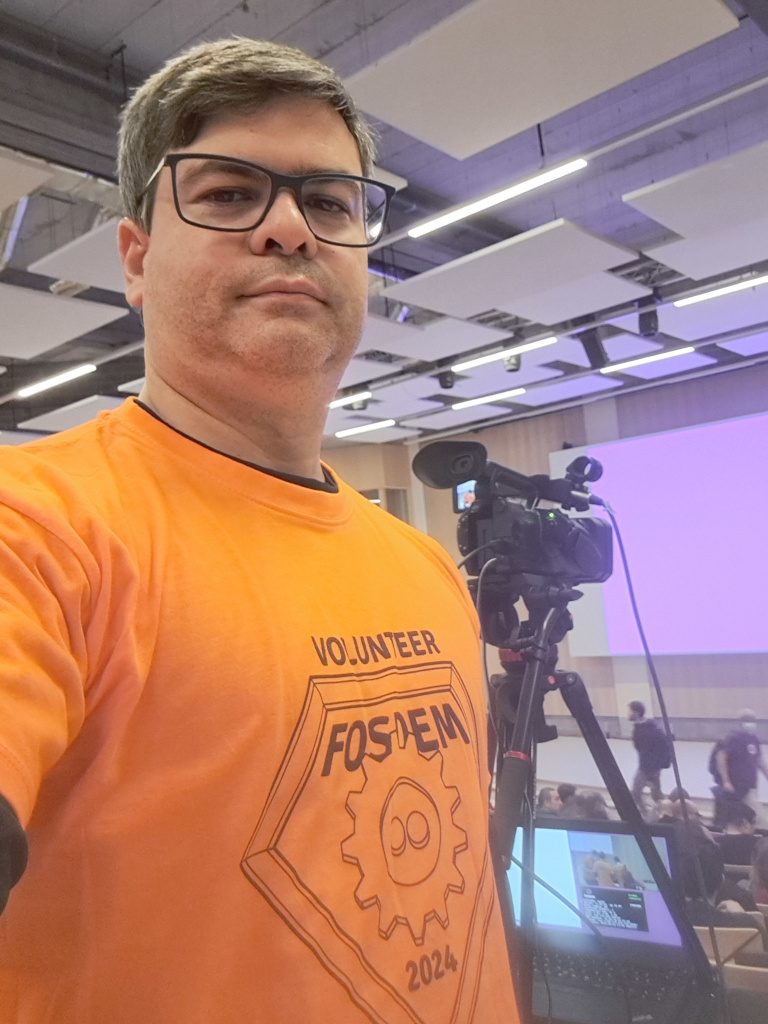

This time I didn't help at the Debian stand because I couldn't bring t-shirts to sell from Brazil. So I just stopped by and talked to some of the people who were there, including some DDs. But I volunteered for a few hours to operate the streaming camera in one of the main rooms.

Photos

Conclusion

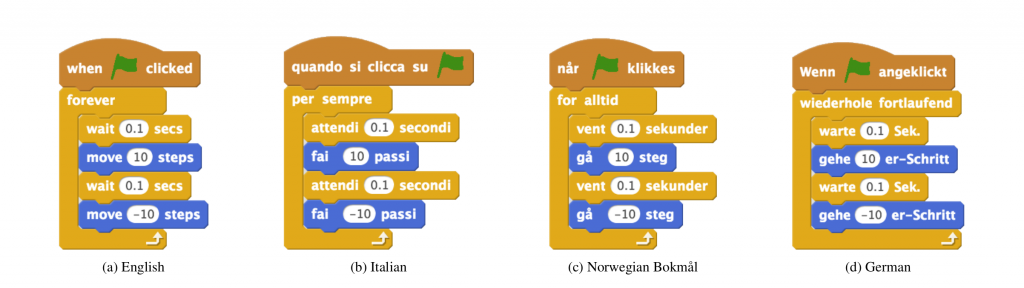

The topics of the talks in these two years were quite diverse, and all the talks were really very good. In the 12 talks we can see how translations happen in projects such as KDE, PostgreSQL, Debian and Mattermost. We had presentations of tools and approaches such as LibreTranslate, Weblate, scripts, AI and a data model. And there were also reports on the work carried out by communities in Africa, China and Indonesia.

The rooms were full for some talks and a little emptier for others, but I was very satisfied with the final result of these two years.

I leave my special thanks to Jonathan Carter, the Debian Leader, who approved my flight ticket requests so that I could join FOSDEM 2023 and 2024. This help was essential for my trip to Brussels because flight tickets are not cheap at all.

I would also like to thank my wife Jandira, who has been my travel partner :-)

As there has been an increase in the number of proposals received, I believe that interest in the translations devroom is growing. So I intend to send the devroom proposal to FOSDEM 2025 and, if it is accepted, wait for the future Debian Leader to approve helping me with the flight tickets again. We'll see.
