Reproducible Builds: Reproducible Builds in January 2024

Welcome to the January 2024 report from the Reproducible Builds project. In these reports we outline the most important things that we have been up to over the past month. If you are interested in contributing to the project, please visit our Contribute page on our website.

How we executed a critical supply chain attack on PyTorch

John Stawinski and Adnan Khan published a lengthy blog post detailing how they executed a supply-chain attack against PyTorch, a popular machine learning platform used by titans like Google, Meta, Boeing, and Lockheed Martin:

Our exploit path resulted in the ability to upload malicious PyTorch releases to GitHub, upload releases to [Amazon Web Services], potentially add code to the main repository branch, backdoor PyTorch dependencies; the list goes on. In short, it was bad. Quite bad.

The attack hinged on PyTorch's use of self-hosted runners, combined with submitting a pull request that fixed a trivial typo in the project's README file. Together, these gave the attackers access to repository secrets and API keys that could subsequently be used for malicious purposes.

New Arch Linux forensic filesystem tool

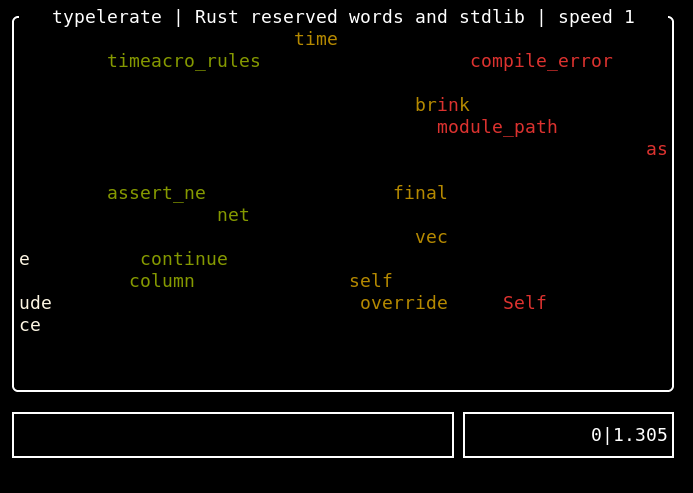

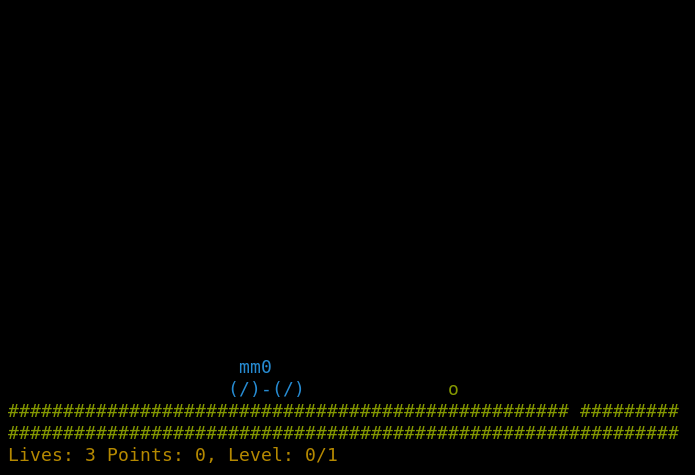

On our mailing list this month, long-time Reproducible Builds developer kpcyrd announced a new tool designed to forensically analyse Arch Linux filesystem images.

Called archlinux-userland-fs-cmp, the tool is supposed to be used from a rescue image (any Linux) with an Arch install mounted to, [for example], /mnt. Crucially, however, at no point is any file from the mounted filesystem eval'd or otherwise executed. Parsers are written in a memory safe language.

More information about the tool can be found in their announcement message, as well as on the tool's homepage. A GIF of the tool in action is also available.
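To make the idea of forensic comparison more concrete, here is a minimal Python sketch. It checks a mounted image against known-good data while only ever reading file contents. It is not how archlinux-userland-fs-cmp works. The `expected` mapping of paths to SHA-256 digests (which in practice would have to come from trusted package metadata), the function names and the mount point are all assumptions made for illustration.

```python
import hashlib
import os


def sha256_of(path: str) -> str:
    """Hash a file by reading its bytes; nothing from the image is executed."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def compare_tree(root: str, expected: dict) -> dict:
    """Compare regular files under `root` (e.g. an image mounted at /mnt).

    Hypothetical input: `expected` maps absolute paths as seen inside the
    image (e.g. "/usr/bin/ls") to SHA-256 hex digests.
    """
    report = {"modified": [], "unexpected": [], "missing": []}
    seen = set()
    # os.walk does not descend into symlinked directories by default.
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames.sort()  # deterministic traversal order
        for name in sorted(filenames):
            full = os.path.join(dirpath, name)
            # Never follow symlinks out of the image; skip non-regular files.
            if os.path.islink(full) or not os.path.isfile(full):
                continue
            rel = "/" + os.path.relpath(full, root).replace(os.sep, "/")
            seen.add(rel)
            if rel not in expected:
                report["unexpected"].append(rel)
            elif sha256_of(full) != expected[rel]:
                report["modified"].append(rel)
    report["missing"] = sorted(set(expected) - seen)
    return report
```

A call such as `compare_tree("/mnt", expected)` would then list files that were modified, added or removed relative to the trusted data.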

Issues with our SOURCE_DATE_EPOCH code?

Chris Lamb started a thread on our mailing list summarising some potential problems with the source code snippet the Reproducible Builds project has been using to parse the SOURCE_DATE_EPOCH environment variable:

I'm not 100% sure who originally wrote this code, but it was probably sometime in the ~2015 era, and it must be in a huge number of codebases by now.

Anyway, Alejandro Colomar was working on the shadow security tool and pinged me regarding some potential issues with the code. You can see this conversation here.

Chris ends his message with a request that those with intimate or low-level knowledge of time_t, C types, overflows and the various parsing libraries in the C standard library (etc.) contribute further information.
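The thread concerns a C snippet, but the concerns it raises (parsing, C types such as time_t, and overflows) can be sketched compactly in Python. What follows is a hypothetical, minimal strict parser, not the snippet the project has been distributing. The function name and the `time_t_bits` parameter are assumptions introduced for illustration.

```python
import os
import re

# An explicit [0-9] class matches ASCII digits only; Python's int() on its own
# would also accept surrounding whitespace, a sign, "_" separators and
# non-ASCII digits.
_PLAIN_DECIMAL = re.compile(r"[0-9]+")


def parse_source_date_epoch(env=None, time_t_bits=64):
    """Return SOURCE_DATE_EPOCH as an int, or None if it is unset.

    Raises ValueError if the value is malformed or would overflow a signed
    time_t of `time_t_bits` bits (the 64-bit default is an assumption about
    the target platform).
    """
    env = os.environ if env is None else env
    raw = env.get("SOURCE_DATE_EPOCH")
    if raw is None:
        return None
    # Reject "", " 1", "-1", "+1", "1_000", "12abc" and the like up front.
    # For comparison, C's strtoull() skips leading whitespace and accepts a
    # "-" sign (negating the result), so C callers need equivalent checks.
    if not _PLAIN_DECIMAL.fullmatch(raw):
        raise ValueError(f"SOURCE_DATE_EPOCH is not a plain decimal integer: {raw!r}")
    value = int(raw)
    time_t_max = 2 ** (time_t_bits - 1) - 1
    if value > time_t_max:
        raise ValueError(f"SOURCE_DATE_EPOCH={value} overflows a signed {time_t_bits}-bit time_t")
    return value
```

Rejecting negative values is a choice made for this sketch, not something stated in the thread.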

Distribution updates

In Debian this month, Roland Clobus posted another detailed update of the status of reproducible ISO images on our mailing list. In particular, Roland helpfully summarised that all major desktops build reproducibly with bullseye, bookworm, trixie and sid provided they are built for a second time within the same DAK run (i.e. [within] 6 hours). Additionally, 7 of the 8 bookworm images from the official download link build reproducibly at any later time.
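"Builds reproducibly" here means that two independent builds yield bit-for-bit identical images. The sketch below shows that check in Python, assuming you already have two ISO files from separate build runs. The function names are hypothetical. It simply compares SHA-256 digests; when they differ, a tool such as diffoscope is what you would use to find out why.

```python
import hashlib


def file_sha256(path: str) -> str:
    """Stream the file in 1 MiB chunks so a large ISO never sits in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        while chunk := f.read(1 << 20):
            h.update(chunk)
    return h.hexdigest()


def builds_match(first: str, second: str) -> bool:
    """True if both artifacts are bit-for-bit identical (same SHA-256)."""
    return file_sha256(first) == file_sha256(second)
```

For example, `builds_match("build1/live.iso", "build2/live.iso")` (hypothetical paths) returning `True` would indicate that the two runs produced the same image.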

In addition to this, three reviews of Debian packages were added, 17 were updated and 15 were removed this month, adding to our knowledge about identified issues.

Elsewhere, Bernhard posted another monthly update for his work in openSUSE.

Community updates

A number of improvements were made to our website, including Bernhard M. Wiedemann fixing a number of typos of the term "nondeterministic" [ ] and Jan Zerebecki adding a substantial and highly welcome section to our page about SOURCE_DATE_EPOCH to document its interaction with distribution rebuilds. [ ]

diffoscope is our in-depth and content-aware diff utility that can locate and diagnose reproducibility issues. This month, Chris Lamb uploaded versions 254 and 255 to Debian, but mostly focused on triaging and/or merging code from other contributors. This included adding support for comparing eXtensible ARchive (.XAR/.PKG) files courtesy of Seth Michael Larson [ ][ ], as well as considerable work from Vekhir to fix compatibility between various and subtly incompatible versions of the progressbar libraries in Python [ ][ ][ ][ ]. Thanks!

Reproducibility testing framework

The Reproducible Builds project operates a comprehensive testing framework (available at tests.reproducible-builds.org) in order to check packages and other artifacts for reproducibility. In January, a number of changes were made by Holger Levsen:

- Debian-related changes:
    - Reduce the number of arm64 architecture workers from 24 to 16. [ ]
    - Use diffoscope from the Debian release being tested again. [ ]
    - Improve the handling when killing unwanted processes [ ][ ][ ] and be more verbose about it, too [ ].
    - Don't mark a job as failed if a process marked as to-be-killed is already gone. [ ]
    - Display the architecture of builds that have been running for more than 48 hours. [ ]
    - Reboot arm64 nodes when they hit an OOM (out of memory) state. [ ]
- Package rescheduling changes:
- OpenWrt-related changes:
- Misc:
    - As it's January, set the real_year variable to 2024 [ ] and bump various copyright years as well [ ].
    - Fix a large (!) number of spelling mistakes in various scripts. [ ][ ][ ]
    - Prevent Squid and systemd processes from being killed by the kernel's OOM killer. [ ]
    - Install the iptables tool everywhere, otherwise our custom rc.local script fails. [ ]
    - Clean up the /srv/workspace/pbuilder directory on boot. [ ]
    - Automatically restart Squid if it fails. [ ]
    - Limit the execution of chroot-installation jobs to a maximum of 4 concurrent runs. [ ][ ]
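The item about not marking a job as failed when a to-be-killed process is already gone describes a common race: a process can exit between being selected for killing and actually being signalled. The Python sketch below shows that handling on a POSIX system. The helper name `kill_if_running` is hypothetical and this is not the project's actual code. It treats "no such process" as an acceptable outcome while still surfacing other errors, and it logs what happened.

```python
import os
import signal


def kill_if_running(pid: int, sig: int = signal.SIGTERM) -> bool:
    """Send `sig` to `pid`.

    Returns True if the signal was delivered and False if the process had
    already exited. A vanished process is the desired end state, so it is
    reported rather than treated as a failure.
    """
    try:
        os.kill(pid, sig)
    except ProcessLookupError:
        # ESRCH: the process went away before we could signal it.
        print(f"process {pid} already gone, nothing to kill")
        return False
    print(f"sent signal {int(sig)} to process {pid}")
    return True
```

Errors such as `PermissionError` still propagate, since they indicate a real problem rather than a process that has already exited.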

A significant amount of node maintenance was performed by Holger Levsen (e.g. [ ][ ][ ][ ][ ][ ][ ] etc.) and Vagrant Cascadian (e.g. [ ][ ][ ][ ][ ][ ][ ][ ]). Indeed, Vagrant Cascadian handled an extended power outage for the network running the Debian armhf architecture test infrastructure. This provided the incentive to replace the UPS batteries and consolidate infrastructure to reduce future UPS load. [ ]

Elsewhere in our infrastructure, Holger Levsen also adjusted the email configuration for @reproducible-builds.org to deal with a new SMTP email attack. [ ]

Upstream patches

The Reproducible Builds project tries to detect, dissect and fix as many (currently) unreproducible packages as possible. We endeavour to send all of our patches upstream where appropriate. This month, we wrote a large number of such patches, including:

- Bernhard M. Wiedemann:
    - cython (nondeterministic path issue)
    - deluge (issue with modification time of .egg file)
    - gap-ferret, gap-semigroups & gap-simpcomp (nondeterministic config.log file)
    - grpc (filesystem ordering issue)
    - hub (random)
    - kubernetes1.22 & kubernetes1.23 (sort-related issue)
    - kubernetes1.24 & kubernetes1.25 (go -trimpath vs random issue)
    - libjcat (drop test files with random bytes)
    - luajit (use new d option for deterministic bytecode output)
    - meson [ ][ ] (sort the results from Python filesystem call)
    - python-rjsmin (drop GCC instrumentation artifacts)
    - qt6-virtualkeyboard+others (bug parallelism/race)
    - SoapySDR (parallelism-related issue)
    - systemd (sorting problem)
    - warewulf (CPIO modification time issue, etc.)
- Chris Lamb:
- James Addison:
    - guake ("Schroedinger" file due to race condition)
    - qhelpgenerator-qt5 (timezone localization; fix also merged upstream for QT6)
    - sphinx (search index doctitle sorting)
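Several of these fixes address two recurring sources of nondeterminism. One is filesystem listing order, as in the meson, grpc and systemd entries. The other is embedded modification times, as in the deluge and warewulf entries. The Python sketch below illustrates the general remedies: sorting directory listings and clamping timestamps to SOURCE_DATE_EPOCH. It is not the code of any of the patches above, and the helper names are hypothetical.

```python
import os
import tarfile


def sorted_listing(directory: str) -> list:
    """os.listdir() returns entries in arbitrary, filesystem-dependent order;
    sorting makes the result independent of the build machine."""
    return sorted(os.listdir(directory))


def clamp_mtime(mtime: float, source_date_epoch: int) -> int:
    """Never record a timestamp newer than SOURCE_DATE_EPOCH."""
    return min(int(mtime), source_date_epoch)


def deterministic_tar(src_dir: str, out_path: str, source_date_epoch: int) -> None:
    """Write an uncompressed tar of the files under src_dir, with sorted
    members, clamped mtimes and normalised ownership."""

    def normalise(info: tarfile.TarInfo) -> tarfile.TarInfo:
        info.mtime = clamp_mtime(info.mtime, source_date_epoch)
        info.uid = info.gid = 0
        info.uname = info.gname = ""
        return info

    with tarfile.open(out_path, "w", format=tarfile.PAX_FORMAT) as tar:
        for root, dirs, files in os.walk(src_dir):
            dirs.sort()  # also fixes the order in which os.walk descends
            for name in sorted(files):
                full = os.path.join(root, name)
                arcname = os.path.relpath(full, src_dir)
                tar.add(full, arcname=arcname, recursive=False, filter=normalise)
```

GNU tar offers comparable options (for example `--sort=name` and `--clamp-mtime`), so a hand-written archiver like this is rarely necessary in practice.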

Separately, Vagrant Cascadian followed up with the relevant maintainers when reproducibility fixes were not included in newly-uploaded versions of the mm-common package in Debian; this was quickly fixed, however. [ ]

If you are interested in contributing to the Reproducible Builds project, please visit our Contribute page on our website. You can also get in touch with us via:

- IRC: #reproducible-builds on irc.oftc.net.
- Mailing list: rb-general@lists.reproducible-builds.org
- Mastodon: @reproducible_builds
- Twitter: @ReproBuilds
