Robert McQueen: Update from the GNOME board

It s been around 6 months since the GNOME Foundation was joined by our new Executive Director, Holly Million, and the board and I wanted to update members on the Foundation s current status and some exciting upcoming changes.

It s been around 6 months since the GNOME Foundation was joined by our new Executive Director, Holly Million, and the board and I wanted to update members on the Foundation s current status and some exciting upcoming changes.

Finances

As you may be aware, the GNOME Foundation has operated at a deficit (nonprofit speak for a loss ie spending more than we ve been raising each year) for over three years, essentially running the Foundation on reserves from some substantial donations received 4-5 years ago. The Foundation has a reserves policy which specifies a minimum amount of money we have to keep in our accounts. This is so that if there is a significant interruption to our usual income, we can preserve our core operations while we work on new funding sources. We ve now hit the buffers of this reserves policy, meaning the Board can t approve any more deficit budgets to keep spending at the same level we must increase our income.

One of the board s top priorities in hiring Holly was therefore her experience in communications and fundraising, and building broader and more diverse support for our mission and work. Her goals since joining as well as building her familiarity with the community and project have been to set up better financial controls and reporting, develop a strategic plan, and start fundraising. You may have noticed the Foundation being more cautious with spending this year, because Holly prepared a break-even budget for the Board to approve in October, so that we can steady the ship while we prepare and launch our new fundraising initiatives.

Strategy & Fundraising

The biggest prerequisite for fundraising is a clear strategy we need to explain what we re doing and why it s important, and use that to convince people to support our plans. I m very pleased to report that Holly has been working hard on this and meeting with many stakeholders across the community, and has prepared a detailed and insightful five year strategic plan. The plan defines the areas where the Foundation will prioritise, develop and fund initiatives to support and grow the GNOME project and community. The board has approved a draft version of this plan, and over the coming weeks Holly and the Foundation team will be sharing this plan and running a consultation process to gather feedback input from GNOME foundation and community members.

In parallel, Holly has been working on a fundraising plan to stabilise the Foundation, growing our revenue and ability to deliver on these plans. We will be launching a variety of fundraising activities over the coming months, including a development fund for people to directly support GNOME development, working with professional grant writers and managers to apply for government and private foundation funding opportunities, and building better communications to explain the importance of our work to corporate and individual donors.

Board Development

Another observation that Holly had since joining was that we had, by general nonprofit standards, a very small board of just 7 directors. While we do have some committees which have (very much appreciated!) volunteers from outside the board, our officers are usually appointed from within the board, and many board members end up serving on multiple committees and wearing several hats. It also means the number of perspectives on the board is limited and less representative of the diverse contributors and users that make up the GNOME community.

Holly has been working with the board and the governance committee to reduce how much we ask from individual board members, and improve representation from the community within the Foundation s governance. Firstly, the board has decided to increase its size from 7 to 9 members, effective from the upcoming elections this May & June, allowing more voices to be heard within the board discussions. After that, we re going to be working on opening up the board to more participants, creating non-voting officer seats to represent certain regions or interests from across the community, and take part in committees and board meetings. These new non-voting roles are likely to be appointed with some kind of application process, and we ll share details about these roles and how to be considered for them as we refine our plans over the coming year.

Elections

We re really excited to develop and share these plans and increase the ways that people can get involved in shaping the Foundation s strategy and how we raise and spend money to support and grow the GNOME community. This brings me to my final point, which is that we re in the run up to the annual board elections which take place in the run up to GUADEC. Because of the expansion of the board, and four directors coming to the end of their terms, we ll be electing 6 seats this election. It s really important to Holly and the board that we use this opportunity to bring some new voices to the table, leading by example in growing and better representing our community.

Allan wrote in the past about what the board does and what s expected from directors. As you can see we re working hard on reducing what we ask from each individual board member by increasing the number of directors, and bringing additional members in to committees and non-voting roles. If you re interested in seeing more diverse backgrounds and perspectives represented on the board, I would strongly encourage you consider standing for election and reach out to a board member to discuss their experience.

Thanks for reading! Until next time.

Best Wishes,

Rob

President, GNOME Foundation

(also posted to GNOME Discourse, please head there if you have any questions or comments)

Board Development

Another observation that Holly had since joining was that we had, by general nonprofit standards, a very small board of just 7 directors. While we do have some committees which have (very much appreciated!) volunteers from outside the board, our officers are usually appointed from within the board, and many board members end up serving on multiple committees and wearing several hats. It also means the number of perspectives on the board is limited and less representative of the diverse contributors and users that make up the GNOME community.

Holly has been working with the board and the governance committee to reduce how much we ask from individual board members, and improve representation from the community within the Foundation s governance. Firstly, the board has decided to increase its size from 7 to 9 members, effective from the upcoming elections this May & June, allowing more voices to be heard within the board discussions. After that, we re going to be working on opening up the board to more participants, creating non-voting officer seats to represent certain regions or interests from across the community, and take part in committees and board meetings. These new non-voting roles are likely to be appointed with some kind of application process, and we ll share details about these roles and how to be considered for them as we refine our plans over the coming year.

Elections

We re really excited to develop and share these plans and increase the ways that people can get involved in shaping the Foundation s strategy and how we raise and spend money to support and grow the GNOME community. This brings me to my final point, which is that we re in the run up to the annual board elections which take place in the run up to GUADEC. Because of the expansion of the board, and four directors coming to the end of their terms, we ll be electing 6 seats this election. It s really important to Holly and the board that we use this opportunity to bring some new voices to the table, leading by example in growing and better representing our community.

Allan wrote in the past about what the board does and what s expected from directors. As you can see we re working hard on reducing what we ask from each individual board member by increasing the number of directors, and bringing additional members in to committees and non-voting roles. If you re interested in seeing more diverse backgrounds and perspectives represented on the board, I would strongly encourage you consider standing for election and reach out to a board member to discuss their experience.

Thanks for reading! Until next time.

Best Wishes,

Rob

President, GNOME Foundation

(also posted to GNOME Discourse, please head there if you have any questions or comments)

Rob

President, GNOME Foundation (also posted to GNOME Discourse, please head there if you have any questions or comments)

A new minor release 0.4.22 of

A new minor release 0.4.22 of  With

With  I've been enjoying Biosphere as the soundtrack to my recent "concentrated work" spells.

I've been enjoying Biosphere as the soundtrack to my recent "concentrated work" spells.

The voting period for the Debian Project Leader election has ended. Please join

us in congratulating Andreas Tille as the new Debian Project Leader.

The new term for the project leader started on 2024-04-21.

369 of 1,010 Debian Developers voted using the

The voting period for the Debian Project Leader election has ended. Please join

us in congratulating Andreas Tille as the new Debian Project Leader.

The new term for the project leader started on 2024-04-21.

369 of 1,010 Debian Developers voted using the

I am upstream and

I am upstream and  Time really flies when

Time really flies when

(There s a handy

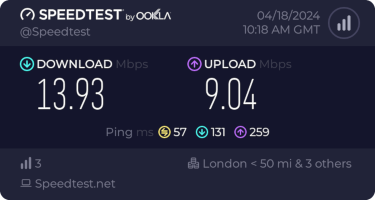

(There s a handy  This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

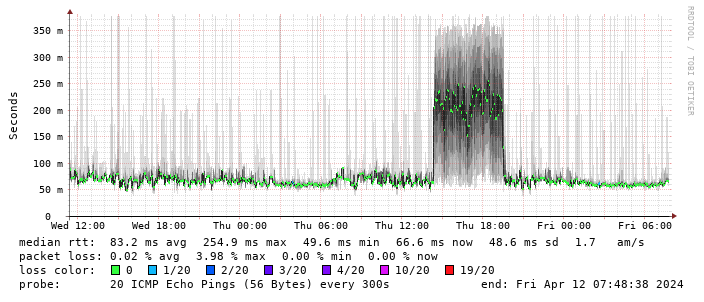

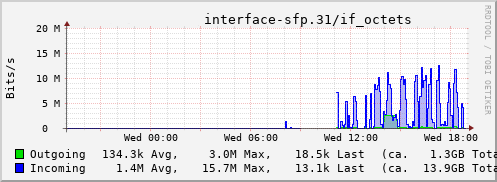

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.