(TL;DR: I ve been trying out btrfs in some places instead of ext4, I ve hit absolutely zero issues and there are a few features that make me plan to use it more.)

Despite (or perhaps because of) working on storage products for a reasonable chunk of my career I have tended towards a conservative approach to my filesystems. By the time I came to Linux

ext2 was well established, the move to

ext3 was a logical one (the joys of added journalling for faster recovery after unclean shutdowns) and for a long time my default stack has been

MD raid with

LVM2 on top and then

ext4 as the filesystem.

I ve dabbled with other filesystems; I ran

XFS for a while on my

VDR machine, and also when I had a large tradspool with

INN, but never really had a hard requirement for it. I ve ended up adminning a machine that had

JFS in the past, largely for historical reasons, but don t really remember any issues (vague recollections of NFS problems but that might just have been NFS being NFS).

However.

ZFS has gathered itself a significant fan base and that makes me wonder about what it can offer and whether I want that. Firstly, let s be clear that I m never going to run a primary filesystem that isn t part of the mainline kernel. So ZFS itself is out, because I run Linux. So what do I want that I can t get with ext4? Firstly, I d like data checksumming. As storage gets larger there s a bigger chance of silent data corruption and while I have backups of the important stuff that doesn t help if you don t know you need to use them. Secondly, these days I have machines running containers, VMs, or with lots of source checkouts with a reasonable amount of overlap in their data. Disk space has got cheaper, but I d still like to be able to do some sort of deduplication of common blocks.

So, I ve been trying out

btrfs. When I

installed my desktop I went with btrfs for

/ and

/home (I kept

/boot as ext4). The thought process was that this was a local machine (so easy access if it all went wrong) and I take regular backups (so if it all went wrong I could recover). That was a year and a half ago and it s been pretty dull; I mostly forget I m running btrfs instead of ext4. This is on a machine that tracks Debian testing, so currently on kernel 6.1 but originally installed with 5.10. So it seems modern btrfs is reasonably stable for a machine that isn t driven especially hard. Good start.

The fact I forget what filesystem I m running points to the fact that I m not actually doing anything special here. I get the advantage of data checksumming, but not much else. 2 things spring to mind. Firstly, I don t do snapshots. Given I run testing it might be wiser if I

did take a snapshot before every

apt-get upgrade, and I have a friend who does just that, but even when I ve run unstable I ve never had a machine get itself into a state that I couldn t recover so I haven t spent time investigating. I note Ubuntu has

apt-btrfs-snapshot but it doesn t seem to have any updates for years.

The other thing I didn t do when I installed my desktop is take advantage of subvolumes. I m still trying to get my head around exactly what I want them for, but they provide a partial replacement for LVM when it comes to carving up disk space. Instead of the separate

/ and

/home LVs I created I could have created a single LV that would have a single btrfs filesystem on it.

/ and

/home would then be separate subvolumes, allowing me to snapshot each individually. Quotas can also be applied separately so there s still the potential to prevent one subvolume taking all available space.

Encouraged by the lack of hassle with my desktop I decided to try moving my

sbuild machine over to use btrfs for its build chroots. For Reasons this is a VM kindly hosted by a friend, rather than something local. To be honest these days I would probably go for local hosting, but it works and there s no strong reason to move. The point is it s remote, and so if migrating went wrong and I had to ask for assistance I d be bothering someone who s doing me a favour as it is.

The build VM is, of course, running LVM, and there was luckily some free space available. I m reasonably sure the underlying storage involves spinning rust, so I did a laborious set of

pvmove commands to make sure all the available space was at the start of the PV, and created a new btrfs volume there. I was advised that while

btrfs-convert would do the job it was better to create a fresh filesystem where possible. This time I did create an initial

root subvolume.

Configuring up sbuild was then much simpler than I d expected. My setup originally started out as a set of tarballs for the chroots that would get untarred + used for the builds, which is pretty slow. Once overlayfs was mature enough I switched to that. I d had a conversation with Enrico about his

nspawn/btrfs setup, but it turned out Russ Allbery had written an excellent set of instructions on

sbuild with btrfs. I tweaked my existing setup based on his details, and I was in business. Each chroot is a separate subvolume - I don t actually end up having to mount them individually, but it means that only the chroot in use gets snapshotted. For example during a build the following can be observed:

# btrfs subvolume list /

ID 257 gen 111534 top level 5 path root

ID 271 gen 111525 top level 257 path srv/chroot/unstable-amd64-sbuild

ID 275 gen 27873 top level 257 path srv/chroot/bullseye-amd64-sbuild

ID 276 gen 27873 top level 257 path srv/chroot/buster-amd64-sbuild

ID 343 gen 111533 top level 257 path srv/chroot/snapshots/unstable-amd64-sbuild-328059a0-e74b-4d9f-be70-24b59ccba121

I was a little confused about whether I d got something wrong because the snapshot top level is listed as

257 rather than

271, but digging further with

btrfs subvolume show on the 2 mounted directories correctly showed the snapshot had a parent equal to the chroot, not

/.

As a final step I ran

jdupes via

jdupes -1Br / to deduplicate things across the filesystem. It didn t end up providing a significant saving unfortunately - I guess there s a reasonable amount of change between Debian releases - but I think tried it on my desktop, which tends to have a large number of similar source trees checked out. There I managed to save about 5% on

/home, which didn t seem too shabby.

The sbuild setup has been in place for a couple of months now, and I ve run quite a few builds on it while preparing for the freeze. So I m fairly confident in the stability of the setup and my next move is to transition my local house server over to btrfs for its containers (which all run under

systemd-nspawn). Those are generally running a Debian stable base so there should be a decent amount of commonality for deduping.

I m not saying I m yet at the point where I ll default to btrfs on new installs, but I m definitely looking at it for situations where I think I can get benefits from deduplication, or being able to divide up disk space without hard partitioning space.

(And, just to answer the worry I had when I started, I ve got nowhere near

ENOSPC problems, but I believe they re handled much more gracefully these days. And my experience of ZFS when it got above 90% utilization was far from ideal too.)

Having setup recursive DNS it was time to actually sort out a backup internet connection. I live in a Virgin Media area, but I still haven t forgiven them for my terrible Virgin experiences when moving here. Plus it involves a bigger contractual commitment. There are no altnets locally (though I m watching youfibre who have already rolled out in a few Belfast exchanges), so I decided to go for a 5G modem. That gives some flexibility, and is a bit easier to get up and running.

I started by purchasing a ZTE MC7010. This had the advantage of being reasonably cheap off eBay, not having any wifi functionality I would just have to disable (it s going to plug it into the same router the FTTP connection terminates on), being outdoor mountable should I decide to go that way, and, finally, being powered via PoE.

For now this device sits on the window sill in my study, which is at the top of the house. I printed a table stand for it which mostly does the job (though not as well with a normal, rather than flat, network cable). The router lives downstairs, so I ve extended a dedicated VLAN through the study switch, down to the core switch and out to the router. The PoE study switch can only do GigE, not 2.5Gb/s, but at present that s far from the limiting factor on the speed of the connection.

The device is 3 branded, and, as it happens, I ve ended up with a 3 SIM in it. Up until recently my personal phone was with them, but they ve kicked me off Go Roam, so I ve moved. Going with 3 for the backup connection provides some slight extra measure of resiliency; we now have devices on all 4 major UK networks in the house. The SIM is a preloaded data only SIM good for a year; I don t expect to use all of the data allowance, but I didn t want to have to worry about unexpected excess charges.

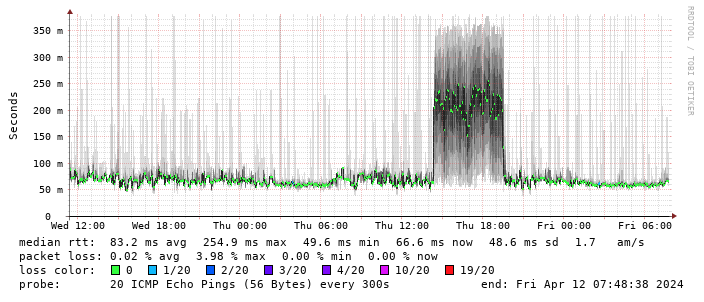

Performance turns out to be disappointing; I end up locking the device to 4G as the 5G signal is marginal - leaving it enabled results in constantly switching between 4G + 5G and a significant extra latency. The smokeping graph below shows a brief period where I removed the 4G lock and allowed 5G:

Having setup recursive DNS it was time to actually sort out a backup internet connection. I live in a Virgin Media area, but I still haven t forgiven them for my terrible Virgin experiences when moving here. Plus it involves a bigger contractual commitment. There are no altnets locally (though I m watching youfibre who have already rolled out in a few Belfast exchanges), so I decided to go for a 5G modem. That gives some flexibility, and is a bit easier to get up and running.

I started by purchasing a ZTE MC7010. This had the advantage of being reasonably cheap off eBay, not having any wifi functionality I would just have to disable (it s going to plug it into the same router the FTTP connection terminates on), being outdoor mountable should I decide to go that way, and, finally, being powered via PoE.

For now this device sits on the window sill in my study, which is at the top of the house. I printed a table stand for it which mostly does the job (though not as well with a normal, rather than flat, network cable). The router lives downstairs, so I ve extended a dedicated VLAN through the study switch, down to the core switch and out to the router. The PoE study switch can only do GigE, not 2.5Gb/s, but at present that s far from the limiting factor on the speed of the connection.

The device is 3 branded, and, as it happens, I ve ended up with a 3 SIM in it. Up until recently my personal phone was with them, but they ve kicked me off Go Roam, so I ve moved. Going with 3 for the backup connection provides some slight extra measure of resiliency; we now have devices on all 4 major UK networks in the house. The SIM is a preloaded data only SIM good for a year; I don t expect to use all of the data allowance, but I didn t want to have to worry about unexpected excess charges.

Performance turns out to be disappointing; I end up locking the device to 4G as the 5G signal is marginal - leaving it enabled results in constantly switching between 4G + 5G and a significant extra latency. The smokeping graph below shows a brief period where I removed the 4G lock and allowed 5G:

(There s a handy zte.js script to allow doing this from the device web interface.)

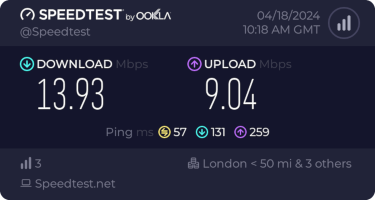

I get about 10Mb/s sustained downloads out of it. EE/Vodafone did not lead to significantly better results, so for now I m accepting it is what it is. I tried relocating the device to another part of the house (a little tricky while still providing switch-based PoE, but I have an injector), without much improvement. Equally pinning the 4G to certain bands provided a short term improvement (I got up to 40-50Mb/s sustained), but not reliably so.

(There s a handy zte.js script to allow doing this from the device web interface.)

I get about 10Mb/s sustained downloads out of it. EE/Vodafone did not lead to significantly better results, so for now I m accepting it is what it is. I tried relocating the device to another part of the house (a little tricky while still providing switch-based PoE, but I have an injector), without much improvement. Equally pinning the 4G to certain bands provided a short term improvement (I got up to 40-50Mb/s sustained), but not reliably so.

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

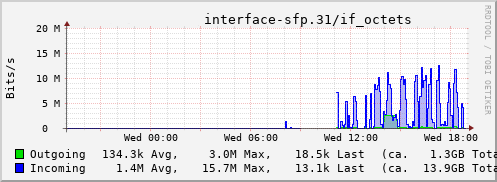

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

This is disappointing, but if it turns out to be a problem I can look at mounting it externally. I also assume as 5G is gradually rolled out further things will naturally improve, but that might be wishful thinking on my part.

Rather than wait until my main link had a problem I decided to try a day working over the 5G connection. I spend a lot of my time either in browser based apps or accessing remote systems via SSH, so I m reasonably sensitive to a jittery or otherwise flaky connection. I picked a day that I did not have any meetings planned, but as it happened I ended up with an adhoc video call arranged. I m pleased to say that it all worked just fine; definitely noticeable as slower than the FTTP connection (to be expected), but all workable and even the video call was fine (at least from my end). Looking at the traffic graph shows the expected ~ 10Mb/s peak (actually a little higher, and looking at the FTTP stats for previous days not out of keeping with what we see there), and you can just about see the ~ 3Mb/s symmetric use by the video call at 2pm:

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

The test run also helped iron out the fact that the content filter was still enabled on the SIM, but that was easily resolved.

Up next, vaguely automatic failover.

(I wrote this up for an internal work post, but I figure it s worth sharing more publicly too.)

I spent last week at

(I wrote this up for an internal work post, but I figure it s worth sharing more publicly too.)

I spent last week at  As is traditional for the UK August Bank Holiday weekend I made my way to Cambridge for the Debian UK BBQ. As was pointed out we ve been doing this for more than 20 years now, and it s always good to catch up with old friends and meet new folk.

Thanks to

As is traditional for the UK August Bank Holiday weekend I made my way to Cambridge for the Debian UK BBQ. As was pointed out we ve been doing this for more than 20 years now, and it s always good to catch up with old friends and meet new folk.

Thanks to  Back in September last year I chose to back the

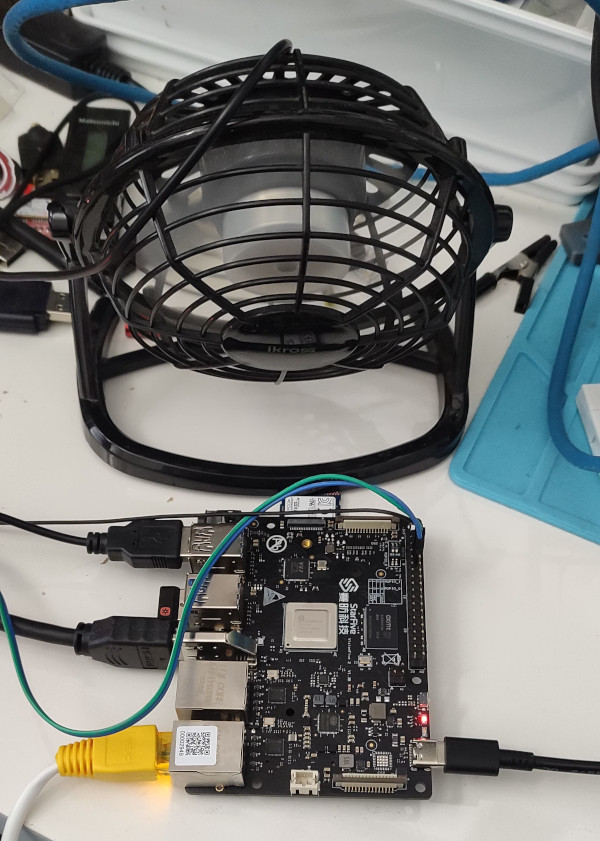

Back in September last year I chose to back the  I haven t seen any actual stability problems, but I wouldn t want to run for any length of time like that. I ve ordered a heatsink and also realised that the board supports a Raspberry Pi style PoE Hat , so I ve got one of those that includes a fan ordered (I am a complete convert to PoE especially for small systems like this).

With the desk fan setup I ve been able to run the board for extended periods under load (I did a full recompile of the Debian 6.1.12-1 kernel package and it took about 10 hours). The M.2 slot is unfortunately only a single PCIe v2 lane, and my testing topped out at about 180MB/s. IIRC that is about half what the slot should be capable of, and less than a 10th of what the SSD can do. Ethernet testing with

I haven t seen any actual stability problems, but I wouldn t want to run for any length of time like that. I ve ordered a heatsink and also realised that the board supports a Raspberry Pi style PoE Hat , so I ve got one of those that includes a fan ordered (I am a complete convert to PoE especially for small systems like this).

With the desk fan setup I ve been able to run the board for extended periods under load (I did a full recompile of the Debian 6.1.12-1 kernel package and it took about 10 hours). The M.2 slot is unfortunately only a single PCIe v2 lane, and my testing topped out at about 180MB/s. IIRC that is about half what the slot should be capable of, and less than a 10th of what the SSD can do. Ethernet testing with