live demo

As far as I know, this is the first Haskell program compiled to WebAssembly (WASM) with mainline ghc and using the browser DOM.

ghc's WASM backend is solid, but it only provides very low-level FFI bindings when used in the browser: Ints and pointers to WASM memory. (See here for details and for instructions on getting the ghc WASM toolchain I used.)

I imagine that in the future, WASM code will interface with the DOM by using a WASI "world" that defines a complete API (and browsers won't include Javascript engines anymore). But currently, WASM can't do anything in a browser without calling back to Javascript.

For this project, I needed 63 lines of (reusable) Javascript (here), plus another 18 to bootstrap running the WASM program (here). (Also browser_wasi_shim.)

But let's start with the Haskell code. A simple program to pop up an alert in the browser looks like this:

    {-# LANGUAGE OverloadedStrings #-}

    import Wasmjsbridge

    foreign export ccall hello :: IO ()

    hello :: IO ()
    hello = do
        alert <- get_js_object_method "window" "alert"
        call_js_function_ByteString_Void alert "hello, world!"

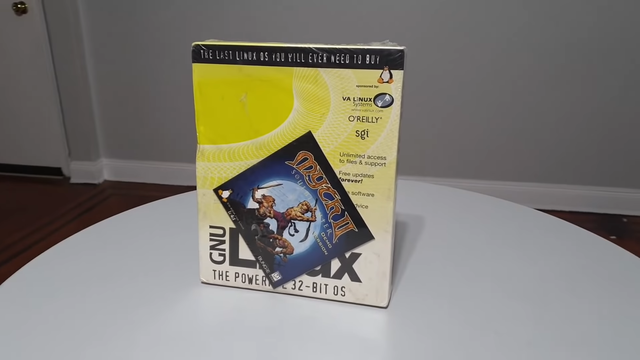

A larger program that draws on the canvas and generated the image above is here.

The Haskell side of the FFI interface is a bunch of fairly mechanical functions like this:

    foreign import ccall unsafe "call_js_function_string_void"
        _call_js_function_string_void :: Int -> CString -> Int -> IO ()

    call_js_function_ByteString_Void :: JSFunction -> B.ByteString -> IO ()
    call_js_function_ByteString_Void (JSFunction n) b =
        BU.unsafeUseAsCStringLen b $ \(buf, len) ->
            _call_js_function_string_void n buf len

Many more would need to be added, or generated, to continue down this path to complete coverage of all data types. All in all it's 64 lines of code so far (here).

A C shim is also needed. It imports from WASI modules and provides C functions that are used by the Haskell FFI. It looks like this:

    void _call_js_function_string_void(uint32_t fn, uint8_t *buf, uint32_t len) __attribute__((
        __import_module__("wasmjsbridge"),
        __import_name__("call_js_function_string_void")
    ));

    void call_js_function_string_void(uint32_t fn, uint8_t *buf, uint32_t len) {
        _call_js_function_string_void(fn, buf, len);
    }

Another 64 lines of code for that (here). I found this pattern in Joachim Breitner's haskell-on-fastly and copied it rather blindly.
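To make the import side of that shim concrete, here is a minimal sketch of how a WASM import named `call_js_function_string_void` in module `wasmjsbridge` gets satisfied from Javascript. The tiny hand-assembled module, its exported `run` function, and the recording stub are all made up for illustration; the real program is built by ghc and also needs the WASI imports that browser_wasi_shim supplies.

```javascript
// Helpers for hand-assembling a tiny WASM binary (every size fits in one byte).
const str = (s) => [s.length, ...Array.from(s, (c) => c.charCodeAt(0))];
const section = (id, payload) => [id, payload.length, ...payload];
const I32 = 0x7f;

const bytes = new Uint8Array([
    0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic number, version 1
    ...section(1, [2,                                // type section: two types
        0x60, 3, I32, I32, I32, 0,                   //   type 0: (i32, i32, i32) -> ()
        0x60, 0, 0]),                                //   type 1: () -> ()
    ...section(2, [1,                                // import section: one import
        ...str("wasmjsbridge"),                      //   module name, as in the C shim
        ...str("call_js_function_string_void"),      //   import name, as in the C shim
        0x00, 0]),                                   //   a function of type 0
    ...section(3, [1, 1]),                           // one local function, of type 1
    ...section(7, [1, ...str("run"), 0x00, 1]),      // export function 1 as "run" (hypothetical)
    ...section(10, [1, 10,                           // code section: one body, 10 bytes
        0,                                           //   no locals
        0x41, 1, 0x41, 16, 0x41, 13,                 //   i32.const 1, 16, 13
        0x10, 0,                                     //   call function 0 (the import)
        0x0b]),                                      //   end
]);

// The Javascript side: an object keyed by module name, then import name.
// This stub only records its arguments instead of calling a real function.
const calls = [];
const imports = {
    wasmjsbridge: {
        call_js_function_string_void: (fn, buf, len) => { calls.push([fn, buf, len]); },
    },
};

const instance = new WebAssembly.Instance(new WebAssembly.Module(bytes), imports);
instance.exports.run(); // WASM calls back into Javascript with (1, 16, 13)
```

The three numbers stand in for a function id, a pointer into WASM memory, and a length, which is all that crosses the boundary.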

Finally, the Javascript that gets run for that is:

    call_js_function_string_void(n, b, sz) {
        const fn = globalThis.wasmjsbridge_functionmap.get(n);
        const buffer = globalThis.wasmjsbridge_exports.memory.buffer;
        fn(decoder.decode(new Uint8Array(buffer, b, sz)));
    },

Notice that this gets an identifier representing the Javascript function to run, which might be any method of any object. It looks it up in a map and runs it. And the ByteString that got passed from Haskell has to be decoded to a Javascript string.
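The lookup and decode steps can be tried outside the browser. This is a sketch only: it assumes a standalone `WebAssembly.Memory` in place of the program's exported memory, local variables in place of the `globalThis.wasmjsbridge_*` state, and an arbitrary offset of 16 for where the Haskell side would have put the string.

```javascript
// Stand-ins for state the real bridge keeps on globalThis (assumed setup).
const decoder = new TextDecoder("utf-8");
const encoder = new TextEncoder();
const memory = new WebAssembly.Memory({ initial: 1 }); // one 64 KiB page
const functionmap = new Map();

// Same logic as the bridge function, reading from the local stand-ins.
function call_js_function_string_void(n, b, sz) {
    const fn = functionmap.get(n);
    // View sz bytes of linear memory starting at pointer b, decode as UTF-8.
    fn(decoder.decode(new Uint8Array(memory.buffer, b, sz)));
}

// Play the part of the Haskell side: register a function under id 1,
// write UTF-8 bytes into linear memory, then pass (id, pointer, length).
const seen = [];
functionmap.set(1, (s) => { seen.push(s); });
const bytes = encoder.encode("hello, world!");
const ptr = 16; // arbitrary offset chosen for this demo
new Uint8Array(memory.buffer).set(bytes, ptr);

call_js_function_string_void(1, ptr, bytes.length);
// seen now holds the single decoded string "hello, world!"
```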

In the Haskell program above, the function is window.alert. Why not pass a ByteString with that through the FFI? Well, you could. But then it would have to eval it. That would make running WASM in the browser be evaling Javascript every time it calls a function. That does not seem like a good idea if the goal is speed. GHC's javascript backend does use Javascript FFI snippets like that, but there they get pasted into the generated Javascript hairball, so no eval is needed.

So my code has things like get_js_object_method that look up things like Javascript functions and generate identifiers. It also has this:

    call_js_function_ByteString_Object :: JSFunction -> B.ByteString -> IO JSObject

Which can be used to call things like document.getElementById that return a Javascript object:

    getElementById <- get_js_object_method (JSObjectName "document") "getElementById"
    canvas <- call_js_function_ByteString_Object getElementById "myCanvas"
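Since only Ints can cross the FFI, a returned Javascript object has to be represented by some kind of handle. The sketch below shows one way that could work, assuming objects are tracked in a map much like functions are. The names `objectmap`, `storeObject` and `fakeDocument` are made up for the example, and the string argument is passed directly rather than decoded from WASM memory.

```javascript
// Hypothetical object table: integer handle -> Javascript object.
const objectmap = new Map();
let objectCounter = 0;

// Store an object and hand back an integer that can cross the FFI.
function storeObject(obj) {
    objectCounter += 1;
    objectmap.set(objectCounter, obj);
    return objectCounter;
}

// A call variant that returns an object handle instead of nothing.
function call_js_function_string_object(fn, str) {
    return storeObject(fn(str));
}

// Stand-in for document.getElementById so this runs without a browser.
const fakeDocument = {
    getElementById: (id) => ({ id: id, tagName: "CANVAS" }),
};

const handle = call_js_function_string_object(
    (id) => fakeDocument.getElementById(id),
    "myCanvas"
);
const canvas = objectmap.get(handle); // what later calls would look up
```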

Here's the Javascript called by get_js_object_method. It generates a Javascript function that will be used to call the desired method of the object, allocates an identifier for it, and returns that to the caller.

    get_js_objectname_method(ob, osz, nb, nsz) {
        const buffer = globalThis.wasmjsbridge_exports.memory.buffer;
        const objname = decoder.decode(new Uint8Array(buffer, ob, osz));
        const funcname = decoder.decode(new Uint8Array(buffer, nb, nsz));
        const func = function (...args) { return globalThis[objname][funcname](...args) };
        const n = globalThis.wasmjsbridge_counter + 1;
        globalThis.wasmjsbridge_counter = n;
        globalThis.wasmjsbridge_functionmap.set(n, func);
        return n;
    },
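Here is a self-contained sketch of that registration step followed by a call through the returned identifier. It assumes a standalone `WebAssembly.Memory` and local variables in place of the `globalThis.wasmjsbridge_*` state. The `put` helper and the `demoObject` global are invented for the example.

```javascript
const decoder = new TextDecoder("utf-8");
const encoder = new TextEncoder();
const memory = new WebAssembly.Memory({ initial: 1 }); // stand-in for the exported memory
const functionmap = new Map();
let counter = 0;

// Same logic as the bridge function, using the local stand-ins.
function get_js_objectname_method(ob, osz, nb, nsz) {
    const objname = decoder.decode(new Uint8Array(memory.buffer, ob, osz));
    const funcname = decoder.decode(new Uint8Array(memory.buffer, nb, nsz));
    // The object and method are resolved when func is called, not here.
    const func = function (...args) { return globalThis[objname][funcname](...args); };
    counter += 1;
    functionmap.set(counter, func);
    return counter;
}

// Hypothetical helper: write a string into memory at ptr, return its byte length.
function put(s, ptr) {
    const bytes = encoder.encode(s);
    new Uint8Array(memory.buffer).set(bytes, ptr);
    return bytes.length;
}

// Hypothetical global object to look a method up on.
globalThis.demoObject = { greet: (name) => "hi " + name };

const osz = put("demoObject", 0);
const nsz = put("greet", 32);
const id = get_js_objectname_method(0, osz, 32, nsz);
const result = functionmap.get(id)("wasm");
```

Because the generated function calls `globalThis[objname][funcname](...)` as a member expression, the method runs with the object as `this`, which DOM methods such as `document.getElementById` rely on.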

This does mean that every time a Javascript function id is looked up, some more memory is used on the Javascript side. For more serious uses of this, something would need to be done about that. Lots of other stuff like object value getting and setting is also not implemented, there's no support yet for callbacks, and so on. Still, I'm happy with where this has gotten to after 12 hours of work on it.
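One possible way to limit that growth, sketched here as an assumption rather than anything the current code does, is to cache identifiers per object and method name and to offer a way to release them. `idCache`, `lookupMethodId` and `releaseMethodId` are hypothetical names, and the memory decoding is left out to keep the sketch short.

```javascript
const functionmap = new Map(); // id -> callable, as in the bridge
const idCache = new Map();     // hypothetical: (objname, funcname) key -> id
let counter = 0;

// Return the existing id for a method if there is one, else allocate one.
function lookupMethodId(objname, funcname) {
    const key = JSON.stringify([objname, funcname]); // unambiguous pair key
    if (idCache.has(key)) return idCache.get(key);
    counter += 1;
    functionmap.set(counter, (...args) => globalThis[objname][funcname](...args));
    idCache.set(key, counter);
    return counter;
}

// Hypothetical release hook for when the WASM side is done with an id.
function releaseMethodId(id) {
    functionmap.delete(id);
    for (const [key, cached] of idCache) {
        if (cached === id) idCache.delete(key);
    }
}

const a = lookupMethodId("console", "log");
const b = lookupMethodId("console", "log");  // reuses the id held in a
const c = lookupMethodId("console", "warn"); // a different method, new id
releaseMethodId(c);                          // map shrinks back to one entry
```

Caching covers repeated lookups of the same method. Releasing would need cooperation from the Haskell side, for example a finalizer, which is beyond this sketch.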

I might release the reusable parts of this as a Haskell library, although it seems likely that ongoing development of ghc will make it obsolete. In the meantime, clone the git repo to have a play with it.

This blog post was sponsored by unqueued on Patreon.

Was the ssh backdoor the only goal that "Jia Tan" was pursuing with their multi-year operation against xz?

I doubt it, and if not, then every fix so far has been incomplete, because everything is still running code written by that entity.

If we assume that they had a multilayered plan, that their every action was calculated and malicious, then we have to think about the full threat surface of using xz. This quickly gets into nightmare scenarios of the "trusting trust" variety.

What if xz contains a hidden buffer overflow or other vulnerability that can be exploited by the xz file it's decompressing? This would let the attacker target other packages, as needed.

Let's say they want to target gcc. Well, gcc contains a lot of documentation, which includes png images. So they spend a while getting accepted as a documentation contributor on that project, and get a specially constructed png file added to it. The file has additional binary data appended that exploits the buffer overflow and instructs xz to modify the source code that comes later when decompressing
